This is the PyTorch implementation of BasisFormer from the NeurIPS 2023 paper: BasisFormer: Attention-based Time Series Forecasting with Learnable and Interpretable Basis.

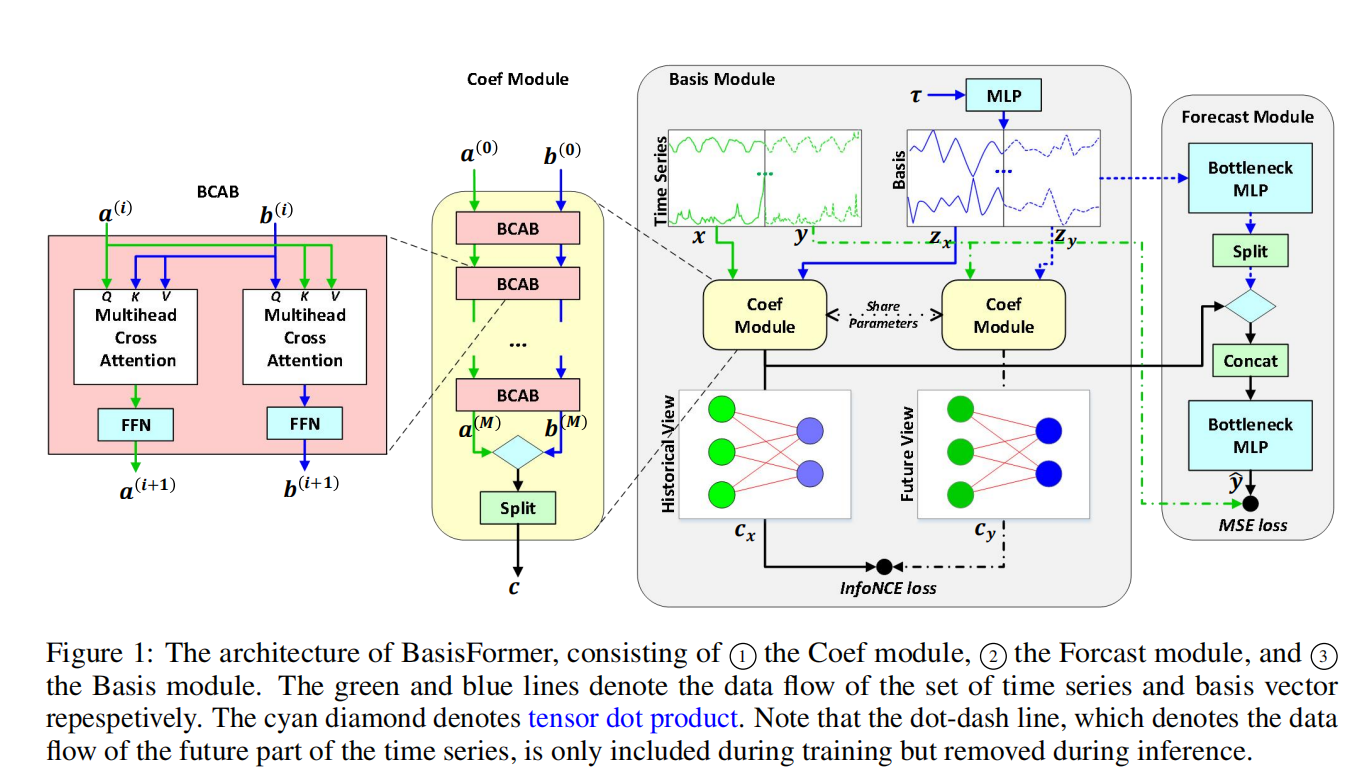

The architecture of our model (BasisFormer) is shown below:

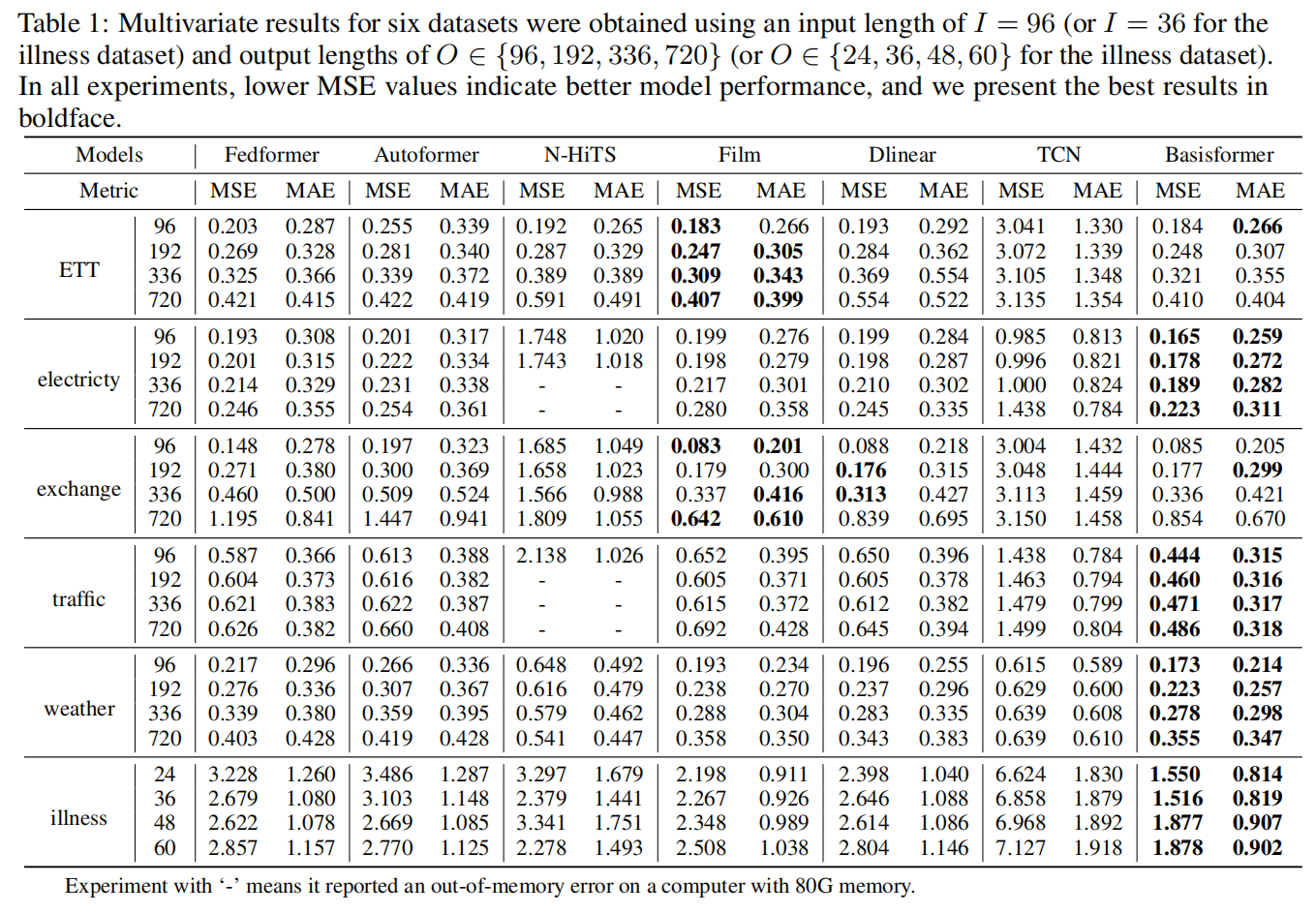

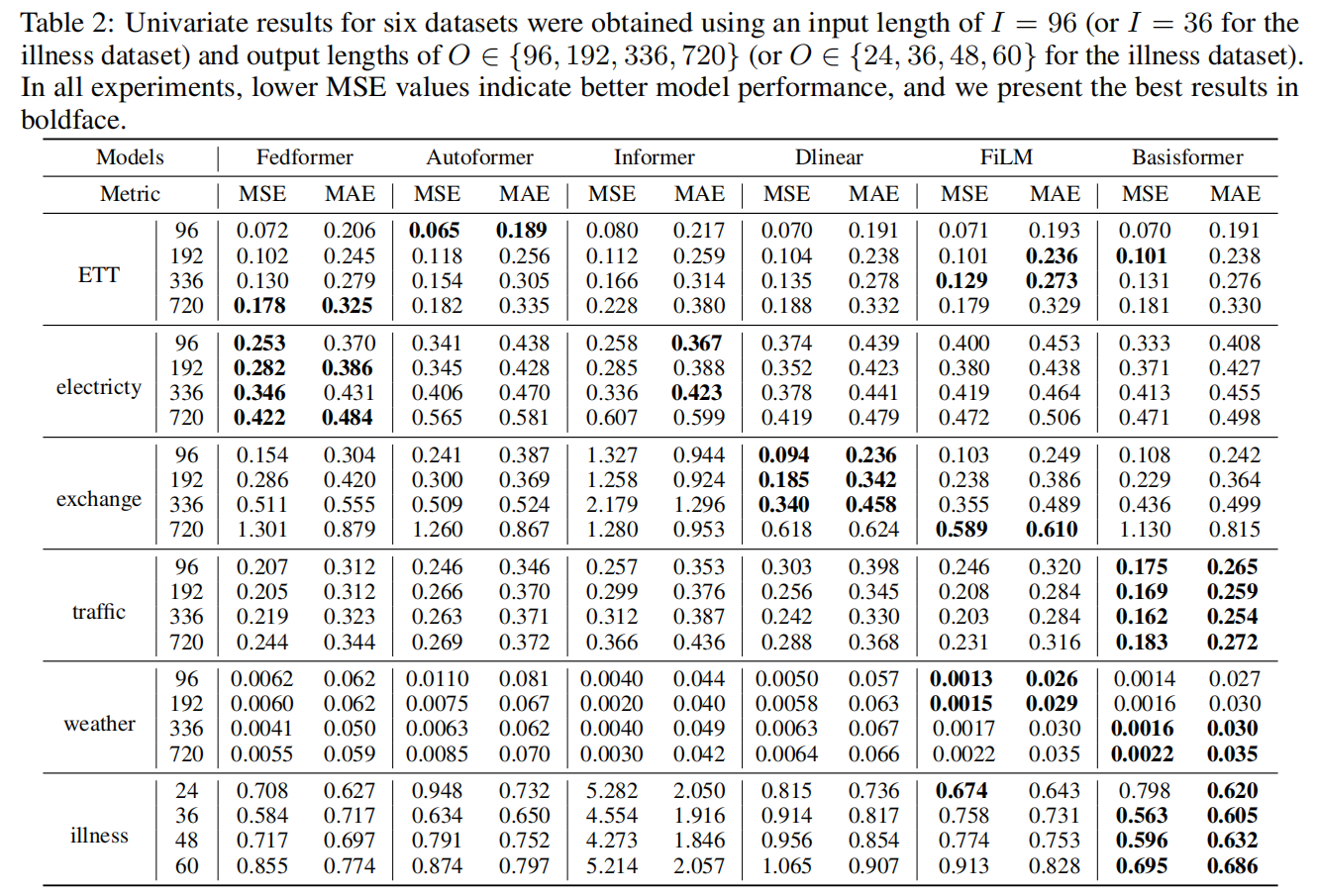

Through extensive experiments on six datasets, we demonstrate that BasisFormer outperforms previous state-of-the-art methods by 11.04% and 15.78% on univariate and multivariate forecasting tasks, respectively.

```shell
conda create -n basisformer -y python=3.8
conda activate basisformer
```

Install the required packages:

```shell
pip install -r requirements.txt
```

We follow the same setting as previous work. The datasets for all six benchmarks can be obtained from [Autoformer](https://github.com/thuml/Autoformer). Place them in the `all_six_datasets` folder of the project. The file tree is as follows:

```
Basisformer\all_six_datasets
│
├─electricity
│
├─ETT-small
│
├─exchange_rate
│
├─illness
│
├─traffic
│
└─weather
```
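Before running the scripts, it can help to confirm that all six folders are in place. The sketch below is a hypothetical helper (`missing_datasets` and `EXPECTED_DATASETS` are not part of the repository). It assumes it is run from the project root and only checks that the folder names from the tree above exist, not what files they contain.

```python
from pathlib import Path

# Folder names copied from the dataset tree above.
# Assumption: the script is run from the project root, so the default root is relative.
EXPECTED_DATASETS = (
    "electricity",
    "ETT-small",
    "exchange_rate",
    "illness",
    "traffic",
    "weather",
)


def missing_datasets(root="all_six_datasets"):
    """Hypothetical helper: return the expected dataset folders absent under `root`."""
    root_path = Path(root)
    return [name for name in EXPECTED_DATASETS if not (root_path / name).is_dir()]


if __name__ == "__main__":
    missing = missing_datasets()
    if missing:
        print("Missing dataset folders:", ", ".join(missing))
    else:
        print("All six dataset folders found.")
```

An empty list means every folder name matches; the check does not validate the CSV files inside each folder.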

The length of the historical input sequence is maintained at

```shell
sh script/M.sh
sh script/S.sh
```

Note: to run on multiple GPUs in parallel, replace `script/M.sh` and `script/S.sh` with `script/M_parallel.sh` and `script/S_parallel.sh`, respectively.
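In this benchmark setting, the model reads a historical input sequence of fixed length and forecasts the steps that follow it. As a rough illustration of that setup, the sketch below cuts a series into input/target windows with a stride of one step. `make_windows`, `seq_len`, and `pred_len` are hypothetical names chosen here and are not necessarily how the repository's data loader works; the lengths in the usage example are toy values, not the ones used in the paper.

```python
import numpy as np


def make_windows(series, seq_len, pred_len):
    """Hypothetical helper: split a (time, features) array into (input, target) pairs.

    Each input holds `seq_len` consecutive historical steps and the matching
    target holds the next `pred_len` steps; windows advance by one step.
    """
    series = np.asarray(series)
    n_windows = len(series) - seq_len - pred_len + 1
    if n_windows <= 0:
        raise ValueError("series is shorter than seq_len + pred_len")
    inputs = np.stack([series[i:i + seq_len] for i in range(n_windows)])
    targets = np.stack(
        [series[i + seq_len:i + seq_len + pred_len] for i in range(n_windows)]
    )
    return inputs, targets


# Toy usage: 10 time steps of a single variable.
x, y = make_windows(np.arange(10).reshape(10, 1), seq_len=4, pred_len=2)
print(x.shape, y.shape)  # (5, 4, 1) (5, 2, 1)
```

The first window pairs steps 0 to 3 with targets 4 and 5; the last pairs steps 4 to 7 with targets 8 and 9.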

Training logs and weight files can be obtained from Baidu Netdisk (extraction code: jqsr) or Google Drive.

If you find this repo useful, please cite our paper.

```bibtex
@inproceedings{ni2023basisformer,
  title={{Basisformer}: Attention-based Time Series Forecasting with Learnable and Interpretable Basis},
  author={Ni, Zelin and Yu, Hang and Liu, Shizhan and Li, Jianguo and Lin, Weiyao},
  booktitle={Advances in Neural Information Processing Systems},
  year={2023}
}
```

If you have any questions, please open an issue on GitHub.

We greatly appreciate the following GitHub repositories for their valuable code bases and datasets:

https://github.com/MAZiqing/FEDformer

https://github.com/thuml/Autoformer

https://github.com/zhouhaoyi/Informer2020