CAPS: Cypher for Apache Spark

CAPS extends Apache Spark™ with Cypher, the industry's most widely used property graph query language, defined and maintained by the openCypher project. It allows data from many sources to be integrated and supports querying multiple graphs. It enables you to use your Spark cluster to run analytical graph queries. Queries can also return graphs, which lets you build processing pipelines.

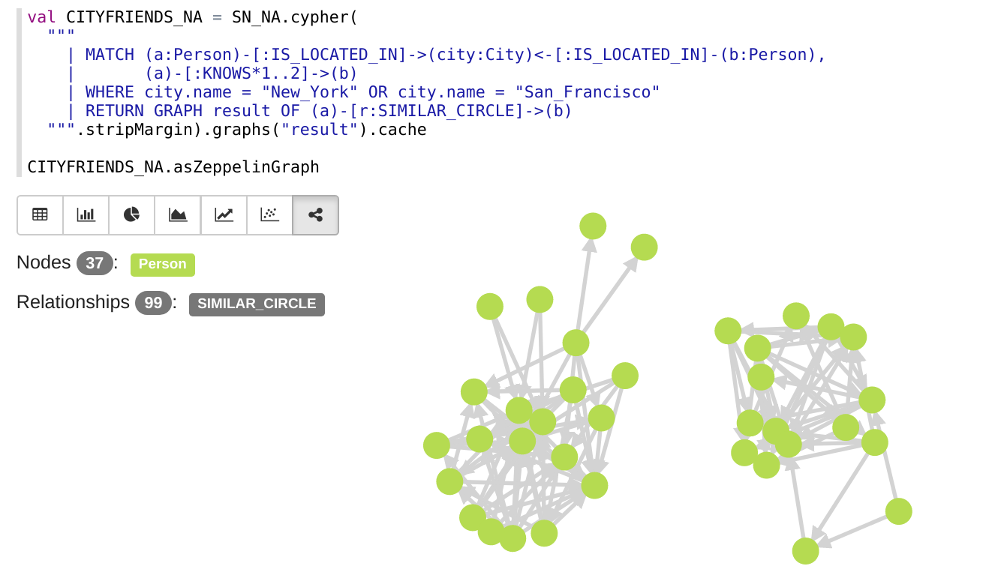

Below you see a screenshot of running a Cypher query with CAPS running in a Zeppelin notebook:

Intended audience

CAPS allows you to develop complex processing pipelines orchestrated by a powerful and expressive high-level language. In addition to developers and big data integration specialists, CAPS is also of practical use to data scientists, offering tools that allow disparate data sources to be integrated into a single graph. From this graph, queries can extract subgraphs of interest into new result graphs, which can be conveniently exported for further processing.

In addition to traditional analytical techniques, the graph data model offers the opportunity to use Cypher and Neo4j graph algorithms to derive deeper insights from your data.

Current status: Alpha

The project is currently in an alpha stage, which means that the code and the functionality are still changing. We have not yet tested the system extensively with large data sources or in many scenarios. We invite you to try it and welcome any feedback.

CAPS Features

CAPS is built on top of the Spark DataFrames API and uses features such as the Catalyst optimizer. The underlying Spark representations remain accessible and can be converted into forms that integrate with other Spark libraries.

CAPS supports a subset of Cypher and is the first implementation of multiple graphs and composable graph queries.
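To illustrate what query compositionality means, here is a minimal plain-Scala sketch that models a graph query as a function from graph to graph, so that the result graph of one query becomes the input of the next. The types SimpleNode, SimpleRel and SimpleGraph and the two query functions are hypothetical and unrelated to the CAPS API; they only demonstrate the idea of chaining graph-returning queries.

```scala
// Hypothetical sketch of query composition; NOT the CAPS API.
final case class SimpleNode(id: Long, label: String, name: String)
final case class SimpleRel(source: Long, target: Long, relType: String)
final case class SimpleGraph(nodes: Set[SimpleNode], rels: Set[SimpleRel])

object Composition {
  // A query that takes a graph and returns a graph
  type GraphQuery = SimpleGraph => SimpleGraph

  // Keep nodes with the given label and the relationships between them
  def nodesWithLabel(label: String): GraphQuery = g => {
    val kept = g.nodes.filter(_.label == label)
    val ids = kept.map(_.id)
    SimpleGraph(kept, g.rels.filter(r => ids(r.source) && ids(r.target)))
  }

  // Keep relationships of the given type and the nodes they connect
  def relsOfType(relType: String): GraphQuery = g => {
    val kept = g.rels.filter(_.relType == relType)
    val ids = kept.flatMap(r => Set(r.source, r.target))
    SimpleGraph(g.nodes.filter(n => ids(n.id)), kept)
  }

  val input = SimpleGraph(
    Set(SimpleNode(0, "Person", "Alice"), SimpleNode(1, "Person", "Bob"), SimpleNode(2, "City", "London")),
    Set(SimpleRel(0, 1, "FRIEND"), SimpleRel(0, 2, "LIVES_IN"))
  )

  // The result graph of the first query is the input of the second
  val pipeline: GraphQuery = nodesWithLabel("Person").andThen(relsOfType("FRIEND"))
  val result: SimpleGraph = pipeline(input)
}
```

Because each step returns a graph rather than a table, further steps can be appended to the pipeline without changing the earlier ones.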

CAPS currently supports importing graphs both from Neo4j and from a custom CSV format stored in HDFS. It also has a data source API that allows you to plug in custom importers for other external sources.
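The sketch below shows the general shape of such a plug-in design in plain Scala: a common interface, one importer that parses edges from CSV-style lines, and a registry that resolves a graph by source name and graph name. GraphSource, CsvEdgeSource, SourceRegistry and EdgeGraph are hypothetical names chosen for this illustration; they are not the CAPS data source API, which defines its own interfaces and works with Spark.

```scala
// Hypothetical plug-in design; NOT the CAPS data source API.
final case class EdgeGraph(nodeIds: Set[Long], edges: Set[(Long, Long)])

// Common interface every importer implements
trait GraphSource {
  def name: String
  def load(graphName: String): Option[EdgeGraph]
}

// Importer for in-memory "files" whose lines have the form "source,target"
final class CsvEdgeSource(files: Map[String, Seq[String]]) extends GraphSource {
  val name: String = "csv"

  def load(graphName: String): Option[EdgeGraph] =
    files.get(graphName).map { lines =>
      val edges = lines.map { line =>
        val parts = line.split(",").map(_.trim.toLong)
        (parts(0), parts(1))
      }.toSet
      // Node IDs are derived from the edge endpoints
      EdgeGraph(edges.flatMap { case (s, t) => Set(s, t) }, edges)
    }
}

// Resolves graphs by source name, so callers never depend on a concrete importer
final case class SourceRegistry(sources: Map[String, GraphSource] = Map.empty) {
  def withSource(source: GraphSource): SourceRegistry =
    copy(sources = sources + (source.name -> source))

  def load(sourceName: String, graphName: String): Option[EdgeGraph] =
    sources.get(sourceName).flatMap(_.load(graphName))
}

object SourceDemo {
  val registry: SourceRegistry =
    SourceRegistry().withSource(new CsvEdgeSource(Map("friends" -> Seq("0,1", "1,2"))))
}
```

Adding support for another storage system in this design means implementing GraphSource once and registering it; code that loads graphs stays unchanged.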

CAPS Roadmap

CAPS is under rapid development and we are planning to:

- Support more Cypher features

- Make it easier to use by offering it as a Spark package and by integrating it into a notebook

- Provide additional integration APIs for interacting with existing Spark libraries such as SparkSQL and MLlib

Get started with CAPS

CAPS is currently easiest to use with Scala. Below we explain how you can import a simple graph and run a Cypher query on it.

Building CAPS

CAPS is built using Maven:

```
mvn clean install
```

Hello CAPS

Cypher is based on the property graph model, comprising labelled nodes and typed relationships, where a relationship either connects two nodes or forms a self-loop on a single node.

Both nodes and relationships are uniquely identified by an ID of type Long, and contain a set of properties.
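As a hedged illustration of this model, independent of CAPS, the sketch below gives nodes a set of labels, gives each relationship exactly one type plus explicit start and end node IDs, and stores properties as key/value maps. PgNode, PgRelationship and PgGraph are hypothetical names; isWellFormed checks the constraints described above (unique node IDs, unique relationship IDs, relationships only between existing nodes), and isSelfLoop identifies the single-node case.

```scala
// Hypothetical, minimal model of a property graph; these are NOT CAPS types.
final case class PgNode(id: Long, labels: Set[String], properties: Map[String, Any])

final case class PgRelationship(
    id: Long,
    source: Long,    // ID of the start node
    target: Long,    // ID of the end node
    relType: String, // exactly one type per relationship
    properties: Map[String, Any]
) {
  // A self-loop starts and ends on the same node
  def isSelfLoop: Boolean = source == target
}

final case class PgGraph(nodes: Seq[PgNode], relationships: Seq[PgRelationship]) {
  private val nodeIds: Set[Long] = nodes.map(_.id).toSet

  // Node IDs and relationship IDs must each be unique, and every
  // relationship must connect nodes that exist in the graph
  def isWellFormed: Boolean =
    nodeIds.size == nodes.size &&
      relationships.map(_.id).distinct.size == relationships.size &&
      relationships.forall(r => nodeIds(r.source) && nodeIds(r.target))
}

object PropertyGraphExample {
  val alice = PgNode(0L, Set("Person"), Map("name" -> "Alice"))
  val bob = PgNode(1L, Set("Person"), Map("name" -> "Bob"))

  val friendship = PgRelationship(0L, alice.id, bob.id, "FRIEND", Map("since" -> "23/01/1987"))
  val likesSelf = PgRelationship(1L, bob.id, bob.id, "LIKES", Map.empty)

  val graph = PgGraph(Seq(alice, bob), Seq(friendship, likesSelf))
}
```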

The following example shows how to convert a friendship graph represented as Scala case classes to a CAPSGraph representation.

The CAPSGraph representation is constructed from node and relationship scans.

These scans tell CAPSGraph how to read the graph structure from a DataFrame.
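To make the idea of a scan more concrete, the following plain-Scala analogy treats a DataFrame as a sequence of rows keyed by column name, and a scan as a description of which columns hold the ID, the relationship endpoints, and the properties. NodeScanSpec, RelScanSpec and the Scans object are hypothetical names for this sketch only; the actual CAPS scan builders have a different API and operate on Spark DataFrames.

```scala
// Plain-Scala analogy of scans. All names here are hypothetical, not the CAPS API.

// Describes how to read nodes: ID column, label to assign, property columns
final case class NodeScanSpec(idColumn: String, label: String, propertyColumns: Seq[String])

// Describes how to read relationships: ID, start node, end node, type, properties
final case class RelScanSpec(
    idColumn: String,
    sourceColumn: String,
    targetColumn: String,
    relType: String,
    propertyColumns: Seq[String]
)

final case class ScannedNode(id: Long, label: String, properties: Map[String, Any])
final case class ScannedRel(id: Long, source: Long, target: Long, relType: String, properties: Map[String, Any])

object Scans {
  // A row stands in for a DataFrame row: column name -> value
  type Row = Map[String, Any]

  // Accept both Int and Long ID columns
  private def asLong(value: Any): Long = value match {
    case l: Long => l
    case i: Int  => i.toLong
    case other   => throw new IllegalArgumentException(s"Not a valid ID: $other")
  }

  private def props(row: Row, columns: Seq[String]): Map[String, Any] =
    columns.map(c => c -> row(c)).toMap

  def readNodes(rows: Seq[Row], spec: NodeScanSpec): Seq[ScannedNode] =
    rows.map(row => ScannedNode(asLong(row(spec.idColumn)), spec.label, props(row, spec.propertyColumns)))

  def readRels(rows: Seq[Row], spec: RelScanSpec): Seq[ScannedRel] =
    rows.map { row =>
      ScannedRel(
        asLong(row(spec.idColumn)),
        asLong(row(spec.sourceColumn)),
        asLong(row(spec.targetColumn)),
        spec.relType,
        props(row, spec.propertyColumns)
      )
    }

  // Tabular data shaped like the Person and Friend case classes in the example
  val personRows: Seq[Row] = Seq(
    Map("id" -> 0L, "name" -> "Alice"),
    Map("id" -> 1L, "name" -> "Bob"),
    Map("id" -> 2L, "name" -> "Carol")
  )
  val friendRows: Seq[Row] = Seq(
    Map("id" -> 0L, "source" -> 0L, "target" -> 1L, "since" -> "23/01/1987"),
    Map("id" -> 1L, "source" -> 1L, "target" -> 2L, "since" -> "12/12/2009")
  )

  val people: Seq[ScannedNode] = readNodes(personRows, NodeScanSpec("id", "Person", Seq("name")))
  val friends: Seq[ScannedRel] =
    readRels(friendRows, RelScanSpec("id", "source", "target", "FRIEND", Seq("since")))
}
```

Separating the description (the spec) from the data (the rows) means the same tables could be read as a different graph simply by changing the specs.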

Once the graph is constructed, the CAPSGraph instance supports Cypher queries through its cypher method.

```scala
import org.opencypher.caps.api.record._
import org.opencypher.caps.api.spark.{CAPSGraph, CAPSSession}

case class Person(id: Long, name: String) extends Node

case class Friend(id: Long, source: Long, target: Long, since: String) extends Relationship

object Example extends App {
  // Node and relationship data
  val persons = List(Person(0, "Alice"), Person(1, "Bob"), Person(2, "Carol"))
  val friendships = List(Friend(0, 0, 1, "23/01/1987"), Friend(1, 1, 2, "12/12/2009"))

  // Create CAPS session
  implicit val caps = CAPSSession.local()

  // Create graph from nodes and relationships
  val graph = CAPSGraph.create(persons, friendships)

  // Query graph with Cypher
  val results = graph.cypher(
    """| MATCH (a:Person)-[r:FRIEND]->(b)
       | RETURN a.name AS person, b.name AS friendsWith, r.since AS since""".stripMargin
  )

  case class ResultSchema(person: String, friendsWith: String, since: String)

  // Print result rows mapped to a case class
  results.as[ResultSchema].foreach(println)
}
```

The above program prints:

```
ResultSchema(Alice,Bob,23/01/1987)
ResultSchema(Bob,Carol,12/12/2009)
```
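The query matches every FRIEND relationship that starts at a Person node and returns one row per match. As an illustration of those semantics only, the sketch below computes the same rows with plain Scala collections, without Spark or CAPS, by joining each relationship with its endpoint nodes. The case classes here drop the CAPS Node and Relationship traits, and the object name QueryByHand is hypothetical. Note that Cypher does not guarantee row order without ORDER BY; in this sketch the order simply follows the friendships list.

```scala
// Plain Scala, no Spark or CAPS: the same data as the example, without CAPS traits
final case class Person(id: Long, name: String)
final case class Friend(id: Long, source: Long, target: Long, since: String)
final case class ResultSchema(person: String, friendsWith: String, since: String)

object QueryByHand {
  val persons = List(Person(0, "Alice"), Person(1, "Bob"), Person(2, "Carol"))
  val friendships = List(Friend(0, 0, 1, "23/01/1987"), Friend(1, 1, 2, "12/12/2009"))

  // Every node in this data is a Person, so one lookup table covers both a and b
  private val byId: Map[Long, Person] = persons.map(p => p.id -> p).toMap

  // MATCH (a:Person)-[r:FRIEND]->(b)
  // RETURN a.name AS person, b.name AS friendsWith, r.since AS since
  val results: List[ResultSchema] = for {
    r <- friendships
    a <- byId.get(r.source).toList // start node of r
    b <- byId.get(r.target).toList // end node of r
  } yield ResultSchema(a.name, b.name, r.since)
}
```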

Next steps

- How to use CAPS in Apache Zeppelin

- Look at and contribute to the Wiki

How to contribute

We would love to hear about any issues you encounter. We welcome code contributions, but please open an issue first to avoid duplicated effort.

License

The project is licensed under the Apache Software License, Version 2.0.

Copyright

© Copyright 2016-2017 Neo4j, Inc.

Apache Spark™, Spark, and Apache are registered trademarks of the Apache Software Foundation.