[ICLR2022] Entroformer: A Transformer-based Entropy Model for Learned Image Compression

The official repository for Entroformer: A Transformer-based Entropy Model for Learned Image Compression.

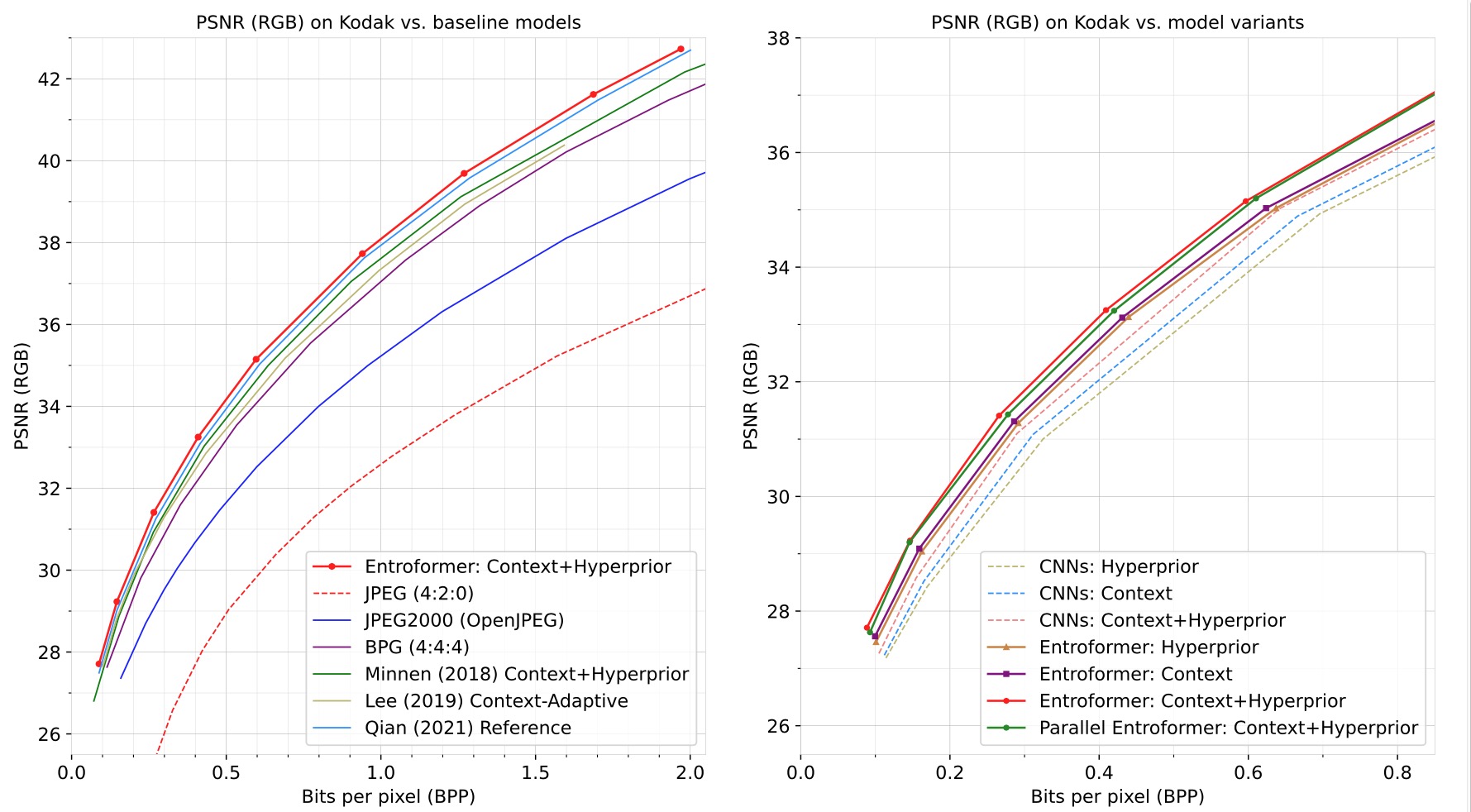

Evaluation on Kodak Dataset

Clone the repo and create a conda environment as follows:

```
conda create --name entroformer python=3.7
conda activate entroformer
conda install pytorch=1.7 torchvision cudatoolkit=10.1
pip install torchac
```

(We use PyTorch 1.7 and CUDA 10.1, and torchac for arithmetic coding.)
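Before running any script, you can quickly confirm that the packages installed above are importable with a check like the one below. It is a minimal sketch that uses only the standard library. `missing_packages` is a hypothetical helper and is not part of this repository. It only checks that the modules can be found and does not verify that the PyTorch or CUDA versions match.

```python
import importlib.util

# Import names of the packages installed by the conda/pip commands above.
REQUIRED = ["torch", "torchvision", "torchac"]


def missing_packages(names=REQUIRED):
    """Return the subset of `names` that cannot be found in this environment (hypothetical helper)."""
    return [name for name in names if importlib.util.find_spec(name) is None]


if __name__ == "__main__":
    missing = missing_packages()
    # Only reports; it does not install anything.
    print("missing: " + ", ".join(missing) if missing else "all required packages found")
```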

Kodak Dataset

```
kodak
├── image1.jpg
├── image2.jpg
└── ...
```
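The evaluation scripts take the dataset folder as an argument, so it can help to confirm that the folder actually contains images first. The sketch below uses a hypothetical helper (`list_images` is not part of this repository). It assumes the images sit directly in the folder, as in the layout above, with a `.png`, `.jpg` or `.jpeg` extension.

```python
from pathlib import Path

# Extensions treated as images; an assumption, since the layout above only shows .jpg files.
IMAGE_EXTS = {".png", ".jpg", ".jpeg"}


def list_images(root):
    """Return the sorted image files directly under `root` (hypothetical helper)."""
    root = Path(root)
    if not root.is_dir():
        raise FileNotFoundError(f"dataset directory not found: {root}")
    # Subdirectories and non-image files are skipped.
    return sorted(p for p in root.iterdir() if p.is_file() and p.suffix.lower() in IMAGE_EXTS)
```

For the standard 24-image Kodak set, `len(list_images("kodak"))` should return 24.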

Train:

```
sh scripts/pretrain.sh 0.3
sh scripts/train.sh [tradeoff_lambda (e.g. 0.02)]
```
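`train.sh` takes a `tradeoff_lambda`. Learned compression models are commonly trained on a rate-distortion loss L = R + λ·D. Here R is the estimated bit rate, computed as the negative log2-likelihood that the entropy model assigns to the quantized latents, which an arithmetic coder such as torchac approaches in practice. D is the distortion, MSE for the released models. The exact form and scaling used by this repository are not stated here. The sketch below assumes the plain form with R in bits per pixel, and the function names are hypothetical. Many codebases also rescale the MSE (for example by 255² when pixels are in [0, 1]), so check the training script for the convention actually used.

```python
import math


def bits_from_likelihoods(likelihoods):
    """Ideal code length in bits: sum of -log2(p) over all coded symbols."""
    return sum(-math.log2(p) for p in likelihoods)


def rd_loss(likelihoods, mse, num_pixels, tradeoff_lambda):
    """Assumed rate-distortion loss R + lambda * D, with R in bits per pixel."""
    bpp = bits_from_likelihoods(likelihoods) / num_pixels
    return bpp + tradeoff_lambda * mse
```

In this assumed form, a larger λ puts more weight on distortion, so training favors quality over rate.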

(You may use your own dataset by modifying the train/test data path.)

Evaluate:

```
# Kodak
sh scripts/test.sh [/path/to/kodak] [model_path]
# or the parallel variant:
sh test_parallel.sh [/path/to/kodak] [model_path]
```

Compress:

```
sh scripts/compress.sh [original.png] [model_path]
# or the parallel variant:
sh compress_parallel.sh [original.png] [model_path]
```

Decompress:

```
sh scripts/decompress.sh [original.bin] [model_path]
# or the parallel variant:
sh decompress_parallel.sh [original.bin] [model_path]
```

Download the pre-trained models optimized by MSE.

Note: We have reorganized the code, so the performance differs slightly from that reported in the paper.
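Because the numbers may differ slightly from the paper, you may want to recompute the two metrics usually reported on Kodak yourself: PSNR and bits per pixel (bpp). The sketch below assumes 8-bit images (peak value 255). It also assumes that the compressed size is the size of the `.bin` file that compression produces and that is passed to `decompress.sh`. The helper names are hypothetical and are not the repository's own evaluation code.

```python
import math
import os


def psnr(mse, max_value=255.0):
    """Peak signal-to-noise ratio in dB for a given mean squared error."""
    if mse == 0:
        return float("inf")  # identical images
    return 10.0 * math.log10(max_value ** 2 / mse)


def bpp_from_file(bin_path, height, width):
    """Bits per pixel of a compressed file: 8 * size_in_bytes / (H * W)."""
    return 8.0 * os.path.getsize(bin_path) / (height * width)
```

Kodak images are 768×512 pixels (some in portrait orientation), so `bpp_from_file("original.bin", 512, 768)` gives the rate of one compressed Kodak image. Any header bytes stored in the file are counted too.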

The codebase builds on L3C-image-compression and torchac.

If you find this code useful for your research, please cite our paper:

```
@InProceedings{Yichen_2022_ICLR,
  author    = {Qian, Yichen and Lin, Ming and Sun, Xiuyu and Tan, Zhiyu and Jin, Rong},
  title     = {Entroformer: A Transformer-based Entropy Model for Learned Image Compression},
  booktitle = {International Conference on Learning Representations},
  month     = {May},
  year      = {2022},
}
```