Why is compressed JSON usually smaller than compressed Msgpack?

CNSeniorious000 opened this issue

I benchmarked JSON and Msgpack on some real data. After compression, the msgpack-encoded data is always larger than the JSON, even though raw msgpack is smaller than raw JSON. I've tested brotli, lzma, and blosc in Python.

This is a common use case, and here is a reproducible example:

>>> a = { ... } # the embeddings API response from OpenAI

>>> len(msgpack.encode(a))

13951

>>> len(json.encode(a))

19506

>>> len(compress(msgpack.encode(a)))

9620

>>> len(compress(json.encode(a)))

6409

I wonder why, and I am thinking that maybe it is not worth using Msgpack in Web responses (because almost every browser supports compression nowadays)? No offence, I was a big fan of Msgpack and used to use it everywhere.

I found this already discussed in #203, but I've also tested msgpack on string data (like OpenAI's chat completion response), and compressed JSON is still a bit smaller. I am confused. Isn't Length-Prefixed Data better than Delimiter-Separated Data?

(Wild speculation follows.)

I've not investigated this with practical data, but your results seem relatively intuitive to me for the following reasons:

Generic compression algorithms like those you tried benefit from a good probability distribution with both sequences that appear very frequently and sequences that appear rarely: the algorithm would then select a very short encoding for the frequent symbols at the expense of using a longer encoding for the rarer symbols.

A typical (minified) JSON document has lots of { } [ ] " ,, and some frequently-occurring sequences of those like [{", }], ":", ",", etc. Those are complemented by repetitive object key strings (middling-to-high-probability) and low-frequency unique or near-unique property values, giving a general compression algorithm lots of wiggle-room to trade shorter encodings of the frequent sequences for longer encodings of the less common sequences.

On the other hand, MessagePack is in some sense domain-specific compression, and so the problem here could be similar to trying to compress already-compressed data. Its encoding format does use shorter encodings for more common elements, such as packing small numbers into single bytes and minimizing the overhead of short arrays and maps. But I suspect that means that there's a worse distribution of high-frequency vs low-frequency sequences for a general compression algorithm to benefit from.

For example, there are 18 distinct "delimiters" for arrays of different sizes in MessagePack, and so I would guess (haven't measured) that the probability of any one of those in a typical document is lower than any of JSON's more repetitive delimiters. A compression algorithm can still chew on stuff like repetitive map keys, but unless the strings are full of escape sequences (unlikely for object attributes, I think) their contents would have equal size in both MessagePack and JSON.
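The count of 18 array "delimiters" can be checked with a small sketch. The helper `array_header` below is a hypothetical name written for illustration and is not part of any MessagePack library; it follows the fixarray, array 16, and array 32 layouts from the MessagePack format description.

```python
import json


def array_header(n: int) -> bytes:
    """Return the MessagePack header bytes for an array of n elements."""
    if n < 16:
        return bytes([0x90 | n])                # fixarray: length in the low 4 bits
    if n < 2**16:
        return b"\xdc" + n.to_bytes(2, "big")   # array 16
    if n < 2**32:
        return b"\xdd" + n.to_bytes(4, "big")   # array 32
    raise ValueError("array too long for MessagePack")


# Lengths 0..15 hit every fixarray header; 16 and 2**16 hit array 16 and array 32.
lengths = list(range(16)) + [16, 2**16]
lead_bytes = {array_header(n)[0] for n in lengths}
print(len(lead_bytes))  # 18 distinct leading bytes

# JSON opens every array with the same byte, whatever its length.
json_openers = {json.dumps([0] * n)[0] for n in (0, 1, 15, 16, 100)}
print(json_openers)  # {'['}
```

The more distinct header bytes a document uses, the less often any single one of them repeats, which is the effect the comment above is guessing at.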

Without carefully studying the output of the compressors for your real input I can only speculate, of course. Truly answering this question would, I think, require taking equivalent structures in both JSON and MessagePack, compressing them both with the same algorithm, and then studying the resulting compressed encoding in detail to understand exactly what tradeoffs the compressor made. I don't really feel motivated to do that sort of analysis, since my intuition already agrees with your result. 😀
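A first step toward that kind of analysis could look like the sketch below. It is only an illustration under stated assumptions: `pack` is a hand-written encoder for a small subset of MessagePack (not the API of any real library), `compare` is a hypothetical helper, and `records` is synthetic data, not a real API response. It uses only standard-library compressors, so brotli and blosc are not covered.

```python
import bz2
import json
import lzma
import struct
import zlib


def pack(obj) -> bytes:
    """Encode a small subset of Python values as MessagePack (illustrative only)."""
    if obj is None:
        return b"\xc0"
    if isinstance(obj, bool):                      # must come before the int check
        return b"\xc3" if obj else b"\xc2"
    if isinstance(obj, int):
        if 0 <= obj < 128:
            return bytes([obj])                    # positive fixint
        for tag, fmt, limit in ((0xCC, ">B", 2**8), (0xCD, ">H", 2**16), (0xCE, ">I", 2**32)):
            if 0 <= obj < limit:
                return bytes([tag]) + struct.pack(fmt, obj)   # uint 8 / 16 / 32
        return b"\xd3" + struct.pack(">q", obj)    # int 64 fallback, not always the shortest form
    if isinstance(obj, float):
        return b"\xcb" + struct.pack(">d", obj)    # float 64
    if isinstance(obj, str):
        raw = obj.encode("utf-8")
        if len(raw) < 32:
            return bytes([0xA0 | len(raw)]) + raw  # fixstr
        if len(raw) < 2**8:
            return b"\xd9" + struct.pack(">B", len(raw)) + raw   # str 8
        if len(raw) < 2**16:
            return b"\xda" + struct.pack(">H", len(raw)) + raw   # str 16
        raise ValueError("string too long for this sketch")
    if isinstance(obj, (list, dict)):
        n = len(obj)
        fix, wide = (0x90, b"\xdc") if isinstance(obj, list) else (0x80, b"\xde")
        if n >= 2**16:
            raise ValueError("container too long for this sketch")
        head = bytes([fix | n]) if n < 16 else wide + struct.pack(">H", n)
        items = obj if isinstance(obj, list) else [x for kv in obj.items() for x in kv]
        return head + b"".join(pack(x) for x in items)
    raise TypeError(f"unsupported type: {type(obj).__name__}")


COMPRESSORS = {
    "raw": lambda b: b,
    "zlib": lambda b: zlib.compress(b, 9),
    "bz2": bz2.compress,
    "lzma": lzma.compress,
}


def compare(obj) -> dict:
    """Map compressor name -> (JSON size, MessagePack size) in bytes."""
    as_json = json.dumps(obj, separators=(",", ":")).encode("utf-8")
    as_msgpack = pack(obj)
    return {name: (len(fn(as_json)), len(fn(as_msgpack))) for name, fn in COMPRESSORS.items()}


# Synthetic, string-heavy records; replace with real data for a meaningful comparison.
records = [
    {"id": i, "role": "assistant" if i % 2 else "user", "content": f"message number {i}", "done": i % 3 == 0}
    for i in range(200)
]

for name, (json_size, msgpack_size) in compare(records).items():
    print(f"{name:>5}: json={json_size:6d}  msgpack={msgpack_size:6d}")
```

This only reports sizes. Understanding which tradeoffs the compressor made would still require inspecting the compressed stream itself.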

MessagePack should typically still "win" in situations where using compression is infeasible for some reason, but as you implied those situations are becoming fewer over time. 🤷‍♂️

> Those are complemented by repetitive object key strings (middling-to-high-probability) and low-frequency unique or near-unique property values, giving a general compression algorithm lots of wiggle-room to trade shorter encodings of the frequent sequences for longer encodings of the less common sequences.

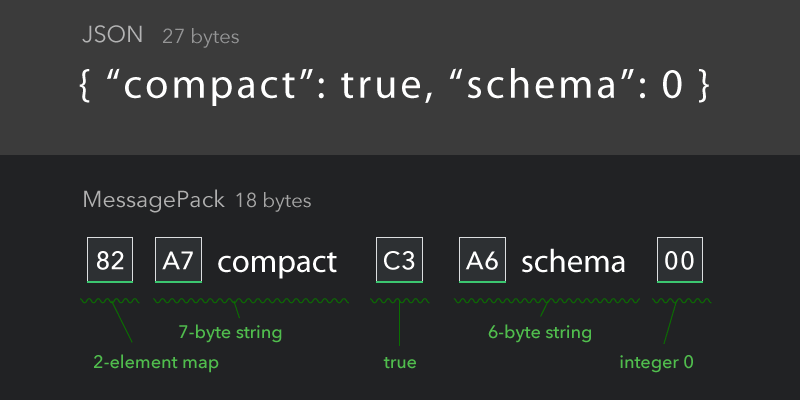

This is convincing. Here is my understanding. Take MsgPack's official demo as an example:

- After compression, constants like `true` in JSON are treated the same as `C3` in MsgPack.

- Human culture is based on decimals, so there are some high-frequency numbers like `10` and `100`, which are compressible, while their binary forms do not have any pattern. Meanwhile, numbers may appear in strings too, and JSON may take advantage of that.

- The `{"` before `compact` is treated as a single token after compression, and the following `":` is the same. However, in MsgPack, if the lengths of the strings are not always the same (which is the common situation), there will be more distinct header bytes like `A6` and `A7`, and these bytes cannot be compressed. In other words, after compression, MsgPack may behave worse on strings because it has to use an incompressible HEAD, whereas JSON just uses two (or fewer) compressible separators.
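The bytes mentioned above can be reproduced by hand. This sketch assumes the demo in question is the document `{"compact": true, "schema": 0}` shown on msgpack.org; `fixstr` is a hypothetical helper written for illustration, not a library function.

```python
import json


def fixstr(s: str) -> bytes:
    """Encode a short string as MessagePack fixstr: one header byte, then UTF-8."""
    raw = s.encode("utf-8")
    if len(raw) >= 32:
        raise ValueError("fixstr only covers strings shorter than 32 bytes")
    return bytes([0xA0 | len(raw)]) + raw      # the length is baked into the header byte


doc = {"compact": True, "schema": 0}

as_json = json.dumps(doc, separators=(",", ":")).encode("utf-8")
# 0x82 = fixmap with 2 entries, 0xc3 = true, 0x00 = positive fixint 0
as_msgpack = b"\x82" + fixstr("compact") + b"\xc3" + fixstr("schema") + b"\x00"

print(len(as_json), as_json.decode())          # 27 {"compact":true,"schema":0}
print(len(as_msgpack), as_msgpack.hex(" "))    # 18 bytes, starting with 82 a7

# Each key length gets its own header byte, while JSON always uses the same quote.
key_headers = sorted(fixstr(k)[:1].hex() for k in doc)
print(key_headers)                             # ['a6', 'a7']
```

The raw MessagePack form is shorter, but its string headers change with every key length, while the JSON separators stay identical throughout the document.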

I think this is the point. It indicates that Length-Prefixed serialization formats are indeed less friendly to compressors than Delimiter-Separated serialization formats.

Your insights inspired me a lot. Thank you very much!!

So I personally conclude as follows:

- Length-Prefixed data is less size-efficient under compression than Delimiter-Separated data.

- More fine-grained research can be done through

  > taking equivalent structures in both JSON and MessagePack, compressing them both with the same algorithm, and then studying the resulting compressed encoding in detail to understand exactly what tradeoffs the compressor made.

- Web developers should consider JSON first, now and in the future, because compression comes at almost zero cost.

- This is not to say that Length-Prefixed data is of no use. I think its use case is representing larger data, like a HEAD followed by a 100KB body. Its performance in speed and RAM usage is still a huge advantage.

- Finally, I think that when pursuing ultimate size efficiency in transmitting data, IDL-based formats like `protobuf` are what you need. An IDL removes the separators of JSON, or the HEAD that precedes each basic element in MsgPack to indicate its type. If you really want the data to be self-describing (interpretable without additional type definitions), you can transmit the type definitions before the data 😂

I would like to add to this that:

- While compression can be low-cost, for domain-specific messaging and storage it can be far better to define a format beforehand (e.g. player movement messages, game save files, etc.).

- Small messages will always be smaller in MP than in JSON, even with compression, since there is less for the compressor to work with.

- MP offers additional degrees of type safety that JSON can't, due to its lack of extensibility.

Honestly, the third point makes MP my default simply because it's far more type-safe without significant work. I'm not saying JSON can't also be type-safe, but it's harder to set up automatic conversions for Sets, Maps, or BigInts, which alone is enough for me.

In my experience, when people complain about size differences between JSON and MessagePack, 99% of the time it's because the data is full of real numbers that get encoded as MessagePack doubles. They end up being 9 bytes in the MessagePack as opposed to a decimal expansion in the JSON. (I don't know why the above wild speculation doesn't address this. It's always the doubles.)

You mentioned this is OpenAI embeddings data. Is it this? Sure looks like a lot of real numbers there. Can you attach a JSON response here so we don't need an API key to test this?

All of the compression algorithms you mentioned work in the domain of bytes so they don't do a good job of compressing floats. A decimal expansion near zero with a prefix like "-0.00" and 14 decimal digits is probably more compressible than a double that has 8 incompressible bytes plus the tag prefix.
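The size of a MessagePack double, and how a byte-oriented compressor copes with a list of them, can be checked with a sketch like the one below. Everything here is an assumption made for illustration: the values are synthetic (seeded random numbers near zero, rounded to 10 decimal places, 1536 of them), not a real embeddings response, and `pack_double` is a hypothetical helper. The measured sizes depend heavily on how many digits the real data carries, so no result is claimed here.

```python
import json
import random
import struct
import zlib


def pack_double(x: float) -> bytes:
    """Encode a float as MessagePack float 64: tag 0xcb plus 8 big-endian bytes."""
    return b"\xcb" + struct.pack(">d", x)


# Synthetic stand-in for an embedding vector (assumed shape and precision).
rng = random.Random(0)
values = [round(rng.uniform(-0.05, 0.05), 10) for _ in range(1536)]

as_json = json.dumps(values, separators=(",", ":")).encode("utf-8")
# array 16 header (0xdc + 2-byte length) followed by 9 bytes per value
as_msgpack = b"\xdc" + struct.pack(">H", len(values)) + b"".join(pack_double(v) for v in values)

print(len(pack_double(values[0])))             # 9 bytes per double
for name, blob in (("json", as_json), ("msgpack", as_msgpack)):
    compressed = zlib.compress(blob, 9)
    print(f"{name:>7}: raw={len(blob):6d}  zlib={len(compressed):6d}")
```

Swapping `values` for a real response, and `zlib` for the compressor actually in use, would show whether the doubles explain the gap in a given case.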