We introduce RF-Diffusion, a versatile generative model for wireless data. RF-Diffusion can generate various types of signals, including Wi-Fi, FMCW radar, 5G, and even modalities beyond RF, which demonstrates its capability across different signal categories. We extensively evaluate RF-Diffusion's generative capabilities and validate its effectiveness in multiple downstream tasks, including wireless sensing and 5G channel estimation.

Our basic implementation of RF-Diffusion is provided in this repository. We have released several medium-sized pre-trained models (each with 16 to 32 blocks and a hidden dimension of 128 or 256), along with part of the corresponding data files, in the releases. These can be used for performance testing.

An intuitive comparison between RF-Diffusion and three other prevalent generative models is shown as follows. For demonstration purposes, we provide the Doppler Frequency Shift (DFS) spectrogram of the Wi-Fi signal, and the Range Doppler Map (RDM) spectrogram of the FMCW Radar signal, which are representative features of the two signals, respectively. Please note that all these methods generate the raw complex-valued signals, and the spectrograms are shown for ease of illustration.

Note: The GIFs in the table below may take some time to load. If they don't appear immediately, please wait for a moment or try refreshing the webpage.

| Signal | Ground Truth | RF-Diffusion | DDPM [1] | DCGAN [2] | CVAE [3] |
|---|---|---|---|---|---|
| Wi-Fi (DFS) | | | | | |
| FMCW (RDM) | | | | | |

As shown, RF-Diffusion generates signals that accurately retain their physical features.
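Because all methods output raw complex-valued signals, the spectrograms in the table are derived afterwards. The sketch below shows one common way to turn a single FMCW frame into a Range-Doppler Map: an FFT over fast time (samples within a chirp) gives range bins, and an FFT over slow time (across chirps) gives Doppler bins. It is a minimal NumPy illustration, assuming a frame shaped `(num_chirps, samples_per_chirp)`. The function name `range_doppler_map` is hypothetical, and this is not the repository's own preprocessing, which may add windowing or other steps.

```python
import numpy as np


def range_doppler_map(frame: np.ndarray) -> np.ndarray:
    """Compute a Range-Doppler Map (in dB) from one complex FMCW frame.

    Assumes `frame` has shape (num_chirps, samples_per_chirp): rows are
    chirps (slow time), columns are ADC samples within a chirp (fast time).
    """
    # Range FFT along fast time (within each chirp).
    range_fft = np.fft.fft(frame, axis=1)
    # Doppler FFT along slow time (across chirps); shift so zero Doppler is centered.
    rd = np.fft.fftshift(np.fft.fft(range_fft, axis=0), axes=0)
    # Magnitude in dB; the small epsilon avoids log10(0).
    return 20 * np.log10(np.abs(rd) + 1e-12)


if __name__ == "__main__":
    num_chirps, num_samples = 16, 32
    m = np.arange(num_chirps)[:, None]   # chirp index (slow time)
    n = np.arange(num_samples)[None, :]  # sample index (fast time)
    # Synthetic single target: beat-frequency bin 5, Doppler bin 3.
    frame = np.exp(2j * np.pi * (5 * n / num_samples + 3 * m / num_chirps))
    rdm = range_doppler_map(frame)
    peak = tuple(int(i) for i in np.unravel_index(np.argmax(rdm), rdm.shape))
    print(peak)  # (11, 5): Doppler row 3 + 16 // 2 after fftshift, range column 5
```

A DFS spectrogram for Wi-Fi is obtained analogously with a short-time Fourier transform over time rather than a 2D FFT.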

You can run the evaluation script that produces the major figures in our paper in two ways.

1. (Recommended) Google Colab Notebook

   - Simply open this notebook. Under the **Runtime** tab, select **Run all**.
   - Wait about 15 minutes while the data are processed.
   - The figures will be displayed in your browser.

2. Local Setup

   - Clone this repository.
   - Install Python 3 if you have not already. Then run `pip3 install -r requirements.txt` in the root of the `/plots` folder to install the dependencies.
   - Run the code files in the `/plots/code` directory one by one, and wait about 15 minutes while the data are processed.
   - The figures used in our paper can be found in the `/plots/img` directory.

In this section, we provide training code, testing code, and pre-trained models. You can use our pre-trained models for further testing, or customize the models for your specific tasks. We hope this facilitates broader use of RF-Diffusion within the community.

RF-Diffusion is implemented with Python 3.8 and PyTorch 2.0.1. We manage the development environment using Conda. Execute the following commands to configure the development environment.

- Create a conda environment called `RF-Diffusion` based on Python 3.8, and activate the environment.

  ```bash
  conda create -n RF-Diffusion python=3.8
  conda activate RF-Diffusion
  ```

- Install PyTorch, as well as other required packages.

  ```bash
  pip3 install torch
  pip3 install numpy scipy tensorboard tqdm matplotlib torchvision pytorch_fid
  ```

For more details about the environment configuration, refer to the requirements.txt file in releases.
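To confirm that the active environment matches the versions above (Python 3.8, PyTorch 2.0.1), a small check like the following may help. It is a sketch, not part of the repository: the helper name `env_report` is hypothetical, and it only queries installed distribution versions with the standard-library `importlib.metadata`.

```python
import sys
from importlib import metadata

# Packages installed by the pip3 commands above (names as passed to pip3).
PACKAGES = ["torch", "numpy", "scipy", "tensorboard", "tqdm",
            "matplotlib", "torchvision", "pytorch_fid"]


def env_report(packages=PACKAGES):
    """Return the Python version and each package's installed version (None if missing)."""
    report = {"python": "{}.{}.{}".format(*sys.version_info[:3])}
    for name in packages:
        try:
            report[name] = metadata.version(name)
        except metadata.PackageNotFoundError:
            report[name] = None
    return report


if __name__ == "__main__":
    for name, version in env_report().items():
        print(f"{name:12s} {version or 'NOT INSTALLED'}")
    if sys.version_info[:2] != (3, 8):
        print("Warning: RF-Diffusion was developed with Python 3.8.")
```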

Download or `git clone` the RF-Diffusion project. Then download `dataset.zip` and `model.zip` from the releases and unzip them into the project directory:

```bash
unzip -q dataset.zip -d 'RF-Diffusion/dataset'
unzip -q model.zip -d 'RF-Diffusion'
```

The project structure is shown as follows:

In the following, `task_id` distinguishes four tasks: 0 for Wi-Fi signal synthesis, 1 for FMCW signal synthesis, 2 for 5G channel estimation, and 3 for EEG denoising.

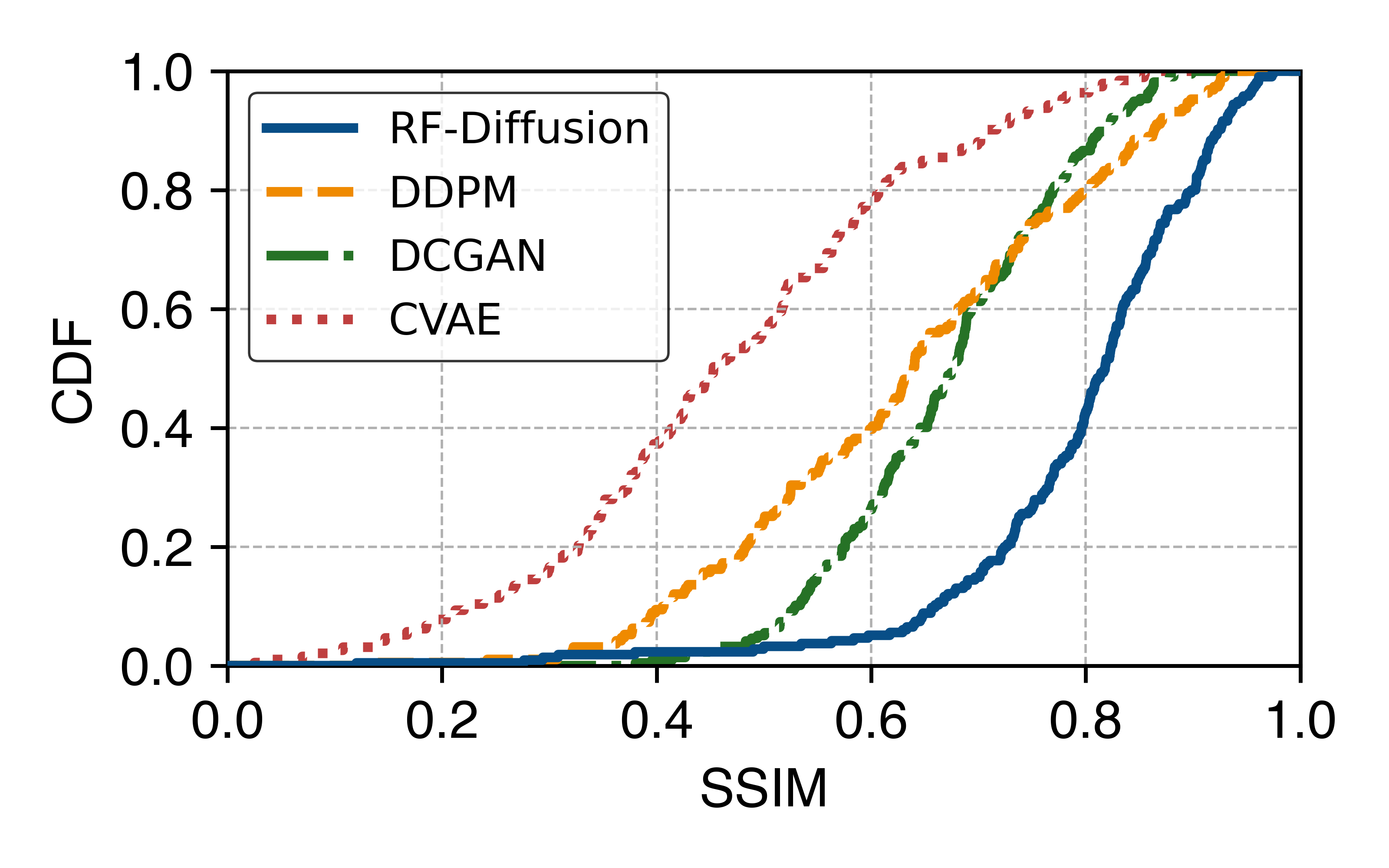

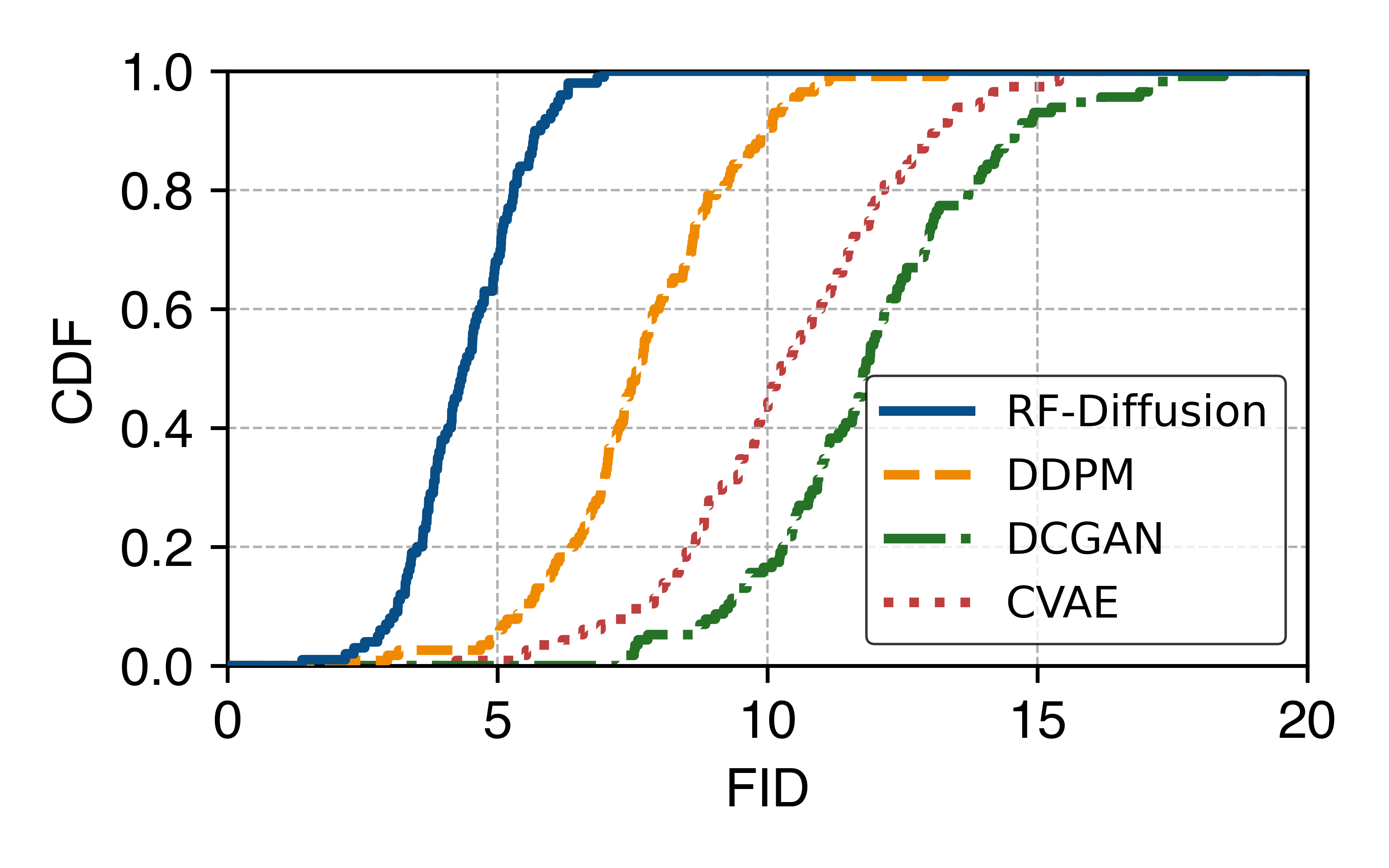
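The `task_id` convention described above can be captured in a small lookup table. The sketch below is a hypothetical helper (`TASKS` and `inference_command` are not part of the repository) that builds the `inference.py` command for a given task. This README only shows commands for task_ids 0, 1, and 2; the command for task 3 (EEG denoising) assumes the same interface.

```python
import shlex

# task_id values as described in this README.
TASKS = {
    0: "Wi-Fi signal synthesis",
    1: "FMCW signal synthesis",
    2: "5G FDD channel estimation",
    3: "EEG denoising",  # assumption: same inference.py interface as tasks 0-2
}


def inference_command(task_id: int) -> list:
    """Build the argv list for `python3 inference.py --task_id <task_id>`."""
    if task_id not in TASKS:
        raise ValueError(f"unknown task_id {task_id}; expected one of {sorted(TASKS)}")
    return ["python3", "inference.py", "--task_id", str(task_id)]


if __name__ == "__main__":
    # Print (rather than run) each command; pass the list to
    # subprocess.run(..., check=True) from the project root to execute it.
    for tid, name in TASKS.items():
        print(f"{name}: {shlex.join(inference_command(tid))}")
```

Returning an argument list rather than a single string lets it be passed straight to `subprocess.run` without shell quoting issues.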

By executing the following command, you can generate new Wi-Fi data. The corresponding average SSIM (Structural Similarity Index Measure) and FID (Fréchet Inception Distance) will be displayed in the command line, matching the values reported in section 6.2: Overall Generation Quality of the paper.

```bash
python3 inference.py --task_id 0
```

The generated data are stored in `.mat` format and can be found in `./dataset/wifi/output`.
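To inspect the generated samples in Python, the `.mat` files can be read with SciPy. The variable names stored inside these files are not documented in this README, so the sketch below (the helper `load_generated` is hypothetical) keeps every user variable and drops only MATLAB's header entries, whose keys start with `__`.

```python
from pathlib import Path

import numpy as np
from scipy.io import loadmat


def load_generated(output_dir="./dataset/wifi/output"):
    """Load every .mat file in `output_dir` into {filename: {variable: array}}."""
    out_dir = Path(output_dir)
    if not out_dir.is_dir():
        raise FileNotFoundError(f"{out_dir} not found; run inference.py first")
    samples = {}
    for path in sorted(out_dir.glob("*.mat")):
        contents = loadmat(str(path))
        # Skip __header__, __version__, __globals__ metadata entries.
        samples[path.name] = {k: np.asarray(v) for k, v in contents.items()
                              if not k.startswith("__")}
    return samples
```

For example, `load_generated("./dataset/fmcw/output")` loads the FMCW samples produced by the next task in the same way.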

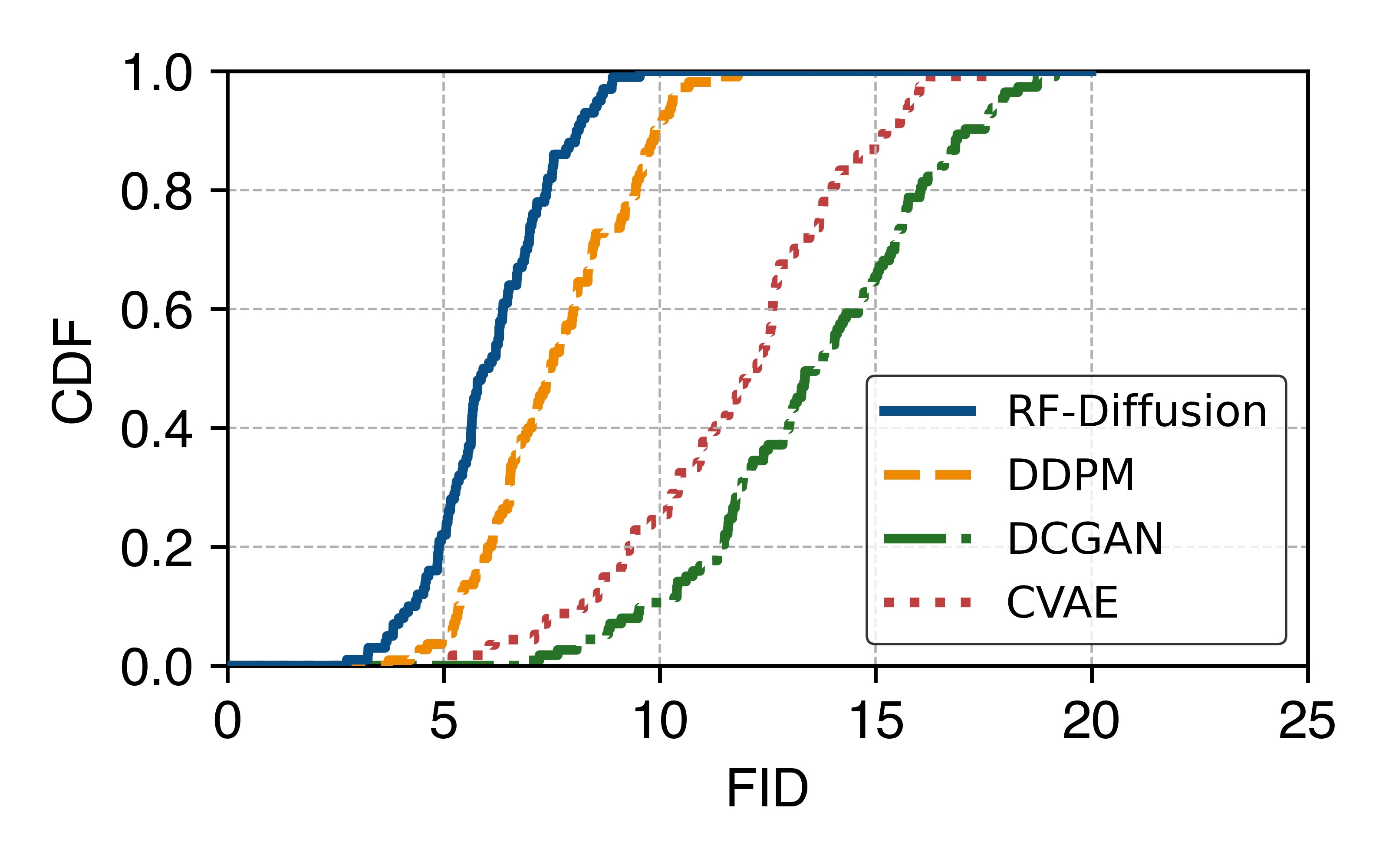

Our model achieves the best performance in both SSIM (Structural Similarity Index Measure) and FID (Fréchet Inception Distance) compared to other prevalent generative models:

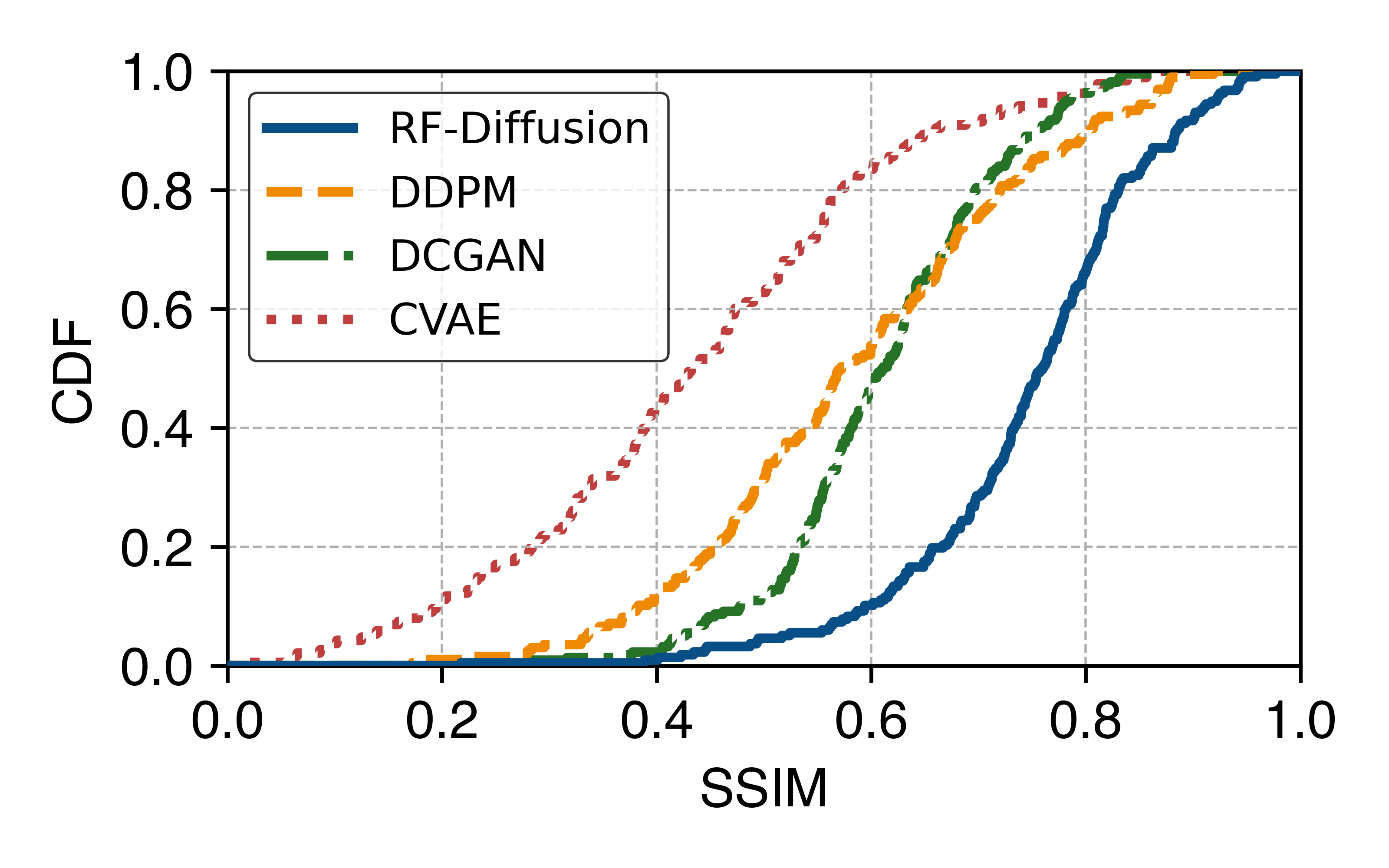
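SSIM compares two images by their mean intensity (luminance), variance (contrast), and covariance (structure). The sketch below computes it once over the whole image, which is a simplification: standard implementations such as scikit-image's `skimage.metrics.structural_similarity` average the same quantity over local Gaussian windows. It assumes real-valued inputs such as magnitude spectrograms; which representation the repository's evaluation uses is not stated here, and `global_ssim` is a hypothetical name.

```python
import numpy as np


def global_ssim(x, y, data_range=None):
    """SSIM computed once over the whole image (no sliding window).

    Illustrative only; windowed SSIM averages this over local patches.
    Undefined for two identical constant images (data_range == 0).
    """
    x = np.asarray(x, dtype=np.float64)
    y = np.asarray(y, dtype=np.float64)
    if data_range is None:
        data_range = max(x.max(), y.max()) - min(x.min(), y.min())
    c1 = (0.01 * data_range) ** 2  # stabilizes the luminance term
    c2 = (0.03 * data_range) ** 2  # stabilizes the contrast/structure term
    mu_x, mu_y = x.mean(), y.mean()
    var_x, var_y = x.var(), y.var()
    cov_xy = ((x - mu_x) * (y - mu_y)).mean()
    return float(((2 * mu_x * mu_y + c1) * (2 * cov_xy + c2))
                 / ((mu_x ** 2 + mu_y ** 2 + c1) * (var_x + var_y + c2)))
```

Identical inputs give exactly 1, and any distortion lowers the score, which is why higher SSIM indicates better generation quality.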

By executing the following command, you can generate FMCW data. The corresponding average SSIM (Structural Similarity Index Measure) and FID (Fréchet Inception Distance) will be displayed in the command line, matching the values reported in section 6.2: Overall Generation Quality of the paper.

```bash
python3 inference.py --task_id 1
```

The generated data are stored in `.mat` format and can be found in `./dataset/fmcw/output`.

Our model also achieves the best performance in both SSIM (Structural Similarity Index Measure) and FID (Fréchet Inception Distance) compared to other prevalent generative models.
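FID fits a Gaussian to feature embeddings of real and of generated samples and measures the Fréchet distance between the two: $\lVert\mu_r-\mu_g\rVert^2 + \mathrm{Tr}\big(\Sigma_r + \Sigma_g - 2(\Sigma_r\Sigma_g)^{1/2}\big)$. Lower is better. The `pytorch_fid` package installed above computes it on Inception-v3 features; the sketch below covers only the distance step and assumes the features are already extracted into arrays. It is not the repository's evaluation code, and `frechet_distance` is a hypothetical name.

```python
import numpy as np
from scipy import linalg


def frechet_distance(feats_real, feats_fake):
    """Fréchet distance between Gaussians fitted to two feature sets.

    Each input has shape (num_samples, feature_dim) with feature_dim >= 2.
    """
    mu1, mu2 = feats_real.mean(axis=0), feats_fake.mean(axis=0)
    sigma1 = np.cov(feats_real, rowvar=False)
    sigma2 = np.cov(feats_fake, rowvar=False)
    # Matrix square root of the covariance product; drop tiny imaginary
    # parts that can appear from numerical error.
    covmean = linalg.sqrtm(sigma1 @ sigma2)
    if np.iscomplexobj(covmean):
        covmean = covmean.real
    diff = mu1 - mu2
    return float(diff @ diff + np.trace(sigma1 + sigma2 - 2 * covmean))
```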

A pre-trained RF-Diffusion model can be used as a data augmenter that generates synthetic RF signals of a designated type. The synthetic samples are mixed with the original dataset, and the combined data are used to train the wireless sensing model. You can try the data generation task on your own dataset by following the instructions in RF Data Generation, and then train your own model with both real-world and synthetic data.
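The mixing step can be as simple as concatenating the two sets and shuffling them together. The sketch below assumes each synthetic sample is labeled with the condition it was generated from (for example, a gesture class) and keeps an `is_synthetic` mask so the contribution of synthetic data can be analyzed later. The helper name is hypothetical, and the ratio of synthetic to real samples is a design choice this README does not fix.

```python
import numpy as np


def mix_real_and_synthetic(real_x, real_y, syn_x, syn_y, seed=0):
    """Concatenate real and synthetic samples and shuffle them together.

    Returns shuffled (x, y, is_synthetic), where is_synthetic marks
    which rows came from the generative model.
    """
    x = np.concatenate([real_x, syn_x], axis=0)
    y = np.concatenate([real_y, syn_y], axis=0)
    is_synthetic = np.concatenate([np.zeros(len(real_x), dtype=bool),
                                   np.ones(len(syn_x), dtype=bool)])
    perm = np.random.default_rng(seed).permutation(len(x))
    return x[perm], y[perm], is_synthetic[perm]
```

The shuffled arrays can then feed any training loop, for example by wrapping them in a framework-specific dataset object.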

To train a new model, place your own data files in the `./dataset/wifi/raw` or `./dataset/fmcw/raw` directory, and then run the `train.py` script. You may need to adjust `./tfdiff/params.py` to match your input data format.
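Staging your own files into those directories can be scripted. The sketch below assumes your data are `.mat` files (the same format the inference outputs use; the README does not specify the raw format) and maps task_ids 0 and 1 to the two raw-data directories named above. The helper `stage_raw_data` and the `RAW_DIRS` table are hypothetical.

```python
import shutil
from pathlib import Path

# Raw-data directories named in this README, keyed by task_id.
RAW_DIRS = {0: Path("./dataset/wifi/raw"), 1: Path("./dataset/fmcw/raw")}


def stage_raw_data(src_dir, task_id, project_root="."):
    """Copy the .mat files in `src_dir` into the raw-data folder for `task_id`.

    Raises KeyError for task_ids without a raw-data directory listed above.
    Returns the list of copied destination paths.
    """
    dest = Path(project_root) / RAW_DIRS[task_id]
    dest.mkdir(parents=True, exist_ok=True)
    copied = []
    for f in sorted(Path(src_dir).glob("*.mat")):
        # copy2 also preserves file timestamps.
        copied.append(Path(shutil.copy2(f, dest / f.name)))
    return copied
```

After staging, run `train.py` as described above, adjusting `./tfdiff/params.py` if your data shape differs.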

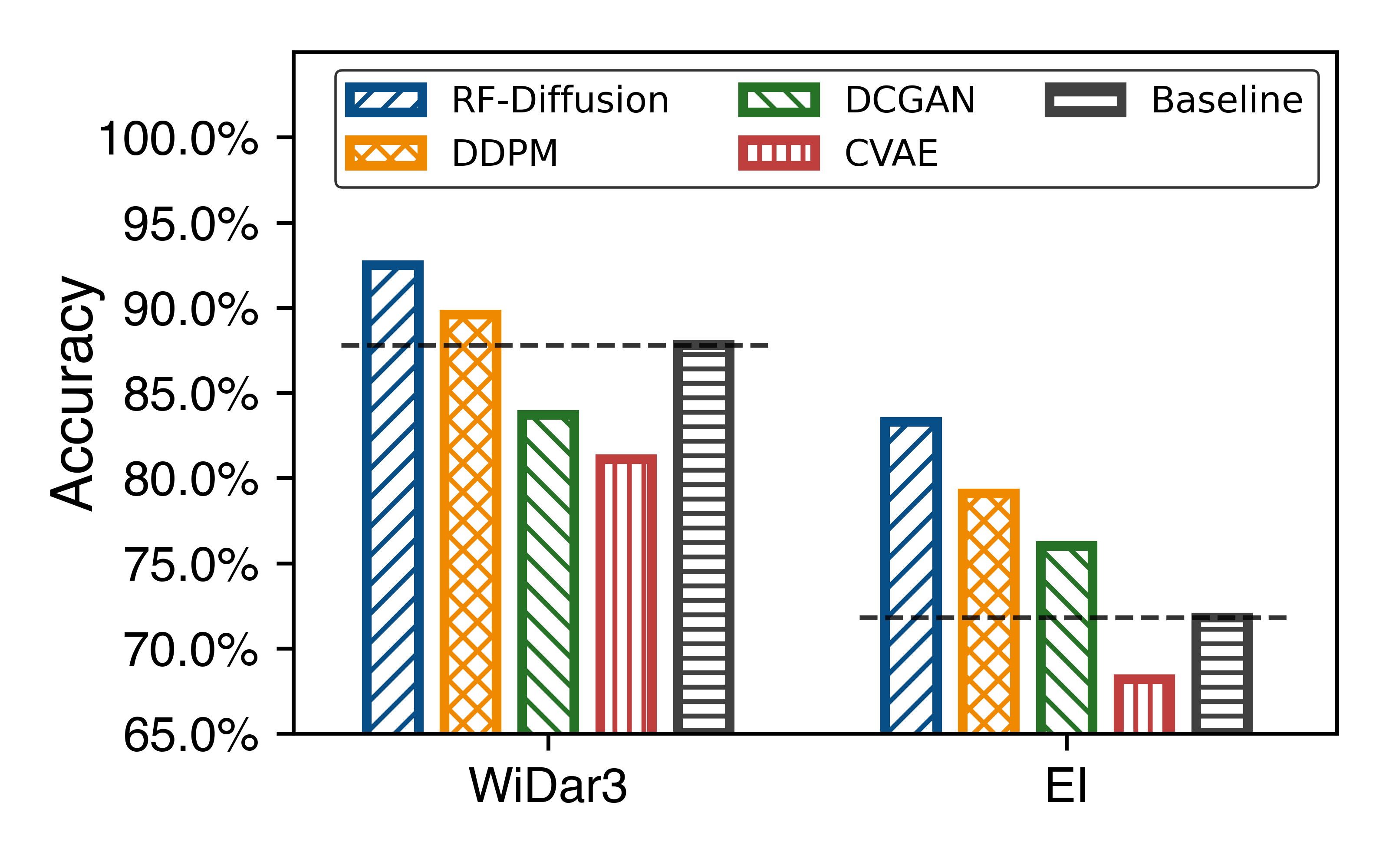

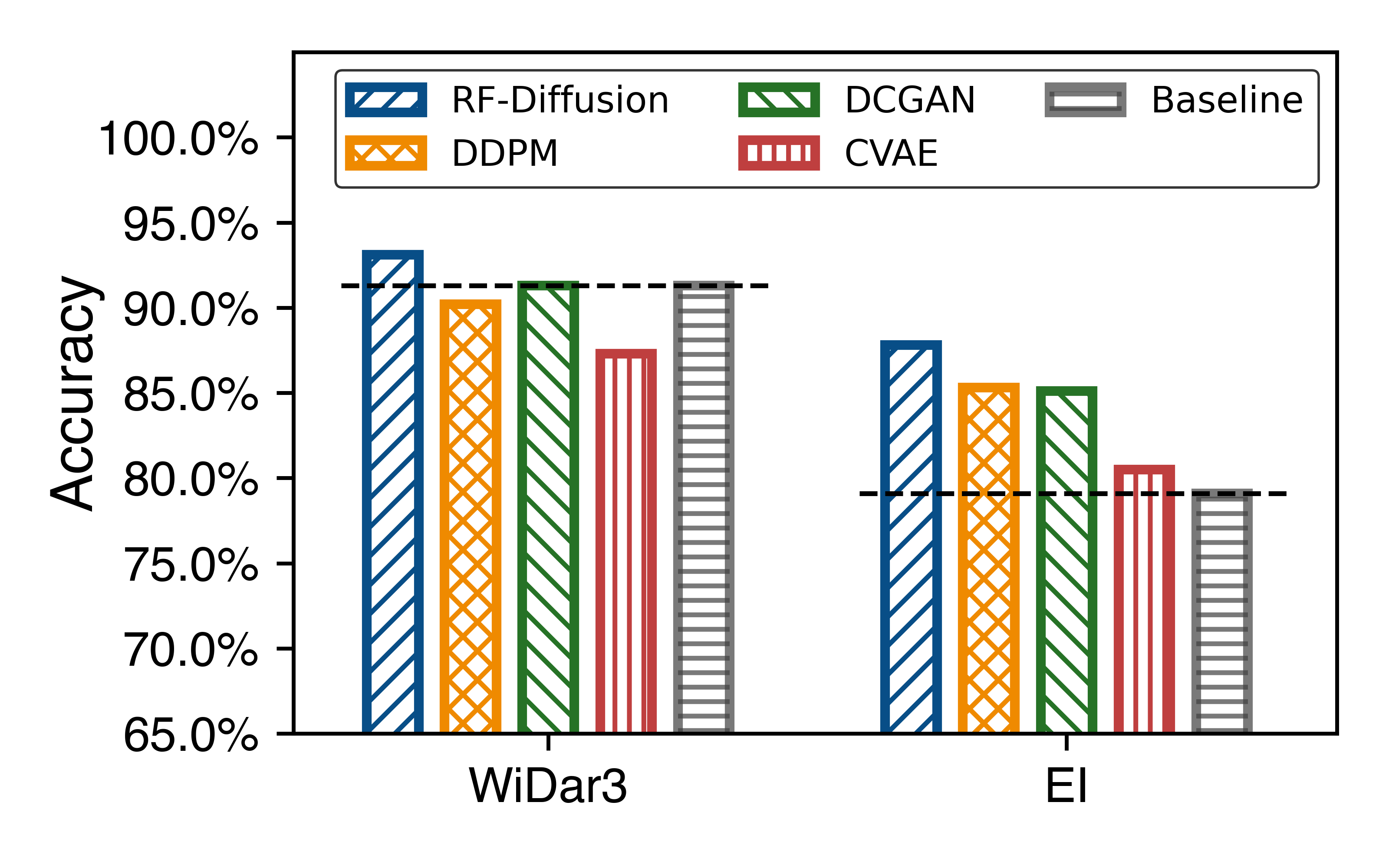

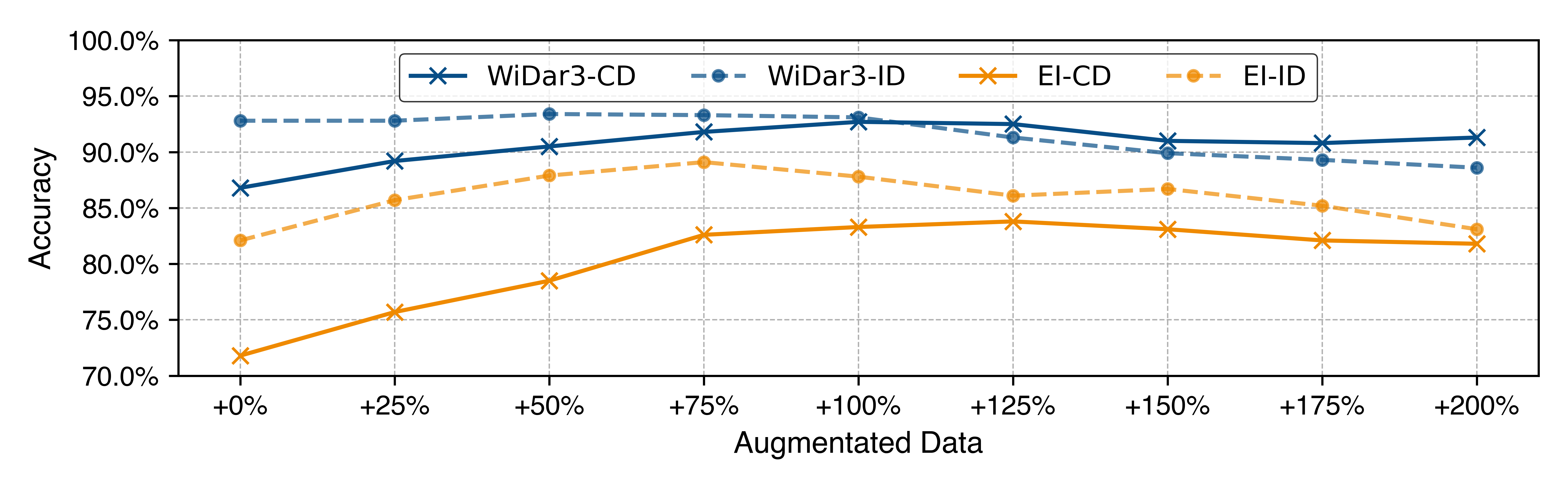

Take Wi-Fi gesture recognition as an example. We use the Widar3.0 dataset and perform augmented wireless sensing with two models, Widar3.0 and EI, to measure the performance gain of data augmentation in both cross-domain and in-domain scenarios. The results can be found in section 7.1: Wi-Fi Gesture Recognition of the paper.

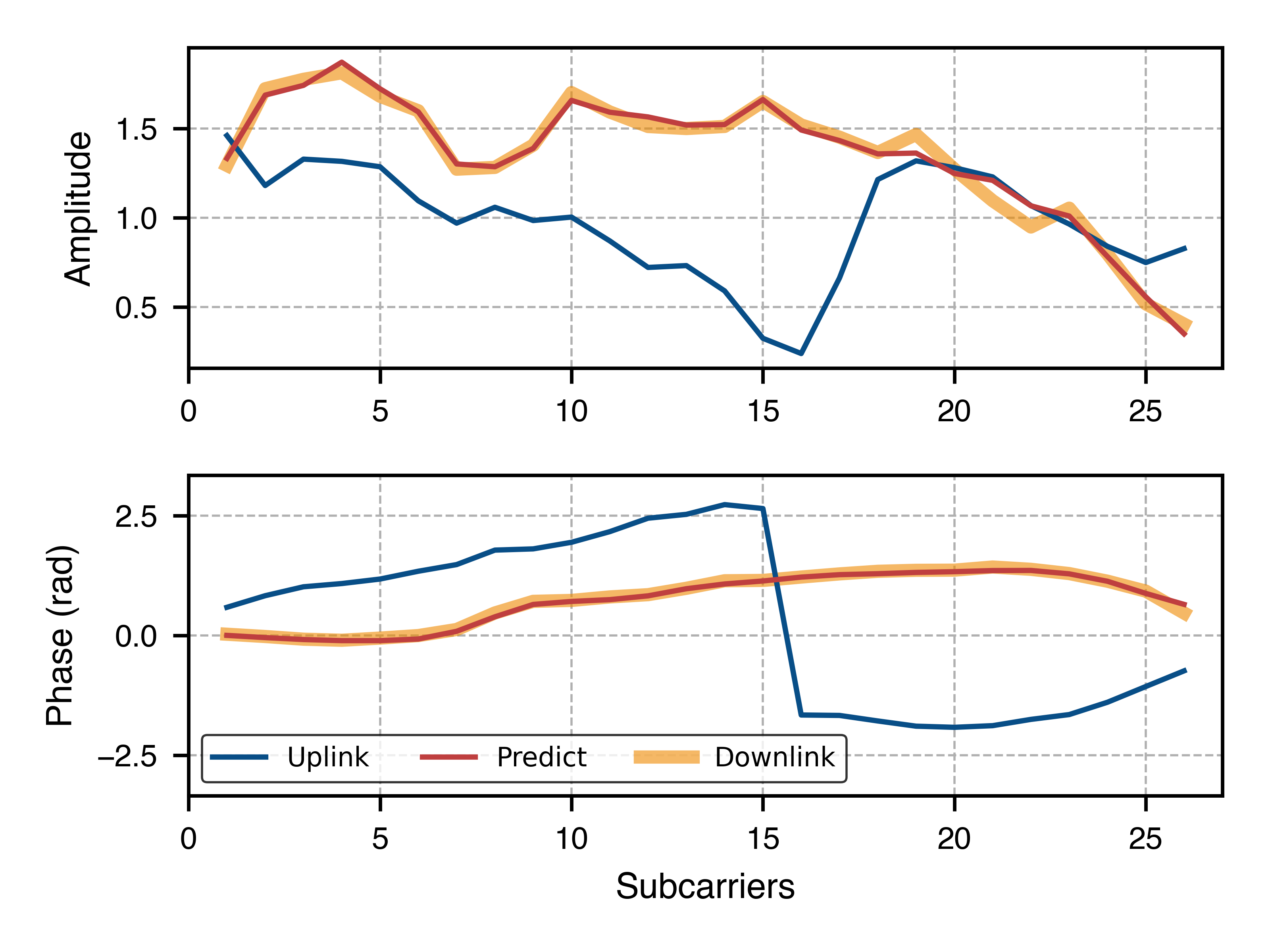

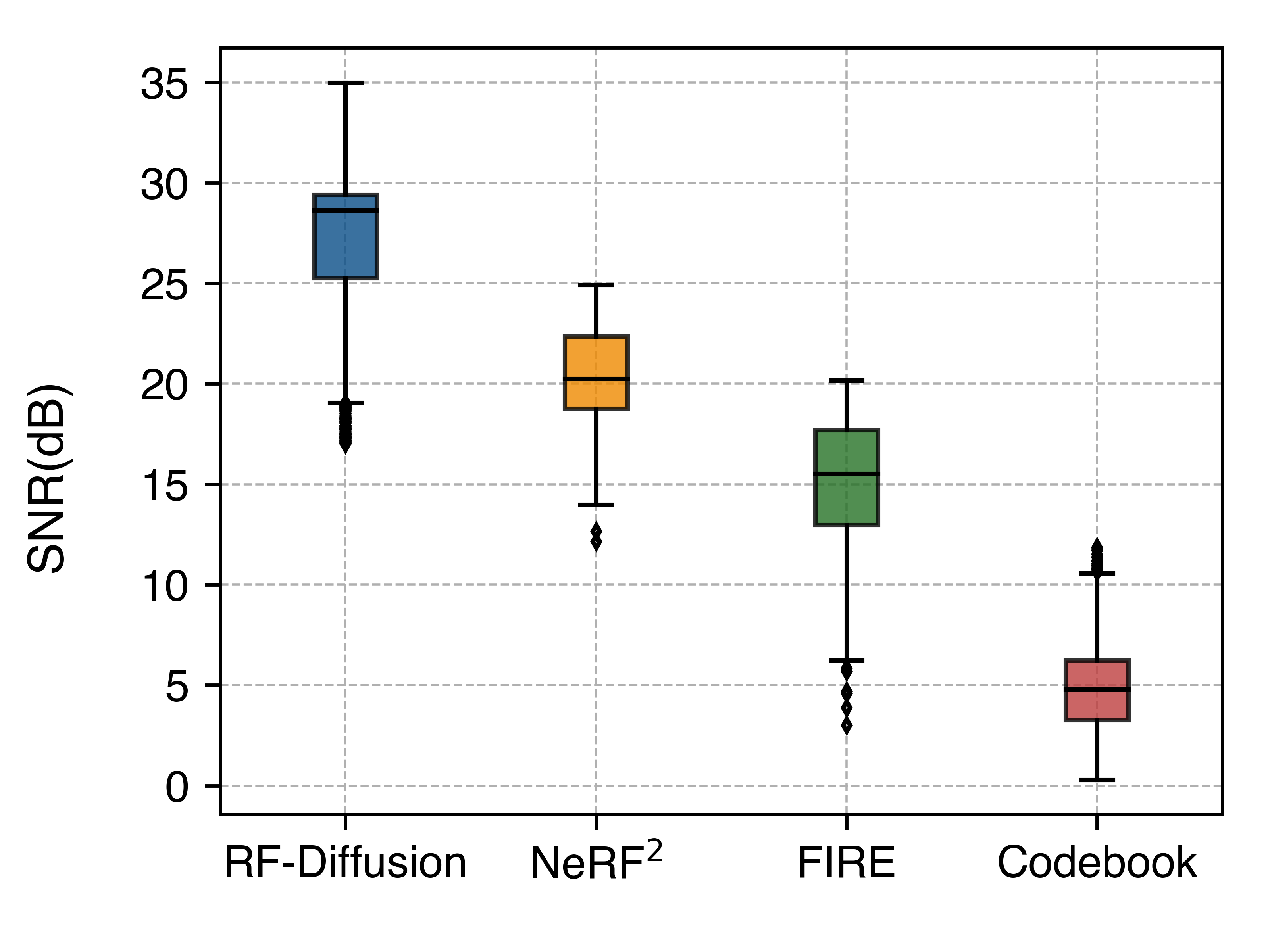

Run the following command to perform downlink channel estimation for a 5G FDD system.

```bash
python3 inference.py --task_id 2
```

The corresponding average Signal-to-Noise Ratio (SNR) will be displayed in the command line, and is reported in section 7.2: 5G FDD Channel Estimation of the paper.
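A common way to express the quality of a channel estimate as an SNR is the ratio of channel power to estimation-error power, in dB: $10\log_{10}\big(\sum|H|^2 / \sum|H-\hat H|^2\big)$. The sketch below implements that definition on complex channel matrices. It is an assumption for illustration; we have not confirmed that it matches the exact definition used by the evaluation script, and `estimation_snr_db` is a hypothetical name.

```python
import numpy as np


def estimation_snr_db(h_true, h_est):
    """SNR of a channel estimate in dB: channel power over estimation-error power."""
    signal_power = np.sum(np.abs(h_true) ** 2)
    error_power = np.sum(np.abs(h_true - h_est) ** 2)
    return float(10 * np.log10(signal_power / error_power))


if __name__ == "__main__":
    h = np.ones((4, 8), dtype=complex)      # toy downlink channel
    print(estimation_snr_db(h, 0.9 * h))   # ~20 dB: error is 10% of the amplitude
```

Each 10x reduction in error amplitude adds 20 dB, so higher values mean a more accurate estimate.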

The channel estimation is evaluated on the Argos dataset. As the results show, our model outperforms the state-of-the-art models.

The code, data, and related scripts are made available under the GNU General Public License v3.0. By downloading or using them, you agree to the terms of this license.

If you use our dataset in your work, please cite it as follows:

```bibtex
@inproceedings{chi2024rf,
  title={RF-Diffusion: Radio Signal Generation via Time-Frequency Diffusion},
  author={Chi, Guoxuan and Yang, Zheng and Wu, Chenshu and Xu, Jingao and Gao, Yuchong and Liu, Yunhao and Han, Tony Xiao},
  booktitle={Proceedings of the 30th Annual International Conference on Mobile Computing and Networking},
  pages={77--92},
  year={2024}
}
```

Footnotes

1. Ho J, Jain A, Abbeel P. Denoising diffusion probabilistic models. Advances in Neural Information Processing Systems, 2020, 33: 6840-6851.
2. Radford A, Metz L, Chintala S. Unsupervised representation learning with deep convolutional generative adversarial networks. arXiv preprint arXiv:1511.06434, 2015.
3. Sohn K, Lee H, Yan X. Learning structured output representation using deep conditional generative models. Advances in Neural Information Processing Systems, 2015, 28.