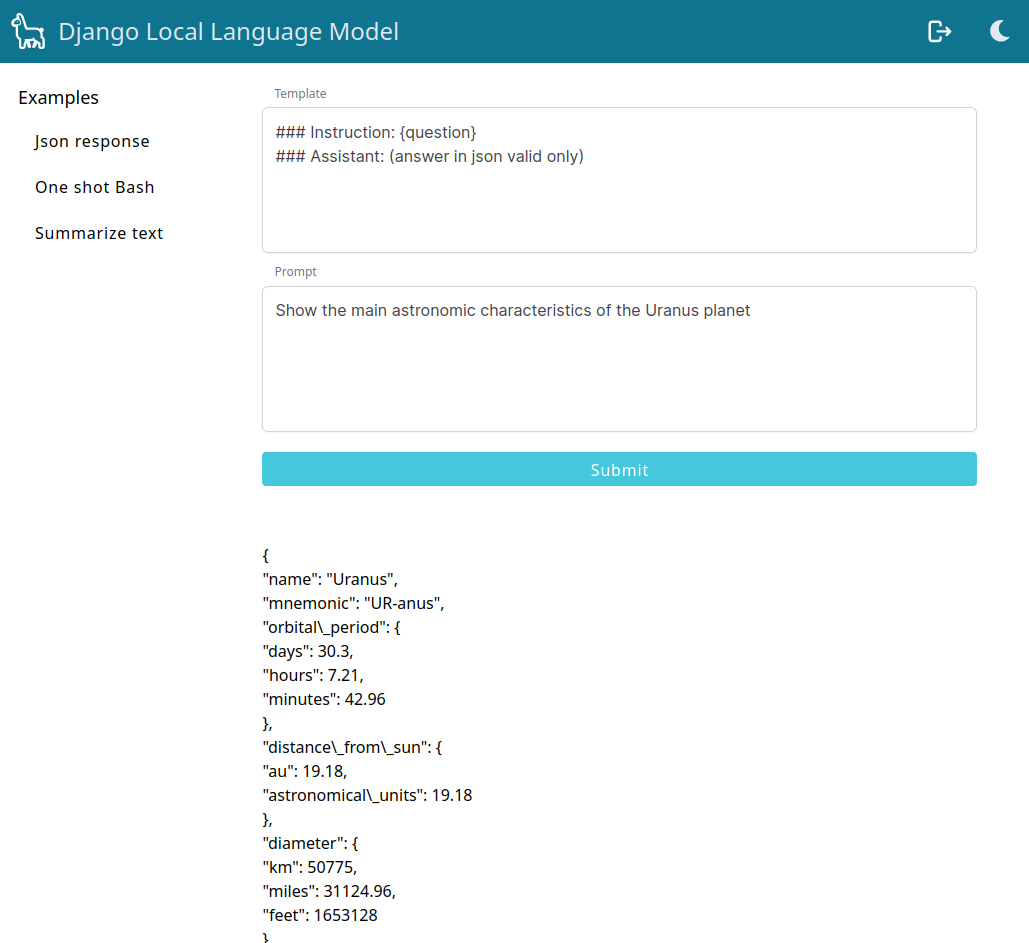

Run a local AI from Django with Llama.cpp

Clone the repository and install the dependencies:

git clone https://github.com/emencia/django-local-ai

cd django-local-ai

make install

If this fails with the error Failed to build llama-cpp-python, try the following and run make install again:

source .venv/bin/activate

export SETUPTOOLS_USE_DISTUTILS=stdlib

pip install --upgrade --force-reinstall setuptools

Get the websockets server:

make installws

To make this work you need a Llama.cpp-compatible language model in ggml format. For example, we will use GPT4All-13B-snoozy from the TheBloke/GPT4All-13B-snoozy-GGML repository on Hugging Face:

cd some/dir/where/to/put/your/models

wget https://huggingface.co/TheBloke/GPT4All-13B-snoozy-GGML/resolve/main/GPT4All-13B-snoozy.ggmlv3.q5_0.bin

Change your settings accordingly by updating the MODEL_PATH setting in main/settings.py. Use an absolute path.
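Because MODEL_PATH has to be an absolute path, a small check like the one below can catch a relative or mistyped path before the task queue tries to load the model. This is only a sketch: the helper name check_model_path and the example path are hypothetical and are not part of django-local-ai.

```python
from pathlib import Path


def check_model_path(model_path: str) -> Path:
    """Return the path as a Path if it is absolute and points to a file."""
    path = Path(model_path).expanduser()
    if not path.is_absolute():
        raise ValueError(f"MODEL_PATH must be absolute, got: {model_path}")
    if not path.is_file():
        raise FileNotFoundError(f"No model file found at: {path}")
    return path


if __name__ == "__main__":
    # Hypothetical location: replace it with the directory you ran wget in.
    example = "/some/dir/where/to/put/your/models/GPT4All-13B-snoozy.ggmlv3.q5_0.bin"
    try:
        print("Model found:", check_model_path(example))
    except (ValueError, FileNotFoundError) as err:
        print("Check failed:", err)
```

Running the script with your own path prints either the validated path or the reason the check failed.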

Then append this to your Django settings (./main/settings.py):

CENTRIFUGO_HOST = "http://localhost"

CENTRIFUGO_PORT = 8427

CENTRIFUGO_HMAC_KEY = "b4265250-3672-4ed9-abe5-b17ca67d0104"

CENTRIFUGO_API_KEY = "21dae466-e63e-41d1-8f75-3aa94b41c893"

SITE_NAME = "django-local-ai"

Don't forget to finish the setup:

.venv/bin/python manage.py initws --settings=main.settings.local

Now you're ready to enjoy your local LLM!

Run the http server:

make run

Run the task queue that will handle language model calls:

make lm

Run the websockets server:

make ws

Create a superuser:

make superuser

Open the frontend at localhost:8000 and log in.
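If the frontend does not load, it can help to confirm that the servers started above are actually listening. The sketch below is not part of the project. It assumes the http server is on port 8000 (the frontend address above) and that the websockets server uses the CENTRIFUGO_PORT value 8427 from the settings. The function name port_is_open is hypothetical.

```python
import socket


def port_is_open(host: str, port: int, timeout: float = 1.0) -> bool:
    """Return True if a TCP connection to host:port succeeds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


if __name__ == "__main__":
    # Assumed ports: 8000 for the frontend, 8427 from CENTRIFUGO_PORT.
    for name, port in (("http server", 8000), ("websockets server", 8427)):
        state = "up" if port_is_open("localhost", port) else "not reachable"
        print(f"{name} on port {port}: {state}")
```

A port reported as not reachable usually means the matching make target is not running in its own terminal.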