VCGAN is a hybrid colorization model that works for both image and video colorization tasks.

arxiv: https://arxiv.org/pdf/2104.12357.pdf

This project is implemented with Python 3.6, CUDA 8.0, and PyTorch 1.0.0 (minimum requirement).

The cupy, opencv, and scikit-image libraries are also required.

PWC-Net is used to compute optical flow, so please set up an appropriate environment for it.
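
As a quick sanity check before training, the Python-side requirements above can be verified with a short script. This is a minimal sketch and not part of the VCGAN code: the helper name `check_environment` is hypothetical, and it only tests whether each package can be located under its usual import name (`torch`, `cupy`, `cv2`, `skimage`). It does not check the CUDA toolkit version or the PWC-Net setup.

```python
import importlib.util
import sys

# Usual import names for PyTorch, CuPy, OpenCV, and scikit-image.
REQUIRED_MODULES = ["torch", "cupy", "cv2", "skimage"]
MIN_PYTHON = (3, 6)


def check_environment(modules=REQUIRED_MODULES, min_python=MIN_PYTHON):
    """Return a dict mapping each requirement to True (found) or False."""
    report = {"python": sys.version_info[:2] >= min_python}
    for name in modules:
        # find_spec locates a package without actually importing it.
        report[name] = importlib.util.find_spec(name) is not None
    return report


if __name__ == "__main__":
    for requirement, ok in check_environment().items():
        print(f"{requirement:8s} {'ok' if ok else 'MISSING'}")
```

Any entry reported as `MISSING` should be installed before running the training or testing scripts.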

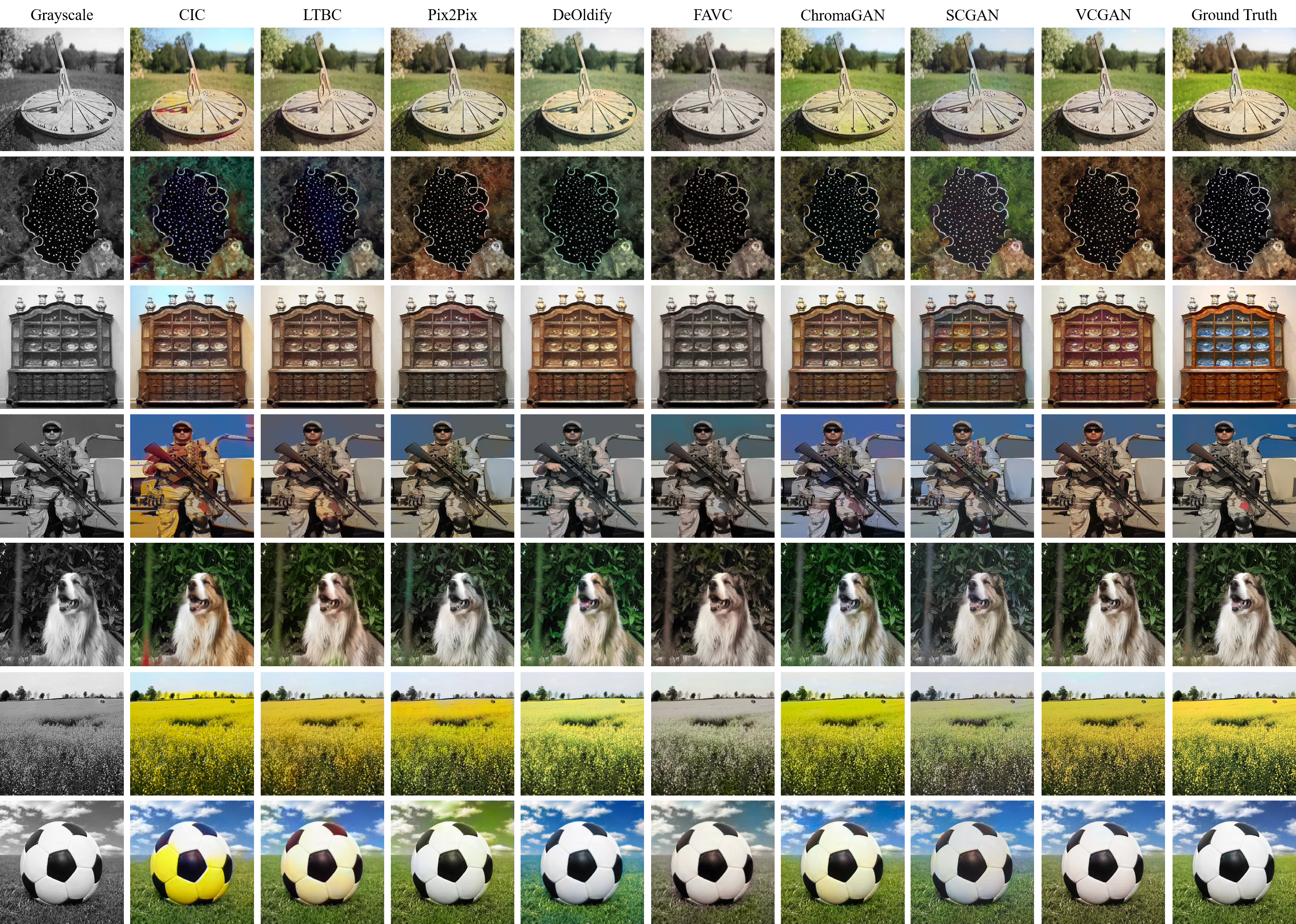

So far, we show two samples from the main paper here for easier viewing.

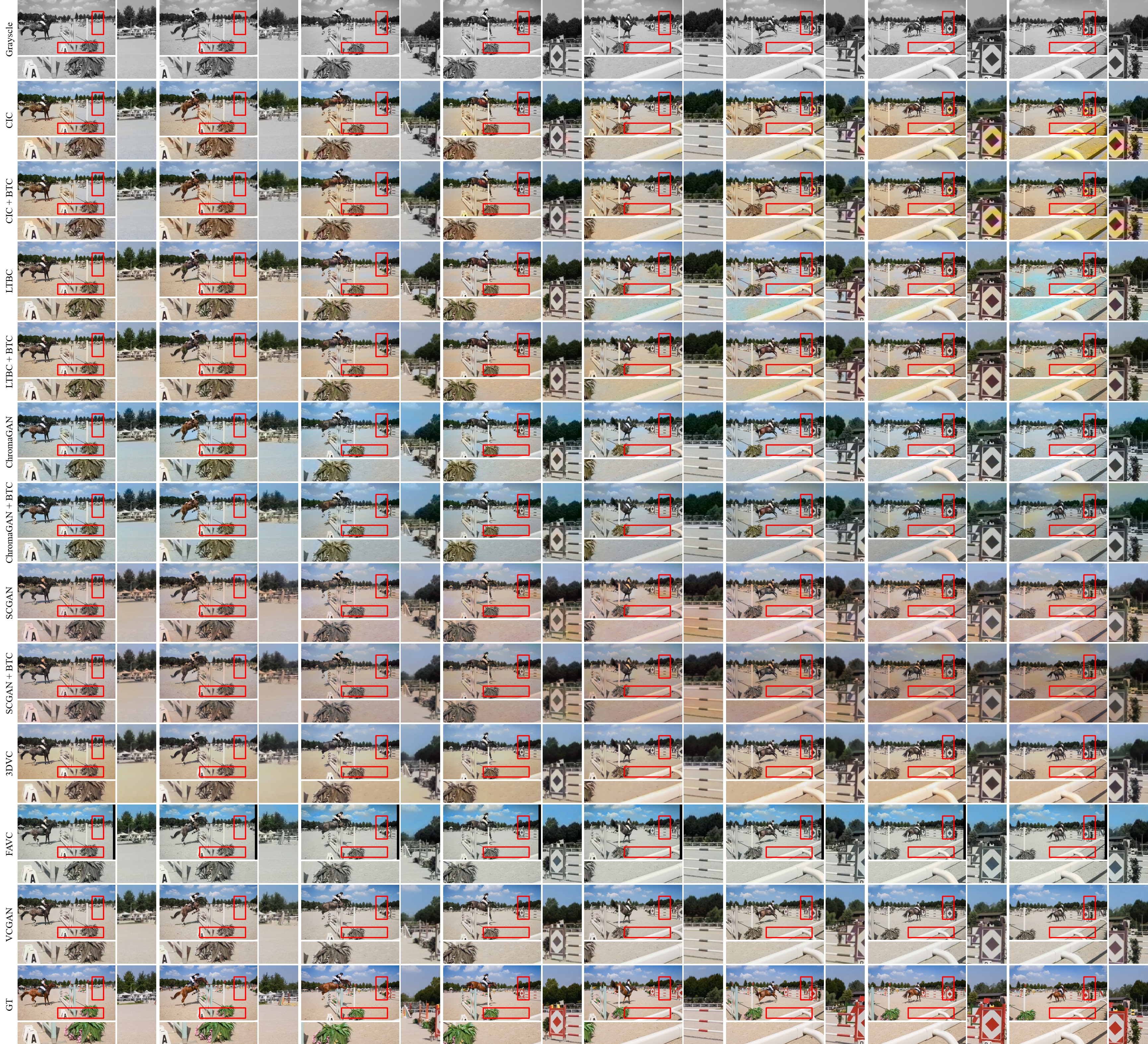

The representative image is shown as (please compare the significant regions marked by red rectangles):

The gifs are shown as:

| | | | |
|---|---|---|---|
| CIC | CIC+BTC | LTBC | LTBC+BTC |
| SCGAN | SCGAN+BTC | ChromaGAN | ChromaGAN+BTC |
| 3DVC | FAVC | VCGAN | |
| Grayscale | Ground Truth | | |

The representative image is shown as (please compare the significant regions marked by red rectangles):

The gifs are shown as:

| | | | |
|---|---|---|---|
| CIC | CIC+BTC | LTBC | LTBC+BTC |
| SCGAN | SCGAN+BTC | ChromaGAN | ChromaGAN+BTC |
| 3DVC | FAVC | VCGAN | |
| Grayscale | Ground Truth | | |

We also reproduce Figure 11 of the main paper here for a clearer view.

Please download the pre-trained ResNet50-Instance-Normalized model at this link if you want to train VCGAN. Its hyper-parameters follow the settings of the original paper, except for the normalization.

Please download the model at this link (it will be available after publication) if you want to test VCGAN. Then put the model in the models folder under the current path.
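
Before running the test scripts, it can help to confirm that the downloaded weights are where the scripts expect them. The sketch below is an illustration only: the helper `find_checkpoints` is hypothetical, and the `.pth` extension is an assumption about how the weight file is named (adjust it to match the actual file).

```python
from pathlib import Path


def find_checkpoints(folder="models", suffix=".pth"):
    """List weight files in `folder`; return an empty list if it is missing."""
    root = Path(folder)
    if not root.is_dir():
        return []
    # Keep only regular files with the expected (assumed) extension.
    return sorted(p for p in root.iterdir() if p.is_file() and p.suffix == suffix)


if __name__ == "__main__":
    found = find_checkpoints("models")
    if found:
        for path in found:
            print("found:", path)
    else:
        print("no checkpoint found in ./models; download the model first")
```

The same call with `folder="trained_models"` checks the folder used for the pre-trained ResNet50-Instance-Normalized model during training.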

Put the pre-trained ResNet50-Instance-Normalized model into the trained_models folder, then change the settings and train VCGAN in the first stage:

`cd train`

`python train.py` or `sh first.sh`

After the model is trained, run the following commands for the second stage:

`python train2.py` or `sh second.sh` (at 256p resolution)

`python train2.py` or `sh third.sh` (at 480p resolution)

For testing, please run the following (note that you need to change the paths to the models):

`python test_model_*.py`

SCGAN: Saliency Map-guided Colorization with Generative Adversarial Network (IEEE TCSVT 2020)

ChromaGAN: Adversarial Picture Colorization with Semantic Class Distribution (WACV 2020)

FAVC: Fully Automatic Video Colorization With Self-Regularization and Diversity (CVPR 2019)

3DVC: Automatic Video Colorization using 3D Conditional Generative Adversarial Networks (ISVC 2019)

BTC: Learning Blind Video Temporal Consistency (ECCV 2018)

LRAC: Learning Representations for Automatic Colorization (ECCV 2016)

CIC: Colorful Image Colorization (ECCV 2016)

LTBC: Let there be Color!: Joint End-to-end Learning of Global and Local Image Priors for Automatic Image Colorization with Simultaneous Classification (ACM TOG 2016)

Pix2Pix: Image-to-Image Translation with Conditional Adversarial Networks (CVPR 2017)

CycleGAN: Unpaired Image-to-Image Translation using Cycle-Consistent Adversarial Networks (ICCV 2017)