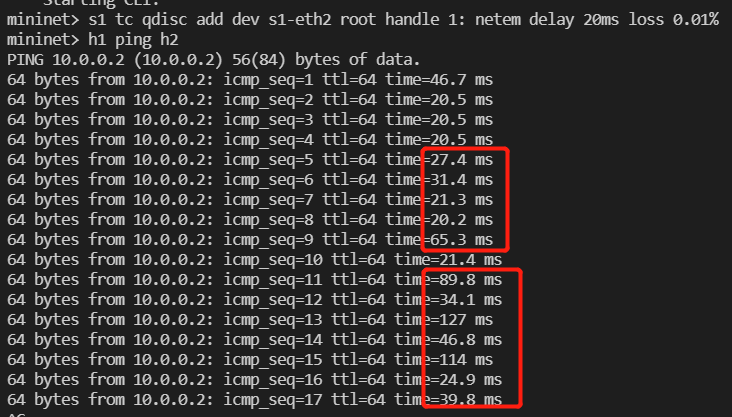

ping/iperf3 give unstable measurement results

ICYPOLE opened this issue

Expected/Desired Behavior:

Mininet should return stable ping RTT results after the network conditions are set with Linux tc netem.
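To make "stable" concrete, one option is to check every reported RTT against the configured value. The helper below is a hypothetical sketch, not part of Mininet; the 20 ms target and the 2 ms tolerance are assumptions chosen for illustration, and the sample values are made up.

```python
def rtts_are_stable(rtts_ms, expected_ms=20.0, tolerance_ms=2.0):
    """Return True if every RTT sample (in ms) is within tolerance_ms of expected_ms.

    Hypothetical helper: the default tolerance is an illustrative assumption,
    not a threshold defined by Mininet or tc netem.
    """
    if not rtts_ms:
        raise ValueError("need at least one RTT sample")
    return all(abs(rtt - expected_ms) <= tolerance_ms for rtt in rtts_ms)


# Illustrative samples, not measured data.
print(rtts_are_stable([20.3, 20.1, 20.6]))        # True
print(rtts_are_stable([20.3, 35.8, 20.1, 48.2]))  # False
```

The first reply may be slower than the rest because of ARP resolution and, with a reactive controller, flow setup, so it may be worth discarding before running a check like this.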

Actual Behavior:

ping reports unstable RTT values instead of a steady RTT of about 20 ms.

Detailed Steps to Reproduce the Behavior:

1. sudo mn # start the default topo h1---s1---h2
2. s1 tc qdisc add dev s1-eth2 root handle 1: netem delay 20ms loss 0.01% # this will emulate 20ms RTT
3. h1 ping h2

Additional Information:

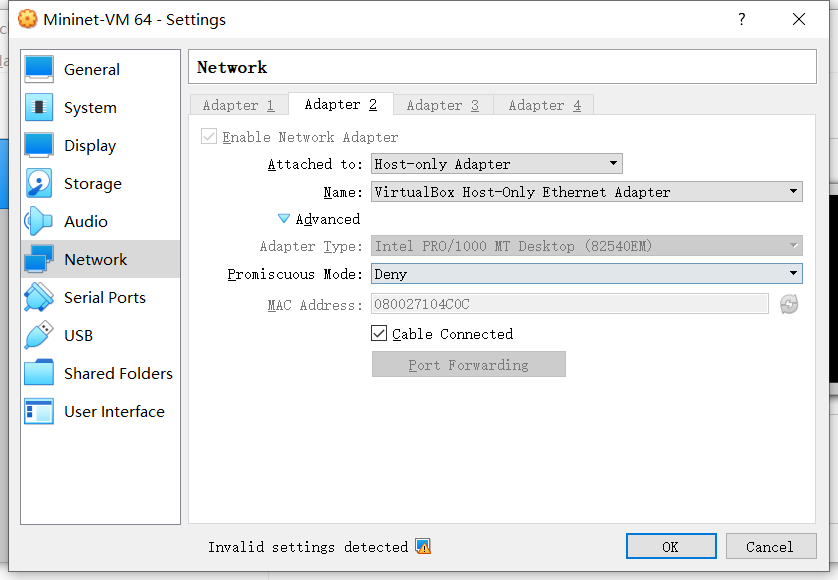

I am running Mininet 2.3.0 in VirtualBox on my Windows 10 laptop. I upgraded the Linux kernel to 4.15.

Only a host-only network adapter is configured in the VM.

Did you test it without using Mininet? Sometimes tc netem does not work properly.

FYI, I have the same issue with VirtualBox when the VM has several CPUs.

If you don't need multiple CPUs, note that the problem does not occur with a single CPU.

Also, running Mininet in a non-VirtualBox VM or without a VM works fine.


I installed Mininet on a virtual machine myself instead of using the VirtualBox image, and then it works fine. I wonder why there is such a problem.


As for testing without Mininet: it seems to be unrelated to tc netem.

I still see this even when I manually compile Mininet from source on a brand-new VM. I am willing to provide code that sets up the VM and compiles Mininet if that is useful.

Two questions:

- Is there a way to have a VM with multiple CPUs but assign only one of them to mn (since a single CPU seems to fix the latency jitter)?

- @ICYPOLE, which version in particular did you install manually? Is there a chance that this is a Mininet version-specific issue?

Note: I need a VM with multiple CPUs because otherwise the other processes on the VM are CPU-starved, so limiting the number of CPUs to 1 is not a valid option for me.
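On the first question: Linux lets a process be restricted to a subset of CPUs through its CPU affinity mask, which is the mechanism the `taskset` utility uses, and child processes inherit that mask. The sketch below pins the calling Python process to one CPU; the helper name is hypothetical. Whether running Mininet under such a mask removes the jitter is untested here, and since the jitter is later reproduced on loopback without Mininet at all, it may originate below the process level, so treat this as an experiment rather than a fix.

```python
import os


def pin_to_single_cpu(cpu=None):
    """Restrict the calling process to a single CPU (Linux only) and return it.

    If cpu is None, the lowest-numbered CPU currently allowed is used.
    Processes started afterwards (e.g. via subprocess) inherit this mask.
    """
    allowed = os.sched_getaffinity(0)
    if cpu is None:
        cpu = min(allowed)
    if cpu not in allowed:
        raise ValueError(f"CPU {cpu} is not in the allowed set {sorted(allowed)}")
    os.sched_setaffinity(0, {cpu})
    return cpu


if __name__ == "__main__":
    used = pin_to_single_cpu()
    print("now restricted to CPU", used, "->", os.sched_getaffinity(0))
    # Hypothetical next step (requires root): start Mininet under this mask,
    # e.g. subprocess.run(["mn"]); the shell equivalent is `taskset -c 0 mn`.
```

This only constrains where the pinned process and its children are scheduled; other processes in the VM keep all CPUs, and neither the guest kernel's packet processing nor the hypervisor's vCPU scheduling is directly controlled by it.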

This is almost certainly not a Mininet issue; I was also unable to reproduce it running on a 4-core VMware VM on a Core i7 laptop from 2016.

It is most likely a configuration or resource issue with your setup.

As @Keshvadi suggested, you should try it on a loopback interface outside of Mininet. Also make sure that your VM is quiescent, without a lot of background processes eating up CPU and memory resources, and that your host OS is as quiescent as possible as well. For the most accurate performance results, I recommend booting Linux natively rather than running it in a VM. You might also check whether the problem persists with other VMMs such as VMware.
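As a rough pre-measurement check of whether the VM is quiescent, the 1-minute load average can be compared with the number of CPUs. The helper and its 0.1-per-CPU threshold are assumptions for illustration; the load average is a coarse signal that ignores memory pressure and says nothing about load on the host OS.

```python
import os


def looks_quiescent(max_load_per_cpu=0.1):
    """Heuristic: True if the 1-minute load average per CPU is below a threshold.

    The default threshold is an illustrative assumption, not a recommended value.
    """
    one_minute, _, _ = os.getloadavg()
    cpus = os.cpu_count() or 1
    return one_minute / cpus < max_load_per_cpu


if __name__ == "__main__":
    print("1/5/15-minute load:", os.getloadavg())
    print("quiescent enough to measure?", looks_quiescent())
```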

[Update]

My (limited) testing suggests that, as @lantz noted, this is a product of the hypervisor. Below I share a file that works with VirtualBox and reproduces the behaviour described by the OP. However, if the same scenario is run natively on the host (without a VM), the issue is not present. I have also experimented with VMware Workstation: after sending 200 ICMP echo requests, I see jitter of at most 1.4 ms on the 20 ms RTT path the OP used.
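The comment does not say exactly how the 1.4 ms jitter figure was computed. One plausible reading is the spread between the smallest and largest RTT; the sketch below computes that alongside min/avg/max and a population standard deviation, which, as far as I know, is how iputils `ping` derives the `mdev` value in its summary line. The function name is hypothetical and the sample values are illustrative, not the commenter's data.

```python
import math
from statistics import mean


def rtt_summary(rtts_ms):
    """Summarize RTT samples (in ms): min, avg, max, mdev, and max-min spread.

    mdev is the population standard deviation, sqrt(mean(x^2) - mean(x)^2).
    """
    if not rtts_ms:
        raise ValueError("need at least one RTT sample")
    avg = mean(rtts_ms)
    mean_sq = mean(x * x for x in rtts_ms)
    mdev = math.sqrt(max(mean_sq - avg * avg, 0.0))  # clamp tiny negative rounding error
    lo, hi = min(rtts_ms), max(rtts_ms)
    return {"min": lo, "avg": avg, "max": hi, "mdev": mdev, "spread": hi - lo}


summary = rtt_summary([20.1, 20.5, 21.4, 20.0])
print({k: round(v, 3) for k, v in summary.items()})
```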

I suggest closing this as "completed" / resolved.

[/Update]

=================================================================================

After experimenting with this for a while, I can confirm that it is not a Mininet issue but rather a hypervisor or tc issue; I am not sure which.

I am sharing my Vagrantfile, which creates a VM and compiles Mininet (with Python 2) on it:

https://gist.github.com/janev94/1d91728cd0fb19b9edd1bc76c227c658

You will need Ruby, Vagrant, and a hypervisor (this file is set up to work with VirtualBox, but technically you can use any).

Here are the steps I perform to recreate the issue.

Once the file is downloaded, I run the following from within its directory:

1. `vagrant up` (to create the VM and set up Mininet)
2. `vagrant ssh` (to log in to the VM)
3. `sudo mn` (to start the Mininet environment)
4. `s1 tc qdisc add dev s1-eth2 root handle 1: netem delay 20ms` (from within the mn CLI)
5. `h1 ping h2` (to observe the RTT values)

I can confirm that if the VM is configured with more than 1 CPU, the RTT values are unstable. To compare, I shut down the VM and restart it with only 1 CPU assigned:

1. `vagrant halt` (to stop the VM)
2. edit the Vagrantfile line `v.customize ["modifyvm", :id, "--cpus", "4"]` to `v.customize ["modifyvm", :id, "--cpus", "1"]`
3. `vagrant up` (to start the VM again)
4. repeat steps 2-5 above

Now, with a single core, the RTT values are stable. So the issue is reproducible with VirtualBox as the hypervisor.
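To compare the multi-CPU and single-CPU runs with numbers rather than by eye, the RTTs can be extracted from the `ping` output. This sketch assumes the usual Linux (iputils) reply format, `... icmp_seq=N ttl=64 time=20.3 ms`; the function name is hypothetical and the sample text is illustrative, not output from this setup.

```python
import re

# Matches the per-reply RTT field printed by Linux ping, e.g. "time=20.3 ms".
_RTT_RE = re.compile(r"time=(\d+(?:\.\d+)?)\s*ms")


def parse_ping_rtts(output):
    """Return the RTTs (in ms) found in ping output, in the order they appear."""
    return [float(m.group(1)) for m in _RTT_RE.finditer(output)]


sample = """PING 10.0.0.2 (10.0.0.2) 56(84) bytes of data.
64 bytes from 10.0.0.2: icmp_seq=1 ttl=64 time=20.4 ms
64 bytes from 10.0.0.2: icmp_seq=2 ttl=64 time=20.1 ms
64 bytes from 10.0.0.2: icmp_seq=3 ttl=64 time=31.7 ms
"""
print(parse_ping_rtts(sample))
```

The regex requires `time=`, so the final statistics line (`... packet loss, time 2003ms`) is not mistaken for an RTT sample.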

After more digging, I observed the same issue when operating on the loopback interface, without starting Mininet at all!

To do this:

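`tc qdisc add dev lo root handle 1:0 netem delay 10msec`, then ping the loopback address (e.g. `ping 127.0.0.1`) to observe the RTT values. The delay value here is the one-way delay (OWD), not the RTT, so a delay of 10 ms yields a path with a 20 ms RTT.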
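The OWD/RTT arithmetic follows from netem being attached as an egress (root) qdisc: a packet is delayed once for each netem-shaped interface it is sent out of. On `lo`, both the echo request and the echo reply leave through the same interface, so each is delayed once; in the Mininet setup, only traffic leaving s1 on s1-eth2 (the request toward h2) is delayed. A minimal sketch of that bookkeeping, with a hypothetical function name and ignoring the base RTT of the path and any jitter:

```python
def expected_rtt_ms(request_delays_ms, reply_delays_ms):
    """Expected RTT = sum of netem delays applied to the request and to the reply.

    Each list holds the netem delay of every shaped interface the packet is sent
    out of on its way; propagation and processing time are ignored.
    """
    return sum(request_delays_ms) + sum(reply_delays_ms)


# Loopback: `netem delay 10msec` on lo delays both the request and the reply.
print(expected_rtt_ms([10], [10]))  # 20
# Mininet: `netem delay 20ms` on s1-eth2 delays only the h1 -> h2 request.
print(expected_rtt_ms([20], []))    # 20
```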

I can confirm that with multiple CPUs assigned, the issue persists even outside of Mininet. Repeat the procedure above to change the number of CPUs. Use `tc qdisc del dev lo root` to remove the netem qdisc from the loopback interface.

@ICYPOLE Since this doesn't appear to be a Mininet issue, would you like to close it?

Closing this for now. If you have a reproducible test case using the official VM image, we can reopen.