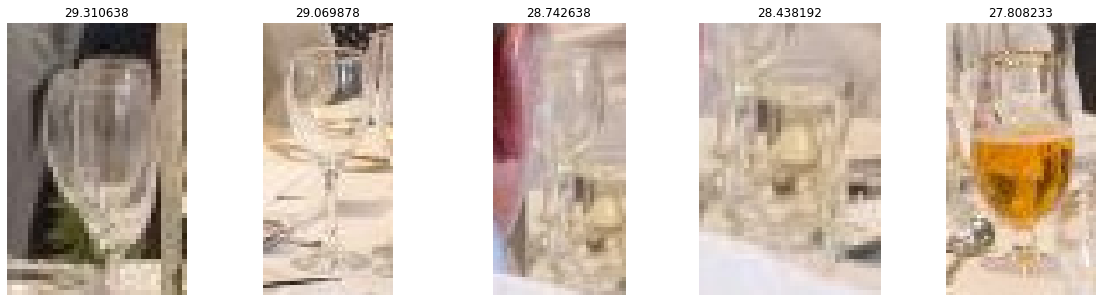

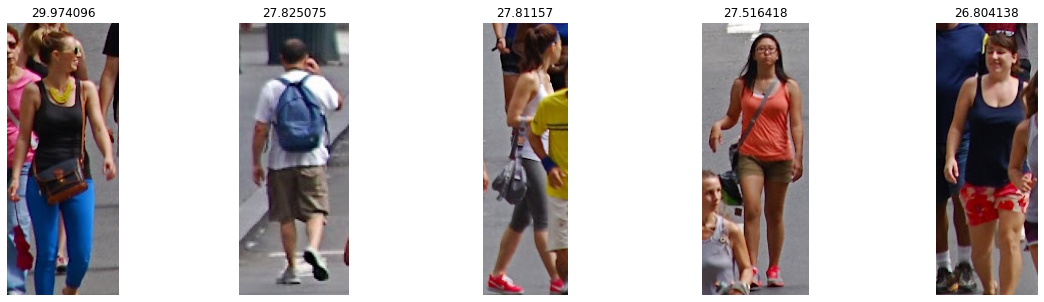

Search between the objects in an image, and cut the region of the detected object.

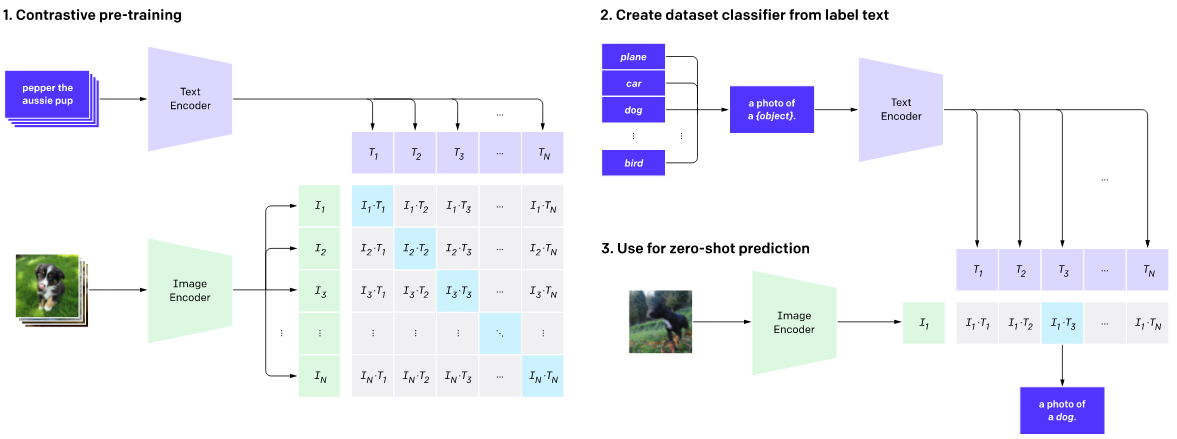

CLIP model was proposed by the OpenAI company, to understand the semantic similarity between images and texts.

It's used for preform zero-shot learning tasks, to find objects in an image based on an input query.

CLIP pre-trains an image encoder and a text encoder to predict which images were paired with which texts in our dataset. We then use this behavior to turn CLIP into a zero-shot classifier. We convert all of a dataset’s classes into captions such as “a photo of a dog” and predict the class of the caption CLIP estimates best pairs with a given image.

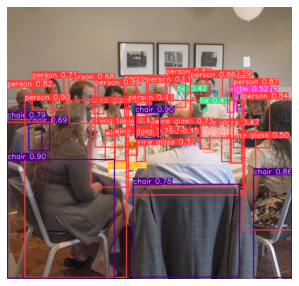

Also, YOLOv5 was used in the first step of the method, to detect the location of the objects in an image.

Demo is ready!

(Sometimes, the Streamlit website may crash! because models are heavy for it.)

Run this notebook on Google Colab and test on your images! (It works both on CPU and GPU)

Obviously object detector model only can find object classes learned from the COCO dataset. So if your results are not related to your query, maybe the object you want is not in the COCO classes.

Sorted from left based on similarity.

Query: wine glass

Query: woman with blue pants