An implementation of parallel wavenet based on nsynth.

To keep the code and configuration as simple as possible, most of the extensible properties are left unextended and set to their default values.

The output of librosa downsampling may fall outside [-1, 1), so downsample all waves with tool/sox_downsample.py first.
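A quick way to see whether a resampled wave stays inside [-1, 1) is to look at its minimum and maximum. The snippet below is only a sketch with made-up sample arrays; `check_wave_range` is a hypothetical helper, not a function from this repo.

```python
import numpy as np

def check_wave_range(wave, low=-1.0, high=1.0):
    """Return (ok, min, max); ok is True when every sample lies in [low, high)."""
    wave = np.asarray(wave, dtype=np.float64)
    w_min, w_max = float(wave.min()), float(wave.max())
    return (w_min >= low and w_max < high), w_min, w_max

# Hypothetical samples: one well-behaved wave, one that overshoots after resampling.
in_range = np.array([-1.0, -0.25, 0.0, 0.999])
overshoot = np.array([-1.02, 0.5, 1.0])

print(check_wave_range(in_range))
print(check_wave_range(overshoot))
```

Running a check like this over the downsampled files is a cheap sanity test before training.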

- [OK] wavenet

- [OK] fastgen for wavenet

- [OK] parallel wavenet

- [OK] gen for parallel wavenet

Using mu law seems to make training easier, so experiment with it first.
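For reference, mu-law companding compresses the amplitude logarithmically before quantizing it, which gives small amplitudes more resolution. The sketch below uses the standard companding formula with mu = 255 and maps samples to integer classes 0..255; it is an illustration in NumPy and may differ in detail (for example in the integer range) from the encoding used in this repo.

```python
import numpy as np

def mu_law_encode(x, mu=255):
    """Map float samples in [-1, 1] to integer classes in [0, mu]."""
    x = np.clip(np.asarray(x, dtype=np.float64), -1.0, 1.0)
    # Logarithmic compression, result still in [-1, 1].
    compressed = np.sign(x) * np.log1p(mu * np.abs(x)) / np.log1p(mu)
    # Shift to [0, mu] and round to the nearest integer class.
    return np.floor((compressed + 1.0) / 2.0 * mu + 0.5).astype(np.int64)

def mu_law_decode(q, mu=255):
    """Invert mu_law_encode up to quantization error."""
    compressed = 2.0 * np.asarray(q, dtype=np.float64) / mu - 1.0
    return np.sign(compressed) * np.expm1(np.abs(compressed) * np.log1p(mu)) / mu

wave = np.linspace(-1.0, 1.0, 11)
codes = mu_law_encode(wave)
restored = mu_law_decode(codes)
print(codes)
print(np.max(np.abs(restored - wave)))
```

With cross entropy (ce) the network predicts these integer classes directly, which is one plausible reason training is easier with mu law.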

The following examples are functional tests rather than attempts to produce good waves. The networks may not be trained enough.

- tune wavenet

  - [OK] use_mu_law + ce LJ001-0001 LJ001-0002

  - [OK] use_mu_law + mol LJ001-0001 LJ001-0002

  - [OK] no_mu_law + mol LJ001-0001 LJ001-0002

- tune parallel wavenet

  - use_mu_law

  - no_mu_law

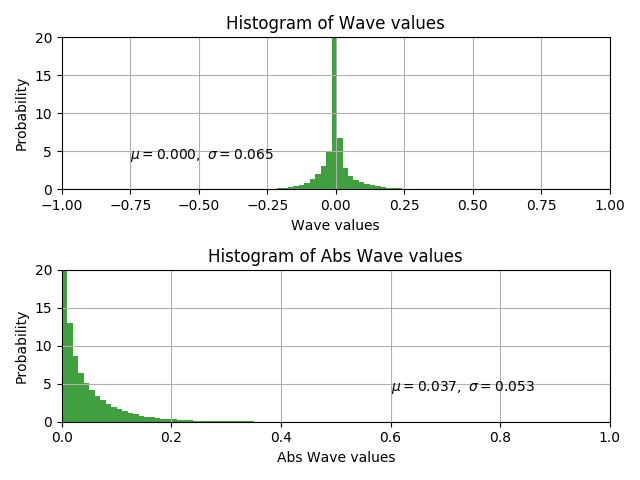

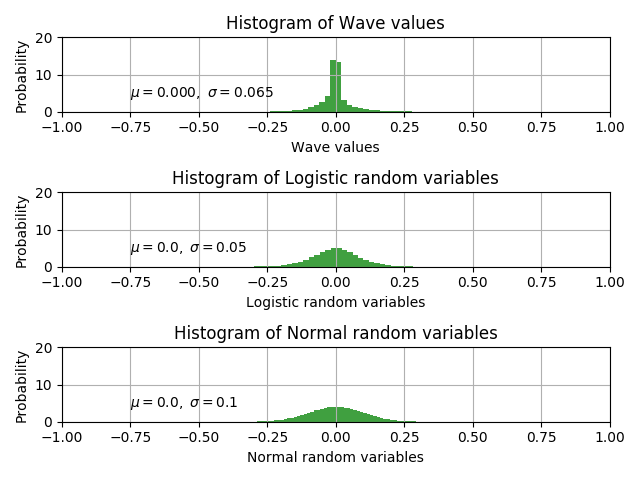

Proper initial mean_tot and scale_tot values have a positive impact on model convergence and numerical stability.

According to the LJSpeech data distribution, proper initial values for mean_tot and scale_tot should be 0.0 and 0.05.

I modified the initializer to achieve it.

The figure is plotted by this script.

A decreasing loss does not indicate that everything is going well.

I found a straightforward method to determine whether a parallel wavenet is running OK.

Compare the values of new_x, new_x_std, new_x_abs, new_x_abs_std listed in tensorboard to the statistics of real data.

If they do not differ by many orders of magnitude, the training process is moving in the right direction.
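The comparison described above can be sketched offline with NumPy. This assumes that new_x, new_x_std, new_x_abs and new_x_abs_std correspond to the mean, standard deviation, mean absolute value and standard deviation of the absolute value of the generated samples; that mapping is a guess from the names. `wave_stats` and `max_order_gap` are hypothetical helpers, and the arrays are synthetic stand-ins for real and generated audio.

```python
import numpy as np

def wave_stats(x):
    """Summary statistics assumed to mirror the tensorboard scalars."""
    x = np.asarray(x, dtype=np.float64)
    return {
        "mean": x.mean(),
        "std": x.std(),
        "abs_mean": np.abs(x).mean(),
        "abs_std": np.abs(x).std(),
    }

def max_order_gap(real, generated, keys=("std", "abs_mean", "abs_std"), eps=1e-12):
    """Largest |log10 ratio| between generated and real statistics.

    The mean is skipped because it is close to zero for audio, which
    makes its ratio unstable.
    """
    return max(
        abs(np.log10((abs(generated[k]) + eps) / (abs(real[k]) + eps)))
        for k in keys
    )

rng = np.random.default_rng(0)
real = wave_stats(rng.normal(0.0, 0.05, 16000))      # stand-in for real data
healthy = wave_stats(rng.normal(0.0, 0.08, 16000))   # same order of magnitude
diverged = wave_stats(rng.normal(0.0, 50.0, 16000))  # about three orders too large

print(max_order_gap(real, healthy))
print(max_order_gap(real, diverged))
```

A gap well below 1 means the statistics agree to within one order of magnitude; a gap of several suggests the model output has drifted far from the data distribution.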

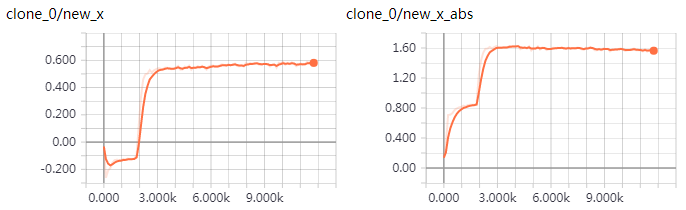

For example, the first tensorboard figure comes from a parallel wavenet trained without power loss.

The values of new_x and new_x_abs are far too large compared to real data, so I cannot get meaningful waves from this model.

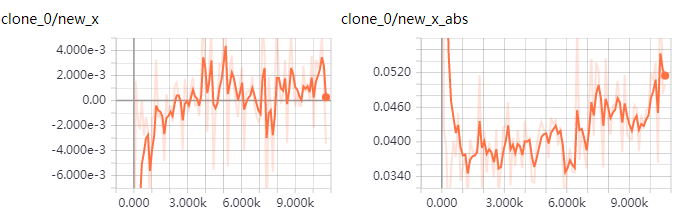

The second figure comes from a model trained with power loss. Its values are much closer to real data.

It generates very noisy but somewhat meaningful waves.
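One common formulation of a power loss is the mean squared difference between the STFT power spectra of the generated and the real wave. The NumPy sketch below illustrates that idea only; the frame length, hop size and window are arbitrary assumptions, and the loss actually used in this repo may be defined differently.

```python
import numpy as np

def stft_power(x, frame_len=256, hop=64):
    """Power spectrogram |STFT(x)|^2 using a Hann window."""
    x = np.asarray(x, dtype=np.float64)
    n_frames = 1 + (len(x) - frame_len) // hop
    window = np.hanning(frame_len)
    frames = np.stack(
        [x[i * hop: i * hop + frame_len] * window for i in range(n_frames)]
    )
    return np.abs(np.fft.rfft(frames, axis=1)) ** 2

def power_loss(generated, real, frame_len=256, hop=64):
    """Mean squared difference between the two power spectrograms."""
    diff = stft_power(generated, frame_len, hop) - stft_power(real, frame_len, hop)
    return float(np.mean(diff ** 2))

rng = np.random.default_rng(0)
real_wave = rng.normal(0.0, 0.05, 4096)   # synthetic stand-in for real audio
close_wave = real_wave * 1.1              # slightly too loud
far_wave = real_wave * 100.0              # amplitude blown up

print(power_loss(real_wave, real_wave))
print(power_loss(close_wave, real_wave))
print(power_loss(far_wave, real_wave))
```

Because the loss grows quickly with amplitude mismatch, a term like this penalizes outputs whose overall energy is far from that of real data, which is consistent with the tensorboard statistics above.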