Code for our ICLR 2024 paper Synapse: Trajectory-as-Exemplar Prompting with Memory for Computer Control

The agent trajectories and latest updates can be found on our website.

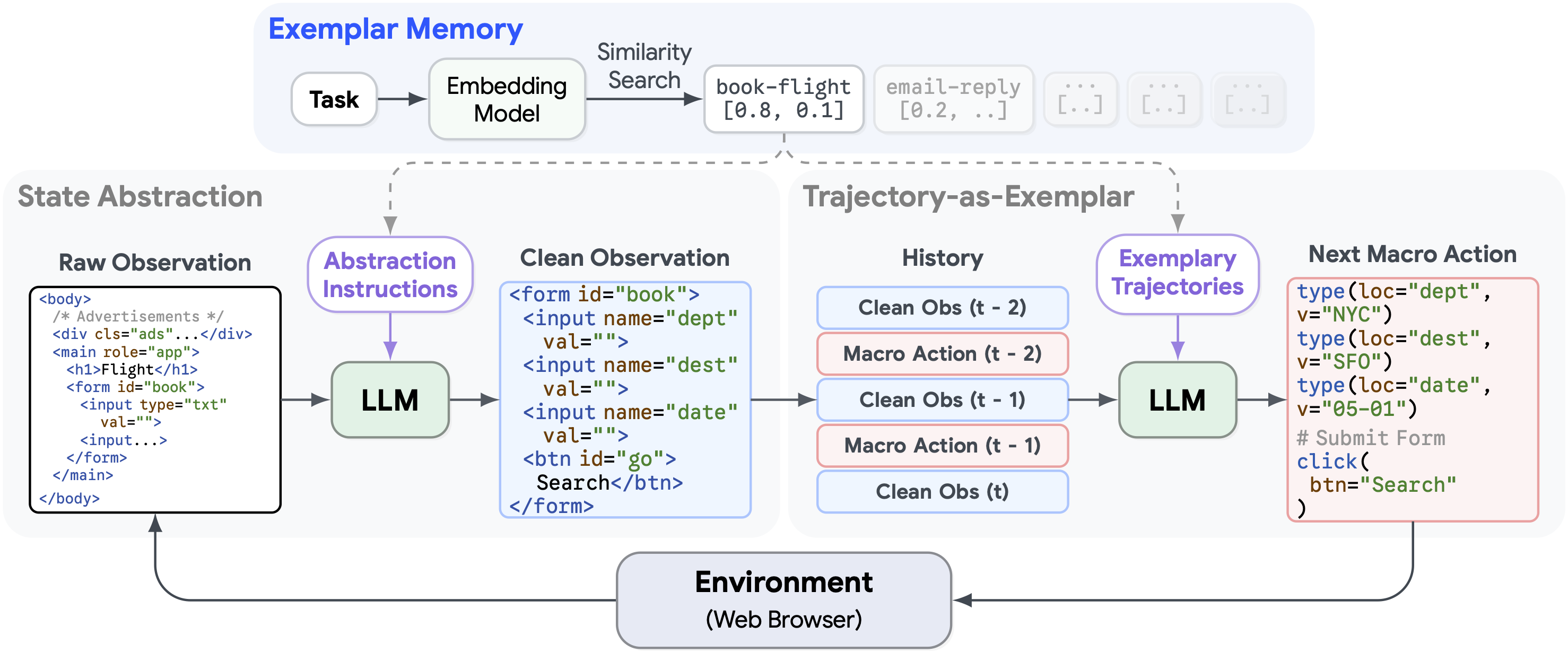

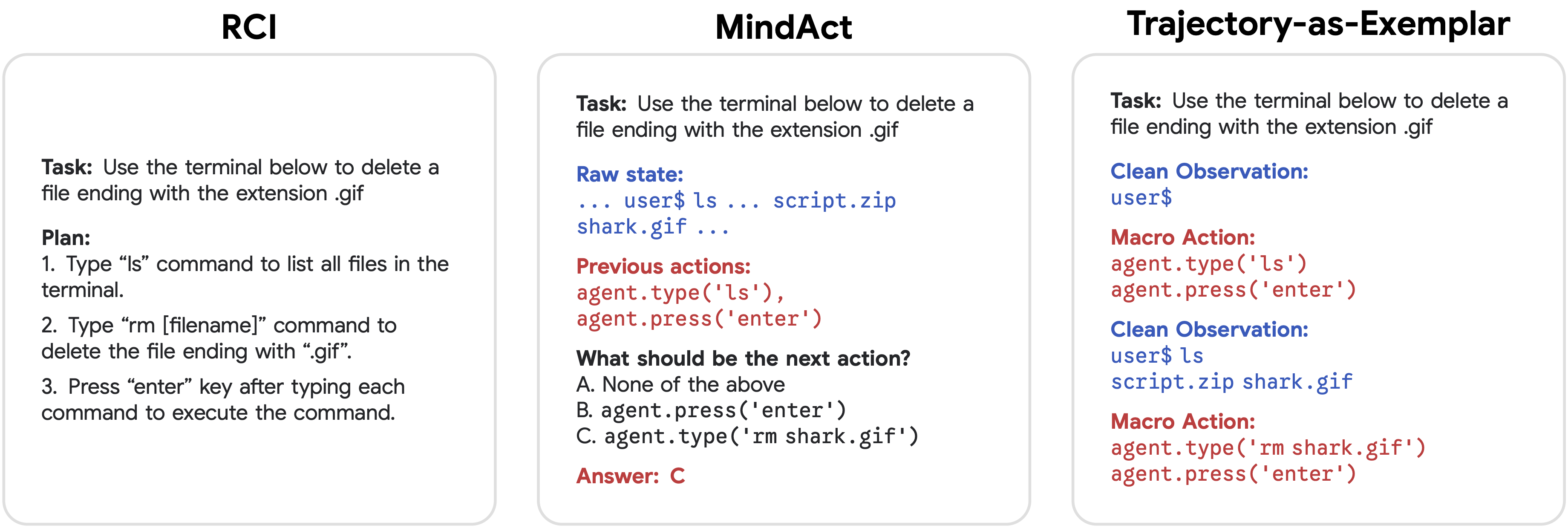

We introduce Synapse, an agent that incorporates trajectory-as-exemplar prompting with the associated memory for solving computer control tasks.

conda create -n synapse python=3.10 -y

conda activate synapse

pip install -r requirements.txt

Install Faiss, then download the Mind2Web dataset and element rankings. The directory structure should look like this:

Mind2Web

├── data

│   ├── scores_all_data.pkl

│   ├── train

│   │   └── train_*.json

│   ├── test_task

│   │   └── test_task_*.json

│   ├── test_website

│   │   └── test_website_*.json

│   └── test_domain

│       └── test_domain_*.json

└── ...
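Before building the memory, it can help to confirm that the files are where the scripts expect them. The Bash function below is a minimal sketch and is not part of the repository: `check_mind2web_layout` is a hypothetical name, and it only checks the layout shown above (`scores_all_data.pkl` plus at least one `<split>_*.json` file in each split folder). Pass it the same path you later give to `--data_dir` or `--mind2web_data_dir`.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not part of the Synapse repo): check the Mind2Web
# data layout described above.
# Usage: check_mind2web_layout path/to/Mind2Web/data
check_mind2web_layout() {
  local data_dir="$1"
  local missing=0
  local split

  # The element-ranking scores file sits directly under data/.
  if [[ ! -f "$data_dir/scores_all_data.pkl" ]]; then
    echo "missing: $data_dir/scores_all_data.pkl"
    missing=1
  fi

  # Each split folder must contain at least one <split>_*.json file.
  # compgen -G expands the glob and fails if nothing matches.
  for split in train test_task test_website test_domain; do
    if ! compgen -G "$data_dir/$split/${split}_*.json" > /dev/null; then
      echo "missing: $data_dir/$split/${split}_*.json"
      missing=1
    fi
  done

  # Non-zero exit status if anything was missing.
  return "$missing"
}
```

Running `check_mind2web_layout path/to/Mind2Web/data` prints one `missing:` line per absent item and returns a non-zero status if anything is missing, so it can gate the `build_memory.py` step in a script.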

Use build_memory.py to set up the exemplar memory:

python build_memory.py --env miniwob

python build_memory.py --env mind2web --mind2web_data_dir path/to/Mind2Web/data

The memory folder should contain two files: index.faiss and index.pkl.
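A quick way to confirm that `build_memory.py` finished is to check for both files. The Bash sketch below is not part of the repository: `check_memory_dir` is a hypothetical helper, and because the memory folder's location is not stated here, you pass its path explicitly. A file counts as missing if it is absent or empty (the `-s` test).

```shell
#!/usr/bin/env bash
# Hypothetical helper (not part of the Synapse repo): check that a memory
# folder contains the two files build_memory.py is expected to write.
# Usage: check_memory_dir <memory_folder>
check_memory_dir() {
  local mem_dir="$1"
  local f status=0
  for f in index.faiss index.pkl; do
    # -s is true only if the file exists and is non-empty.
    if [[ ! -s "$mem_dir/$f" ]]; then
      echo "missing or empty: $mem_dir/$f"
      status=1
    fi
  done
  return "$status"
}
```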

Run MiniWoB++ experiments:

python run_miniwob.py --env_name <subdomain> --seed 0 --num_episodes 50

python run_miniwob.py --env_name <subdomain> --no_memory --no_filter --seed 0 --num_episodes 50

Run Mind2Web experiments:

python run_mind2web.py --data_dir path/to/Mind2Web/data --benchmark {test_task/test_website/test_domain} --no_memory --no_trajectory

python run_mind2web.py --data_dir path/to/Mind2Web/data --benchmark {test_task/test_website/test_domain} --no_memory

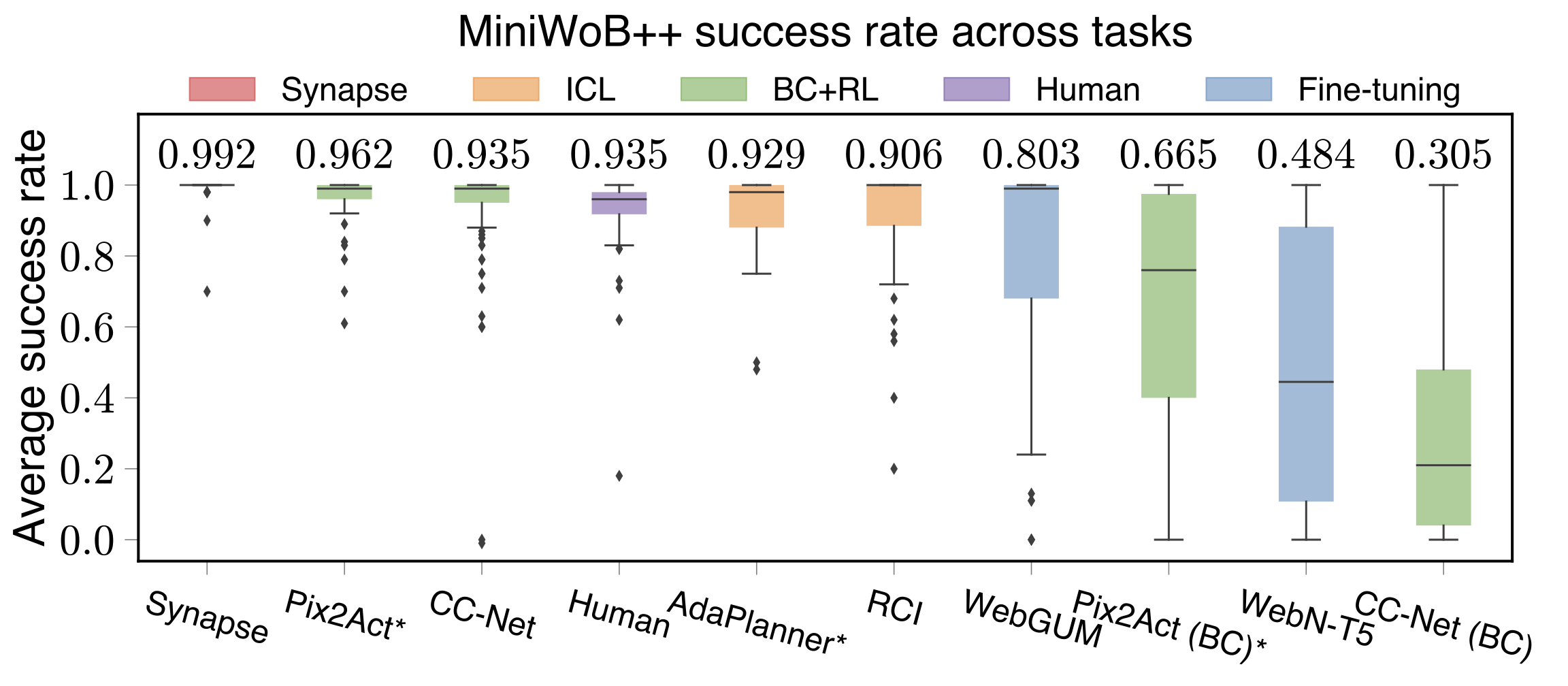

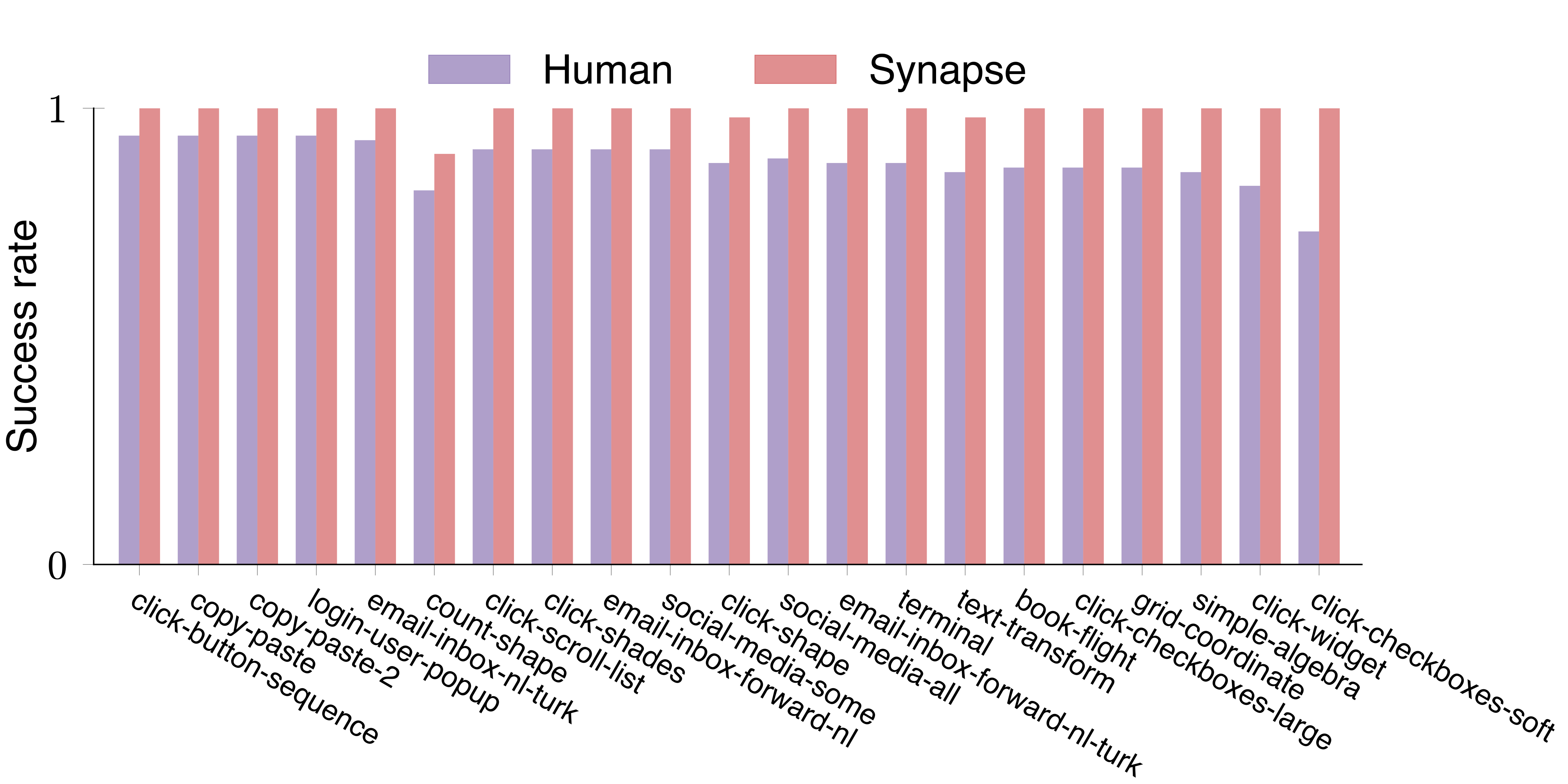

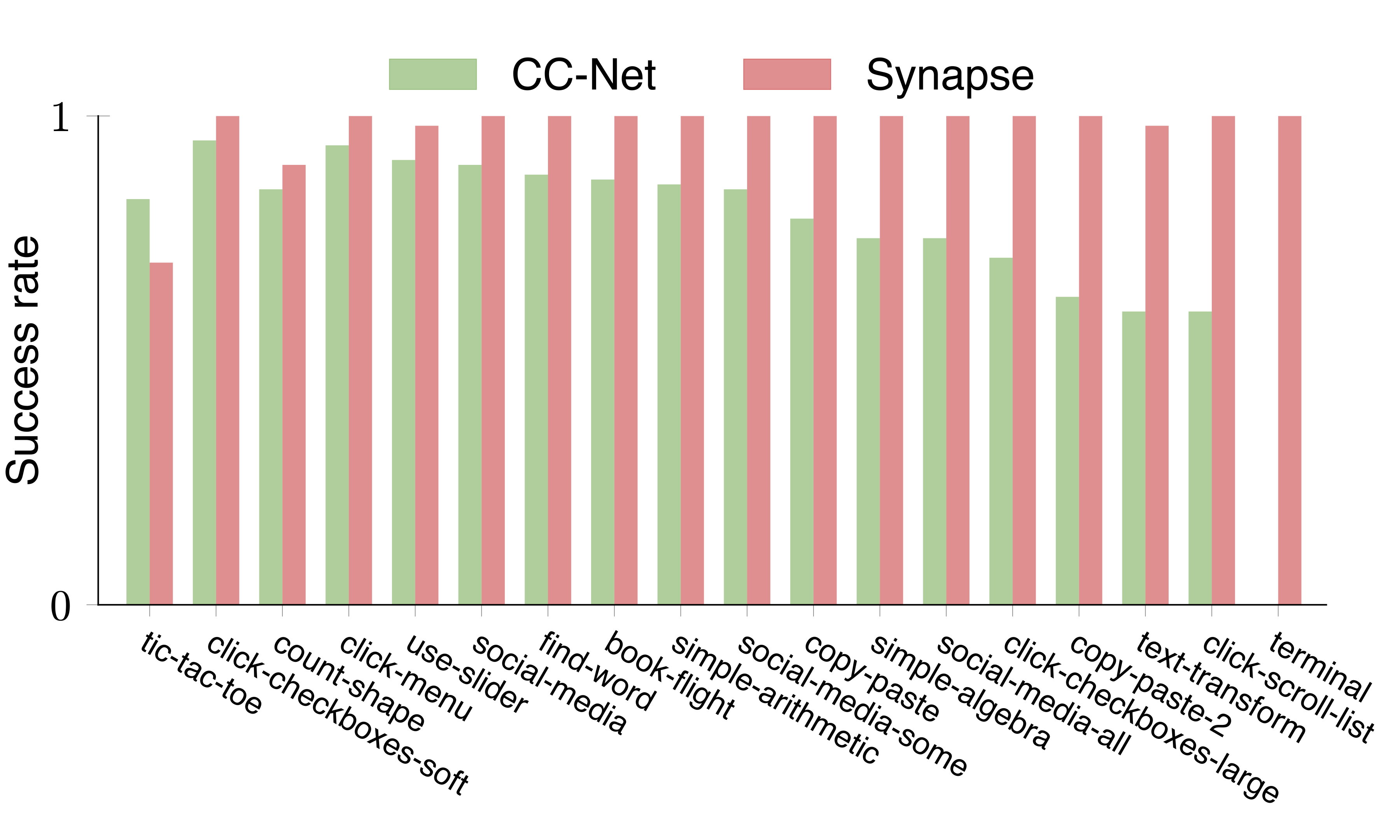

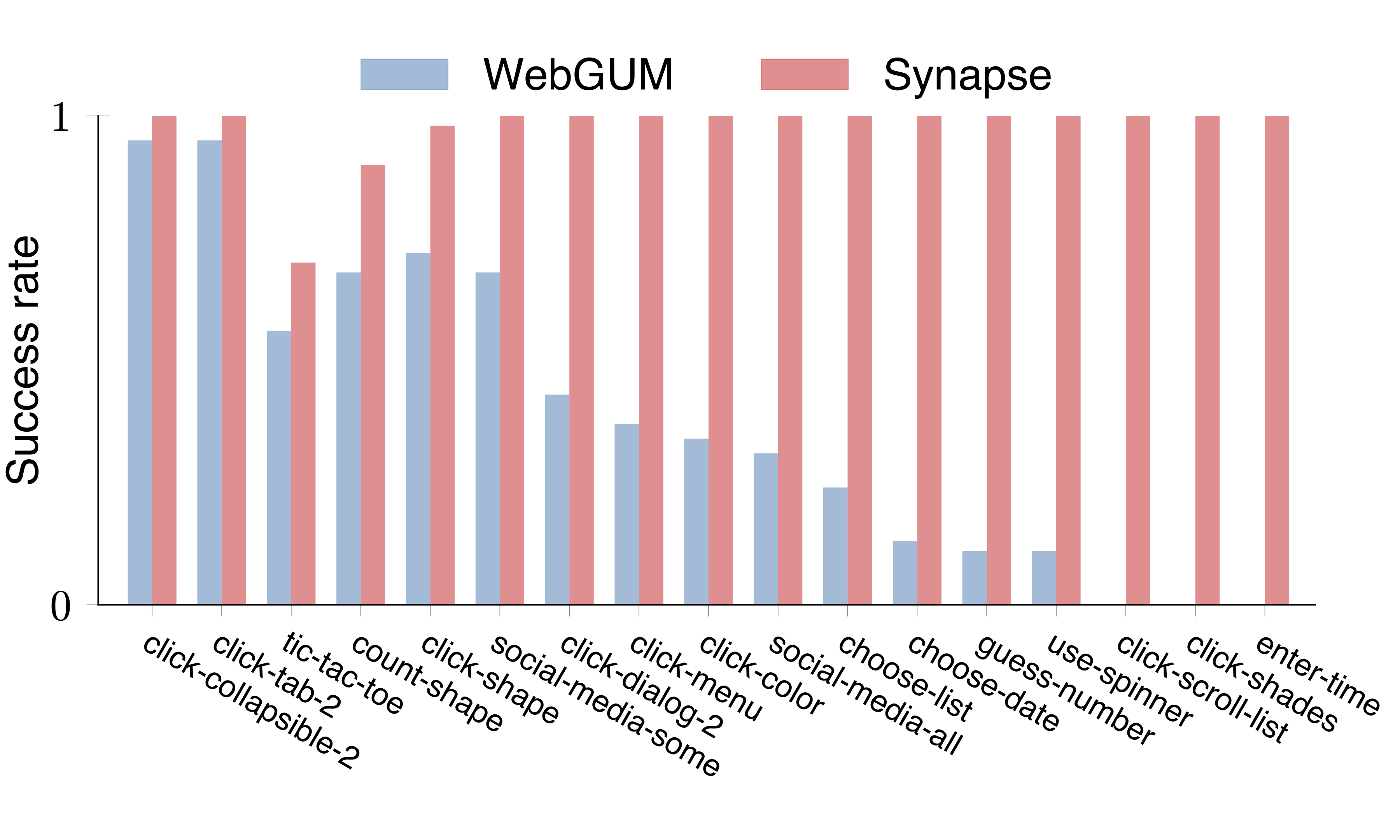

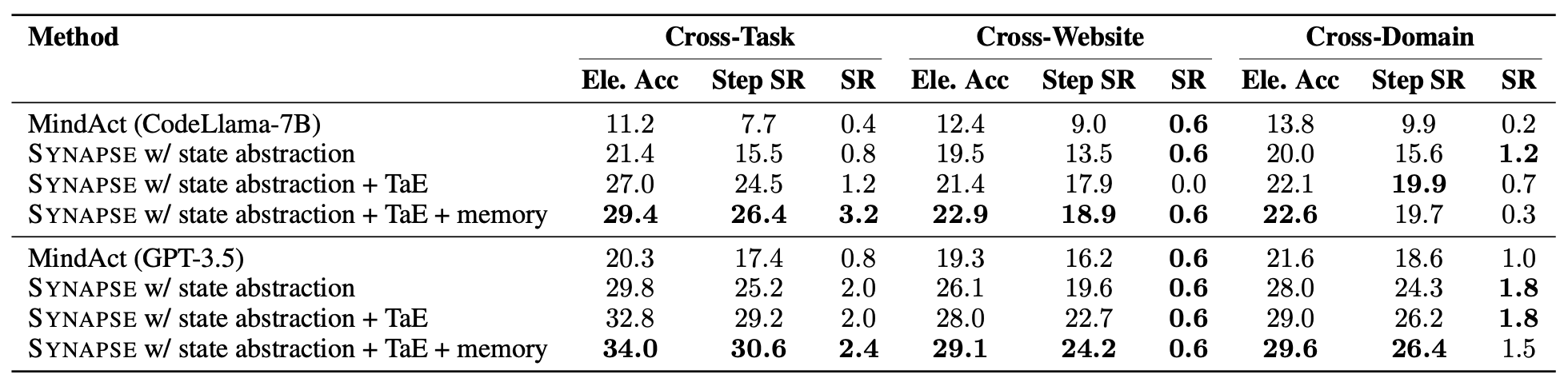

python run_mind2web.py --data_dir path/to/Mind2Web/data --benchmark {test_task/test_website/test_domain}Synapse outperforms the state-of-the-art methods on both MiniWoB++ and Mind2Web benchmarks.
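To rerun these comparisons over several MiniWoB++ subdomains and all three Mind2Web test splits, the run commands have to be repeated, since each takes one `<subdomain>` or one split per invocation. The Bash wrapper below is a hypothetical convenience, not part of the repository: `sweep_synapse`, `run_or_print`, and the `DRY_RUN` variable are illustrative names. It runs only the full Synapse configuration (no ablation flags) for every subdomain you pass and for `test_task`, `test_website`, and `test_domain`; with `DRY_RUN=1` it prints the commands instead of running them.

```shell
#!/usr/bin/env bash
# Hypothetical sweep wrapper (not part of the Synapse repo) around the
# run commands above. Only the full Synapse configuration is run; add
# ablation flags such as --no_memory by hand if you need them.

# Run a command, or just print it when DRY_RUN=1.
run_or_print() {
  if [[ "${DRY_RUN:-0}" == "1" ]]; then
    echo "$*"
  else
    "$@"
  fi
}

# Usage: sweep_synapse <mind2web_data_dir> [miniwob_subdomain ...]
sweep_synapse() {
  local data_dir="$1"
  shift
  local env split

  # MiniWoB++: one run per subdomain given on the command line.
  for env in "$@"; do
    run_or_print python run_miniwob.py --env_name "$env" --seed 0 --num_episodes 50
  done

  # Mind2Web: one run per test split.
  for split in test_task test_website test_domain; do
    run_or_print python run_mind2web.py --data_dir "$data_dir" --benchmark "$split"
  done
}
```

For example, `DRY_RUN=1 sweep_synapse path/to/Mind2Web/data click-button enter-text` previews five commands; the subdomain names here are placeholders, so substitute the ones you want to evaluate. Dropping `DRY_RUN=1` executes the runs one after another.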

The trajectories of all experiments can be downloaded from here.

Synapse also improves fine-tuning performance on Mind2Web.

Configure the Python environment:

pip install -r requirements.txt

pip install git+https://github.com/huggingface/peft.git@e536616888d51b453ed354a6f1e243fecb02ea08

pip install git+https://github.com/huggingface/transformers.git@c030fc891395d11249046e36b9e0219685b33399

Build Mind2Web datasets for Synapse and memory:

python build_dataset.py --data_dir path/to/Mind2Web/data --no_trajectory --top_k_elements 20 --benchmark train

python build_dataset.py --data_dir path/to/Mind2Web/data --top_k_elements 20 --benchmark train

python build_memory.py --env mind2web --mind2web_data_dir path/to/Mind2Web/data --mind2web_top_k_elements 3

Fine-tune:

python finetune_mind2web.py --data_dir path/to/Mind2Web/data --base_model codellama/CodeLlama-7b-Instruct-hf --cache_dir <MODEL_PATH> --lora_dir <CHECKPOINT_PATH> --no_trajectory --top_k_elements 20

python finetune_mind2web.py --data_dir path/to/Mind2Web/data --base_model codellama/CodeLlama-7b-Instruct-hf --cache_dir <MODEL_PATH> --lora_dir <CHECKPOINT_PATH> --top_k_elements 20

Evaluate:

python evaluate_mind2web.py --data_dir path/to/Mind2Web/data --no_memory --no_trajectory --benchmark test_domain --base_model codellama/CodeLlama-7b-Instruct-hf --cache_dir <MODEL_PATH> --lora_dir <CHECKPOINT_PATH> --top_k_elements 20

python evaluate_mind2web.py --data_dir path/to/Mind2Web/data --no_memory --benchmark test_domain --base_model codellama/CodeLlama-7b-Instruct-hf --cache_dir <MODEL_PATH> --lora_dir <CHECKPOINT_PATH> --top_k_elements 20

If you find our work helpful, please use the following citation:

@inproceedings{zheng2023synapse,

title={Synapse: Trajectory-as-Exemplar Prompting with Memory for Computer Control},

author={Zheng, Longtao and Wang, Rundong and Wang, Xinrun and An, Bo},

booktitle={The Twelfth International Conference on Learning Representations},

year={2024}

}