This repository contains the Python implementation of our submitted paper, "Deep Reinforcement Learning Enables Joint Trajectory and Communication in Internet of Robotic Things".

[Installation] [Usage]

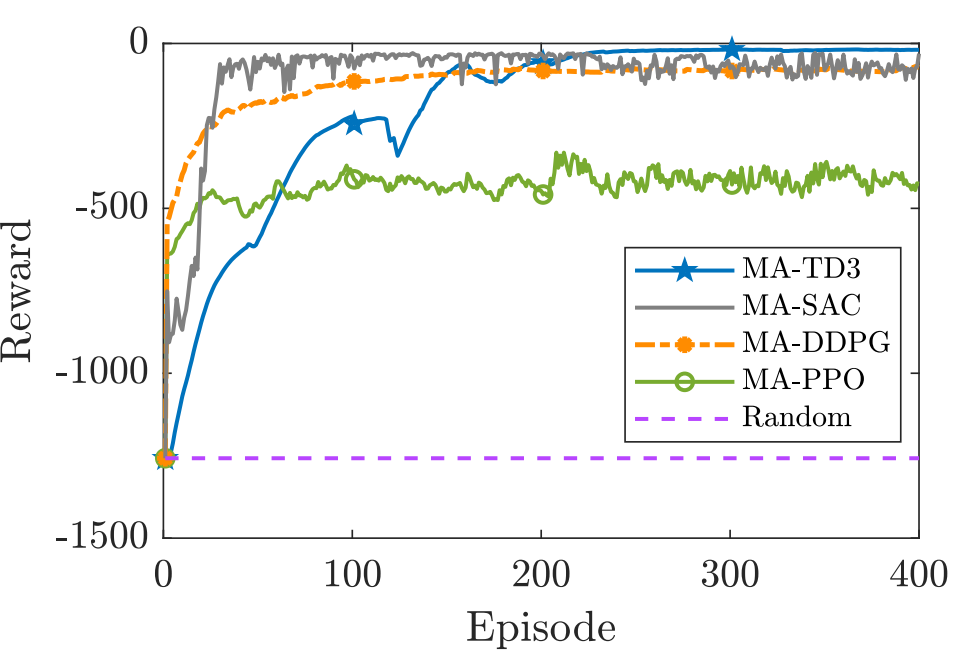

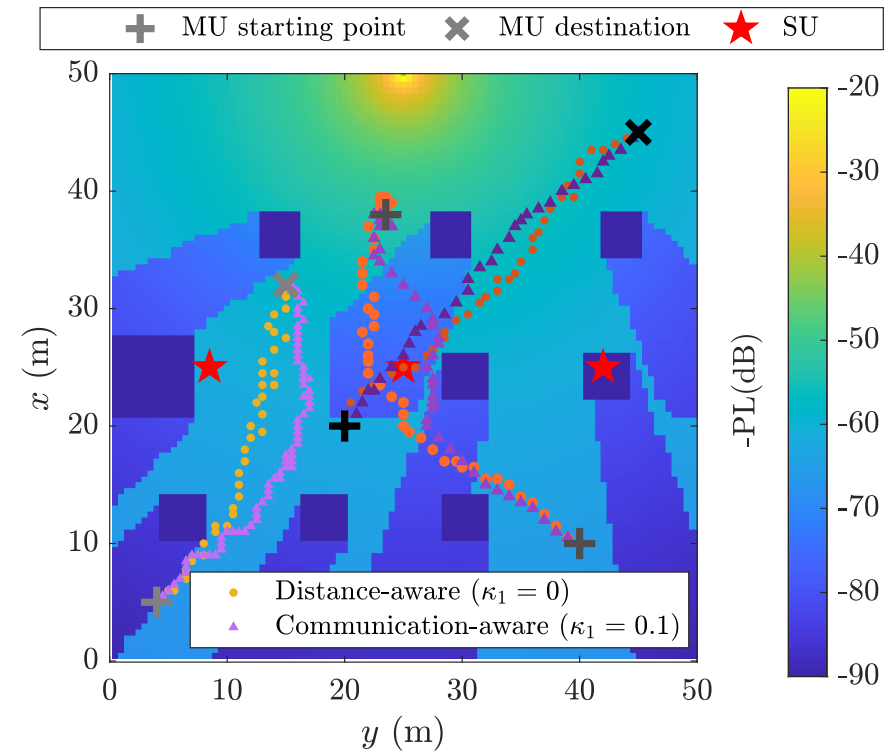

We train multi-agent actor-critic deep reinforcement learning (MAAC-DRL) algorithms to reduce the decoding error rate and the arrival time of robots in the industrial Internet of Robotic Things (IoRT) under ultra-reliable and low-latency communication (URLLC) requirements.

Here are the settings of the considered IoRT environment.

| Notation | Simulation Value | Physical Meaning |
|---|---|---|
| | | the number of users |
| | | the number of antennas |
| | | the number of robots |
| | | packet size |
| | | the number of transmitted symbols |
| | | the moving deadline of robots |
| | | the height of antennas |
| | | the maximal transmit power |
| | | the variance of the additive white Gaussian noise |
| | | the moving speed |

For more details and simulation results, please check our paper.
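The settings above (packet size, number of transmitted symbols, transmit power, noise variance) are the usual inputs to a short-packet decoding error model. As a rough illustration only, the sketch below uses the standard finite-blocklength normal approximation for an AWGN channel; this README does not state the exact expression used in the paper, so the formula choice and the function names `q_function` and `decoding_error_probability` are assumptions.

```python
import math


def q_function(x):
    """Gaussian tail probability Q(x) = P(N(0, 1) > x)."""
    return 0.5 * math.erfc(x / math.sqrt(2.0))


def decoding_error_probability(snr, packet_bits, num_symbols):
    """Normal approximation of the decoding error probability.

    Assumption: AWGN channel with linear-scale SNR `snr`, a packet of
    `packet_bits` bits sent over `num_symbols` channel uses. This is a
    textbook approximation, not necessarily the paper's exact model.
    """
    if snr <= 0.0:
        return 1.0  # no usable signal: treat the packet as lost
    capacity = math.log2(1.0 + snr)  # bits per channel use
    # Channel dispersion in bits^2 per channel use.
    dispersion = (1.0 - 1.0 / (1.0 + snr) ** 2) * math.log2(math.e) ** 2
    rate = packet_bits / num_symbols
    return q_function((capacity - rate) * math.sqrt(num_symbols / dispersion))


if __name__ == "__main__":
    # Hypothetical values, chosen only to show the trend with SNR.
    for snr_db in (0, 5, 10):
        snr = 10 ** (snr_db / 10)
        print(snr_db, decoding_error_probability(snr, 256, 200))
```

Under this approximation the error probability falls quickly once the SNR pushes the capacity above the coding rate `packet_bits / num_symbols`, which is why the trajectory of a robot (and hence its channel gain) matters for reliability.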

Dependencies can be installed with Conda. For example, to create the environment used for the IoRT simulations with URLLC requirements:

    conda env create -f environment/environment.yml -n URLLC

Then activate it:

    conda activate URLLC

To run on the Atari environment, please also install the required packages with pip:

    pip install -r environment/requirements.txt

Here are the parameters of our simulations.

| Notation | Simulation Value | Physical Meaning |
|---|---|---|
| | | the learning rate of the DRL algorithms |
| | | the parameters of the reward designs |
| | | the size of the mini-batch buffer |
| | | the maximal size of the experience buffer |
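To make the last two parameters concrete, here is a minimal sketch of how a bounded experience buffer and mini-batch sampling typically interact in off-policy DRL. The class name `ReplayBuffer`, its methods, and the transition layout are hypothetical and are not taken from the scripts in this repository.

```python
import random
from collections import deque


class ReplayBuffer:
    """Fixed-capacity experience buffer (illustrative, hypothetical API)."""

    def __init__(self, max_size):
        # A deque with maxlen silently drops the oldest transition
        # once the maximal buffer size is reached.
        self._storage = deque(maxlen=max_size)

    def add(self, state, action, reward, next_state, done):
        """Store one transition tuple."""
        self._storage.append((state, action, reward, next_state, done))

    def sample(self, batch_size):
        """Draw a uniform random mini-batch without replacement.

        Returns five tuples: states, actions, rewards, next states, dones.
        """
        batch = random.sample(list(self._storage), batch_size)
        return tuple(zip(*batch))

    def __len__(self):
        return len(self._storage)


if __name__ == "__main__":
    buffer = ReplayBuffer(max_size=1000)
    for step in range(50):
        buffer.add(step, 0.0, 1.0, step + 1, False)
    states, actions, rewards, next_states, dones = buffer.sample(8)
    print(len(buffer), len(states))
```

The maximal buffer size bounds memory and controls how stale the stored experience can get, while the mini-batch size sets how many transitions feed each gradient update.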

- `MA-DDPG_main.py`: Main functions and MDP transitions of MA-DDPG
- `MA-PPO_main.py`: Main functions and MDP transitions of MA-PPO
- `MA-SAC_main.py`: Main functions and MDP transitions of MA-SAC
- `MA-TD3_core.py`: MLP operators of MA-TD3
- `MA-TD3_main.py`: Main functions and MDP transitions of MA-TD3

- `environment.yaml`: Conda environment file
- `requirements.txt`: Pip requirements file

- `FIGURE_1.m`: Reward comparison under different MA-DRL algorithms
- `FIGURE_2.m`: Robots' trajectory comparison under different reward settings
- `FIGURE_3.m`: Average decoding error probability under different clustering and multiple access schemes
- `FIGURE_4.m`: Objective function under different environmental settings
- `FIGURE_5.m`: Arriving time under different environmental settings

- `AABB_plot.m`: Constructs a radio map based on the deployment of obstacles and intersection detection
- `map_data.mat`: Raw data of the built radio map
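For readers unfamiliar with AABB-based intersection detection, the following Python sketch shows one common way to test whether the straight link between an antenna and a robot is blocked by an axis-aligned bounding box (the slab method). It is an illustration under assumptions, not a port of `AABB_plot.m`; the function name `segment_hits_aabb` and the example coordinates are hypothetical.

```python
def segment_hits_aabb(p0, p1, box_min, box_max, eps=1e-12):
    """Return True if the segment p0 -> p1 intersects the box.

    Slab method: intersect the parameter interval t in [0, 1] with the
    interval in which the segment lies between each pair of box faces.
    """
    t_enter, t_exit = 0.0, 1.0
    for a, b, lo, hi in zip(p0, p1, box_min, box_max):
        d = b - a
        if abs(d) < eps:
            # Segment is parallel to this slab: it misses the box
            # unless its fixed coordinate already lies inside the slab.
            if a < lo or a > hi:
                return False
            continue
        t0 = (lo - a) / d
        t1 = (hi - a) / d
        if t0 > t1:
            t0, t1 = t1, t0
        t_enter = max(t_enter, t0)
        t_exit = min(t_exit, t1)
        if t_enter > t_exit:
            return False  # slab intervals do not overlap
    return True


if __name__ == "__main__":
    antenna = (0.0, 0.0, 10.0)  # hypothetical antenna position
    robot = (10.0, 0.0, 0.0)    # hypothetical robot position
    tall_obstacle = ((4.0, -1.0, 0.0), (6.0, 1.0, 8.0))
    low_obstacle = ((4.0, -1.0, 0.0), (6.0, 1.0, 3.0))
    print(segment_hits_aabb(antenna, robot, *tall_obstacle))
    print(segment_hits_aabb(antenna, robot, *low_obstacle))
```

Running such a test for every grid point and obstacle gives a line-of-sight mask, which is one plausible building block for a radio map.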