Paper: InstructGPT - Training language models to follow instructions with human feedback

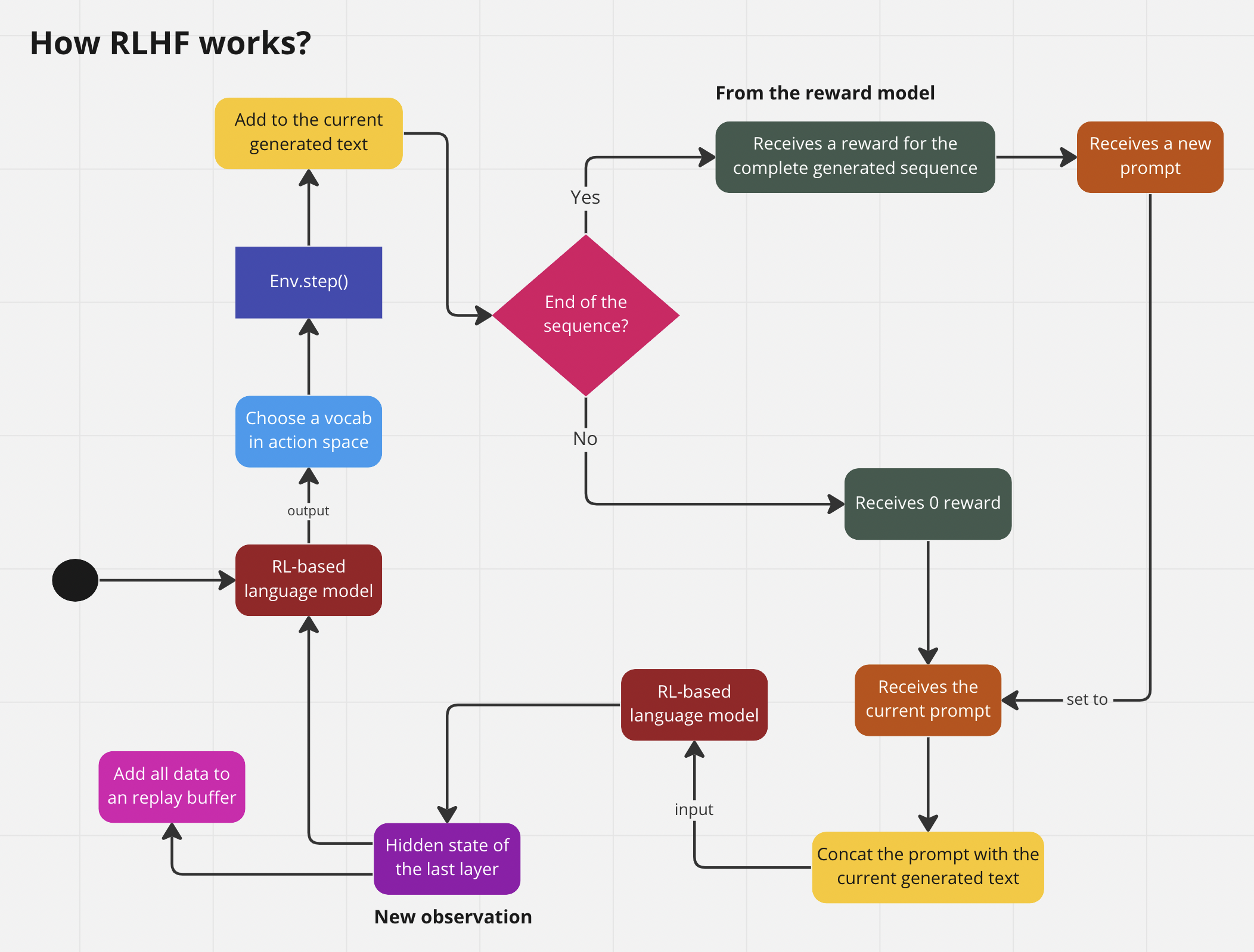

- In the context of RLHF, how is $L_t^{VF}(\theta)$ calculated? Is it the function the PPO agent uses to predict how much reward it will get if it generates the sequence?

- Do the RL model and the SFT model use the same tokenizer? Yes.

- I don't know how to return the logits of the generation model.

- Does the PPO agent (language model) have a value network, just like a regular PPO agent?

- I don't understand how to calculate the advantage in PPO.
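A rough answer to the value-loss and advantage questions: in the PPO paper the value loss is the squared error $L_t^{VF}(\theta) = (V_\theta(s_t) - V_t^{\text{targ}})^2$, and advantages are commonly estimated with generalized advantage estimation (GAE). The sketch below is plain Python under stated assumptions, not the code instruct_goose uses: `compute_gae` and `value_loss` are hypothetical helper names, the value after the final token is assumed to be 0, and some implementations additionally clip the value prediction.

```python
def compute_gae(rewards, values, gamma=0.99, lam=0.95):
    """Generalized advantage estimation over one generated sequence.

    rewards[t] is the reward received after token t and values[t] is the
    value-head prediction at token t (both plain lists of floats).
    Assumption: the value after the last token is 0 (episode ends there).
    """
    advantages = [0.0] * len(rewards)
    last_adv = 0.0
    # Walk backwards so each step can reuse the advantage of the next step.
    for t in reversed(range(len(rewards))):
        next_value = values[t + 1] if t + 1 < len(values) else 0.0
        # TD error: how much better the outcome was than the prediction.
        delta = rewards[t] + gamma * next_value - values[t]
        last_adv = delta + gamma * lam * last_adv
        advantages[t] = last_adv
    # Value targets V_t^targ are the advantages added back onto the predictions.
    returns = [a + v for a, v in zip(advantages, values)]
    return advantages, returns


def value_loss(values, returns):
    """Unclipped value loss: mean over tokens of (V(s_t) - V_t^targ)^2."""
    return sum((v - r) ** 2 for v, r in zip(values, returns)) / len(values)
```

With this shape, the value head is trained to regress onto `returns`, while `advantages` weight the policy update.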

```bash
pip install instruct-goose
```

```python
from transformers import AutoTokenizer, AutoModelForCausalLM
from instruct_goose import RLHFTrainer, create_reference_model, RLHFConfig

tokenizer = AutoTokenizer.from_pretrained("gpt2")
model = AutoModelForCausalLM.from_pretrained("gpt2")
ref_model = create_reference_model(model)
```

- Add batch inference support for the agent

- Add batch support for the RLHF trainer
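For context on why `ref_model` exists: in RLHF setups such as InstructGPT, a frozen copy of the starting model is typically used to penalize the policy for drifting too far from it, by subtracting a per-token KL term from the reward. The sketch below illustrates that idea in plain Python. It is an assumption that instruct_goose combines the terms this way; `kl_penalized_rewards`, `score`, and `beta` are hypothetical names chosen for illustration.

```python
def kl_penalized_rewards(logprobs, ref_logprobs, score, beta=0.02):
    """Combine a reward-model score with a per-token KL penalty.

    logprobs[t] / ref_logprobs[t]: log-probability of generated token t
    under the trained policy and under the frozen reference model.
    score: scalar from the reward model for the whole generated sequence.
    beta: penalty strength (illustrative default, not a library value).
    """
    # Per-token penalty: -beta * (log pi(token) - log pi_ref(token)).
    rewards = [-beta * (lp - ref_lp) for lp, ref_lp in zip(logprobs, ref_logprobs)]
    # The reward-model score is credited only at the final token.
    rewards[-1] += score
    return rewards
```

These per-token rewards are what an advantage estimator would then consume.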

I used these resources to implement this:

- Copied the `load_yaml` function from https://github.com/Dahoas/reward-modeling

- How to build a dataset to train a reward model: https://wandb.ai/carperai/summarize_RLHF/reports/Implementing-RLHF-Learning-to-Summarize-with-trlX--VmlldzozMzAwODM2

- How to add a value head to the PPO agent: https://github.com/lvwerra/trl

- How to calculate the PPO agent's loss: https://github.com/lvwerra/trl/blob/main/trl/trainer/ppo_trainer.py

- How to use PPO to train an RLHF agent: https://github.com/voidful/TextRL

- How PPO works: https://github.com/vwxyzjn/cleanrl/blob/master/cleanrl/ppo.py