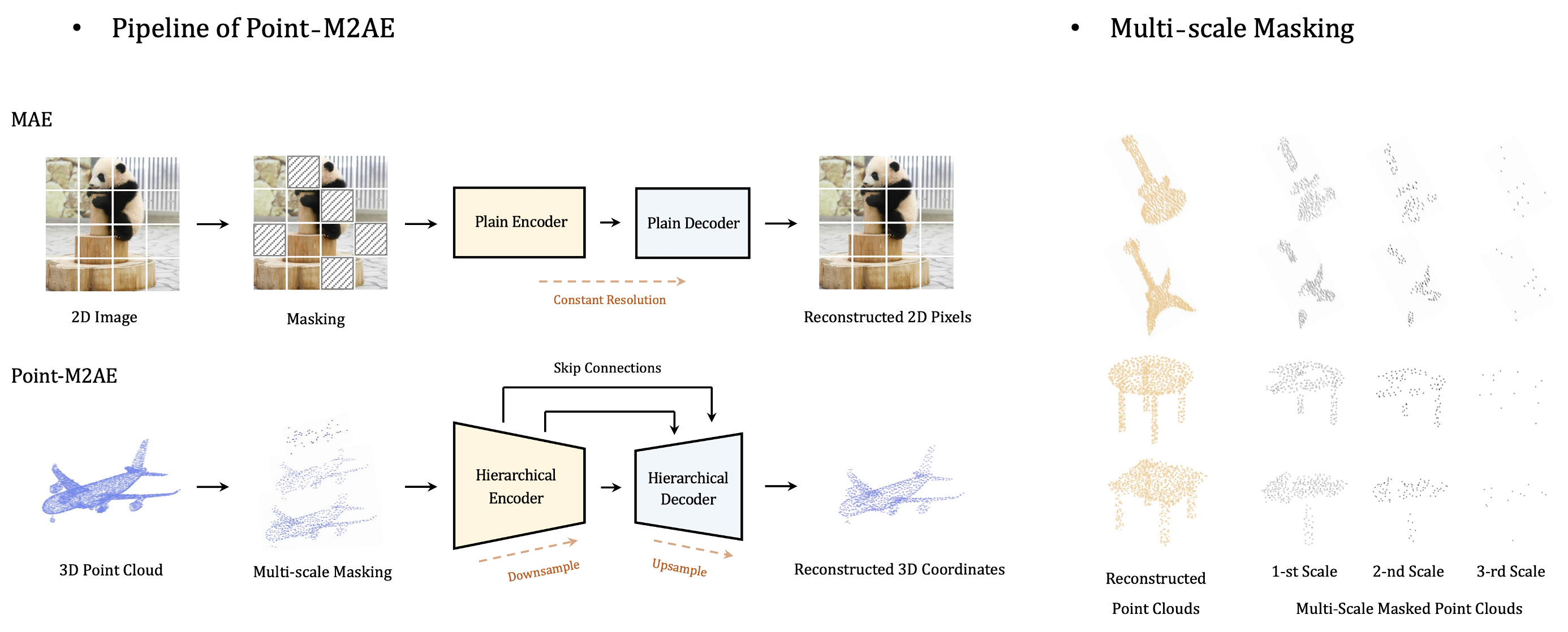

Official implementation of the paper 'Point-M2AE: Multi-scale Masked Autoencoders for Hierarchical Point Cloud Pre-training'.

Point-M2AE is a Multi-scale MAE pre-training framework for hierarchical self-supervised learning of 3D point clouds. Unlike the standard transformer in MAE, we modify the encoder and decoder into pyramid architectures that progressively model spatial geometries and capture both the fine-grained and high-level semantics of 3D shapes. We design a multi-scale masking strategy that generates consistent visible regions across scales, and we reconstruct the masked coordinates from a global-to-local perspective.
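To make the idea of "consistent visible regions across scales" concrete, here is a minimal NumPy sketch. It is not the released implementation. It assumes that a random mask is drawn at the coarsest scale and that visibility is then back-projected to finer scales through k-nearest-neighbor groups. The function names (`knn`, `multiscale_visible_masks`), the parameters (`sizes`, `k`, `mask_ratio`) and the use of random subsampling to pick centers are all illustrative assumptions.

```python
import numpy as np


def knn(queries, points, k):
    """Return indices of the k nearest `points` for each query, shape (Q, k)."""
    # Pairwise squared Euclidean distances, shape (Q, P).
    d = ((queries[:, None, :] - points[None, :, :]) ** 2).sum(-1)
    return np.argsort(d, axis=1)[:, :k]


def multiscale_visible_masks(points, sizes=(64, 32, 16), k=4,
                             mask_ratio=0.75, seed=0):
    """Hypothetical helper: build one boolean visibility mask per scale.

    Assumption: centers at each scale are a random subset of the previous
    scale (a simplification; real pipelines often use farthest point sampling).
    """
    rng = np.random.default_rng(seed)
    centers, groups = [], []
    current = points
    for m in sizes:
        idx = rng.choice(len(current), size=m, replace=False)
        c = current[idx]
        # For each new center, its k neighbors among the previous-scale points.
        groups.append(knn(c, current, k))
        centers.append(c)
        current = c

    # Random mask at the coarsest scale: keep (1 - mask_ratio) of the tokens.
    n_vis = max(1, int(round(sizes[-1] * (1 - mask_ratio))))
    visible = np.zeros(sizes[-1], dtype=bool)
    visible[rng.choice(sizes[-1], size=n_vis, replace=False)] = True

    # Back-project: a finer token is visible if it belongs to the
    # neighborhood of any visible token one scale coarser.
    masks = [visible]
    for s in range(len(sizes) - 1, 0, -1):
        finer = np.zeros(sizes[s - 1], dtype=bool)
        finer[groups[s][masks[0]].ravel()] = True
        masks.insert(0, finer)
    return centers, masks


if __name__ == "__main__":
    cloud = np.random.default_rng(1).random((256, 3))
    _, masks = multiscale_visible_masks(cloud)
    for scale, m in enumerate(masks):
        print(f"scale {scale}: {int(m.sum())} of {m.size} tokens visible")
```

Because every finer mask is derived from the coarser one, the visible tokens at each scale cover the same spatial regions, so an encoder that only sees visible tokens never receives information from masked areas at any level of the pyramid.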

Coming Soon.

If you have any questions about this project, please feel free to contact zhangrenrui@pjlab.org.cn.