Implementation of our paper "S&CNet: A lightweight network for fast and accurate depth completion", published in JVCI. The code is mainly based on Sparse-Depth-Completion; thanks to its authors for releasing their code.

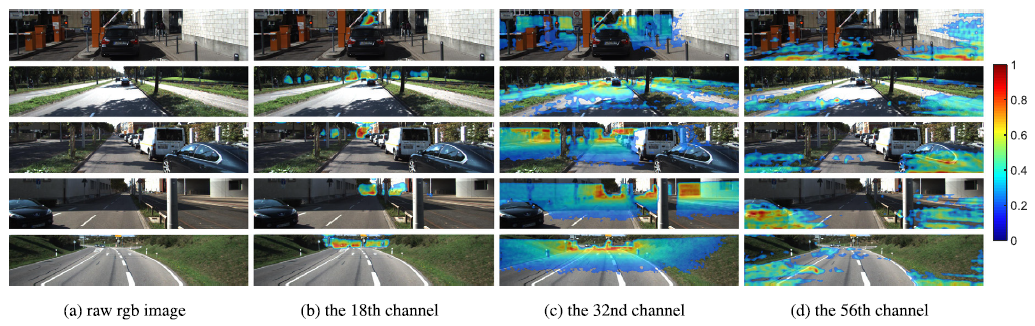

In this paper, we found that, in deep learning-based depth completion, each feature channel generated by the encoder network corresponds to a different depth range, as shown below:

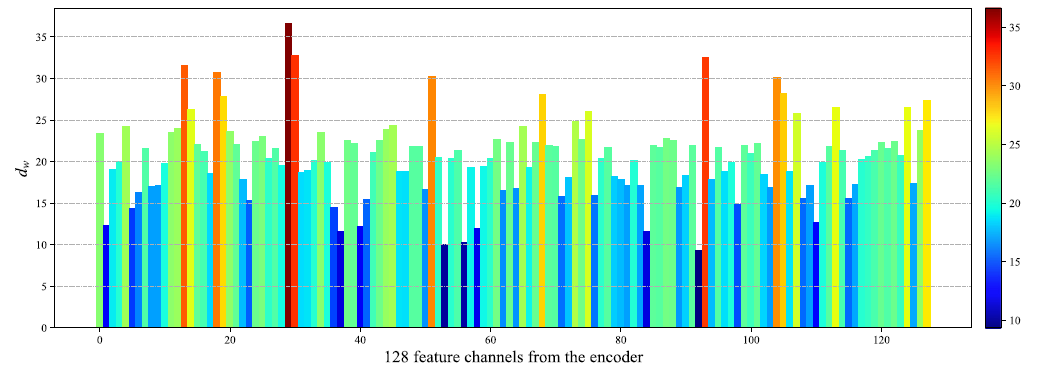

We can clearly see that the 18th channel of the feature map responds to long-distance objects, the 32nd channel responds to middle-distance objects, and the 56th channel responds to near objects. To quantify this finding, we define a weighted depth value dw that represents the depth range a feature channel corresponds to, and then examine the distribution of dw across the feature channels.
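To make the idea of a per-channel weighted depth concrete, here is a minimal sketch. It assumes dw is the mean depth over valid pixels, weighted by the absolute activation of the channel; the exact definition is given in the paper and may differ. The function name `weighted_depth` and the toy values are hypothetical, and the feature map is assumed to have already been resized to the resolution of the depth map.

```python
def weighted_depth(activation, depth):
    """Activation-weighted mean depth for one feature channel.

    Assumed definition (not taken from the paper): pixels with depth <= 0
    are treated as missing measurements and skipped.
    activation, depth: 2-D lists of equal shape.
    """
    num = 0.0
    den = 0.0
    for act_row, depth_row in zip(activation, depth):
        for a, d in zip(act_row, depth_row):
            if d <= 0:
                continue  # no depth measurement at this pixel
            w = abs(a)
            num += w * d
            den += w
    return num / den if den > 0 else float("nan")


# Toy 2x3 depth map in metres; 0 marks a pixel without a measurement.
depth = [[5.0, 20.0, 0.0],
         [5.0, 20.0, 60.0]]
# Hypothetical channels: one fires on near pixels, one on far pixels.
near_channel = [[1.0, 0.0, 0.0],
                [1.0, 0.0, 0.0]]
far_channel = [[0.0, 0.0, 9.0],
               [0.0, 1.0, 3.0]]

dw_near = weighted_depth(near_channel, depth)
dw_far = weighted_depth(far_channel, depth)
print(dw_near, dw_far)
```

Under this assumed definition, a channel that fires mostly on distant pixels gets a large dw and a channel that fires on nearby pixels gets a small one, which is the kind of separation described above.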

Ubuntu 16.04

Python 3.7

PyTorch 1.7

pip install opencv-python pillow matplotlib

The KITTI dataset is used. First, download the depth completion dataset. Second, download and unzip the camera images from KITTI. The complete dataset consists of 85898 training samples, 6852 validation samples, 1000 selected validation samples and 1000 test samples.

Run:

source Shell/preprocess $datapath $dest $num_samples

(First, I converted the PNG images to JPG to save disk space. Second, two directories are built, one for training and one for validation. See Datasets/Kitti_loader.py.)

Dataset structure should look like this:

    |--depth selection
    |--Depth
        |--train
            |--date
                |--sequence1
                | ...
        |--validation
    |--RGB
        |--train
            |--date
                |--sequence1
                | ...
        |--validation
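Before training, it can help to verify that the preprocessed dataset matches the layout listed above. The following is a minimal sketch, not part of the repository: the helper `missing_dirs` is hypothetical, and it assumes that `train` and `validation` sit directly under both `Depth` and `RGB`. The `depth selection` folder is not checked here.

```python
import tempfile
from pathlib import Path

# Sub-directories this sketch expects under the dataset root (assumed layout).
EXPECTED_SUBDIRS = [
    ("Depth", "train"),
    ("Depth", "validation"),
    ("RGB", "train"),
    ("RGB", "validation"),
]


def missing_dirs(root):
    """Return the expected sub-directories that do not exist under root."""
    root = Path(root)
    return [
        "/".join(parts)
        for parts in EXPECTED_SUBDIRS
        if not root.joinpath(*parts).is_dir()
    ]


# Demo on a throwaway directory instead of a real dataset path.
with tempfile.TemporaryDirectory() as tmp:
    before = missing_dirs(tmp)  # nothing created yet
    for parts in EXPECTED_SUBDIRS:
        Path(tmp).joinpath(*parts).mkdir(parents=True)
    after = missing_dirs(tmp)  # everything present now

print(before)
print(after)
```

In practice you would call `missing_dirs` with the destination folder passed to the preprocessing script and stop if the returned list is not empty.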

Save the model in a folder in the Saved directory, then execute the following command:

source Test/test.sh

We provide our trained model (code: klfy) for reference.

We also provide code in Test/test.py for visualizing the spatial and channel attention, and flops.py for computing the number of network parameters and FLOPs.
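For readers unfamiliar with how such counts are obtained, the sketch below applies the standard formulas for a single 2-D convolution layer. It is only an illustration and does not reproduce what flops.py does; the helper `conv2d_cost`, the channel counts, and the 256x1216 output size are hypothetical values chosen for the example.

```python
def conv2d_cost(in_ch, out_ch, kernel, out_h, out_w, bias=True):
    """Parameter count and multiply-accumulate count of one conv layer.

    Assumes a square kernel, no grouping, and no dilation.
    """
    weights = kernel * kernel * in_ch * out_ch
    params = weights + (out_ch if bias else 0)
    # Every output pixel of every output channel needs kernel*kernel*in_ch MACs.
    macs = weights * out_h * out_w
    return params, macs


# Hypothetical first layer: 4 input channels, 32 output channels,
# 3x3 kernel, output resolution 256x1216.
params, macs = conv2d_cost(in_ch=4, out_ch=32, kernel=3, out_h=256, out_w=1216)
print(f"params: {params}, MACs: {macs / 1e6:.1f} M")
```

Note that tools differ on convention: some report multiply-accumulates as FLOPs, while others count one multiply plus one add as two FLOPs, so numbers from different tools are only comparable when the convention matches.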

Set the corresponding datapath, and run:

source Shell/train.sh