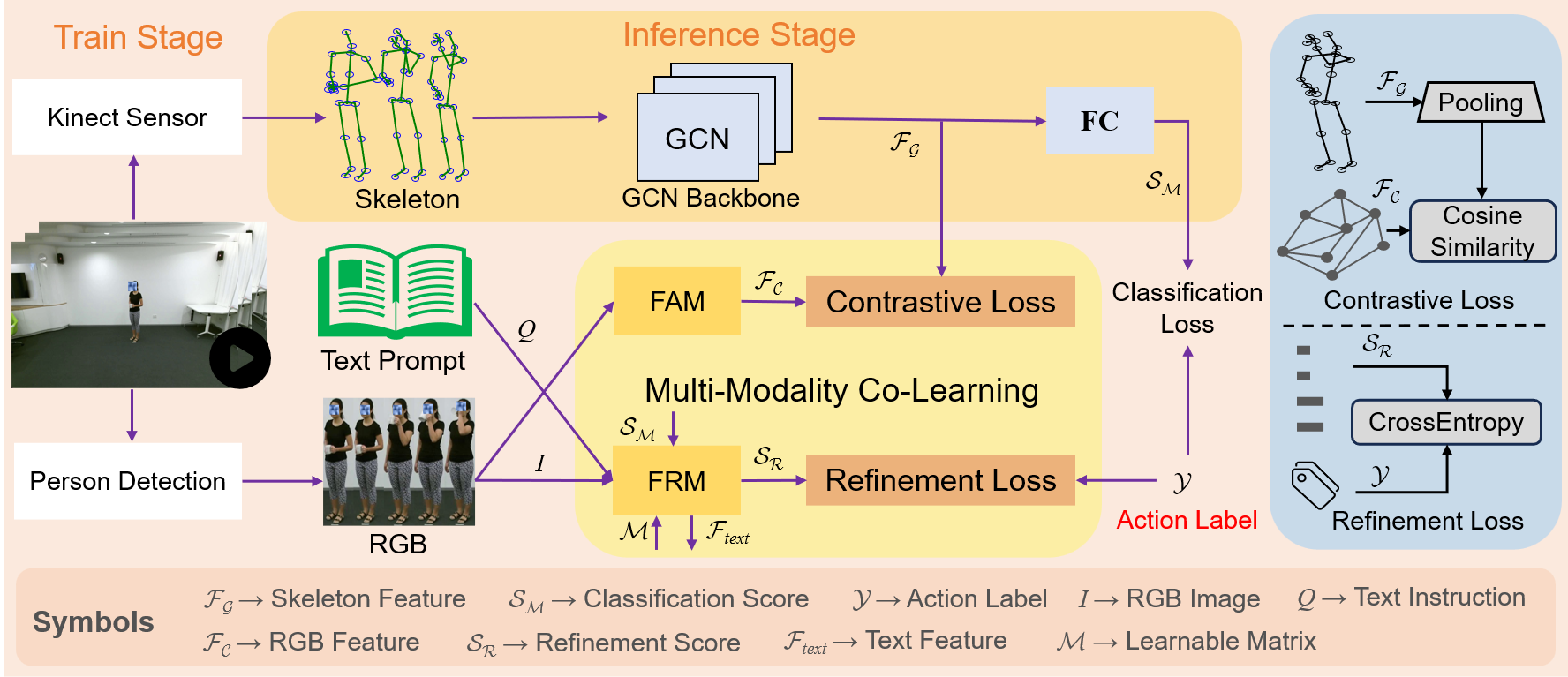

This is the official repository for Multi-Modality Co-Learning for Efficient Skeleton-based Action Recognition, which has been accepted at ACM Multimedia 2024 (ACM MM).

- NTU-RGB+D 60 dataset from https://rose1.ntu.edu.sg/dataset/actionRecognition/

- NTU-RGB+D 120 dataset from https://rose1.ntu.edu.sg/dataset/actionRecognition/

- NW-UCLA dataset from https://wangjiangb.github.io/my_data.html

- UTD-MHAD dataset from https://www.utdallas.edu/~kehtar/UTD-MHAD.html

- SYSU-Action dataset from https://www.isee-ai.cn/%7Ehujianfang/ProjectJOULE.html

- Follow the preprocessing method of CTR-GCN or TD-GCN to process and save the skeleton data.

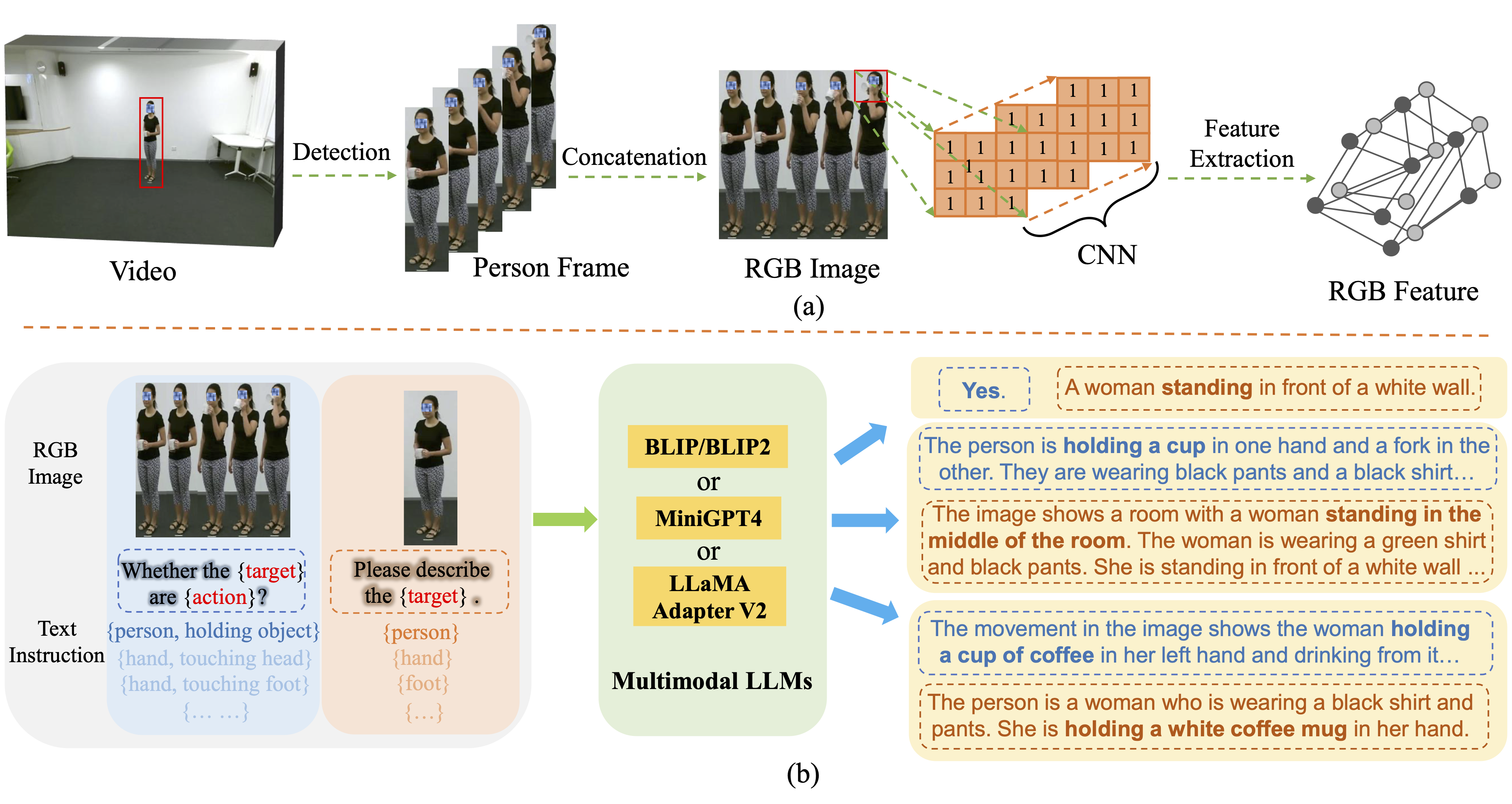

- Follow the method of Extract_NTU_Person to process and save the video data.

- Use a multimodal LLM (e.g. MiniGPT-4, BLIP, DeepSeek-VL, GLM-4) to obtain the text features.

First, git clone the project of the multimodal LLM you want to use.

Then, save the text features produced by the model, not the generated text content.

We suggest adopting more advanced multimodal LLMs (e.g. GLM-4V and DeepSeek-VL) and more complex prompts to obtain text features.
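As a rough illustration of saving features rather than text, the sketch below stores one feature vector per sample in a pickle file. Everything in it is an assumption made for illustration: `encode_text_stub` is a hypothetical placeholder for the encoder of whichever multimodal LLM you cloned, and the file layout (a dict keyed by sample name), the function names, and the feature dimension are not taken from this repo.

```python
import pickle
from typing import Callable, Dict, List


def encode_text_stub(text: str, dim: int = 8) -> List[float]:
    """Hypothetical stand-in for a real multimodal LLM text encoder.

    It only produces a deterministic toy vector so the sketch runs;
    replace it with the feature extractor of your chosen model.
    """
    vec = [0.0] * dim
    for i, ch in enumerate(text):
        vec[i % dim] += ord(ch) / 1000.0
    return vec


def save_text_features(
    descriptions: Dict[str, str],
    out_path: str,
    encoder: Callable[[str], List[float]] = encode_text_stub,
) -> Dict[str, List[float]]:
    """Encode each description and store only the feature vectors."""
    features = {name: encoder(text) for name, text in descriptions.items()}
    with open(out_path, "wb") as f:
        pickle.dump(features, f)  # assumed layout: sample name -> vector
    return features


def load_text_features(path: str) -> Dict[str, List[float]]:
    """Read back the dict written by save_text_features."""
    with open(path, "rb") as f:
        return pickle.load(f)
```

Keeping only the vectors means the LLM does not have to be run again during training; the path you choose for the output file is the one to reference in the config file.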

Please store the data of different modalities in the specified path and modify the config file accordingly.

# NTU120-XSub

python main_MMCL.py --device 0 1 --config ./config/nturgbd120-cross-subject/joint.yaml

# NTU120-XSet

python main_MMCL.py --device 0 1 --config ./config/nturgbd120-cross-set/joint.yaml

# NTU60-XSub

python main_MMCL.py --device 0 1 --config ./config/nturgbd-cross-subject/joint.yaml

# NTU60-XView

python main_MMCL.py --device 0 1 --config ./config/nturgbd-cross-view/joint.yaml

# NTU120-XSub

python main_MMCL.py --device 0 --config ./config/nturgbd120-cross-subject/joint.yaml --phase test --weights <work_dir>/NTU120-XSub.pt

# NTU120-XSet

python main_MMCL.py --device 0 --config ./config/nturgbd120-cross-set/joint.yaml --phase test --weights <work_dir>/NTU120-XSet.pt

# NTU60-XSub

python main_MMCL.py --device 0 --config ./config/nturgbd-cross-subject/joint.yaml --phase test --weights <work_dir>/NTU60-XSub.pt

# NTU60-XView

python main_MMCL.py --device 0 --config ./config/nturgbd-cross-view/joint.yaml --phase test --weights <work_dir>/NTU60-XView.pt
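The training and testing commands above differ only in the config path, the device list, and the optional `--phase test --weights ...` arguments, so they can be generated from one table. The following is a minimal sketch of that idea; `CONFIGS` and `build_command` are hypothetical helper names, not part of this repo, and the flags are exactly those shown in the commands above.

```python
from typing import Dict, List, Optional, Sequence

# Benchmark name -> config path, copied from the commands above.
CONFIGS: Dict[str, str] = {
    "NTU120-XSub": "./config/nturgbd120-cross-subject/joint.yaml",
    "NTU120-XSet": "./config/nturgbd120-cross-set/joint.yaml",
    "NTU60-XSub": "./config/nturgbd-cross-subject/joint.yaml",
    "NTU60-XView": "./config/nturgbd-cross-view/joint.yaml",
}


def build_command(
    benchmark: str,
    devices: Sequence[int],
    weights: Optional[str] = None,
) -> List[str]:
    """Build the argv list for training, or for testing if weights is given."""
    cmd = ["python", "main_MMCL.py", "--device"]
    cmd += [str(d) for d in devices]
    cmd += ["--config", CONFIGS[benchmark]]
    if weights is not None:
        # Testing adds the phase switch and the checkpoint path.
        cmd += ["--phase", "test", "--weights", weights]
    return cmd


if __name__ == "__main__":
    # Print the four training commands (two GPUs, as in the examples above).
    for name in CONFIGS:
        print(" ".join(build_command(name, devices=[0, 1])))
```

The returned list can be passed directly to `subprocess.run` from the repository root if you prefer to launch the runs from a script.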

| Method | NTU-60 X-Sub | NTU-60 X-View | NTU-120 X-Sub | NTU-120 X-Set | NW-UCLA |
|---|---|---|---|---|---|
| MMCL | 93.5% | 97.4% | 90.3% | 91.7% | 97.5% |

cd Ensemble

# NTU120-XSub

python ensemble.py \
--J_Score ./Score/NTU120_XSub_J.pkl \
--B_Score ./Score/NTU120_XSub_B.pkl \
--JM_Score ./Score/NTU120_XSub_JM.pkl \
--BM_Score ./Score/NTU120_XSub_BM.pkl \
--HDJ_Score ./Score/NTU120_XSub_HDJ.pkl \
--HDB_Score ./Score/NTU120_XSub_HDB.pkl \
--val_sample ./Val_Sample/NTU120_XSub_Val.txt \
--benchmark NTU120XSub
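The command above combines the score files of six streams. For readers unfamiliar with score-level fusion, here is a minimal sketch of the general technique: a weighted sum of per-class scores followed by an argmax. It is not the code in `ensemble.py`. The assumed `.pkl` layout (a dict mapping sample name to a list of class scores), the function names, and the weights are all illustrative assumptions.

```python
import pickle
from typing import Dict, List, Sequence

Scores = Dict[str, List[float]]  # assumed layout: sample name -> class scores


def load_scores(path: str) -> Scores:
    """Load one stream's score file (assumed to be a pickled dict)."""
    with open(path, "rb") as f:
        return pickle.load(f)


def fuse_scores(streams: Sequence[Scores], weights: Sequence[float]) -> Dict[str, int]:
    """Weighted sum of class scores across streams, then argmax per sample."""
    if len(streams) != len(weights):
        raise ValueError("need exactly one weight per stream")
    predictions: Dict[str, int] = {}
    for name in streams[0]:
        num_classes = len(streams[0][name])
        fused = [0.0] * num_classes
        for scores, w in zip(streams, weights):
            for c, s in enumerate(scores[name]):
                fused[c] += w * s
        predictions[name] = max(range(num_classes), key=fused.__getitem__)
    return predictions


def accuracy(predictions: Dict[str, int], labels: Dict[str, int]) -> float:
    """Fraction of labelled samples whose fused prediction is correct."""
    correct = sum(1 for name, label in labels.items() if predictions[name] == label)
    return correct / len(labels)


# Toy example with two streams and two classes (values are made up).
joint = {"a": [0.9, 0.1], "b": [0.4, 0.6]}
bone = {"a": [0.2, 0.8], "b": [0.3, 0.7]}
preds = fuse_scores([joint, bone], weights=[0.6, 0.4])
print(preds)  # {'a': 0, 'b': 1}
```

In the toy example the joint stream outweighs the bone stream on sample `a`, so the fused prediction follows the joint stream even though the bone stream disagrees.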

# The other benchmarks are run in the same way.

Our project is based on CTR-GCN, TD-GCN, EPP-Net, BLIP, and MiniGPT-4.

@inproceedings{liu2024mmcl,
  author = {Liu, Jinfu and Chen, Chen and Liu, Mengyuan},
  title = {Multi-Modality Co-Learning for Efficient Skeleton-based Action Recognition},
  booktitle = {Proceedings of the ACM Multimedia (ACM MM)},
  year = {2024}
}