ECON: Explicit Clothed humans Obtained from Normals

Yuliang Xiu · Jinlong Yang · Xu Cao · Dimitrios Tzionas · Michael J. Black

CVPR 2023

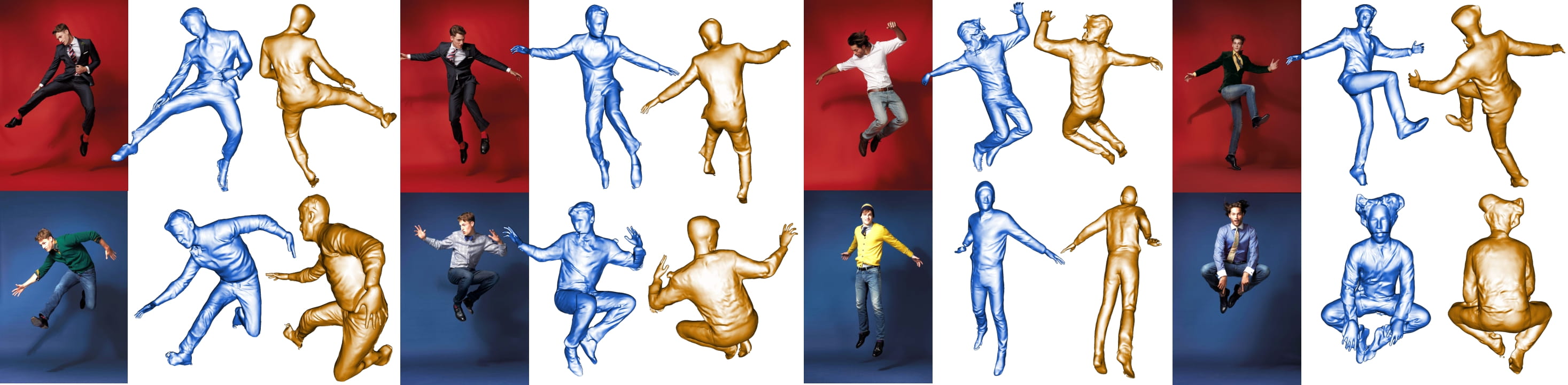

ECON is designed for human digitization from a color image. It combines the best properties of implicit and explicit representations to infer high-fidelity 3D clothed humans from in-the-wild images, even with loose clothing or in challenging poses. ECON also supports multi-person reconstruction and SMPL-X based animation.

News 🚩

- [2023/02/27] ECON was accepted to CVPR 2023!

- [2023/01/12] Carlos Barreto created a Blender add-on (Download, Tutorial).

- [2023/01/08] Teddy Huang created install-with-docker for ECON.

- [2023/01/06] Justin John and Carlos Barreto created install-on-windows for ECON.

- [2022/12/22] A Google Colab notebook is now available, created by Aron Arzoomand.

- [2022/12/15] Both demo and arXiv are available.

TODO

- Blender add-on for FBX export

- Full RGB texture generation

Instructions

- See the installation doc for Docker to run a Docker container with a pre-built image for the ECON demo

- See the installation doc for Windows to install all the required packages and set up the models on Windows

- See the installation doc for Ubuntu to install all the required packages and set up the models on Ubuntu

- See magic tricks to learn a few technical tricks that further improve and accelerate ECON

- See testing to prepare the testing data and evaluate ECON

Demo

# For single-person image-based reconstruction (w/ l visualization steps, 1.8min)

python -m apps.infer -cfg ./configs/econ.yaml -in_dir ./examples -out_dir ./results

# For multi-person image-based reconstruction (see configs/econ.yaml)

python -m apps.infer -cfg ./configs/econ.yaml -in_dir ./examples -out_dir ./results -multi
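The two inference commands above differ only in the `-multi` flag, so they are easy to script when several input folders need processing. The following is a minimal sketch, not part of ECON itself: `build_infer_cmd` is a hypothetical helper, and the only flags it uses are the ones shown in the commands above. It assumes it is run from the ECON repository root with the environment already installed.

```python
import shlex
import sys


def build_infer_cmd(in_dir, out_dir, cfg="./configs/econ.yaml", multi=False):
    """Build the argument list for one `apps.infer` run.

    Hypothetical helper: it only assembles the flags shown in the demo
    commands (-cfg, -in_dir, -out_dir, -multi) and does not run anything.
    """
    cmd = [
        sys.executable, "-m", "apps.infer",
        "-cfg", str(cfg),
        "-in_dir", str(in_dir),
        "-out_dir", str(out_dir),
    ]
    if multi:
        # Switch to multi-person reconstruction.
        cmd.append("-multi")
    return cmd


if __name__ == "__main__":
    # Print the commands instead of running them, so the sketch is safe
    # to try outside the ECON repository.
    for multi in (False, True):
        print(shlex.join(build_infer_cmd("./examples", "./results", multi=multi)))
    # To actually run one, from the repository root:
    #   import subprocess
    #   subprocess.run(build_infer_cmd("./examples", "./results"), check=True)
```

Passing the command as a list to `subprocess.run` avoids shell quoting problems when directory names contain spaces.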

# To generate the demo video of reconstruction results

python -m apps.multi_render -n <filename>

# To animate the reconstruction with SMPL-X pose parameters

python -m apps.avatarizer -n <filename>

More Qualitative Results

- Challenging Poses

- Loose Clothes

Applications

- ECON can provide pseudo 3D ground truth (GT) for the SHHQ dataset

- ECON supports multi-person reconstruction

Citation

@inproceedings{xiu2023econ,

title = {{ECON: Explicit Clothed humans Obtained from Normals}},

author = {Xiu, Yuliang and Yang, Jinlong and Cao, Xu and Tzionas, Dimitrios and Black, Michael J.},

booktitle = {Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR)},

month = {June},

year = {2023},

}

Acknowledgments

We thank Lea Hering and Radek Daněček for proofreading, Yao Feng, Haven Feng, and Weiyang Liu for their feedback and discussions, and Tsvetelina Alexiadis for her help with the AMT perceptual study.

Here are some great resources we benefit from:

- ICON for SMPL-X Body Fitting

- BiNI for Bilateral Normal Integration

- MonoPortDataset for Data Processing, MonoPort for fast implicit surface query

- rembg for Human Segmentation

- MediaPipe for full-body landmark estimation

- PyTorch-NICP for non-rigid registration

- smplx, PyMAF-X, PIXIE for Human Pose & Shape Estimation

- CAPE and THuman for Dataset

- PyTorch3D for Differentiable Rendering

Some images used in the qualitative examples come from pinterest.com.

This project has received funding from the European Union’s Horizon 2020 research and innovation programme under the Marie Skłodowska-Curie grant agreement No. 860768 (CLIPE Project).

Contributors

Kudos to all of our amazing contributors! ECON thrives through open-source. In that spirit, we welcome all kinds of contributions from the community.

Contributor avatars are randomly shuffled.

License

This code and model are available for non-commercial scientific research purposes as defined in the LICENSE file. By downloading and using the code and model you agree to the terms in the LICENSE.

Disclosure

MJB has received research gift funds from Adobe, Intel, Nvidia, Meta/Facebook, and Amazon. MJB has financial interests in Amazon, Datagen Technologies, and Meshcapade GmbH. While MJB is a part-time employee of Meshcapade, his research was performed solely at, and funded solely by, the Max Planck Society.

Contact

For technical questions, please contact yuliang.xiu@tue.mpg.de

For commercial licensing, please contact ps-licensing@tue.mpg.de