This is the official implementation of our work Deep Interactive Thin Object Selection, for segmentation of objects with elongated thin structures (e.g., bug legs and bicycle spokes) given extreme points (top-most, bottom-most, left-most and right-most pixels).

Deep Interactive Thin Object Selection,

Jun Hao Liew, Scott Cohen, Brian Price, Long Mai, Jiashi Feng

In: Winter Conference on Applications of Computer Vision (WACV), 2021

[pdf] [supplementary]

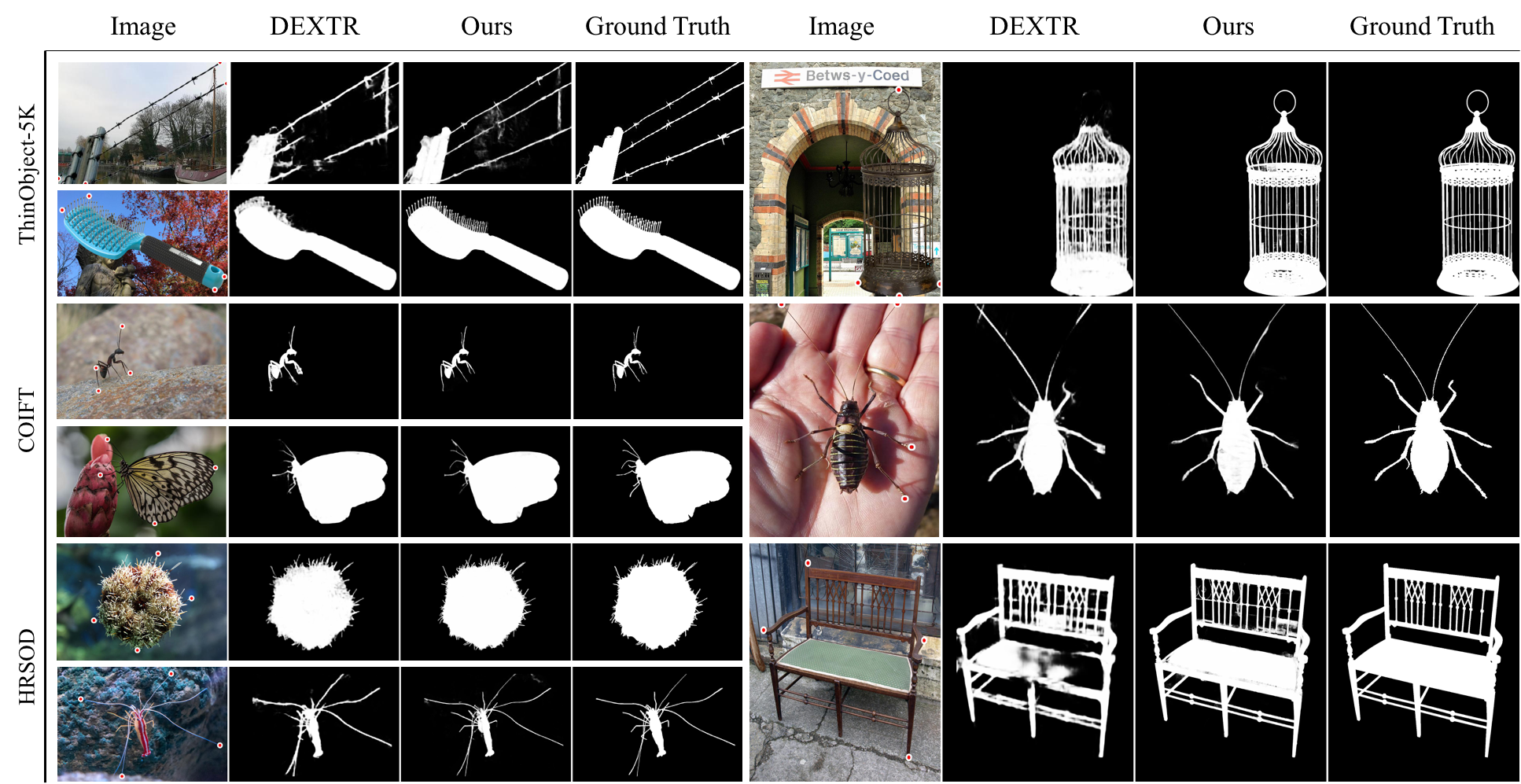

Here are some example results of our method.
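As an illustration of the extreme-point input described above, the following is a minimal sketch of how the four points could be read off a binary mask, for example when simulating clicks from ground truth. It is not taken from this repository; the function name `extreme_points` and the (x, y) ordering are assumptions made for the example.

```python
import numpy as np


def extreme_points(mask: np.ndarray) -> dict:
    """Return the top, bottom, left-most and right-most foreground pixels as (x, y).

    Hypothetical helper for illustration; not part of this repository.
    """
    ys, xs = np.nonzero(mask)
    if ys.size == 0:
        raise ValueError("mask contains no foreground pixels")
    # argmin/argmax return the first match, so ties are broken deterministically.
    top, bottom = int(np.argmin(ys)), int(np.argmax(ys))
    left, right = int(np.argmin(xs)), int(np.argmax(xs))
    return {
        "top": (int(xs[top]), int(ys[top])),
        "bottom": (int(xs[bottom]), int(ys[bottom])),
        "left": (int(xs[left]), int(ys[left])),
        "right": (int(xs[right]), int(ys[right])),
    }


if __name__ == "__main__":
    # A small plus-shaped object on a 5x7 canvas.
    demo = np.zeros((5, 7), dtype=np.uint8)
    demo[1, 3] = 1
    demo[2, 1:6] = 1
    demo[3, 3] = 1
    print(extreme_points(demo))
```

When several pixels share the same extreme coordinate, this sketch simply keeps the first one in row-major order; a real annotation tool might pick one at random or add jitter instead.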

The code was tested with Anaconda, Python 3.7, PyTorch 1.7.1 and CUDA 10.1.

0. Clone the repo:

       git clone https://github.com/liewjunhao/thin-object-selection
       cd thin-object-selection

1. Set up a virtual environment:

       conda create --name tosnet python=3.7 pip
       source activate tosnet

2. Install dependencies:

       conda install pytorch=1.7.1 torchvision=0.8.2 cudatoolkit=10.1 -c pytorch
       conda install opencv=3.4.2 pillow=8.0.1
       pip install scikit-fmm tqdm scikit-image gdown

3. Download the pre-trained model by running the script inside weights/:

       cd weights/
       chmod +x download_pretrained_tosnet.sh
       ./download_pretrained_tosnet.sh
       cd ..

4. Download the dataset by running the script inside data/:

       cd data/
       chmod +x download_dataset.sh
       ./download_dataset.sh
       cd ..

5. (Optional) Download the pre-computed masks by running the script inside results/:

       cd results/
       chmod +x download_precomputed_masks.sh
       ./download_precomputed_masks.sh
       cd ..
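After installation, a quick way to confirm that the dependencies are importable is a check like the sketch below. It is not part of this repository; the mapping from package names to import names (for example `scikit-fmm` to `skfmm`) reflects how those packages are normally imported, and the helper name `check_dependencies` is hypothetical.

```python
from importlib.util import find_spec

# Package name (as installed above) -> module name used in `import`.
MODULES = {
    "pytorch": "torch",
    "torchvision": "torchvision",
    "opencv": "cv2",
    "pillow": "PIL",
    "scikit-fmm": "skfmm",
    "tqdm": "tqdm",
    "scikit-image": "skimage",
    "gdown": "gdown",
}


def check_dependencies(modules=MODULES):
    """Return {package: True/False} depending on whether its module can be found."""
    return {pkg: find_spec(mod) is not None for pkg, mod in modules.items()}


if __name__ == "__main__":
    for pkg, ok in check_dependencies().items():
        print(f"{pkg:14s} {'ok' if ok else 'MISSING'}")
```

This only checks that the modules can be located; it does not verify versions or CUDA availability.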

We also provide a simple interactive demo:

    python demo.py

If installed correctly, the result should look like this:

We provide the scripts for training our models on our ThinObject-5K dataset. You can start training with the following command:

    python train.py

Datasets can be found here. The extracted thin regions used for evaluation of IoUthin can be downloaded here. For convenience, we also release a script (step 4 in Installation) that downloads all the files needed for evaluation. Our test script expects the following structure:

    data
    ├── COIFT
    │   ├── images
    │   ├── list
    │   └── masks
    ├── HRSOD
    │   ├── images
    │   ├── list
    │   └── masks
    ├── ThinObject5K
    │   ├── images
    │   ├── list
    │   └── masks
    └── thin_regions
        ├── coift
        │   ├── eval_mask
        │   └── gt_thin
        ├── hrsod
        │   ├── eval_mask
        │   └── gt_thin
        └── thinobject5k_test
            ├── eval_mask
            └── gt_thin

To evaluate:

    # This command runs the trained model on the test set and saves the resulting masks
    python test.py --test_set <test-set> --result_dir <path-to-save-dir> --cfg <config> --weights <weights>

    # This command evaluates the performance of the trained model given the saved masks
    python eval.py --test_set <test-set> --result_dir <path-to-save-dir> --thres <threshold>

where test_set denotes the test dataset (currently supported: coift, hrsod and thinobject5k_test).
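Before running test.py, it can be useful to confirm that data/ matches the directory layout listed earlier. The sketch below is not part of this repository; it only checks that the expected folders exist, and the helper name `missing_dirs` is hypothetical.

```python
from pathlib import Path

# Sub-directories expected under the data root, following the layout in this README.
EXPECTED_DIRS = [
    f"{dataset}/{sub}"
    for dataset in ("COIFT", "HRSOD", "ThinObject5K")
    for sub in ("images", "list", "masks")
] + [
    f"thin_regions/{name}/{sub}"
    for name in ("coift", "hrsod", "thinobject5k_test")
    for sub in ("eval_mask", "gt_thin")
]


def missing_dirs(root="data"):
    """Return the expected sub-directories that are absent under `root`."""
    root = Path(root)
    return [d for d in EXPECTED_DIRS if not (root / d).is_dir()]


if __name__ == "__main__":
    missing = missing_dirs("data")
    if missing:
        print("Missing directories:")
        for d in missing:
            print("  data/" + d)
    else:
        print("data/ layout looks complete.")
```

The check says nothing about the files inside each folder, so an interrupted download can still pass it.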

Examples of the script usage:

    # This command evaluates on the COIFT dataset using a threshold of 0.5
    python test.py --test_set coift --result_dir results/coift/
    python eval.py --test_set coift --result_dir results/coift/ --thres 0.5

    # This command evaluates on the HRSOD dataset using the pre-trained model
    python test.py --test_set hrsod --result_dir results/hrsod/ --cfg weights/tosnet_ours/TOSNet.txt --weights weights/tosnet_ours/models/TOSNet_epoch-49.pth
    python eval.py --test_set hrsod --result_dir results/hrsod/

Alternatively, run the following script to perform testing and evaluation on all three benchmarks:

    chmod +x scripts/eval.sh
    ./scripts/eval.sh

Note that the inference script (test.py) does not always produce exactly the same output on every run (~0.01% IoU difference), even when the weights are held constant. A similar issue was also reported in this post. Users are advised to save the masks first by running test.py once, and then evaluate the performance using eval.py.
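For readers who prefer to drive the two steps from Python, the sketch below builds the test.py and eval.py command lines for the three supported test sets, so that masks are saved once and then evaluated. It does not reproduce scripts/eval.sh, whose contents are not shown here. The results/<test-set>/ output path and the 0.5 threshold are assumptions that follow the usage examples in this README, and the helper names are hypothetical.

```python
import subprocess
import sys

TEST_SETS = ("coift", "hrsod", "thinobject5k_test")


def build_commands(test_set, thres=0.5):
    """Return the (test, eval) command lines for one test set."""
    result_dir = f"results/{test_set}/"  # assumed output location
    test_cmd = [sys.executable, "test.py",
                "--test_set", test_set, "--result_dir", result_dir]
    eval_cmd = [sys.executable, "eval.py",
                "--test_set", test_set, "--result_dir", result_dir,
                "--thres", str(thres)]
    return test_cmd, eval_cmd


def run_all(dry_run=True):
    """Print each command; execute it as well when dry_run is False."""
    for test_set in TEST_SETS:
        for cmd in build_commands(test_set):
            print(" ".join(cmd))
            if not dry_run:
                subprocess.run(cmd, check=True)


if __name__ == "__main__":
    run_all(dry_run=True)
```

Running with `dry_run=True` only prints the commands, which makes it easy to review them before launching inference.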

For convenience, we also offer the pre-computed masks from our method (step 5 in Installation).

| Dataset | IoU | IoUthin | F-boundary | Masks | Source |
|---|---|---|---|---|---|
| COIFT | 92.0 | 76.4 | 95.3 | Download | Link |
| HRSOD | 86.4 | 65.1 | 87.9 | Download | Link |
| ThinObject-5K | 94.3 | 86.5 | 94.8 | Download | Link |
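To make the IoU column and the `--thres` option more concrete, here is a minimal sketch of intersection-over-union between a thresholded prediction and a ground-truth mask. It is not the code in eval.py: the strict `>` comparison, the handling of empty masks, and the function name `iou` are assumptions, and the table reports values as percentages while this function returns a fraction.

```python
import numpy as np


def iou(pred_prob, gt_mask, thres=0.5):
    """IoU between a thresholded probability map and a binary ground-truth mask."""
    pred = np.asarray(pred_prob) > thres   # assumed: strictly above the threshold
    gt = np.asarray(gt_mask).astype(bool)
    union = np.logical_or(pred, gt).sum()
    if union == 0:
        return 1.0  # assumed convention: two empty masks count as a perfect match
    inter = np.logical_and(pred, gt).sum()
    return float(inter) / float(union)


if __name__ == "__main__":
    pred = np.array([[0.9, 0.6], [0.4, 0.1]])
    gt = np.array([[1, 0], [1, 0]])
    print(f"IoU = {iou(pred, gt):.3f}")
```

For thin structures, a plain IoU can stay high even when fine parts are missed, which is why the table also reports IoUthin and F-boundary.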

This project is licensed under a Creative Commons Attribution-NonCommercial 4.0 International Public License.

If you use this code, please consider citing our paper:

    @Inproceedings{liew2021deep,
      Title = {Deep Interactive Thin Object Selection},
      Author = {Liew, Jun Hao and Cohen, Scott and Price, Brian and Mai, Long and Feng, Jiashi},
      Booktitle = {Winter Conference on Applications of Computer Vision (WACV)},
      Year = {2021}
    }

This code is built upon the following projects: DEXTR-PyTorch, DeepInteractiveSegmentation, davis. We thank the authors for making their code available!