Lichang Chen*, Jiuhai Chen*, Tom Goldstein, Heng Huang, Tianyi Zhou

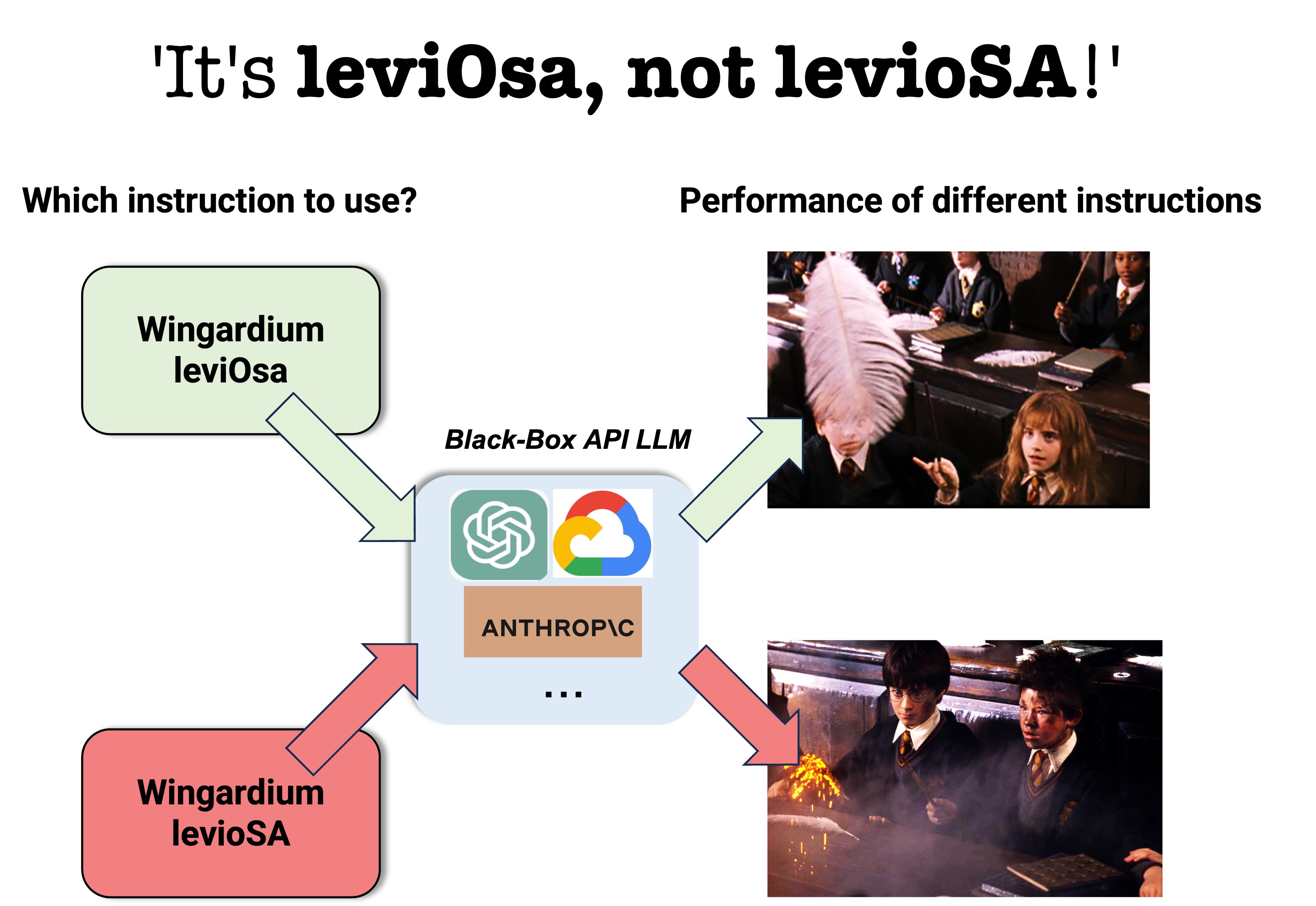

Finding the optimal instruction is extremely important for achieving the "charm", and this holds true for Large Language Models as well. ("Wingardium Leviosa" is a charm used to make objects fly, or levitate. If you are interested in "leviOsa", please check the video on our project page.) If you have any questions, feel free to email the corresponding authors: Lichang Chen and Jiuhai Chen (bobchen, jchen169 AT umd.edu).

- [2023.7] We just got the Claude API key, and next week our code will support GPT-4 and Claude (as API LLMs) and WizardLM-13B and 30B (as open-source LLMs)!

- [2023.7] Our code now supports vicuna-v1.3 and WizardLM-v1.1 models as open-source LLMs.

- [2023.7] Introducing AlpaGasus 🦙 Paper, Webpage, Code: making LLM instruction-finetuning smarter, not harder, using less data. We use ChatGPT to select only 9k high-quality examples from Alpaca's 52k. Training on these 9k examples is faster (5.7x ⏩) and yields better performance (matching >90% of Text-Davinci-003).

- [2023.9] Our code now supports open-source LLMs as the black-box LLM! We hope this feature helps your research!

We propose a new kind of alignment! The optimization process in our method is like aligning humans with LLMs (by contrast, instruction finetuning is more like aligning LLMs with humans). It is also the first framework to optimize bad prompts for ChatGPT into good prompts.

LLMs are instruction followers, but finding the best instruction for each situation can be challenging, especially for black-box LLMs, where backpropagation is not possible. Instead of directly optimizing the discrete instruction, we optimize a low-dimensional soft prompt applied to an open-source LLM, which then generates the instruction for the black-box LLM.
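The idea above can be sketched in plain Python. This is a minimal sketch under stated assumptions, not the InstructZero implementation: the open-source LLM and the black-box LLM are replaced by caller-supplied stubs (`generate_instruction` and `score_instruction`, hypothetical names), the projection is a fixed uniform random matrix, the embedding width is a toy value, and random search stands in for the kernel-based Bayesian optimization that InstructZero actually uses.

```python
import random
from typing import Callable, List, Optional, Tuple

Matrix = List[List[float]]


def make_projection(intrinsic_dim: int, n_tokens: int, hidden: int, seed: int = 0) -> Matrix:
    """Fixed random matrix A of shape (n_tokens * hidden, intrinsic_dim), entries ~ U(-1, 1).

    A is sampled once and never trained: only the low-dimensional vector z is searched.
    """
    rng = random.Random(seed)
    return [[rng.uniform(-1.0, 1.0) for _ in range(intrinsic_dim)]
            for _ in range(n_tokens * hidden)]


def project(A: Matrix, z: List[float], n_tokens: int, hidden: int) -> Matrix:
    """Compute A @ z and reshape it into n_tokens soft-token embeddings of size hidden."""
    flat = [sum(a * x for a, x in zip(row, z)) for row in A]
    return [flat[i * hidden:(i + 1) * hidden] for i in range(n_tokens)]


def optimize(
    generate_instruction: Callable[[Matrix], str],  # hypothetical stub for the open-source LLM
    score_instruction: Callable[[str], float],      # hypothetical stub for the black-box LLM
    intrinsic_dim: int = 10,
    n_tokens: int = 5,
    hidden: int = 16,  # toy size; a real LLM's embedding width is much larger
    n_iters: int = 20,
    seed: int = 0,
) -> Tuple[Optional[str], float]:
    A = make_projection(intrinsic_dim, n_tokens, hidden, seed)
    rng = random.Random(seed + 1)
    best_instr: Optional[str] = None
    best_score = float("-inf")
    for _ in range(n_iters):
        # Random search over z (assumption); InstructZero uses Bayesian optimization here.
        z = [rng.uniform(-1.0, 1.0) for _ in range(intrinsic_dim)]
        instr = generate_instruction(project(A, z, n_tokens, hidden))
        score = score_instruction(instr)
        if score > best_score:
            best_instr, best_score = instr, score
    return best_instr, best_score


if __name__ == "__main__":
    def toy_generate(soft_prompt: Matrix) -> str:
        # Toy stub: the "instruction" depends on the sign of one soft-prompt entry.
        return "Translate the input into German." if soft_prompt[0][0] > 0 else "Repeat the input."

    def toy_score(instruction: str) -> float:
        # Toy stub: pretend only the German instruction works on the task.
        return 1.0 if "German" in instruction else 0.0

    print(optimize(toy_generate, toy_score))
```

Only `z` (here 10 numbers) is searched, which is what keeps derivative-free optimization tractable; the projection `A` stays fixed throughout.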

InstructZero contains two folders:

- automatic_prompt_engineering: contains functions from APE; for example, you can use the functions in generate.py to estimate the cost of the whole training run. To make OpenAI querying more efficient, we call ChatGPT asynchronously, using code adapted from Graham's code.

- experiments: contains the implementation of our pipeline and the instruction-coupled kernels.
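Asynchronous querying can be sketched with `asyncio`: requests are issued concurrently, a semaphore caps how many are in flight, and `asyncio.gather` returns the answers in input order. This is a generic pattern, not the adapted code in automatic_prompt_engineering; `query_all` and `fake_chat` are hypothetical names, and `fake_chat` merely uppercases the prompt in place of a real ChatGPT request.

```python
import asyncio
from typing import Awaitable, Callable, List


async def query_all(
    prompts: List[str],
    call: Callable[[str], Awaitable[str]],
    max_concurrency: int = 8,
) -> List[str]:
    """Send all prompts concurrently, with at most max_concurrency requests in flight."""
    sem = asyncio.Semaphore(max_concurrency)

    async def one(prompt: str) -> str:
        async with sem:
            return await call(prompt)

    # gather preserves input order, so answers line up with prompts.
    return list(await asyncio.gather(*(one(p) for p in prompts)))


async def fake_chat(prompt: str) -> str:
    """Hypothetical stand-in for a ChatGPT request: waits briefly, then uppercases the prompt."""
    await asyncio.sleep(0.01)
    return prompt.upper()


if __name__ == "__main__":
    print(asyncio.run(query_all(["hello", "world"], fake_chat)))  # ['HELLO', 'WORLD']
```

The semaphore matters in practice because API providers rate-limit clients; raising `max_concurrency` speeds things up only until those limits are hit.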

- Create the environment and install all required packages as follows:

conda create -n InstructZero

conda activate InstructZero

conda install pytorch==1.13.1 torchvision==0.14.1 torchaudio==0.13.1 pytorch-cuda=11.6 -c pytorch -c nvidia

conda install botorch -c pytorch -c gpytorch -c conda-forge

pip install -r requirements.txt # install other requirements

- First, set your OpenAI API key:

export OPENAI_API_KEY=Your_KEY

- Then, run the script to reproduce the experiments:

bash experiments/run_instructzero.sh
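Since the key is passed through the `OPENAI_API_KEY` environment variable, a script can fail fast when the export step was skipped or the placeholder `Your_KEY` was left in. A minimal sketch; `get_openai_key` is a hypothetical helper, not a function from this repo.

```python
import os


def get_openai_key(env_var: str = "OPENAI_API_KEY") -> str:
    """Return the exported API key, raising if it is missing or still the README placeholder."""
    key = os.environ.get(env_var, "").strip()
    if not key or key == "Your_KEY":
        raise RuntimeError(f"{env_var} is not set; run `export {env_var}=<your key>` first.")
    return key
```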

Here we introduce the hyperparameters of our algorithm.

- instrinsic_dim: the intrinsic dimension of the low-dimensional soft prompt being optimized, i.e., the input dimension of the random projection matrix; default=10.

- soft tokens: the length (number of tokens) of the tunable prompt embeddings; you can choose from [3, 10].

- API LLMs and open-source LLMs support: currently, we only support Vicuna-13b as the open-source LLM and GPT-3.5-turbo (ChatGPT) as the API LLM. We plan to support more models next month (July): WizardLM-13b for open-source models, and Claude and GPT-4 for API LLMs.

- Why is the performance of APE quite poor on ChatGPT? Answer: we only have access to the textual output of the black-box LLM (e.g., ChatGPT), so, unlike the original APE, we cannot use the log probability as the score function in InstructZero.

- Cost of calling the ChatGPT API: on a single dataset (e.g., the EN-DE dataset), the estimated cost is $1. We will merge the cost computation function into our repo. Stay tuned!

- How long does it take to run one task? It should take less than 10 minutes.
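Because only textual outputs are available, a score has to be computed from the black-box LLM's answers themselves rather than from log probabilities. One simple text-only choice, assumed here for illustration rather than taken from the repo, is exact-match accuracy on a few input/output examples; `black_box` is a hypothetical stand-in for the ChatGPT call.

```python
from typing import Callable, Iterable, Tuple


def normalize(text: str) -> str:
    """Lowercase and collapse whitespace so trivial formatting differences don't count as errors."""
    return " ".join(text.lower().split())


def exact_match_score(
    instruction: str,
    examples: Iterable[Tuple[str, str]],
    black_box: Callable[[str, str], str],  # hypothetical: (instruction, input) -> text answer
) -> float:
    """Fraction of (input, expected_output) pairs that the black-box LLM answers exactly."""
    pairs = list(examples)
    if not pairs:
        return 0.0
    hits = sum(normalize(black_box(instruction, x)) == normalize(y) for x, y in pairs)
    return hits / len(pairs)


if __name__ == "__main__":
    def toy_llm(instruction: str, x: str) -> str:
        # Toy stand-in for ChatGPT: reverses the input only if the instruction asks for it.
        return x[::-1] if "reverse" in instruction.lower() else x

    data = [("abc", "cba"), ("ab", "ba"), ("aa", "aa")]
    print(exact_match_score("Reverse the input.", data, toy_llm))  # 1.0
    print(exact_match_score("Copy the input.", data, toy_llm))
```

A score like this needs only the returned text, which is why it works with a chat API, at the cost of being coarser than a log-probability score.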

Our codebase is based on the following repo:

Thanks for their efforts to make the code public!

Stay tuned! We will make the usage and installation of our packages as easy as possible!

Please consider citing our paper if you use our code or results. Thanks!

@article{chen2023instructzero,
  title={InstructZero: Efficient Instruction Optimization for Black-Box Large Language Models},
  author={Chen, Lichang and Chen, Jiuhai and Goldstein, Tom and Huang, Heng and Zhou, Tianyi},
  journal={arXiv preprint arXiv:2306.03082},
  year={2023}
}