Two GAAL-based outlier detection models: Single-Objective Generative Adversarial Active Learning (SO-GAAL) and Multiple-Objective Generative Adversarial Active Learning (MO-GAAL).

SO-GAAL directly generates informative potential outliers to help the classifier describe a boundary that effectively separates outliers from normal data. Moreover, to prevent the generator from suffering mode collapse, the network structure is expanded from a single generator (SO-GAAL) to multiple generators with different objectives (MO-GAAL), which together generate a reasonable reference distribution for the whole dataset.

This is our official implementation for the paper:

Liu Y, Li Z, Zhou C, et al. "Generative Adversarial Active Learning for Unsupervised Outlier Detection", arXiv:1809.10816, 2018.

(Corresponding Author: Dr. Xiangnan He)

If you use the code, please cite our paper. Thanks!

- Python 3.5

- Tensorflow (version: 1.0.1)

- Keras (version: 2.0.2)

The command-line arguments are documented in the code (see the parse_args function).

To launch the SO-GAAL quickly, you can use:

python SO-GAAL.py --path Data/onecluster --stop_epochs 1000 --lr_d 0.01 --lr_g 0.0001 --decay 1e-6 --momentum 0.9

or ./SO-GAAL.sh for short.

To launch the MO-GAAL quickly, you can use:

python MO-GAAL.py --path Data/onecluster --k 10 --stop_epochs 1500 --lr_d 0.01 --lr_g 0.0001 --decay 1e-6 --momentum 0.9

or ./MO-GAAL.sh for short.

Use python SO-GAAL.py -h or python MO-GAAL.py -h to see details of all arguments.

-h, --help show this help message and exit

--path [PATH] Input data path.

--k K Number of sub-generators.

--stop_epochs Stop training the generator after stop_epochs.

--whether_stop Whether or not to stop training the generator after stop_epochs.

--lr_d Learning rate of the discriminator.

--lr_g Learning rate of the generator.

--decay Decay.

--momentum MOMENTUM Momentum.

We provide a synthetic dataset and ten real-world datasets in Data/. The parameter settings for the 10 benchmark datasets are provided in "PARAMETERS.txt".
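As a rough illustration of how the options listed above fit together, the sketch below rebuilds an argument parser from the help text alone. It is not the repository's actual parse_args function: the argument types are guesses, and the default values are assumptions copied from the MO-GAAL quick-start command.

```python
import argparse


def parse_args(argv=None):
    """Illustrative parser reconstructed from the help text (not the repo's code)."""
    parser = argparse.ArgumentParser(description="Run SO-GAAL or MO-GAAL (sketch).")
    # Defaults below are assumptions taken from the quick-start command.
    parser.add_argument("--path", nargs="?", default="Data/onecluster",
                        help="Input data path.")
    parser.add_argument("--k", type=int, default=10,
                        help="Number of sub-generators.")
    parser.add_argument("--stop_epochs", type=int, default=1500,
                        help="Stop training the generator after stop_epochs.")
    parser.add_argument("--whether_stop", type=int, default=1,
                        help="Whether or not to stop training the generator "
                             "after stop_epochs (1 = stop, 0 = keep training).")
    parser.add_argument("--lr_d", type=float, default=0.01,
                        help="Learning rate of the discriminator.")
    parser.add_argument("--lr_g", type=float, default=0.0001,
                        help="Learning rate of the generator.")
    parser.add_argument("--decay", type=float, default=1e-6, help="Decay.")
    parser.add_argument("--momentum", type=float, default=0.9, help="Momentum.")
    return parser.parse_args(argv)


# Parse an explicit argument list instead of sys.argv for demonstration.
args = parse_args(["--path", "Data/onecluster", "--k", "10", "--stop_epochs", "1500"])
```

Passing an explicit list to parse_args keeps the example runnable without a command line; calling it with no argument reads sys.argv as usual.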

The following notes on GAAL address problems that may be encountered during experiments.

Due to the assumption that outliers are not concentrated, unsupervised outlier detection can be regarded as a density-level detection process. However, it is expensive on large datasets, and the distance between samples may suffer from the “curse of dimensionality”. Therefore, we attempt to replace the density-level detection with a classification process by sampling potential outliers (AGPO).

For a given dataset X={x_1, x_2, ..., x_n}, the initial AGPO first randomly generates n data points x’ as potential outliers. A classifier C is then trained on the new dataset to separate potential outliers x’ from the original data x. To minimize the loss function of the classifier, it should assign a higher value (approaching 1) to the original data x with higher relative density, and a lower one (approaching 0) in the opposite case. Thus, because the absolute density of potential outliers is equal everywhere, the optimized classifier can assign higher values to the original data with higher absolute density, and identify non-concentrated outliers from concentrated normal data. However, as the number of dimensions increases, the data becomes sparser. A limited number of potential outliers are randomly scattered throughout the sample space, so that the original data with low absolute density may also have a high relative density. Therefore, GAAL was proposed to generate informative potential outliers and construct a reasonable reference distribution to ensure that the relative density of the concentrated normal data is higher than that of the non-concentrated outliers.
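The data-construction step of the initial AGPO can be sketched as follows. This is a minimal illustration, not the repository's code: the function names are hypothetical, and drawing the potential outliers uniformly from the bounding box of the data is an assumption about what "randomly generates" means here. Training the classifier C on the resulting set is left out.

```python
import random


def sample_potential_outliers(data, seed=0):
    """Draw len(data) points uniformly from the bounding box of `data`.

    Assumption: "random" potential outliers are uniform over the data range.
    """
    rng = random.Random(seed)
    dims = len(data[0])
    lows = [min(row[d] for row in data) for d in range(dims)]
    highs = [max(row[d] for row in data) for d in range(dims)]
    return [[rng.uniform(lows[d], highs[d]) for d in range(dims)] for _ in data]


def build_agpo_training_set(data, seed=0):
    """Label original data 1 and generated potential outliers 0."""
    outliers = sample_potential_outliers(data, seed)
    features = [list(row) for row in data] + outliers
    targets = [1] * len(data) + [0] * len(outliers)
    return features, targets


# Three concentrated points and one isolated point.
X = [[0.1, 0.2], [0.15, 0.25], [0.12, 0.18], [0.9, 0.95]]
features, targets = build_agpo_training_set(X)
```

A classifier trained on (features, targets) would then be expected to output values near 1 where the original data is dense relative to the uniform reference, and values near 0 elsewhere.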

GAAL consists of k sub-generators and a discriminator. The sub-generators attempt to learn the generation mechanism of the original data, while the discriminator attempts to identify the generated data from the original data. Thus, the sub-generators can generate an increasing number of informative potential outliers that occur inside or close to the original dataset. Coupled with the control of the number of potential outliers generated by the sub-generators, a reasonable reference distribution can be constructed. As a result, the discriminator (like the classifier C in AGPO) can separate non-concentrated outliers from concentrated normal data.

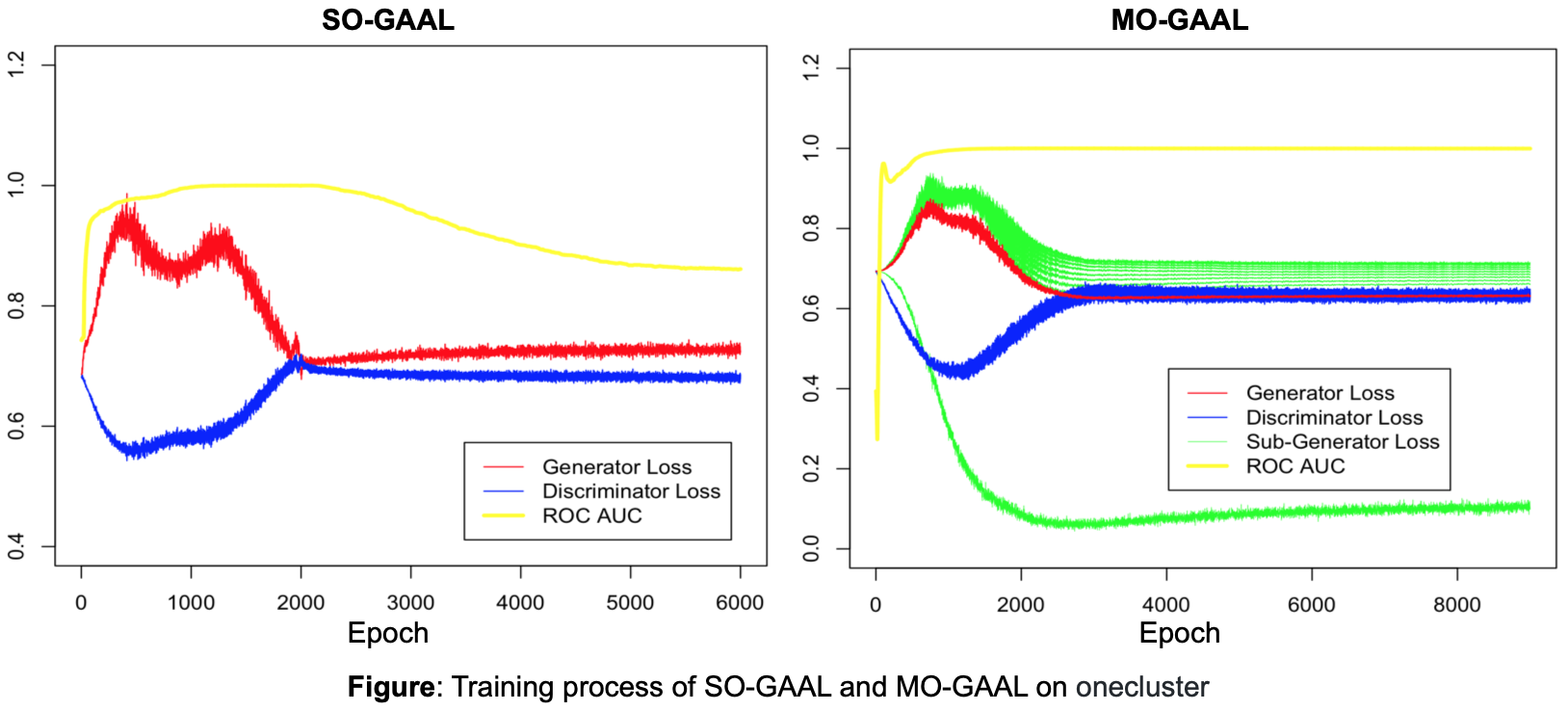

The premise for GAAL to effectively identify outliers is that the generators have basically learned the generation mechanism of the original data, so that a reasonable reference distribution can be constructed. If the training of the generator is stopped prematurely, the generated data is still randomly scattered throughout the sample space, just like the initial AGPO. Conversely, if the training is stopped too late, the generated data may cluster around part of the real data. (Compared to SO-GAAL, MO-GAAL alleviates this problem to a large extent, but it is still necessary to stop the training.) Therefore, the setting of the stop node (i.e., the “stop_epochs”) is crucial.

Since the dimensionality and size of datasets differ significantly, the appropriate “stop_epochs” also varies widely. The paper states that GAAL should stop training the generators when the decrease in their loss slows down. We provide parameter settings for the 10 benchmark datasets in "PARAMETERS.txt". To set "stop_epochs" for a new dataset, first set "whether_stop" to 0 and "stop_epochs" to a reasonable value, then observe the iteration at which the decrease in the generator loss slows down. If this happens at iteration I (e.g., I=100), “stop_epochs” should be set to ⌈I/⌈n/500⌉⌉, where n is the number of data points. In addition, in a follow-up study (https://doi.org/10.1145/3522690), we further expand the structure of GAAL from multiple-objective sub-generators (MO-GAAL) to multiple sub-GANs (MGAAL) and introduce an evaluation indicator, the Nearest Neighbor Ratio (NNR), to evaluate the training status of the sub-generators.
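The rule ⌈I/⌈n/500⌉⌉ can be computed with a small helper like the one below. The function name is hypothetical, and reading ⌈n/500⌉ as the number of mini-batches per epoch with a batch size of 500 is an interpretation of the formula rather than something stated above.

```python
import math


def stop_epochs_from_iteration(slowdown_iteration, n_samples, batch_size=500):
    """Convert the iteration I at which the generator loss flattens into epochs.

    Implements ceil(I / ceil(n / 500)). Treating 500 as a batch size is an
    assumption made for readability.
    """
    iterations_per_epoch = math.ceil(n_samples / batch_size)
    return math.ceil(slowdown_iteration / iterations_per_epoch)


# With I = 100 and n = 1000 data points: ceil(1000/500) = 2, ceil(100/2) = 50.
epochs_small = stop_epochs_from_iteration(100, 1000)
# With I = 100 and n = 5200 data points: ceil(5200/500) = 11, ceil(100/11) = 10.
epochs_large = stop_epochs_from_iteration(100, 5200)
```

For datasets with at most 500 points the inner ceiling is 1, so "stop_epochs" simply equals I.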

SO-GAAL (k=1) contains one generator, and its accuracy drops dramatically when the mini-max game reaches a Nash equilibrium. The reason is that all informative potential outliers occur inside or close to part of the real data as the training progresses, causing some normal examples to confront a higher-density reference distribution than outliers. Therefore, to prevent this problem, the structure of SO-GAAL is extended from a single generator to multiple generators (MO-GAAL, k>1).

The central idea of MO-GAAL is to have each sub-generator actively learn the generation mechanism of the data in a specific subset of the real data. In addition, to avoid generating a distribution identical to that of the original data, more potential outliers need to be generated for the less concentrated subsets. Thus, as the training progresses, the integration of different numbers of potential outliers generated by different sub-generators can provide a reasonable reference distribution for the whole dataset.

GAAL is an unsupervised outlier detection algorithm without any labels. The labels contained in the data are removed before training ("y = data.pop(1)"). They are only used for model evaluation, i.e., the calculation of the AUC score.
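To make the evaluation step concrete, here is a dependency-free sketch of an AUC calculation using pairwise comparisons. It is not the repository's evaluation code. It assumes that the discriminator outputs values close to 1 for normal data, so that 1 minus the output can serve as an outlier score; the variable names and the toy values are made up for illustration.

```python
def auc_score(is_outlier, outlier_scores):
    """AUC as the fraction of (outlier, normal) pairs ranked correctly.

    Ties between an outlier and a normal point count as half a correct pair.
    """
    pos = [s for s, y in zip(outlier_scores, is_outlier) if y]
    neg = [s for s, y in zip(outlier_scores, is_outlier) if not y]
    if not pos or not neg:
        raise ValueError("AUC needs at least one outlier and one normal point.")
    correct = 0.0
    for p in pos:
        for q in neg:
            if p > q:
                correct += 1.0
            elif p == q:
                correct += 0.5
    return correct / (len(pos) * len(neg))


# Labels are held out during training and used only here.
labels = [0, 1, 0, 1]                       # 1 marks a true outlier
discriminator_out = [0.9, 0.7, 0.65, 0.2]   # made-up discriminator outputs
scores = [1.0 - p for p in discriminator_out]  # assumption: low output = outlier
auc = auc_score(labels, scores)
```

In this toy case three of the four outlier/normal pairs are ordered correctly, because one outlier receives a lower outlier score than one normal point.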

The visual representation of the division boundary (Fig. 8) first divides the sample space into 100*100 small pixels. Then, the coordinates of these pixels are input into the discriminator to get the outlier scores. Finally, pixels with different outlier scores are displayed in different colors. Furthermore, although GAAL can achieve satisfactory results with parameter settings in the paper, the discriminator requires more iterations to describe the complex division boundary. A larger learning rate and more neurons for the discriminator are helpful.
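The pixel-grid procedure described above can be sketched as follows. The toy_discriminator function is a hypothetical stand-in for the trained discriminator, and evaluating each pixel at its center is an assumption; a plotting library would then map the resulting score matrix to colors.

```python
import math


def make_grid(x_range, y_range, resolution=100):
    """Return a resolution x resolution grid of pixel-center coordinates."""
    x_min, x_max = x_range
    y_min, y_max = y_range
    x_step = (x_max - x_min) / resolution
    y_step = (y_max - y_min) / resolution
    return [
        [(x_min + (i + 0.5) * x_step, y_min + (j + 0.5) * y_step)
         for i in range(resolution)]
        for j in range(resolution)
    ]


def score_grid(grid, score_fn):
    """Apply a scoring function to every pixel coordinate."""
    return [[score_fn(point) for point in row] for row in grid]


def toy_discriminator(point):
    """Hypothetical stand-in: high output near (0.5, 0.5), low elsewhere."""
    x, y = point
    return math.exp(-((x - 0.5) ** 2 + (y - 0.5) ** 2) / 0.02)


grid = make_grid((0.0, 1.0), (0.0, 1.0))
scores = score_grid(grid, toy_discriminator)
```

Each entry of scores corresponds to one pixel, so the matrix can be passed directly to an image-style plotting function to display the division boundary.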