This repository contains code and results for comparing several GAN architectures.

- Install pipenv using the command `pip install pipenv`.
- Install the project dependencies by running `pipenv install` in the project directory. This creates a virtual environment and installs the dependencies into it.

The Jupyter notebook for this project is at `notebooks/project_gans.ipynb`. It should be straightforward to run, since the code is well commented and explained.

In the project directory, use the following commands:

- For training: `cg train`
- For generating samples: `cg generate_samples`

Run `cg --help` for an overview of what each command does.

The file `config.yaml` stores the configuration of the classifier.

Note: To compute the FID scores, I used the following repo: https://github.com/mseitzer/pytorch-fid
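For context, pytorch-fid extracts Inception-v3 features for real and generated images, fits a Gaussian to each set, and reports the Fréchet distance between the two Gaussians: ||mu_r - mu_g||^2 + Tr(Sigma_r + Sigma_g - 2 (Sigma_r Sigma_g)^(1/2)). Lower is better. The sketch below covers only that final formula, assuming the feature matrices are already computed; the function names are hypothetical and are not pytorch-fid's API. pytorch-fid computes the matrix square root with `scipy.linalg.sqrtm`, whereas this sketch uses a NumPy-only eigenvalue shortcut for the trace term.

```python
import numpy as np


def frechet_distance(mu1, sigma1, mu2, sigma2):
    """Fréchet distance between N(mu1, sigma1) and N(mu2, sigma2) (hypothetical helper)."""
    mu1 = np.atleast_1d(np.asarray(mu1, dtype=float))
    mu2 = np.atleast_1d(np.asarray(mu2, dtype=float))
    sigma1 = np.atleast_2d(np.asarray(sigma1, dtype=float))
    sigma2 = np.atleast_2d(np.asarray(sigma2, dtype=float))
    diff = mu1 - mu2
    # sigma1 @ sigma2 has the same eigenvalues as the PSD matrix
    # sqrt(sigma1) @ sigma2 @ sqrt(sigma1), so they are real and non-negative
    # (up to round-off). Tr((sigma1 @ sigma2)^(1/2)) is the sum of their square roots.
    eigvals = np.linalg.eigvals(sigma1 @ sigma2)
    tr_covmean = np.sqrt(np.clip(eigvals.real, 0.0, None)).sum()
    return float(diff @ diff + np.trace(sigma1) + np.trace(sigma2) - 2.0 * tr_covmean)


def fid_from_features(feats_real, feats_fake):
    """FID from two (n_samples, n_features) feature matrices (hypothetical helper)."""
    feats_real = np.asarray(feats_real, dtype=float)
    feats_fake = np.asarray(feats_fake, dtype=float)
    mu1, sigma1 = feats_real.mean(axis=0), np.cov(feats_real, rowvar=False)
    mu2, sigma2 = feats_fake.mean(axis=0), np.cov(feats_fake, rowvar=False)
    return frechet_distance(mu1, sigma1, mu2, sigma2)
```

For example, `frechet_distance([0, 0], np.eye(2), [3, 4], np.eye(2))` returns 25.0: the covariances are identical, so only the squared distance between the means contributes.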

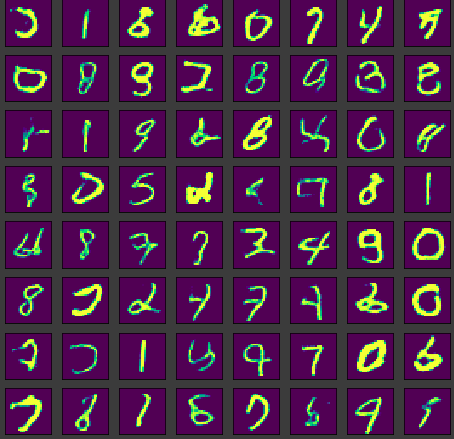

MNIST dataset |

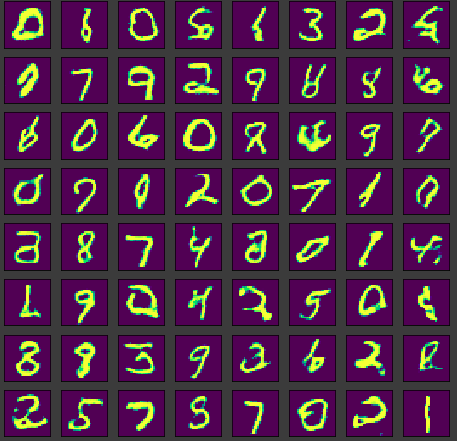

Fashion-MNIST dataset |

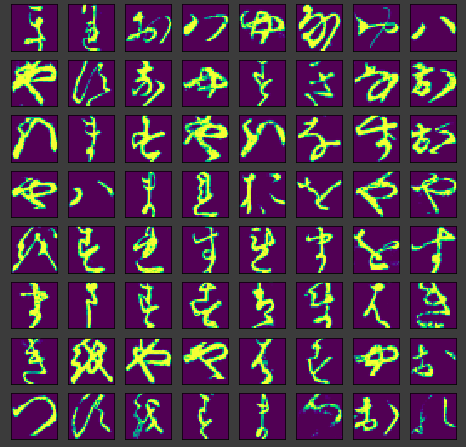

KMNIST dataset |

LSGAN |

WGAN |

WGAN-GP |

Note: The samples are generated randomly and are not cherry-picked.

Note: All FID scores are reported using the same generator and discriminator architectures.

For the MNIST dataset, the FID scores for each architecture are (lower is better):

| Architecture | FID Score | Dataset | Number of Samples |
|---|---|---|---|
| DCGAN | 10.8589 | MNIST | 8k |
| LSGAN | 10.9903 | MNIST | 8k |
| WGAN with weight clipping | 9.4645 | MNIST | 8k |
| WGAN with gradient penalty | 17.69 | MNIST | 8k |

The discriminator and generator architectures were held fixed across all runs, as were other hyperparameters such as the learning rate and the optimizer. Every model was trained for 50 epochs.

In this setting, WGAN with weight clipping achieves the best (lowest) FID score on MNIST.
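Since the architecture and hyperparameters were fixed, the rows of the table differ mainly in their training objectives. The NumPy sketch below writes out the standard losses from the DCGAN (non-saturating GAN loss), LSGAN, WGAN, and WGAN-GP papers on raw discriminator outputs, to make that difference concrete. It assumes the repository follows these textbook formulations; its actual PyTorch implementation may differ. The function names are hypothetical, and `c=0.01` and `lam=10.0` are the defaults from the WGAN and WGAN-GP papers, not necessarily the values used here.

```python
import numpy as np


def dcgan_losses(d_real, d_fake):
    # Standard GAN loss on logits. log(sigmoid(x)) = -logaddexp(0, -x) and
    # log(1 - sigmoid(x)) = -logaddexp(0, x), written this way for numerical stability.
    d_real, d_fake = np.asarray(d_real, float), np.asarray(d_fake, float)
    d_loss = np.mean(np.logaddexp(0.0, -d_real)) + np.mean(np.logaddexp(0.0, d_fake))
    # Non-saturating generator loss: maximise log D(G(z)).
    g_loss = np.mean(np.logaddexp(0.0, -d_fake))
    return d_loss, g_loss


def lsgan_losses(d_real, d_fake):
    # Least-squares targets: 1 for real, 0 for fake; the generator targets 1.
    d_real, d_fake = np.asarray(d_real, float), np.asarray(d_fake, float)
    d_loss = 0.5 * np.mean((d_real - 1.0) ** 2) + 0.5 * np.mean(d_fake ** 2)
    g_loss = 0.5 * np.mean((d_fake - 1.0) ** 2)
    return d_loss, g_loss


def wgan_losses(d_real, d_fake):
    # The critic maximises E[D(x)] - E[D(G(z))], so it minimises the negation.
    d_real, d_fake = np.asarray(d_real, float), np.asarray(d_fake, float)
    d_loss = np.mean(d_fake) - np.mean(d_real)
    g_loss = -np.mean(d_fake)
    return d_loss, g_loss


def clip_weights(params, c=0.01):
    # WGAN (weight clipping): clamp every critic parameter to [-c, c] after each critic step.
    return [np.clip(p, -c, c) for p in params]


def gradient_penalty(grad_norms, lam=10.0):
    # WGAN-GP: lam * E[(||grad D(x_hat)||_2 - 1)^2], with x_hat random interpolates of
    # real and fake samples. The per-sample gradient norms need an autograd framework,
    # so this sketch takes them as input.
    return lam * np.mean((np.asarray(grad_norms, float) - 1.0) ** 2)
```

The WGAN-GP critic loss is `wgan_losses(...)[0] + gradient_penalty(...)`; the penalty replaces weight clipping as the way of keeping the critic approximately 1-Lipschitz.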

- Source dataset: MNIST
- Target dataset: Fashion-MNIST
- GAN architecture: WGAN with gradient penalty

| Architecture | FID Score (Scratch) | FID Score (Fine-tuned) | Dataset | Number of Samples | Epochs | Transferred Networks |
|---|---|---|---|---|---|---|
| DCGAN | 23.2419 | 23.3011 | Fashion-MNIST | 8k | 50 | Generator only |
| WGAN with gradient penalty | 36.5917 | 31.38651 | Fashion-MNIST | 8k | 20 | Both |
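To quantify the effect of fine-tuning in the table above, the snippet below computes the relative FID change (fine-tuned versus scratch) from the reported numbers only; the names `results` and `relative_change` are illustrative. Note that the two rows also differ in architecture and number of epochs, so this comparison alone does not isolate the effect of transferring both networks.

```python
# FID scores copied from the transfer-learning table above (lower is better).
results = {
    "DCGAN (generator only, 50 epochs)": (23.2419, 23.3011),
    "WGAN-GP (both networks, 20 epochs)": (36.5917, 31.38651),
}


def relative_change(scratch, finetuned):
    """Relative FID change from fine-tuning; negative means fine-tuning helped."""
    return (finetuned - scratch) / scratch


for name, (scratch, finetuned) in results.items():
    print(f"{name}: {relative_change(scratch, finetuned):+.2%}")
```

This prints `+0.25%` for DCGAN (fine-tuning slightly hurt) and `-14.23%` for WGAN-GP (fine-tuning helped).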

These results suggest that:

- Fine-tuning works best when both the generator and the discriminator are transferred.
- Fine-tuning may work even when the source and target datasets are unrelated, as in this case.

Other observations:

- I experimented with the Mish and Swish activation functions in the generator and the discriminator; GAN training was very unstable and produced no plausible samples.
- Using `leaky_relu` in the discriminator and `swish` in the generator produces some plausible samples, but they are not good enough. These samples are shown below.
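For reference, the activation functions mentioned in these experiments are sketched below in NumPy, using their standard definitions. The function names are hypothetical, and the repository's settings (for example the leaky ReLU slope or a Swish `beta`) are not stated here, so the defaults are assumptions.

```python
import numpy as np


def swish(x, beta=1.0):
    # Swish: x * sigmoid(beta * x); with beta = 1 this is also known as SiLU.
    x = np.asarray(x, dtype=float)
    return x / (1.0 + np.exp(-beta * x))


def mish(x):
    # Mish: x * tanh(softplus(x)), where softplus(x) = log(1 + exp(x)).
    x = np.asarray(x, dtype=float)
    return x * np.tanh(np.logaddexp(0.0, x))


def leaky_relu(x, negative_slope=0.01):
    # Leaky ReLU: x for x > 0, negative_slope * x otherwise.
    # 0.01 is PyTorch's default; DCGAN-style discriminators commonly use 0.2.
    x = np.asarray(x, dtype=float)
    return np.where(x > 0, x, negative_slope * x)
```

Unlike leaky ReLU, Swish and Mish are smooth and non-monotonic (slightly negative for small negative inputs), which is one plausible reason they change GAN training dynamics.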

Kushagra Pandey / @kpandey008