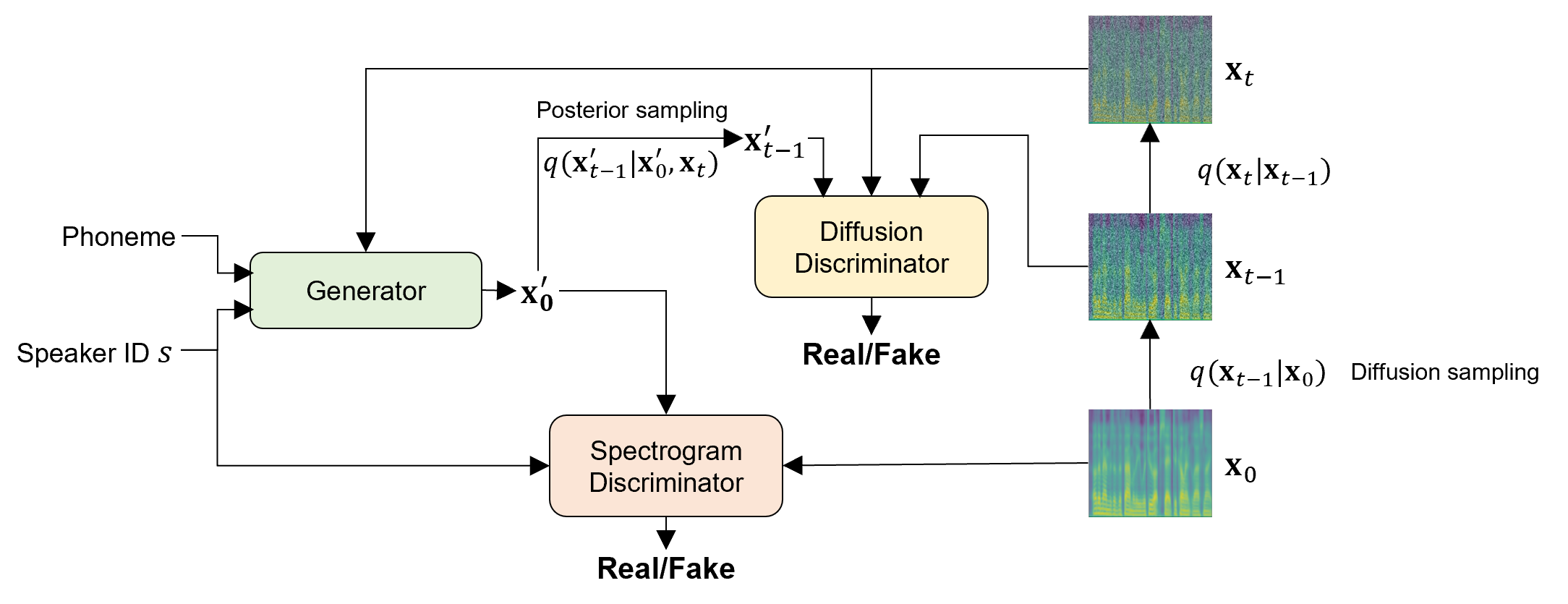

PyTorch implementation of Adversarial Training of Denoising Diffusion Model Using Dual Discriminators for High-Fidelity Multi-Speaker TTS

This project is based on keonlee9420's implementation of DiffGAN-TTS.

Audio samples are available at the following link.

You can install the Python dependencies with

pip3 install -r requirements.txt

To synthesize a single utterance, run

python3 synthesize.py --text "YOUR_DESIRED_TEXT" --speaker_id SPEAKER_ID --restore_step RESTORE_STEP --mode single --dataset DATASET

The dictionary of learned speakers can be found at preprocessed_data/DATASET/speakers.json, and the generated utterances will be put in output/result/.
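If you know a VCTK speaker name (e.g. p225) but not its index, a small helper can translate it into the integer passed as --speaker_id. This is a sketch that assumes speakers.json is a flat JSON object mapping speaker names to integer IDs; the helper names are hypothetical and not part of this repository.

```python
import json
from pathlib import Path
from typing import Dict


def load_speaker_map(dataset: str, root: str = "preprocessed_data") -> Dict[str, int]:
    # Assumption: speakers.json is a flat {"speaker_name": integer_id} object
    path = Path(root) / dataset / "speakers.json"
    with path.open(encoding="utf-8") as f:
        return json.load(f)


def speaker_id_for(speakers: Dict[str, int], name: str) -> int:
    # Return the value to pass as --speaker_id; fail loudly on unknown names
    if name not in speakers:
        raise KeyError(f"unknown speaker {name!r}; first known: {sorted(speakers)[:5]}")
    return speakers[name]
```

For example, speaker_id_for(load_speaker_map("VCTK"), "p225") would return the ID of speaker p225 under that assumption.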

Batch inference is also supported; try

python3 synthesize.py --source preprocessed_data/DATASET/val.txt --restore_step RESTORE_STEP --mode batch --dataset DATASET

to synthesize all utterances in preprocessed_data/DATASET/val.txt.
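Each line of val.txt describes one utterance. Assuming the pipe-separated basename|speaker|{phonemes}|raw text layout used by FastSpeech2-style preprocessing (verify this against your own file), the sketch below parses the lines and counts utterances per speaker, which gives a rough idea of how much output batch mode will produce. The helper names are hypothetical.

```python
from collections import Counter
from typing import List, NamedTuple


class Utterance(NamedTuple):
    basename: str
    speaker: str
    phonemes: List[str]
    raw_text: str


def parse_metadata_line(line: str) -> Utterance:
    # Assumed layout: basename|speaker|{PH ON EMES}|raw text (raw text may contain '|')
    basename, speaker, phones, raw_text = line.rstrip("\n").split("|", 3)
    return Utterance(basename, speaker, phones.strip("{}").split(), raw_text)


def utterances_per_speaker(lines: List[str]) -> Counter:
    # Count how many utterances each speaker contributes, skipping blank lines
    return Counter(parse_metadata_line(line).speaker for line in lines if line.strip())
```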

The pitch/volume/speaking rate of the synthesized utterances can be controlled by specifying the desired pitch/energy/duration ratios. For example, one can speed up speech by shortening the phoneme durations by 20% and decrease the volume by 20% with

python3 synthesize.py --text "YOUR_DESIRED_TEXT" --model MODEL --restore_step RESTORE_STEP --mode single --dataset DATASET --duration_control 0.8 --energy_control 0.8
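As a rough illustration of what --duration_control does, the sketch below scales hypothetical per-phoneme frame durations and rounds them to whole frames. It is modeled loosely on FastSpeech2-style length regulation; the function names are hypothetical, and the repository's actual code may differ (for example, in how predicted log-durations are converted and clamped). Note that a ratio of 0.8 shortens every phoneme by 20%, which makes speech about 1.25 times as fast.

```python
from typing import List


def apply_duration_control(durations: List[float], d_control: float) -> List[int]:
    """Scale per-phoneme durations (in mel frames) by d_control.

    Ratios below 1.0 shorten every phoneme (faster speech); above 1.0 lengthen it.
    """
    # Round to whole frames and never allow a negative duration
    return [max(0, round(d * d_control)) for d in durations]


def speaking_rate_change(d_control: float) -> float:
    # Total length scales by d_control, so the rate scales by 1 / d_control
    return 1.0 / d_control - 1.0
```

With durations [3.0, 5.0, 2.0] and a ratio of 0.8, the utterance shrinks from 10 to 8 frames.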

Please note that this controllability originates from FastSpeech2 and is not a primary focus of this model.

The supported dataset is

- VCTK: The CSTR VCTK Corpus includes speech data uttered by 110 English speakers (multi-speaker TTS) with various accents. Each speaker reads out about 400 sentences, which were selected from a newspaper, the rainbow passage and an elicitation paragraph used for the speech accent archive.

You can add another dataset by adapting the config files in /config/VCTK.

If you want to train on a single-speaker dataset, change the multi_speaker option in model.yaml.

- Before running the preprocessing code, set your dataset's location in the corpus_path option of preprocess.yaml.

- For multi-speaker TTS with an external speaker embedder, download the ResCNN Softmax+Triplet pretrained model of philipperemy's DeepSpeaker for the speaker embedding and place it in ./deepspeaker/pretrained_models/.

- Run python3 prepare_align.py --dataset DATASET to prepare the data for alignment.
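A quick preflight check can catch a wrong corpus_path or a missing speaker model before the preprocessing scripts run. The helper below is hypothetical and not part of the repository: it only checks that the corpus directory exists and, when the DeepSpeaker embedder is used, that ./deepspeaker/pretrained_models/ is a non-empty directory. It does not verify the checkpoint's file name or contents.

```python
from pathlib import Path
from typing import List


def missing_prerequisites(corpus_path: str,
                          speaker_model_dir: str = "./deepspeaker/pretrained_models",
                          use_deepspeaker: bool = True) -> List[str]:
    """Return problems to fix before preprocessing (an empty list means ready)."""
    problems = []
    if not Path(corpus_path).is_dir():
        problems.append(f"corpus_path does not exist: {corpus_path}")
    model_dir = Path(speaker_model_dir)
    # Only the external-embedder setup needs the pretrained DeepSpeaker model
    if use_deepspeaker and (not model_dir.is_dir() or not any(model_dir.iterdir())):
        problems.append(f"no pretrained DeepSpeaker model found in {model_dir}")
    return problems
```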

For the forced alignment, the Montreal Forced Aligner (MFA) is used to obtain the alignments between the utterances and the phoneme sequences. Pre-extracted alignments for the datasets are provided here. Unzip the files into preprocessed_data/DATASET/TextGrid/. Alternatively, you can run the aligner yourself.

After that, run the preprocessing script with

python3 preprocess.py --dataset DATASET
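The MFA alignments give each phoneme a start and end time in seconds, while the model works with mel-spectrogram frames. The sketch below shows one common way to convert intervals into per-phoneme frame counts, in the style of FastSpeech2 preprocessing: round both interval boundaries to the frame grid and take the difference, so the counts add up to the total length. The sampling rate 22050 and hop length 256 are assumed typical values; take the real ones from preprocess.yaml. Reading the TextGrid file and trimming leading/trailing silence are left out, and the function name is hypothetical.

```python
from typing import List, Tuple

Interval = Tuple[float, float, str]  # (start_sec, end_sec, phone)


def intervals_to_frame_durations(intervals: List[Interval],
                                 sampling_rate: int = 22050,
                                 hop_length: int = 256) -> List[int]:
    """Convert aligned phone intervals (in seconds) into mel-frame counts per phone."""
    durations = []
    for start, end, _phone in intervals:
        # Snap both boundaries to the frame grid so per-phone counts sum to the total
        start_frame = round(start * sampling_rate / hop_length)
        end_frame = round(end * sampling_rate / hop_length)
        durations.append(end_frame - start_frame)
    return durations
```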

Train with

python3 train.py --dataset DATASET

Restore training status:

To restore the training status from a checkpoint, you must pass --restore_step with the step of the auxiliary FastSpeech2 training, as in the following command.

python3 train.py --restore_step RESTORE_STEP --dataset DATASET

For example, if the last checkpoint was saved at step 200000, set --restore_step to 200000. The script then loads and freezes the auxiliary model and continues training under the active shallow diffusion mechanism.
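To find the number to pass as --restore_step, one can look for the highest step among the saved checkpoints. This sketch assumes checkpoints are saved as <step>.pth.tar files in a single directory; check the checkpoint directory and file names your config actually produces before relying on it. The function name is hypothetical.

```python
import re
from pathlib import Path
from typing import Optional

# Assumed naming scheme: one file per saved step, e.g. "200000.pth.tar"
CKPT_PATTERN = re.compile(r"^(\d+)\.pth\.tar$")


def latest_checkpoint_step(ckpt_dir: str) -> Optional[int]:
    """Return the largest saved step in ckpt_dir, or None if nothing matches."""
    steps = [
        int(m.group(1))
        for p in Path(ckpt_dir).glob("*.pth.tar")
        if (m := CKPT_PATTERN.match(p.name))
    ]
    return max(steps, default=None)
```

The returned step can then be used as RESTORE_STEP in the command above.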

Use

tensorboard --logdir output/log/DATASET

to serve TensorBoard on your localhost. The loss curves, synthesized mel-spectrograms, and audio samples are shown there.

- In addition to the Diffusion Decoder, the Variance Adaptor is also conditioned on speaker information.

- Two options for speaker embedding in the multi-speaker TTS setting: training a speaker embedder from scratch or using philipperemy's pre-trained DeepSpeaker model (as STYLER did). You can toggle between them by setting the config to 'none' or 'DeepSpeaker'.
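The two embedding options and the speaker conditioning can be pictured with the plain-Python sketch below. It is an illustration under assumptions, not this repository's code: it assumes the 'none' option uses a learned lookup table indexed by speaker ID, that the 'DeepSpeaker' option linearly projects a precomputed embedding to the model's hidden size, and that the resulting speaker vector is added to every time step of the hidden sequence that feeds the Variance Adaptor and Diffusion Decoder. All names are hypothetical; the real model works with learned PyTorch tensors.

```python
from typing import List, Optional

Vector = List[float]
Matrix = List[Vector]


def linear(weight: Matrix, x: Vector) -> Vector:
    # Plain-Python matrix-vector product; weight has shape [out_dim][in_dim]
    return [sum(w * xi for w, xi in zip(row, x)) for row in weight]


def speaker_vector(embedder: str, speaker_id: int, table: Matrix,
                   external_emb: Optional[Vector] = None,
                   proj: Optional[Matrix] = None) -> Vector:
    """Pick one utterance's speaker representation (hypothetical helper)."""
    if embedder == "none":
        # Embedder trained from scratch: one learned row per speaker ID
        return table[speaker_id]
    if embedder == "DeepSpeaker":
        # Precomputed DeepSpeaker embedding projected to the hidden size
        if external_emb is None or proj is None:
            raise ValueError("DeepSpeaker mode needs an embedding and a projection")
        return linear(proj, external_emb)
    raise ValueError(f"unknown speaker embedder: {embedder!r}")


def add_speaker(hidden: Matrix, spk: Vector) -> Matrix:
    # Broadcast-add the speaker vector to every time step of the hidden sequence
    return [[h + s for h, s in zip(frame, spk)] for frame in hidden]
```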

@article{ko2023adversarial,
  title={Adversarial Training of Denoising Diffusion Model Using Dual Discriminators for High-Fidelity Multi-Speaker TTS},
  author={Ko, Myeongjin and Choi, Yong-Hoon},
  journal={arXiv preprint arXiv:2308.01573},
  year={2023}
}