Project page for the paper:

Intentonomy: a Dataset and Study towards Human Intent Understanding, CVPR 2021 (oral)

We introduce a human intent dataset, Intentonomy, containing 14K images that are manually annotated with 28 intent categories, organized in a hierarchy by psychology experts. See DATA.md for how to download the dataset.

We employed a "game with a purpose" approach to acquire the intent annotations from Amazon Mechanical Turk workers. See this link for a further demonstration. See Appendix C in our paper for details.

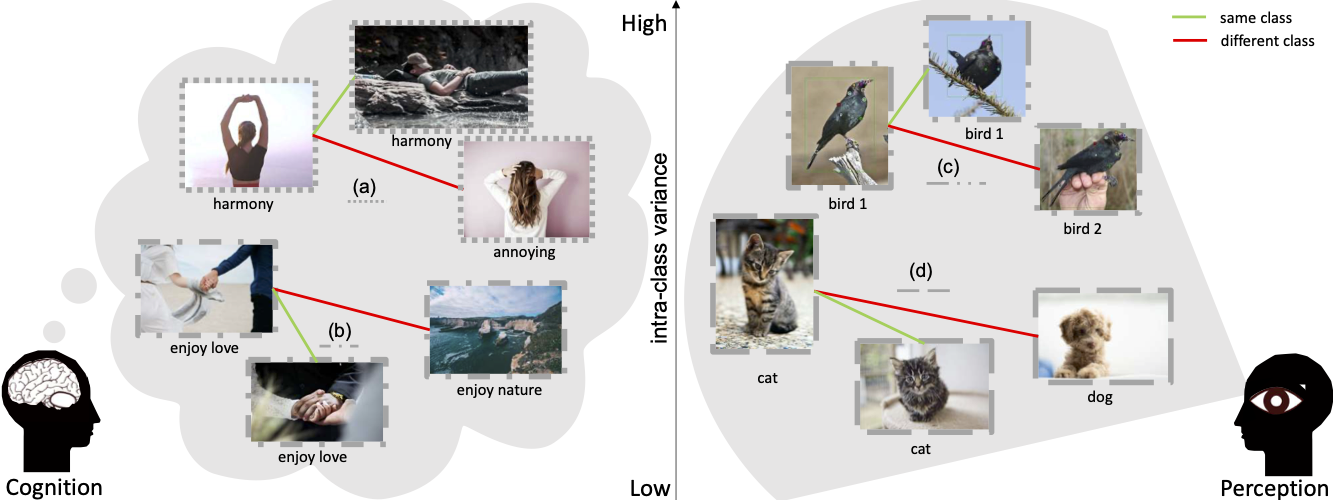

To investigate the intangible and subtle connection between visual content and intent, we present a systematic study to evaluate how the performance of intent recognition changes as a function of (a) the amount of object/context information; (b) the properties of object/context, including geometry, resolution and texture. Our study suggests that:

- different intent categories rely on different sets of objects and scenes for recognition;

- however, for some classes that we observed to have large intra-class variations, visual content provides a negligible boost to performance;

- attending to relevant object and scene classes is beneficial for recognizing intent.

We introduce a framework that uses weakly supervised localization and an auxiliary hashtag modality to narrow the gap between human and machine understanding of images. We provide the results of our baseline model below.

We provide the implementation of the proposed localization loss in loc_loss.py, where the default parameters are the ones we used in the paper. Download the masks for our images (518M) here and update the MASK_ROOT in the script.

Note that you will need the cv2 and pycocotools libraries to use Localizationloss. Other notes are included in loc_loss.py.
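
Before running the script, it can help to confirm that both dependencies are importable. The snippet below is a minimal sketch and is not part of loc_loss.py; the helper name `missing_dependencies` is hypothetical, and the pip package names (`opencv-python` for `cv2`, `pycocotools` for `pycocotools`) are the usual PyPI names rather than something this repository specifies.

```python
import importlib.util

# Module name -> usual PyPI package name (assumed; not specified by this repo).
REQUIRED = {"cv2": "opencv-python", "pycocotools": "pycocotools"}


def missing_dependencies(required=REQUIRED):
    """Return the pip names of required modules that cannot be imported."""
    return [
        pip_name
        for module, pip_name in required.items()
        if importlib.util.find_spec(module) is None
    ]


if __name__ == "__main__":
    missing = missing_dependencies()
    if missing:
        print("Missing packages, try: pip install " + " ".join(missing))
    else:
        print("cv2 and pycocotools are available.")
```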

We break down the intent classes into different subsets based on:

- content dependency: i.e., object-dependent (O-classes), context-dependent (C-classes), and Others, which depend on both foreground and background information;

- difficulty: how much the VISUAL results outperform the RANDOM results (“easy”, “medium” and “hard”).

See Appendix A in our paper for details.


Validation set results:

| | Macro F1 | Micro F1 | Samples F1 |
|---|---|---|---|
| VISUAL | 23.03 | 31.36 | 29.91 |
| VISUAL + | 24.42 | 32.87 | 32.46 |
| VISUAL + | 25.07 | 32.94 | 33.61 |

Test set results:

| | Macro F1 | Micro F1 | Samples F1 |
|---|---|---|---|
| VISUAL | 22.77 | 30.23 | 28.45 |
| VISUAL + | 24.37 | 32.07 | 30.91 |
| VISUAL + | 23.98 | 31.28 | 31.39 |
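
For readers unfamiliar with the three columns, the sketch below shows how Macro, Micro, and Samples F1 are commonly defined for multi-label predictions. It is an illustration and not the evaluation code used for the paper: the function name `multilabel_f1` is hypothetical, inputs are assumed to be binary indicator rows (one row per image, one column per intent class), and an undefined F1 (no true or predicted positives) is assumed to count as 0. Scores come out in the range 0 to 1, whereas the tables report percentages.

```python
from typing import Dict, Sequence


def _f1(tp: int, fp: int, fn: int) -> float:
    """F1 from raw counts; defined as 0.0 when there are no positives at all."""
    denom = 2 * tp + fp + fn
    return 2 * tp / denom if denom else 0.0


def multilabel_f1(
    y_true: Sequence[Sequence[int]], y_pred: Sequence[Sequence[int]]
) -> Dict[str, float]:
    """Compute macro, micro, and samples F1 for binary indicator matrices."""
    n_classes = len(y_true[0])
    tp = [0] * n_classes
    fp = [0] * n_classes
    fn = [0] * n_classes
    per_sample = []

    for true_row, pred_row in zip(y_true, y_pred):
        s_tp = s_fp = s_fn = 0
        for c, (t, p) in enumerate(zip(true_row, pred_row)):
            if t and p:
                tp[c] += 1
                s_tp += 1
            elif p:
                fp[c] += 1
                s_fp += 1
            elif t:
                fn[c] += 1
                s_fn += 1
        # Samples F1: one F1 score per image, over that image's labels.
        per_sample.append(_f1(s_tp, s_fp, s_fn))

    return {
        # Macro F1: unweighted mean of per-class F1.
        "macro": sum(_f1(a, b, c) for a, b, c in zip(tp, fp, fn)) / n_classes,
        # Micro F1: F1 over counts pooled across all classes.
        "micro": _f1(sum(tp), sum(fp), sum(fn)),
        "samples": sum(per_sample) / len(per_sample),
    }


if __name__ == "__main__":
    # Toy data: 2 images, 3 classes (values are made up for illustration).
    truth = [[1, 1, 0], [0, 1, 0]]
    preds = [[1, 0, 0], [0, 1, 1]]
    print(multilabel_f1(truth, preds))
```

Macro F1 weights every class equally, so rare intent classes count as much as frequent ones, while Micro F1 is dominated by frequent classes.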

By content dependency:

| | object | context | other |
|---|---|---|---|
| VISUAL | 25.58 | 30.16 | 21.34 |
| VISUAL + | 28.15 | 28.62 | 22.60 |
| VISUAL + | 29.66 | 32.48 | 22.61 |

By difficulty:

| | easy | medium | hard |
|---|---|---|---|
| VISUAL | 54.64 | 24.92 | 10.71 |
| VISUAL + | 57.10 | 25.68 | 12.72 |
| VISUAL + | 58.86 | 26.30 | 13.11 |
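
A breakdown like the ones above can be produced by grouping per-class scores by subset. The sketch below assumes each subset score is the unweighted mean of per-class F1 over the classes in that subset; the paper's exact aggregation may differ (see Appendix A). The function name `subset_mean_f1`, the class names, and the scores are hypothetical placeholders, not values from the dataset.

```python
from collections import defaultdict
from typing import Dict


def subset_mean_f1(
    per_class_f1: Dict[str, float], class_to_subset: Dict[str, str]
) -> Dict[str, float]:
    """Average per-class F1 within each subset (e.g. easy / medium / hard)."""
    buckets = defaultdict(list)
    for class_name, score in per_class_f1.items():
        buckets[class_to_subset[class_name]].append(score)
    return {subset: sum(scores) / len(scores) for subset, scores in buckets.items()}


if __name__ == "__main__":
    # Placeholder classes and scores, for illustration only.
    f1_by_class = {"class_a": 0.5, "class_b": 0.3, "class_c": 0.1}
    subset_of = {"class_a": "easy", "class_b": "easy", "class_c": "hard"}
    print(subset_mean_f1(f1_by_class, subset_of))
```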

If you find our work helpful in your research, please cite it as:

@inproceedings{jia2021intentonomy,
  title={Intentonomy: a Dataset and Study towards Human Intent Understanding},
  author={Jia, Menglin and Wu, Zuxuan and Reiter, Austin and Cardie, Claire and Belongie, Serge and Lim, Ser-Nam},
  booktitle={CVPR},
  year={2021}
}

We thank Luke Chesser and Timothy Carbone from Unsplash for providing the images, Kimberly Wilber and Bor-chun Chen for tips and suggestions about the annotation interface and annotator management, Kevin Musgrave for the general discussion, and anonymous reviewers for their valuable feedback.