Code for paper "Dialogue State Induction Using Neural Latent Variable Models", IJCAI 2020.

Slides for the presentation are available here.

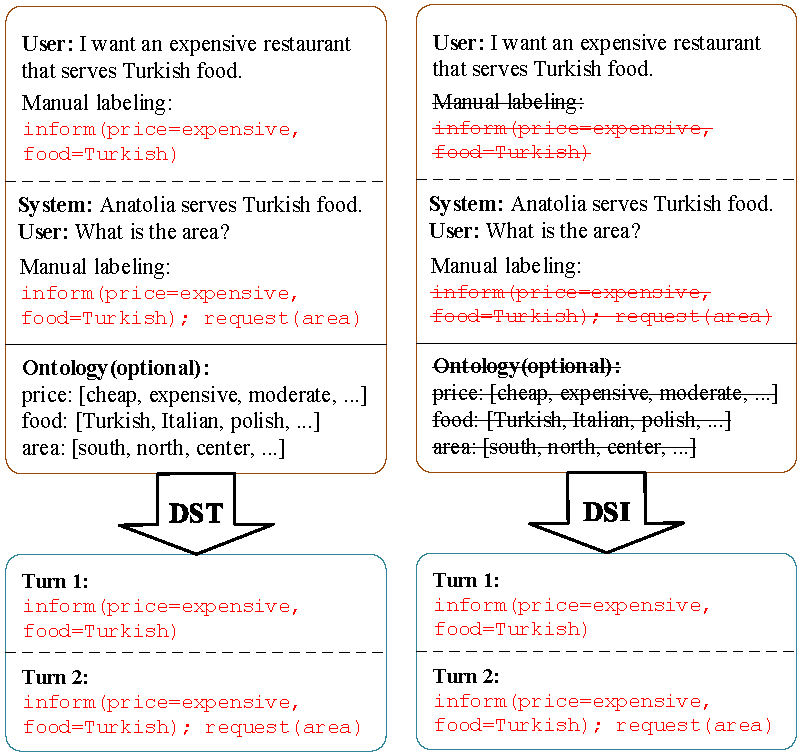

Dialogue state induction aims to automatically induce dialogue states from raw dialogues. We assume that there is a large set of dialogue records from many different domains, but without manual labeling of dialogue states. Such data are relatively easy to obtain, for example from the customer service call records of different businesses. Consequently, we propose the task of dialogue state induction (DSI), which is to automatically induce slot-value pairs from raw dialogue data. The difference between DSI and traditional dialogue state tracking (DST) is illustrated in Figure 1.

Figure 1: Comparison between DSI and traditional DST. Strikethrough font marks the resources that DSI does not need. The dialogue state accumulates as the dialogue proceeds. Turns are separated by dashed lines; dialogues and the external ontology are separated by black lines.


In particular, we use two neural latent variable models (in fact, one model together with its multi-domain variant) to induce dialogue states. Two datasets, MultiWOZ 2.1 and SGD, are used in our experiments.

In this work, we introduce two incrementally complex neural latent variable models for DSI, which treat the whole state and each slot as latent variables.

We evaluate the proposed DSI task on two datasets, MultiWOZ 2.1 and SGD, using two evaluation metrics:

- State Matching (Precision, Recall and F1-score; Table 1 reports F1): measures the overlap between the induced states and the ground truth.

- Goal Accuracy (Accuracy in Table 1): the predicted dialogue state for a turn is considered correct only when all of the user's search-goal constraints are identified correctly and exactly.
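The two metrics can be sketched as follows. This is a minimal illustration, not the repository's evaluation code. It assumes that each state is a set of `(slot, value)` pairs, that induced slots have already been mapped onto ground-truth slot names, that values match only when identical (no fuzzy matching), and that state-matching scores are micro-averaged over turns. The function names `state_matching` and `goal_accuracy` are hypothetical.

```python
from typing import List, Set, Tuple

# Hypothetical representation: a dialogue state is a set of (slot, value) pairs.
State = Set[Tuple[str, str]]


def state_matching(predicted: List[State], gold: List[State]) -> Tuple[float, float, float]:
    """Micro-averaged precision, recall and F1 of (slot, value) pairs over all turns."""
    true_pos = sum(len(p & g) for p, g in zip(predicted, gold))
    n_pred = sum(len(p) for p in predicted)
    n_gold = sum(len(g) for g in gold)
    precision = true_pos / n_pred if n_pred else 0.0
    recall = true_pos / n_gold if n_gold else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


def goal_accuracy(predicted: List[State], gold: List[State]) -> float:
    """Fraction of turns whose predicted state equals the gold state exactly."""
    if not gold:
        return 0.0
    return sum(p == g for p, g in zip(predicted, gold)) / len(gold)


gold = [{("area", "north")}, {("area", "north"), ("food", "thai")}]
pred = [{("area", "north")}, {("area", "north"), ("food", "chinese")}]
print(state_matching(pred, gold))  # precision = recall = F1 = 2/3
print(goal_accuracy(pred, gold))   # 0.5
```

A single wrong value (`chinese` instead of `thai`) costs one pair out of three under state matching, but makes the whole second turn wrong under goal accuracy.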

We evaluate both metrics at both the turn level and the joint level (Table 1). The joint-level dialogue state follows the typical definition of a dialogue state: it reflects the user goal from the beginning of the dialogue up to the current turn, whereas the turn-level state reflects the local user goal at each dialogue turn. Joint-level metrics are stricter because they jointly consider the outputs of all turns.
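The relation between turn-level and joint-level states can be sketched by accumulating turn-level states. As a simplifying assumption, a slot mentioned again just overwrites its earlier value. States are plain `{slot: value}` dicts, and `accumulate` and `exact_match_accuracy` are hypothetical helpers, not functions from this repository.

```python
from typing import Dict, List

State = Dict[str, str]


def accumulate(turn_states: List[State]) -> List[State]:
    """Joint-level states: each turn keeps all earlier slot-value pairs, and a slot
    that is mentioned again takes its newest value (simplifying assumption)."""
    joint_states: List[State] = []
    current: State = {}
    for turn_state in turn_states:
        # Build a fresh dict each turn so earlier joint states are never mutated.
        current = {**current, **turn_state}
        joint_states.append(current)
    return joint_states


def exact_match_accuracy(predicted: List[State], gold: List[State]) -> float:
    """Fraction of turns whose predicted state equals the gold state."""
    if not gold:
        return 0.0
    return sum(p == g for p, g in zip(predicted, gold)) / len(gold)


gold_turns = [{"area": "north"}, {"food": "thai"}, {"area": "south"}]
pred_turns = [{"area": "north"}, {"food": "chinese"}, {"area": "south"}]

turn_acc = exact_match_accuracy(pred_turns, gold_turns)
joint_acc = exact_match_accuracy(accumulate(pred_turns), accumulate(gold_turns))
print(turn_acc, joint_acc)  # turn level: 2/3, joint level: 1/3
```

The single mistake at turn 2 is carried into every later joint state. This illustrates why joint-level metrics are stricter than turn-level ones.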

Note: a fuzzy matching mechanism is used to compare induced values with the ground truth, similar to the evaluation used for the SGD dataset.
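Fuzzy value comparison can be approximated with the standard library. This sketch uses `difflib.SequenceMatcher` on token-sorted strings as a stand-in for a fuzzywuzzy-style token-sort ratio (fuzzywuzzy is listed among the training requirements). The exact matcher used in this repository or in the SGD evaluation may differ, and the 0.9 threshold is purely hypothetical.

```python
import difflib
import re


def _normalize(text: str) -> str:
    """Lower-case, keep alphanumeric tokens only and sort them, so word order and
    punctuation are ignored."""
    return " ".join(sorted(re.findall(r"[a-z0-9]+", text.lower())))


def fuzzy_score(reference: str, hypothesis: str) -> float:
    """Similarity in [0, 1] between two normalised value strings."""
    return difflib.SequenceMatcher(None, _normalize(reference), _normalize(hypothesis)).ratio()


def values_match(reference: str, hypothesis: str, threshold: float = 0.9) -> bool:
    """Treat an induced value as correct if it is close enough to the reference
    (the default threshold is a hypothetical choice)."""
    return fuzzy_score(reference, hypothesis) >= threshold


print(fuzzy_score("The Golden House", "golden house, the"))            # 1.0
print(values_match("pizza hut city centre", "Pizza Hut City Center"))  # True
print(values_match("north", "south"))                                  # False
```

Token sorting makes word order irrelevant, while the character-level ratio tolerates small spelling variants such as "centre" and "center".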

Table 1: Turn-level and joint-level results on MultiWOZ 2.1 and SGD.

| Model | MultiWOZ 2.1 Turn F1 | MultiWOZ 2.1 Turn Accuracy | MultiWOZ 2.1 Joint F1 | MultiWOZ 2.1 Joint Accuracy | SGD Turn F1 | SGD Turn Accuracy | SGD Joint F1 | SGD Joint Accuracy |
|---|---|---|---|---|---|---|---|---|
| DSI-base | 37.3 | 25.7 | 32.1 | 2.3 | 26.0 | 21.1 | 14.5 | 2.3 |
| DSI-GM | 49.6 | 36.1 | 44.8 | 5.0 | 33.5 | 27.5 | 19.5 | 3.1 |

For pre-processing, you can either download the pre-processed files directly or build the training data yourself. The pre-processed files are available:

- from Google Drive: MultiWOZ21, SGD

- from BaiduNetDisk: MultiWOZ21 (code: vr2a), SGD (code: et3q)

Requirements for pre-processing:

- python 3.7

- pytorch>=1.0

- allennlp

- stanfordcorenlp

The following resources are also required:

- stanford-corenlp-full-2018-10-05: the pre-processing (candidate extraction) toolkit. Download it from stanfordnlp and unzip it into the `utils` folder.

- ELMo pretrained model: download the weights file and the options file from allennlp and put them into the `utils/elmo_pretrained_model` folder.

For the MultiWOZ 2.1 dataset:

- Download MultiWOZ 2.1.

- Data preprocessing: convert MultiWOZ 2.1 into the data format of the Schema-Guided Dialogue dataset (running the script requires tensorflow):

`python multiwoz/create_data_from_multiwoz.py --input_data_dir=<downloaded_multiwoz2.1_dir> --output_dir data/multiwoz21`
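After conversion, both datasets share the SGD layout. Each split folder holds `dialogues_*.json` files, and each file contains a list of dialogues. In every user turn, each per-service frame carries a `state.slot_values` dictionary that maps a slot name to a list of acceptable values, accumulated up to the current turn. The sketch below reads ground-truth states from that format. It is a hypothetical helper, not code from this repository, and keying slots as `<service>-<slot>` is an illustrative choice.

```python
import json
from pathlib import Path
from typing import Dict, Iterator, List, Tuple


def load_dialogues(split_dir: str) -> List[dict]:
    """Read every dialogues_*.json file in an SGD-format split folder (e.g. data/dstc8/train)."""
    dialogues: List[dict] = []
    for path in sorted(Path(split_dir).glob("dialogues_*.json")):
        with open(path, encoding="utf-8") as f:
            dialogues.extend(json.load(f))
    return dialogues


def user_turn_states(dialogue: dict) -> Iterator[Tuple[str, Dict[str, List[str]]]]:
    """Yield (utterance, state) for each user turn; slots are keyed as '<service>-<slot>'."""
    for turn in dialogue["turns"]:
        if turn["speaker"] != "USER":
            continue  # system frames carry no dialogue state in SGD
        state: Dict[str, List[str]] = {}
        for frame in turn["frames"]:
            for slot, values in frame["state"]["slot_values"].items():
                state[f"{frame['service']}-{slot}"] = values
        yield turn["utterance"], state


# A tiny hand-written dialogue in SGD format, for illustration only.
example = {
    "dialogue_id": "example_0",
    "services": ["Restaurants_1"],
    "turns": [
        {"speaker": "USER", "utterance": "Find me Thai food in San Jose.",
         "frames": [{"service": "Restaurants_1", "slots": [], "actions": [],
                     "state": {"active_intent": "FindRestaurants", "requested_slots": [],
                               "slot_values": {"cuisine": ["Thai"], "city": ["San Jose"]}}}]},
        {"speaker": "SYSTEM", "utterance": "How about Thai Basil?",
         "frames": [{"service": "Restaurants_1", "slots": [], "actions": []}]},
    ],
}
for utterance, state in user_turn_states(example):
    print(utterance, state)
```

Only user turns are yielded, because in SGD only user frames contain a `state` field.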

For the SGD dataset:

- Download SGD.

- Put the `train`, `dev` and `test` folders into the `data/dstc8` folder.

Then extract value candidates, extract features and build the vocabulary, choosing one dataset for `-t`:

`python build_data.py -t multiwoz21|dstc8`
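Before running `build_data.py`, it can help to confirm that the inputs from the previous steps are where this README says they should be. The helper below is hypothetical (not part of the repository) and only checks for the directories named in the steps above.

```python
from pathlib import Path
from typing import Dict, List

# Directories named in this README; the mapping itself is a hypothetical convenience.
TOOLS = ["utils/stanford-corenlp-full-2018-10-05", "utils/elmo_pretrained_model"]
REQUIRED_DIRS: Dict[str, List[str]] = {
    "multiwoz21": TOOLS + ["data/multiwoz21"],
    "dstc8": TOOLS + ["data/dstc8/train", "data/dstc8/dev", "data/dstc8/test"],
}


def missing_inputs(dataset: str, root: str = ".") -> List[str]:
    """Return the required directories for `dataset` that are absent under `root`."""
    return [rel for rel in REQUIRED_DIRS[dataset] if not (Path(root) / rel).is_dir()]


# Run from the repository root to see what is still missing for each dataset.
for name in REQUIRED_DIRS:
    missing = missing_inputs(name)
    print(name, "OK" if not missing else "missing: " + ", ".join(missing))
```

It only checks that the directories exist, not that the ELMo weights and options files inside them are complete.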

Requirements for training:

- python 3.7

- pytorch>=1.0

- numpy

- fuzzywuzzy

- tqdm

- sklearn

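To train a model:

`python train.py -t multiwoz21|dstc8 -r train -m dsi-base|dsi-gm`

Configurations, including data paths and model hyper-parameters, are stored in `config.py`.

To run prediction:

`python train.py -t multiwoz21|dstc8 -r predict -m dsi-base|dsi-gm`

Here `-r` selects training or prediction; pick one of the `|`-separated options for `-t` (dataset) and `-m` (model).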
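To run every dataset/model combination, the commands can be generated rather than typed one by one. This is a hypothetical convenience script, assuming it is run from the repository root and that the dataset name is `multiwoz21`, as in the prediction command. By default it only prints the commands (a dry run).

```python
import subprocess
from itertools import product
from typing import List

# Dataset and model names as used in the train.py commands above.
DATASETS = ["multiwoz21", "dstc8"]
MODELS = ["dsi-base", "dsi-gm"]


def experiment_commands(mode: str) -> List[List[str]]:
    """One train.py command per dataset/model pair; `mode` is the -r value ('train' or 'predict')."""
    return [["python", "train.py", "-t", dataset, "-r", mode, "-m", model]
            for dataset, model in product(DATASETS, MODELS)]


def run_all(dry_run: bool = True) -> None:
    """Print (and, unless dry_run, execute) all training runs, then all prediction runs."""
    for mode in ("train", "predict"):
        for cmd in experiment_commands(mode):
            print(" ".join(cmd))
            if not dry_run:
                # check=True stops at the first failing run instead of continuing silently.
                subprocess.run(cmd, check=True)


run_all(dry_run=True)
```

All training runs come before all prediction runs, so each model is trained before its predictions are produced.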

Please cite the following paper if you use any of this source code in your work:

    @inproceedings{min2020dsi,
      title={Dialogue State Induction Using Neural Latent Variable Models},
      author={Min, Qingkai and Qin, Libo and Teng, Zhiyang and Liu, Xiao and Zhang, Yue},
      booktitle={IJCAI},
      pages={3845--3852},
      year={2020}
    }