Authors: Yi Zhang, Geng Chen, Qian Chen, Yujia Sun, Yong Xia, Olivier Deforges, Wassim Hamidouche, Lu Zhang

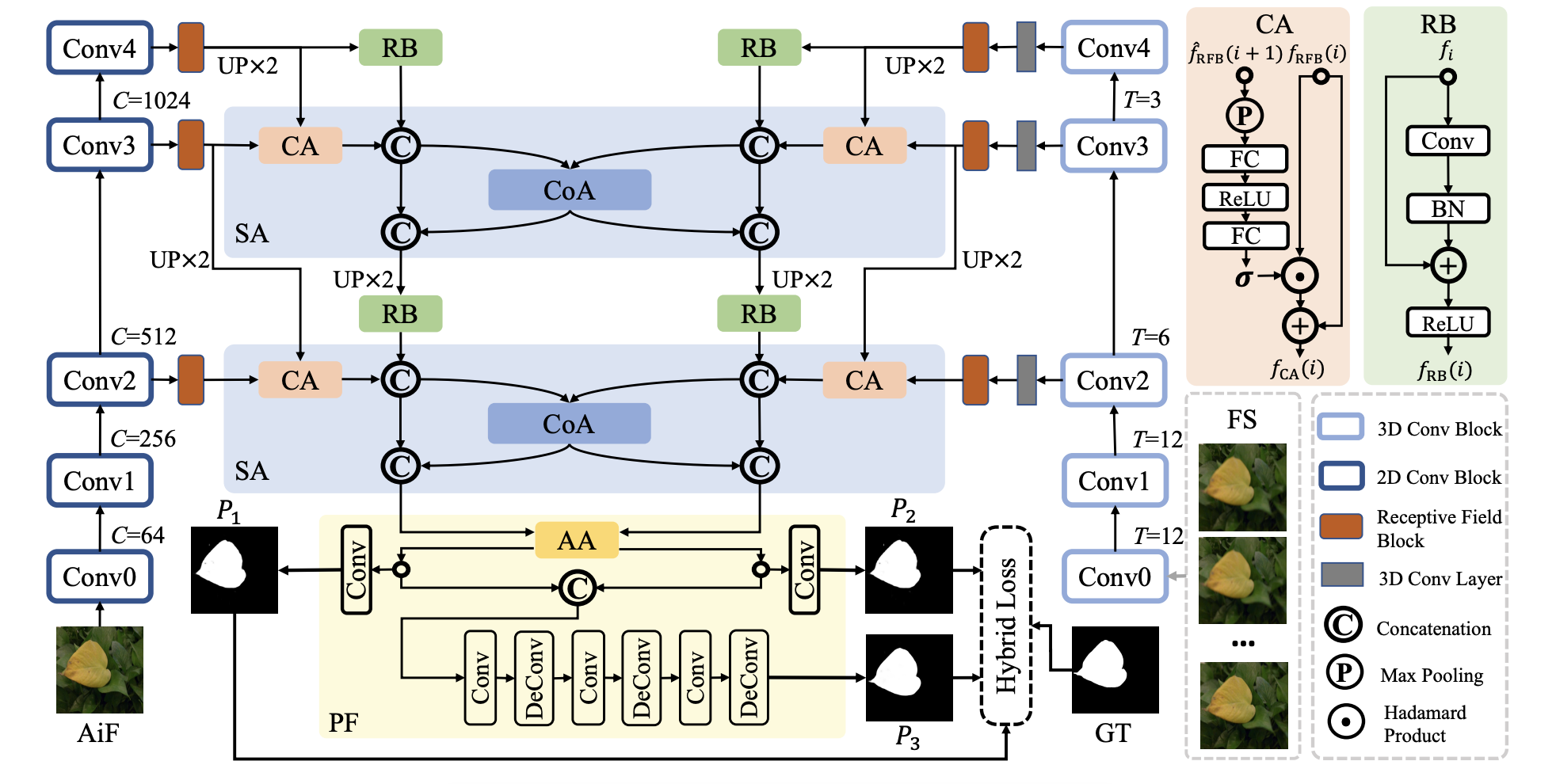

Figure 1: An overview of our SA-Net. Multi-modal, multi-level features extracted by our multi-modal encoder are fed into two cascaded synergistic attention (SA) modules, followed by a progressive fusion (PF) module. Abbreviations used in the figure: CoA = co-attention component. CA = channel attention component. AA = AiF-induced attention component, where AiF denotes the all-in-focus image. RB = residual block. Pn = the n-th saliency prediction. (De)Conv = (de)convolutional layer. BN = batch normalization layer. FC = fully connected layer.
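The caption lists a channel attention (CA) component alongside FC layers, but does not spell out its internals. The NumPy sketch below assumes a squeeze-and-excitation style design (global average pooling, FC, ReLU, FC, sigmoid, channel-wise rescaling) purely to illustrate what a channel attention step does. The function name `channel_attention`, the reduction ratio `r`, and the random weights are hypothetical and are not taken from SA-Net's code.

```python
import numpy as np


def channel_attention(x, w1, b1, w2, b2):
    """Hypothetical SE-style channel attention (assumed design, not SA-Net's code).

    x:  feature map of shape (C, H, W)
    w1: (C // r, C) weights of the first FC layer (reduction ratio r)
    w2: (C, C // r) weights of the second FC layer
    Returns the reweighted feature map and the per-channel weights.
    """
    # Squeeze: global average pooling gives one descriptor per channel, shape (C,)
    s = x.mean(axis=(1, 2))
    # Excitation: FC -> ReLU -> FC -> sigmoid yields per-channel weights in (0, 1)
    z = np.maximum(w1 @ s + b1, 0.0)
    a = 1.0 / (1.0 + np.exp(-(w2 @ z + b2)))
    # Rescale: broadcast the (C,) weights over the spatial dimensions
    return x * a[:, None, None], a


# Usage with random (hypothetical) weights
rng = np.random.default_rng(0)
C, H, W, r = 8, 4, 4, 2
x = rng.standard_normal((C, H, W))
w1 = rng.standard_normal((C // r, C)) * 0.1
b1 = np.zeros(C // r)
w2 = rng.standard_normal((C, C // r)) * 0.1
b2 = np.zeros(C)
y, a = channel_attention(x, w1, b1, w2, b2)
print(y.shape, a.shape)  # prints (8, 4, 4) (8,)
```

Pooling each channel to a single value keeps the attention cheap to compute, and the sigmoid scales every channel independently by a factor in (0, 1) instead of making channels compete through a softmax.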

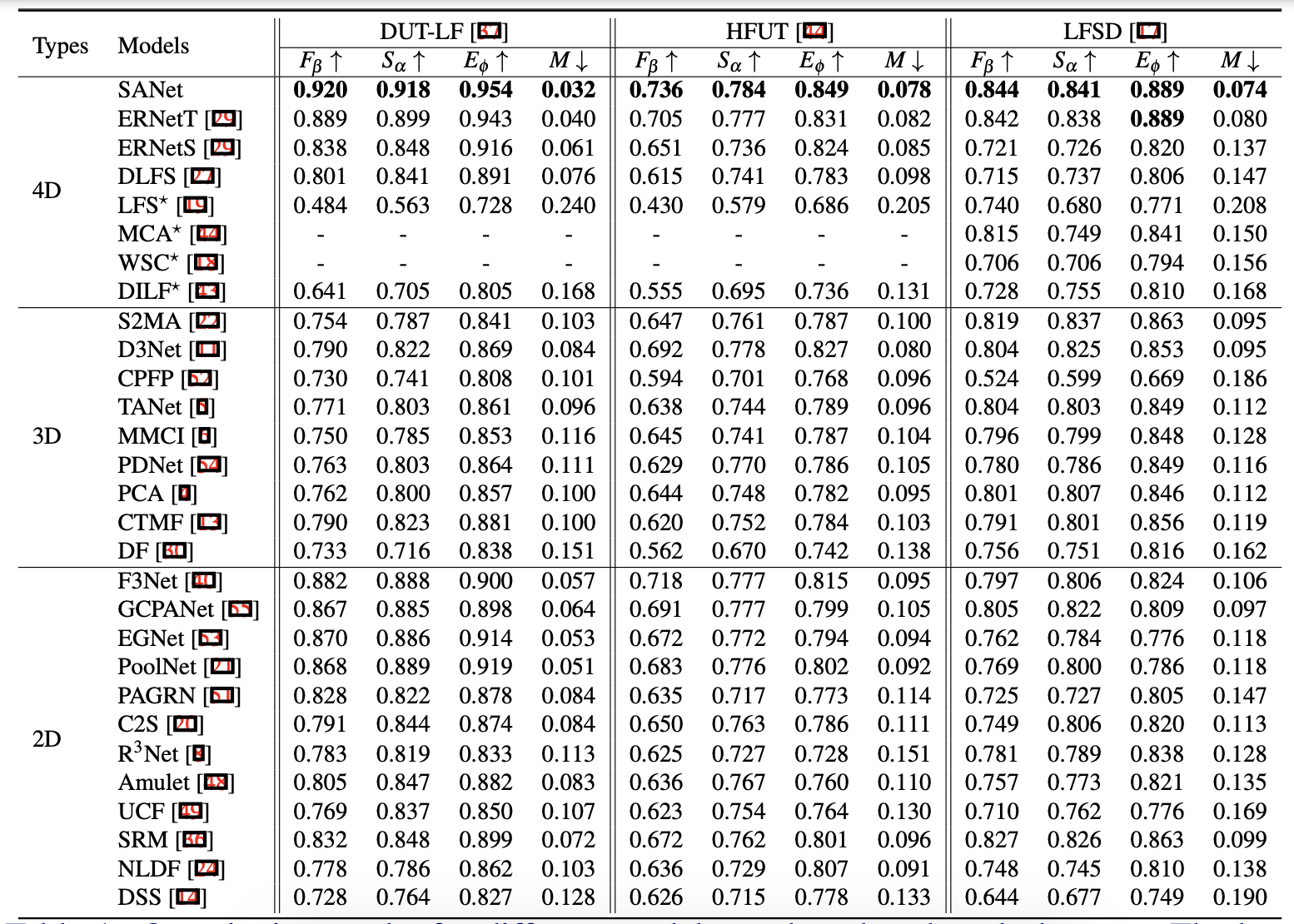

In this work, we propose Synergistic Attention Network (SA-Net) to address the light field salient object detection by establishing a synergistic effect between multimodal features with advanced attention mechanisms. Our SA-Net exploits the rich information of focal stacks via 3D convolutional neural networks, decodes the high-level features of multi-modal light field data with two cascaded synergistic attention modules, and predicts the saliency map using an effective feature fusion module in a progressive manner. Extensive experiments on three widely-used benchmark datasets show that our SA-Net outperforms 28 state-of-the-art models, sufficiently demonstrating its effectiveness and superiority.

Figure 2: Quantitative results of different models on three benchmark datasets. The best scores are in boldface. We train and test SA-Net with settings consistent with those of ERNet, the state-of-the-art model at the time of writing. "-" denotes that no result is available. ↑ indicates that higher scores are better; ↓ indicates that lower scores are better.

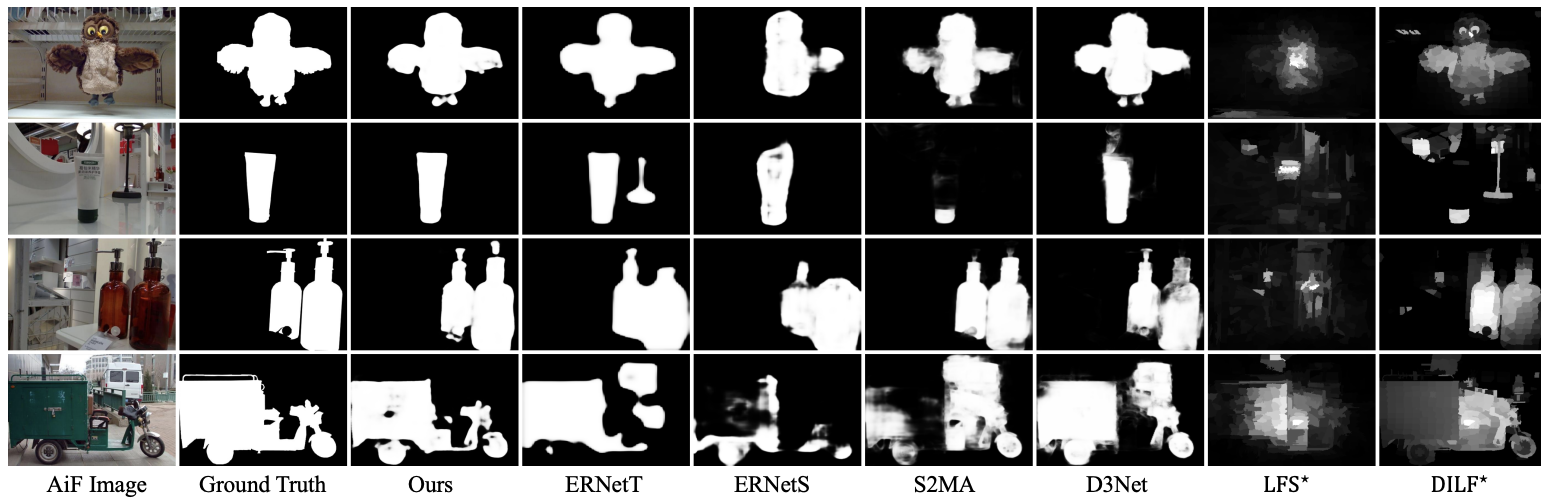

Figure 3: Qualitative comparison between our SA-Net and state-of-the-art light field SOD models.

Download the saliency prediction maps from Google Drive or OneDrive.

Download the pretrained model from Google Drive or OneDrive.

For training, please refer to `SANet_train.py`.

If you have any questions, please feel free to email yi23zhang.2022@gmail.com.

@article{zhang2021learning,
  title={Learning Synergistic Attention for Light Field Salient Object Detection},
  author={Zhang, Yi and Chen, Geng and Chen, Qian and Sun, Yujia and Xia, Yong and Deforges, Olivier and Hamidouche, Wassim and Zhang, Lu},
  journal={arXiv preprint arXiv:2104.13916},
  year={2021}
}