This is the code for our ER conference 2024 submission "A Universal Prompting Strategy for Extracting Process Model Information from Natural Language Text using Large Language Models", as well as material that did not make it into our paper.

Also check the companion repository for generating BPMN models from PET annotated documents: https://anonymous.4open.science/r/pet-to-bpmn-poc-B465/README.md

The Excel file results.xlsx contains all results and the raw answer files that contain those results.

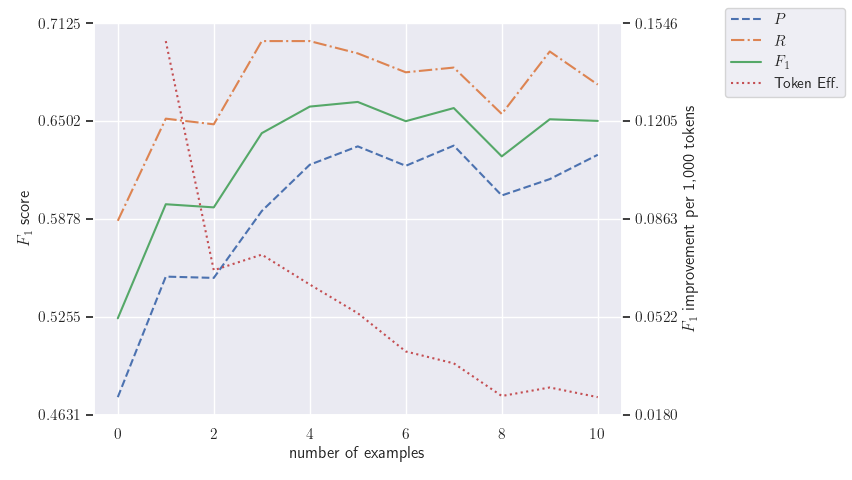

Performance for the MD task on PET by number of shots.

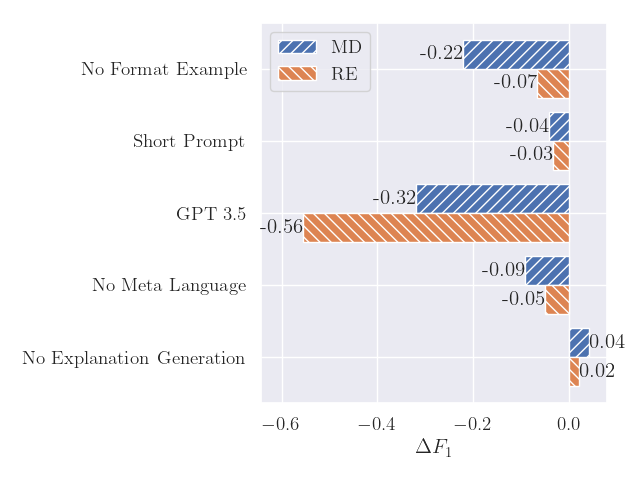

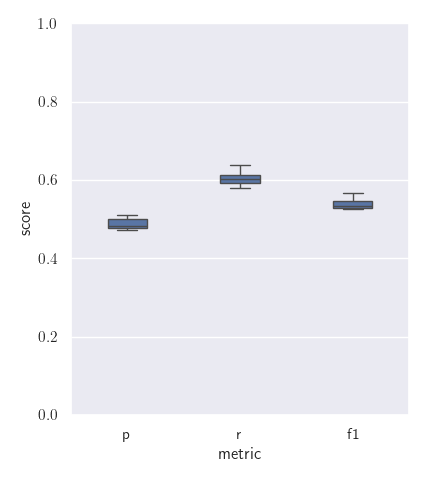

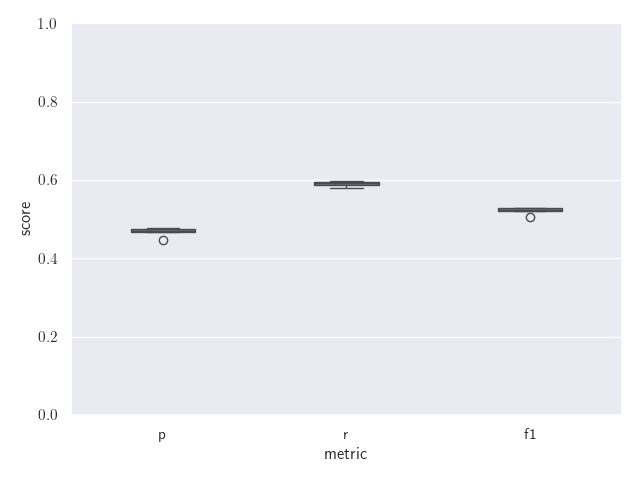

Left: Most relevant results of our ablation study. Right: Differences in performance for 10 repeated runs.

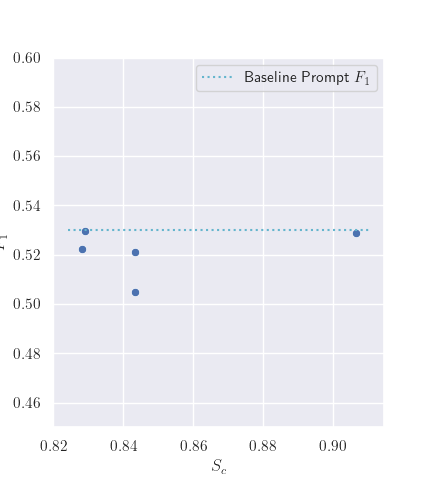

Both: Performance when rephrasing prompts. The change in wording is measured as the cosine similarity of the prompts' bag-of-words (BOW) representations. Minor changes in similarity elicit only minor changes in performance.
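As an illustration of the similarity measure named in the caption, here is a minimal sketch of cosine similarity over bag-of-words vectors. The tokenization (lowercased `\w+` matches), the function names, and the two example prompts are assumptions made for this sketch; the repository's analysis code may tokenize or weight words differently.

```python
import math
import re
from collections import Counter


def bag_of_words(text: str) -> Counter:
    """Lowercase the text and count word occurrences (a simple BOW vector)."""
    return Counter(re.findall(r"\w+", text.lower()))


def cosine_similarity(a: Counter, b: Counter) -> float:
    """Cosine of the angle between two sparse word-count vectors."""
    dot = sum(count * b[word] for word, count in a.items())
    norm_a = math.sqrt(sum(c * c for c in a.values()))
    norm_b = math.sqrt(sum(c * c for c in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # convention chosen for this sketch when a prompt is empty
    return dot / (norm_a * norm_b)


# Hypothetical prompts, not taken from res/prompts/.
original = "Extract all activities from the following text."
rephrased = "Extract every activity from the following text."

similarity = cosine_similarity(bag_of_words(original), bag_of_words(rephrased))
print(f"BOW cosine similarity: {similarity:.3f}")
```

A value close to 1 means the rephrased prompt shares most of its words with the original, while a value close to 0 means almost no overlap.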

- Conda or Mamba installation: https://github.com/conda-forge/miniforge

- OpenAI API key: https://platform.openai.com/

Create a new conda environment with all the required dependencies and activate it by running

`mamba env create -f env.yaml`

`conda activate llm-process-generation`

Download the required datasets from

- Quishpi data (ATDP dataset): https://github.com/PADS-UPC/atdp-extractor/tree/bpm2020. Download and copy the folder `input` into `res/data/quishpi`

- van der Aa data (DECON dataset): https://github.com/hanvanderaa/declareextraction/tree/master/caise2019version. Download `datacolection.csv` and copy the file into `res/data/van-der-aa`
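Before running any experiment, it can help to confirm that the datasets ended up where the scripts will look for them. The following is a minimal sketch, not part of the repository: the helper `missing_datasets` is hypothetical, and it assumes the CSV file keeps its downloaded name inside `res/data/van-der-aa`.

```python
from pathlib import Path

# Locations taken from the download instructions; adjust them if your
# checkout uses a different layout. The CSV file name is assumed unchanged.
EXPECTED_PATHS = [
    Path("res/data/quishpi"),
    Path("res/data/van-der-aa/datacolection.csv"),
]


def missing_datasets(project_root: Path) -> list[Path]:
    """Return the expected dataset paths that do not exist under project_root."""
    return [p for p in EXPECTED_PATHS if not (project_root / p).exists()]


if __name__ == "__main__":
    # Run from the top level project directory.
    missing = missing_datasets(Path.cwd())
    for path in missing:
        print(f"missing: {path}")
    if not missing:
        print("all datasets found")
```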

You can find top level files for all experiments we ran:

- pet_md.py: Mention detection on PET data
- pet_er.py: Entity resolution on PET data
- pet_re.py: Relation extraction on PET data
- vanderaa_md.py: Mention detection on van der Aa data
- vanderaa_re.py: Constraint extraction on van der Aa data
- quishpi_md.py: Mention detection on Quishpi data
- quishpi_re.py: Constraint extraction on Quishpi data
- analysis.py: Code for the ablation study

Note that running these files requires your OpenAI API key to be set as an environment variable!
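A quick way to verify the key is visible to Python before starting a paid run is sketched below. It assumes the variable is named `OPENAI_API_KEY`, which is the name the official OpenAI Python client reads by default; the scripts in this repository may expect a different name, so treat both the variable name and the helper `api_key_is_set` as assumptions.

```python
import os
from typing import Mapping

# Assumption: OPENAI_API_KEY is the default variable of the official OpenAI
# Python client. The experiment scripts may read a different variable.
API_KEY_VARIABLE = "OPENAI_API_KEY"


def api_key_is_set(env: Mapping[str, str] = os.environ) -> bool:
    """Return True if the variable exists and is not blank."""
    return bool(env.get(API_KEY_VARIABLE, "").strip())


if __name__ == "__main__":
    if api_key_is_set():
        print(f"{API_KEY_VARIABLE} is set")
    else:
        print(f"{API_KEY_VARIABLE} is not set; export it before running the experiments")
```

The check only looks at whether a value is present. It does not contact the API, so it cannot tell whether the key is valid.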

You can find all the prompts that we used during our experiments (even archived and early ones) in res/prompts/.

Answers for the reported runs can be found in res/answers. You can parse these files using experiments/parse.py.

Set the path to the answer file near line 347 of experiments/parse.py, for example `answer_file = f"res/answers/analysis/re/no_disambiguation.json"`, and run the file using `python -m experiments.parse` from the top level project directory.
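If you want to peek at an answer file before handing it to the parser, a small sketch like the following can help. It assumes only that the file is valid JSON, as its extension suggests; it does not reproduce anything `experiments/parse.py` does, and the helper `summarize_json` is hypothetical.

```python
import json
from pathlib import Path


def summarize_json(path: Path) -> str:
    """Describe the top-level structure of a JSON file without interpreting it."""
    with path.open(encoding="utf-8") as handle:
        data = json.load(handle)
    if isinstance(data, dict):
        return f"object with {len(data)} keys"
    if isinstance(data, list):
        return f"array with {len(data)} items"
    return f"scalar of type {type(data).__name__}"


if __name__ == "__main__":
    # Path from the example above; run from the top level project directory.
    answer_file = Path("res/answers/analysis/re/no_disambiguation.json")
    if answer_file.exists():
        print(summarize_json(answer_file))
    else:
        print(f"{answer_file} not found")
```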