Creating service fails for deepdetect_gpu

johnt-softclouds opened this issue · comments

I'm able to train a model with both the cpu and gpu versions of the deepdetect platform. I copied the model to the cpu version of the deepdetect server and it works fine: I am able to create a service and use it for classification. However, I cannot create a service when using the gpu deepdetect server. I went back to basics to troubleshoot the error.

- Read the instructions at https://www.deepdetect.com/quickstart-server/

- Started a terminal shell

- Executed : docker run -d -p 8080:8080 jolibrain/deepdetect_gpu

- Executed: curl http://localhost:8080/info

- Got back the following (I formatted it for easier reading)

    {
      "status": {
        "code": 200,
        "msg": "OK"
      },
      "head": {
        "method": "/info",
        "build-type": "dev",
        "version": "v0.11.0-dirty",
        "branch": "heads/v0.11.0",
        "commit": "9c273556ce497898c49a8a78d16d7c9571dbc7cc",
        "compile_flags": "USE_CAFFE2=OFF USE_TF=OFF USE_NCNN=OFF USE_TORCH=OFF USE_HDF5=ON USE_CAFFE=ON USE_TENSORRT=OFF USE_TENSORRT_OSS=OFF USE_DLIB=OFF USE_CUDA_CV=OFF USE_SIMSEARCH=ON USE_ANNOY=OFF USE_FAISS=ON USE_COMMAND_LINE=ON USE_JSON_API=ON USE_HTTP_SERVER=ON",
        "deps_version": "OPENCV_VERSION=3.2.0 CUDA_VERSION_STRING=10.2 CUDNN_VERSION=",
        "services": []
      }
    }

- Executed:

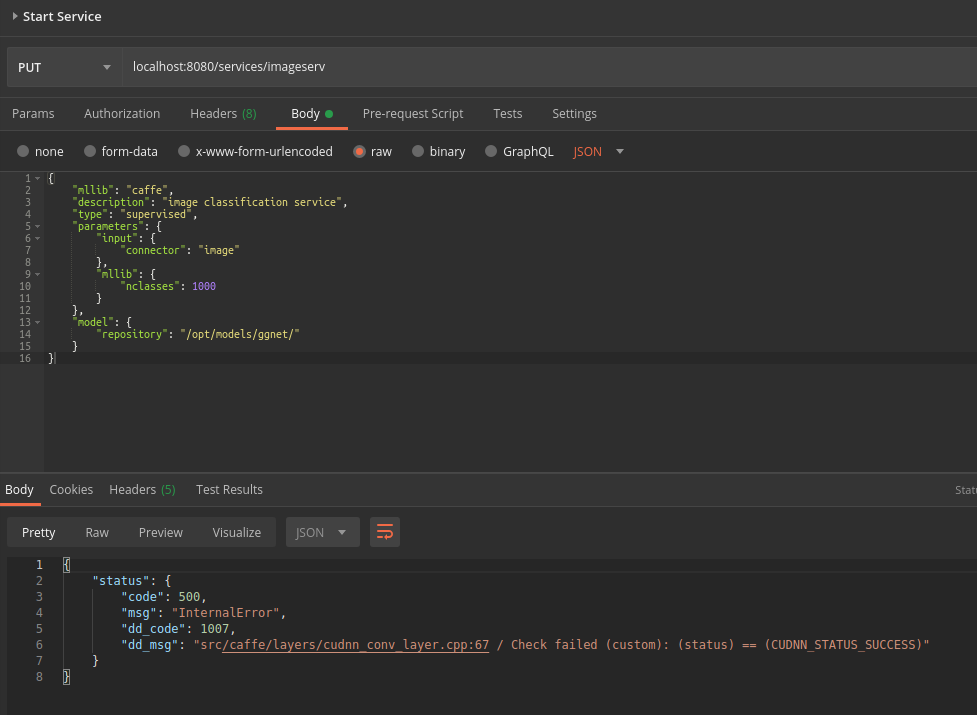

    curl -X PUT "http://localhost:8080/services/imageserv" -d '{
      "mllib":"caffe",
      "description":"image classification service",
      "type":"supervised",
      "parameters":{
        "input":{
          "connector":"image"
        },
        "mllib":{
          "nclasses":1000
        }
      },
      "model":{
        "repository":"/opt/models/ggnet/"
      }
    }'

- Got back the following:

{"status":{"code":500,"msg":"InternalError","dd_code":1007,"dd_msg":"src/caffe/layers/cudnn_conv_layer.cpp:67 / Check failed (custom): (status) == (CUDNN_STATUS_SUCCESS)"}}
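When the steps above are scripted, it helps to fail loudly on a non-2xx status instead of reading the JSON by eye. The following is a minimal sketch, assuming only `sed` is available (`jq` would be more robust if installed). The helper names `dd_num_field` and `dd_str_field` are hypothetical and not part of DeepDetect; the sample response is the one reported above.

```shell
#!/bin/sh
# Hypothetical helpers; the names are illustrative, not DeepDetect tooling.

# Print the numeric value stored under key $1 (e.g. "code").
dd_num_field() {
  sed -n "s/.*\"$1\"[[:space:]]*:[[:space:]]*\([0-9][0-9]*\).*/\1/p"
}

# Print the string value stored under key $1 (e.g. "dd_msg").
dd_str_field() {
  sed -n "s/.*\"$1\"[[:space:]]*:[[:space:]]*\"\([^\"]*\)\".*/\1/p"
}

# Response copied from the report; in a real script this would be the
# output of the curl -X PUT call.
resp='{"status":{"code":500,"msg":"InternalError","dd_code":1007,"dd_msg":"src/caffe/layers/cudnn_conv_layer.cpp:67 / Check failed (custom): (status) == (CUDNN_STATUS_SUCCESS)"}}'

code=$(printf '%s\n' "$resp" | dd_num_field code)
if [ "${code:-0}" -ge 400 ]; then
  echo "service creation failed ($code): $(printf '%s\n' "$resp" | dd_str_field dd_msg)"
fi
```

The regular expressions only handle flat, unescaped values such as the ones in this response; they are not a general JSON parser.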

Here is some information about my laptop:

Ubuntu 18.04 x64

Docker version 19.03.14, build 5eb3275d40

NVIDIA Corporation GP104GLM [Quadro P5000 Mobile] (rev a1)

@johnt-softclouds thanks for reporting this.

@sileht I can reproduce on v0.11.0. Since ci-master doesn't run (yet) for me, I wasn't able to test it. Strangely enough, I believe the docker works for us from within the platform. Quadro P5000 is CUDA compute 6.1, and I've checked that the docker should be built with it, and I've tested on a 1080Ti (also 6.1) to reproduce.

@sileht, two remarks:

- Might be useful to add the loading of the model to our docker CI

- We may want the compile_flags to include the CUDA compute numbers DD was built with.
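For context, this is roughly how a user can list what /info reports today, one flag per line, which is where CUDA compute numbers would show up if they were added. It is a sketch only: `dd_info_flags` is a hypothetical helper name, and the sample string is the deps_version value from the report.

```shell
#!/bin/sh
# Hypothetical helper: print one KEY=VALUE pair per line from a
# space-separated string field of the /info response.
dd_info_flags() {
  sed -n "s/.*\"$1\"[[:space:]]*:[[:space:]]*\"\([^\"]*\)\".*/\1/p" | tr ' ' '\n'
}

# deps_version value copied from the /info output in the report.
info='{"head":{"deps_version":"OPENCV_VERSION=3.2.0 CUDA_VERSION_STRING=10.2 CUDNN_VERSION="}}'

printf '%s\n' "$info" | dd_info_flags deps_version

# Against a live server (assuming it listens on localhost:8080):
#   curl -s http://localhost:8080/info | dd_info_flags compile_flags
```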

It seems this is fixed on a custom-built ci-master build. I've started a full rebuild of our dockers.

Is the new Docker image available for me to pull?

Tomorrow, as they are still failing on our CI! I can give you the instructions to build one yourself easily if you like.

It would be great if you can send me the instructions so I can start using it today. I will switch to the official Docker tomorrow when it's released. Thank you.

Yes so documentation is here: https://github.com/jolibrain/deepdetect/tree/master/docker

And basically for a GPU build, that'd be something like this:

export DOCKER_BUILDKIT=1

docker build -t jolibrain/deepdetect_gpu:mine --progress plain -f docker/gpu.Dockerfile .

EDIT: fixed the line above

Hi @johnt-softclouds thanks for your patience, the ci-master docker builds are now fixed, the above bug has disappeared in my tests.

I'll let you verify on your side as needed and close the issue.

Thanks for the report!

Thank you. Will do testing today. Will post results after testing.

cloned https://github.com/jolibrain/deepdetect to ~/data/softclouds/unfoldlabs/kapture/docker/custom-deepdetect

cd ~/data/softclouds/unfoldlabs/kapture/docker/custom-deepdetect

mkdir build

cd build

cp -a ../build.sh .

cd ..

export DOCKER_BUILDKIT=1

docker build -t jolibrain/deepdetect_gpu:mine --progress plain -f docker/gpu.Dockerfile .

After an hour into the build, I get the following error message:

6311.9 /opt/deepdetect/build/caffe_dd/src/caffe_dd/include/caffe/llogging.h:227:0: note: this is the location of the previous definition

#24 6311.9 #define DLOG(severity) CaffeLogger(severity).sstream()

#24 6311.9

#24 6311.9 In file included from /opt/deepdetect/build/xgboost/src/xgboost/dmlc-core/src/data/parser.h:11:0,

#24 6311.9 from /opt/deepdetect/src/backends/xgb/xgbinputconns.h:37,

#24 6311.9 from /opt/deepdetect/src/csvinputfileconn.h:793,

#24 6311.9 from /opt/deepdetect/src/backends/caffe/caffeinputconns.h:26,

#24 6311.9 from /opt/deepdetect/src/imginputfileconn.h:819,

#24 6311.9 from /opt/deepdetect/src/services.h:34,

#24 6311.9 from /opt/deepdetect/src/apistrategy.h:30,

#24 6311.9 from /opt/deepdetect/src/deepdetect.h:25,

#24 6311.9 from /opt/deepdetect/src/deepdetect.cc:22:

#24 6311.9 /opt/deepdetect/build/xgboost/src/xgboost/dmlc-core/include/dmlc/logging.h:194:0: error: "LOG_EVERY_N" redefined [-Werror]

#24 6311.9 #define LOG_EVERY_N(severity, n) LOG(severity)

#24 6311.9

#24 6311.9 In file included from /opt/deepdetect/build/caffe_dd/src/caffe_dd/include/caffe/common.hpp:7:0,

#24 6311.9 from /opt/deepdetect/build/caffe_dd/src/caffe_dd/include/caffe/util/db.hpp:6,

#24 6311.9 from /opt/deepdetect/src/simsearch.h:42,

#24 6311.9 from /opt/deepdetect/src/mlmodel.h:26,

#24 6311.9 from /opt/deepdetect/src/mlservice.h:30,

#24 6311.9 from /opt/deepdetect/src/services.h:31,

#24 6311.9 from /opt/deepdetect/src/apistrategy.h:30,

#24 6311.9 from /opt/deepdetect/src/deepdetect.h:25,

#24 6311.9 from /opt/deepdetect/src/deepdetect.cc:22:

#24 6311.9 /opt/deepdetect/build/caffe_dd/src/caffe_dd/include/caffe/llogging.h:231:0: note: this is the location of the previous definition

#24 6311.9 #define LOG_EVERY_N(severity,n) CaffeLogger(severity).sstream()

#24 6311.9

#24 6332.5 cc1plus: all warnings being treated as errors

#24 6332.6 src/CMakeFiles/ddetect.dir/build.make:81: recipe for target 'src/CMakeFiles/ddetect.dir/deepdetect.cc.o' failed

#24 6332.6 make[2]: *** [src/CMakeFiles/ddetect.dir/deepdetect.cc.o] Error 1

#24 6332.6 CMakeFiles/Makefile2:487: recipe for target 'src/CMakeFiles/ddetect.dir/all' failed

#24 6332.6 make[1]: *** [src/CMakeFiles/ddetect.dir/all] Error 2

#24 6332.6 Makefile:102: recipe for target 'all' failed

#24 6332.6 make: *** [all] Error 2

#24 ERROR: executor failed running [/bin/sh -c mkdir build && cd build && ../build.sh]: exit code: 2

[build 10/11] RUN --mount=type=cache,target=/ccache/ mkdir build && cd build && ../build.sh:

executor failed running [/bin/sh -c mkdir build && cd build && ../build.sh]: exit code: 2

Hi. we are updating our logging code, and the caffe part was merged, but not the main/dede part, this is the cause of your compilation problem. As the main/dede part just passed all ci checks, I think it will be merged within a few hours and at this point everything should be okay (or at least should be better)

Please let me know when I can do a clone or pull of the code. Thanks.

See my message #1082 (comment): the docker images are available.

OK. I didn't realize you wanted me to use https://github.com/jolibrain/deepdetect/blob/master/ci/Jenkinsfile.docker to do a build. I will have to figure out how to do this since I'm not a Jenkins expert.

@johnt-softclouds you don't need to build anything anymore, the docker images are fixed:

docker run -d -p 8080:8080 jolibrain/deepdetect_gpu:ci-master

That's it.

@beniz Did you push the latest ci-master to Docker Hub? I'm still getting this error message.

    REPOSITORY                 TAG         IMAGE ID       CREATED      SIZE
    jolibrain/deepdetect_gpu   ci-master   1a6e95256acb   3 days ago   3.83GB

I believe the issue here is that the --gpus all docker flag needs to be used during container creation. This is not reflected in any of the user guides. ref: https://docs.nvidia.com/datacenter/cloud-native/container-toolkit/install-guide.html#docker
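As a sketch of that suggestion, the command from earlier in the thread with --gpus all added would look like the output of the wrapper below. It assumes Docker 19.03 or later and the NVIDIA Container Toolkit from the linked guide are installed on the host. `dd_gpu_run` and the `DD_EXEC` variable are hypothetical names; by default the function only prints the command so it can be reviewed before running.

```shell
#!/bin/sh
# Hypothetical wrapper: print (or, with DD_EXEC=1, run) the docker command
# that starts the GPU image with GPU access enabled.
dd_gpu_run() {
  image=${1:-jolibrain/deepdetect_gpu:ci-master}
  port=${2:-8080}
  # Rebuild the positional parameters as the full command line.
  set -- docker run -d --gpus all -p "$port:8080" "$image"
  if [ "${DD_EXEC:-0}" = "1" ]; then
    "$@"
  else
    echo "$*"
  fi
}

dd_gpu_run
```

Running it with `DD_EXEC=1 dd_gpu_run` starts the container; whether this resolves the cuDNN check failure is not confirmed in the thread.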

Hi @berglh, yes, the docker works for me. The error you are reporting was affecting an older version of the CPU-only docker image. My info call is not reporting the CUDNN version, not sure why, but the docker image id is the same. Maybe make sure you are not in fact running another version?
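One way to rule out a stale local image is to compare the IMAGE ID that docker reports for the ci-master tag with the one quoted in the thread. A minimal sketch, assuming the default column layout of `docker images`; `image_id_for` is a hypothetical helper name and the listing is the one posted earlier.

```shell
#!/bin/sh
# Hypothetical helper: read `docker images` output on stdin and print the
# IMAGE ID column for the given repository and tag.
image_id_for() {
  awk -v repo="$1" -v tag="$2" '$1 == repo && $2 == tag { print $3 }'
}

# Listing copied from the thread; live usage would be
#   docker images | image_id_for jolibrain/deepdetect_gpu ci-master
listing='REPOSITORY TAG IMAGE ID CREATED SIZE
jolibrain/deepdetect_gpu ci-master 1a6e95256acb 3 days ago 3.83GB'

local_id=$(printf '%s\n' "$listing" | image_id_for jolibrain/deepdetect_gpu ci-master)
echo "local ci-master image: $local_id"
```

If the IDs differ, `docker pull jolibrain/deepdetect_gpu:ci-master` refreshes the local copy; a container that is already running keeps using the image it was created from until it is recreated.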