Rethinking Training from Scratch for Object Detection

Intro

Code for the paper Rethinking Training from Scratch for Object Detection.

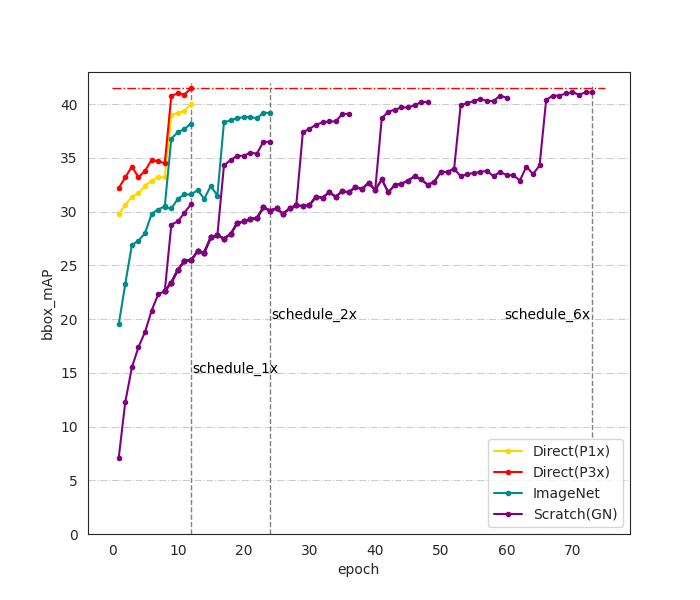

ImageNet pre-training initialization is the de-facto standard for object detection. He et al. found that it is possible to train detectors from scratch (random initialization), but this requires a longer training schedule and a proper normalization technique. In this paper, we explore pre-training directly on the target dataset for object detection. In this setting, we find that the widely adopted large resizing strategy, e.g. resizing images to (1333, 800), is important for fine-tuning but not necessary for pre-training. Specifically, we propose a new training pipeline for object detection that follows "pre-training and fine-tuning": the detector is first pre-trained on low-resolution images from the target dataset and then loaded for fine-tuning on high-resolution images. With this strategy, we can use batch normalization (BN) with a large batch size during pre-training, and the pipeline is memory efficient enough to run on machines with very limited GPU memory (11 GB). We call this direct detection pre-training, or direct pre-training for short. Experimental results show that direct pre-training accelerates the pre-training phase by more than 11x on the COCO dataset while even improving accuracy by +1.8 mAP compared to ImageNet pre-training. We also find that direct pre-training is applicable to transformer-based backbones, e.g. Swin Transformer.
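Why low-resolution pre-training saves memory can be made concrete with a little arithmetic. The sketch below is a rough illustration, not code from this repository: it rescales an image with its aspect ratio kept (the same arithmetic as mmcv's `rescale_size`, as we understand it) and compares pixel counts at the (1333, 800) fine-tuning scale and an assumed (666, 400) pre-training scale. The low-resolution scale is hypothetical, not necessarily the one used in the paper, and pixel count is only a rough proxy for activation memory.

```python
# Hypothetical illustration: how many pixels does low-resolution pre-training save?
# FINETUNE_SCALE comes from the text; PRETRAIN_SCALE is an assumed example value.

FINETUNE_SCALE = (1333, 800)
PRETRAIN_SCALE = (666, 400)  # hypothetical low-resolution pre-training scale


def keep_ratio_size(width, height, scale):
    """Resize (width, height) to fit inside scale=(long_edge, short_edge),
    keeping the aspect ratio and rounding to the nearest integer."""
    long_edge, short_edge = max(scale), min(scale)
    factor = min(long_edge / max(width, height), short_edge / min(width, height))
    return int(width * factor + 0.5), int(height * factor + 0.5)


def pixel_ratio(width, height, high_scale=FINETUNE_SCALE, low_scale=PRETRAIN_SCALE):
    """Ratio of pixels processed at the high vs. the low training scale."""
    hw, hh = keep_ratio_size(width, height, high_scale)
    lw, lh = keep_ratio_size(width, height, low_scale)
    return (hw * hh) / (lw * lh)


if __name__ == "__main__":
    # A typical 640x480 COCO image.
    print(keep_ratio_size(640, 480, FINETUNE_SCALE))  # (1067, 800)
    print(keep_ratio_size(640, 480, PRETRAIN_SCALE))  # (533, 400)
    print(round(pixel_ratio(640, 480), 2))            # 4.0
```

Under these assumptions each pre-training image carries about 4x fewer pixels, which is the kind of headroom that lets more images fit on each GPU and keeps BN batch statistics usable.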

Pre-trained models

| method | pipeline | bbox mAP | mask mAP | config | model |
|---|---|---|---|---|---|
| RetinaNet | ImageNet-1x | 36.5 | - | - | - |
| RetinaNet | Direct(P1x)-1x | 37.1 | - | pre-train / fine-tune | pre-train / fine-tune |
| Faster RCNN | ImageNet-1x | 37.4 | - | - | - |
| Faster RCNN | Direct(P1x)-1x | 39.3 | - | pre-train / fine-tune | pre-train / fine-tune |
| Cascade RCNN | ImageNet-1x | 40.3 | - | - | - |
| Cascade RCNN | Direct(P1x)-1x | 41.5 | - | pre-train / fine-tune | pre-train / fine-tune |
| Mask RCNN | ImageNet-1x | 38.2 | 34.7 | - | - |
| Mask RCNN | Direct(P1x)-1x | 40.0 | 35.8 | pre-train / fine-tune | pre-train / fine-tune |
| Mask RCNN w/ Swin | ImageNet-1x | 43.8 | 39.6 | - | - |
| Mask RCNN w/ Swin | Direct(P1x)-1x | 45.0 | 40.5 | pre-train / fine-tune | pre-train / fine-tune |

We also provide models trained more thoroughly with a longer pre-training schedule (3x); they can be used for model initialization.

| method | pipeline | bbox mAP | mask mAP | model |
|---|---|---|---|---|
| Mask RCNN | Direct(P3x)-1x | 41.5 | 37.0 | pre-train / fine-tune |
| Mask RCNN w/ Swin | Direct(P3x)-1x | 46.9 | 41.9 | pre-train / fine-tune |
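The gains of direct pre-training over ImageNet pre-training are plain differences of the table entries. The short script below only transcribes the numbers from the two tables above and subtracts the ImageNet-1x baseline; the data layout (`RESULTS`) and function name are illustrative choices, not part of the repository.

```python
# mAP values transcribed from the two tables above.
# (method, metric) -> (ImageNet-1x, Direct(P1x)-1x, Direct(P3x)-1x or None)
RESULTS = {
    ("RetinaNet", "bbox"): (36.5, 37.1, None),
    ("Faster RCNN", "bbox"): (37.4, 39.3, None),
    ("Cascade RCNN", "bbox"): (40.3, 41.5, None),
    ("Mask RCNN", "bbox"): (38.2, 40.0, 41.5),
    ("Mask RCNN", "mask"): (34.7, 35.8, 37.0),
    ("Mask RCNN w/ Swin", "bbox"): (43.8, 45.0, 46.9),
    ("Mask RCNN w/ Swin", "mask"): (39.6, 40.5, 41.9),
}


def gains_over_imagenet(results=RESULTS):
    """Return (P1x gain, P3x gain) in mAP points; None where no P3x model exists."""
    gains = {}
    for key, (imagenet, p1x, p3x) in results.items():
        # Round to one decimal to match the precision of the tables.
        gains[key] = tuple(
            None if value is None else round(value - imagenet, 1)
            for value in (p1x, p3x)
        )
    return gains


if __name__ == "__main__":
    for (method, metric), (g1, g3) in gains_over_imagenet().items():
        extra = "" if g3 is None else f", P3x {g3:+.1f}"
        print(f"{method:18s} {metric}: P1x {g1:+.1f}{extra}")
```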

Installation

This project is mainly built on the MMDetection codebase and Swin Transformer.

- Follow the MMDetection installation guide to install mmdetection.
- Install NVIDIA apex:

  ```shell
  git clone https://github.com/NVIDIA/apex
  cd apex
  pip install -v --disable-pip-version-check --no-cache-dir --global-option="--cpp_ext" --global-option="--cuda_ext" ./
  ```

Usage
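Before running the commands in this section, it can help to confirm that the installed packages are importable. A minimal sketch, assuming the usual import names (`torch`, `mmcv`, `mmdet` for mmdetection, `apex` for NVIDIA apex); it only checks importability, not versions or CUDA support.

```python
import importlib.util

# Assumed import names for the dependencies installed above.
REQUIRED_MODULES = ("torch", "mmcv", "mmdet", "apex")


def missing_modules(names=REQUIRED_MODULES):
    """Return the top-level module names that cannot be found in this environment."""
    return [name for name in names if importlib.util.find_spec(name) is None]


if __name__ == "__main__":
    missing = missing_modules()
    if missing:
        print("missing:", ", ".join(missing))
    else:
        print("environment looks ready")
```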

Prepare Dataset

```shell
git clone https://github.com/wxzs5/direct-pretraining.git
cd direct-pretraining
# set dataset path
mkdir data
ln -s path/to/coco_dataset data/
```
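After linking the dataset, a quick check can catch a wrong symlink target. This sketch assumes the configs follow MMDetection's default COCO layout under `data/coco/` (annotation files in `annotations/`, images in `train2017/` and `val2017/`); if the repository's configs use a different `data_root`, adjust `COCO_ROOT` accordingly.

```python
from pathlib import Path

# Assumed layout: MMDetection's default COCO configs read from data/coco/.
COCO_ROOT = Path("data/coco")
EXPECTED = (
    "annotations/instances_train2017.json",
    "annotations/instances_val2017.json",
    "train2017",
    "val2017",
)


def missing_coco_entries(root=COCO_ROOT, expected=EXPECTED):
    """List the expected COCO files/directories that do not exist under root.
    Path.exists() follows symlinks, so a linked dataset directory is fine."""
    root = Path(root)
    return [rel for rel in expected if not (root / rel).exists()]


if __name__ == "__main__":
    missing = missing_coco_entries()
    print("dataset OK" if not missing else f"missing under {COCO_ROOT}: {missing}")
```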

Inference
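The `<EVAL_METRICS>` placeholder depends on the model: in the tables above only the Mask RCNN variants report mask mAP, so they are evaluated with `bbox segm`, while the other detectors only need `bbox`. A minimal sketch of that rule, assuming config file names start with `mask_rcnn` for Mask RCNN models (true for the example config in this section; other names are assumptions):

```python
import os


def eval_metrics(config_file):
    """Guess COCO eval metrics from the config file name (naming is assumed)."""
    name = os.path.basename(config_file)
    return ["bbox", "segm"] if name.startswith("mask_rcnn") else ["bbox"]


def dist_test_argv(config_file, checkpoint, gpu_num):
    """Build the argument list for ./dist_test.sh (e.g. for subprocess.run)."""
    return ["./dist_test.sh", config_file, checkpoint, str(gpu_num),
            "--eval", *eval_metrics(config_file)]


if __name__ == "__main__":
    print(" ".join(dist_test_argv(
        "configs/direct_pretraining/mask_rcnn_r50_fpn_direct_finetune_p1x_1x_coco.py",
        "model.pth", 8)))
```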

```shell
# single-gpu testing
python test.py <CONFIG_FILE> <DET_CHECKPOINT_FILE> --eval <EVAL_METRICS>

# multi-gpu testing
./dist_test.sh <CONFIG_FILE> <DET_CHECKPOINT_FILE> <GPU_NUM> --eval <EVAL_METRICS>
```

For example:

```shell
./dist_test.sh configs/direct_pretraining/mask_rcnn_r50_fpn_direct_finetune_p1x_1x_coco.py model.pth 8 --eval bbox segm
```

Train

- pre-training:

  ```shell
  ./dist_train.sh <CONFIG_FILE> <GPU_NUM>
  ```

- fine-tuning:

  ```shell
  # in the fine-tuning config file, set load_from to the pre-trained model path
  ./dist_train.sh <CONFIG_FILE> <GPU_NUM>
  ```

Citing Direct Pre-training

If this paper helps your research, please consider citing direct pre-training:

```bibtex
@article{yang2021Rethink,
  title={Rethinking Training from Scratch for Object Detection},
  author={Yang, Li and Hong, Zhang and Yu, Zhang},
  journal={arXiv preprint arXiv:2106.03112},
  year={2021}
}
```