BEVFormer: a Cutting-edge Baseline for Camera-based Detection


BEVFormer: Learning Bird's-Eye-View Representation from Multi-Camera Images via Spatiotemporal Transformers, ECCV 2022

- Paper in arXiv | Paper in Chinese | OpenPerceptionX

- Blog in Chinese | Video Talk and Slides (in Chinese)

- BEV Perception Survey | Github repo

News

- [2022/6/16]: We added two BEVFormer configurations, which require less GPU memory than the base version. Please pull this repo to obtain the latest code.

- [2022/6/13]: We release an initial version of BEVFormer. It achieves a baseline result of 51.7% NDS on nuScenes.

- [2022/5/23]: 🚀🚀 BEVFormer++, built on top of BEVFormer and combining best practices from recent SOTA methods with our own modifications, ranks 1st on the Waymo Open Dataset 3D Camera-Only Detection Challenge. We will present BEVFormer++ at the CVPR 2022 Autonomous Driving Workshop.

- [2022/3/10]: 🚀 BEVFormer achieves SOTA on the nuScenes detection task with 56.9% NDS (camera-only)!

Abstract

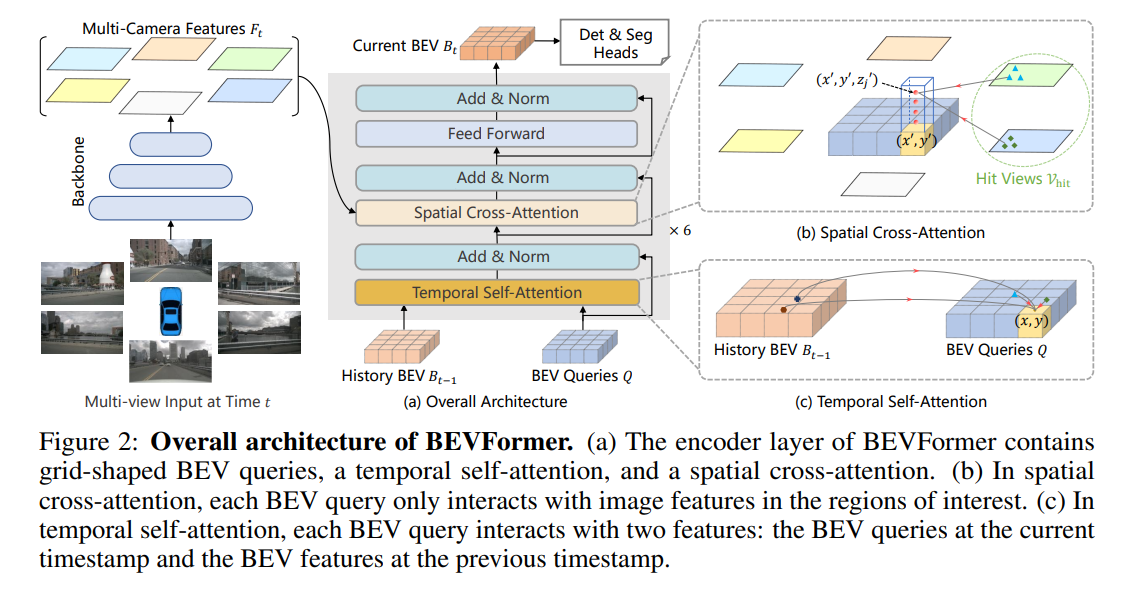

In this work, the authors present BEVFormer, a framework that learns unified BEV representations with spatiotemporal transformers to support multiple autonomous driving perception tasks. In a nutshell, BEVFormer exploits both spatial and temporal information through predefined grid-shaped BEV queries. To aggregate spatial information, the authors design a spatial cross-attention in which each BEV query extracts spatial features from its regions of interest across camera views. For temporal information, they propose a temporal self-attention that recurrently fuses the history BEV information. The approach achieves a new state-of-the-art 56.9% NDS on the nuScenes test set, which is 9.0 points higher than the previous best methods and on par with LiDAR-based baselines.
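To make "grid-shaped BEV queries" and "regions of interest across camera views" more concrete, here is a minimal geometric sketch. It is not the repository's code: the function names, the toy pinhole cameras placed at the ego origin, and all numeric values are assumptions made for illustration. It only shows how a BEV cell can be lifted to a few heights and projected into each camera to find which views can see it. The learned attention itself and the temporal fusion step are left out.

```python
import math


def bev_reference_points(grid_h, grid_w, cell_size):
    """Ego-frame (x, y) centre of every BEV grid cell, in row-major order."""
    points = []
    for row in range(grid_h):
        for col in range(grid_w):
            x = (col - grid_w / 2 + 0.5) * cell_size
            y = (row - grid_h / 2 + 0.5) * cell_size
            points.append((x, y))
    return points


def project_to_camera(point_xyz, yaw, focal, width, height):
    """Project an ego-frame point into a toy pinhole camera at the ego origin.

    The camera looks horizontally along direction `yaw` (radians).
    Returns the pixel (u, v), or None if the point is behind the camera
    or falls outside the image.
    """
    x, y, z = point_xyz
    # Rotate so the viewing direction becomes the depth axis.
    depth = x * math.cos(yaw) + y * math.sin(yaw)
    lateral = -x * math.sin(yaw) + y * math.cos(yaw)
    if depth <= 1e-6:
        return None
    u = width / 2 - focal * lateral / depth   # points to the left get smaller u
    v = height / 2 - focal * z / depth        # higher points get smaller v
    if 0 <= u < width and 0 <= v < height:
        return (u, v)
    return None


def hit_views(cell_xy, heights, cameras):
    """List (camera_name, pixel) pairs where the cell's pillar points land."""
    hits = []
    for name, cam in cameras.items():
        for z in heights:
            pixel = project_to_camera((cell_xy[0], cell_xy[1], z), **cam)
            if pixel is not None:
                hits.append((name, pixel))
    return hits


# Hypothetical two-camera rig; real rigs use calibrated camera matrices.
CAMERAS = {
    "front": {"yaw": 0.0, "focal": 800.0, "width": 1600, "height": 900},
    "back": {"yaw": math.pi, "focal": 800.0, "width": 1600, "height": 900},
}

if __name__ == "__main__":
    grid = bev_reference_points(4, 4, 10.0)
    for cell in grid[:4]:
        views = sorted({name for name, _ in hit_views(cell, [0.0, 1.0], CAMERAS)})
        print(cell, views)
```

In this toy setup a query only needs to attend to the cameras returned by `hit_views`, which is the intuition behind restricting spatial cross-attention to regions of interest rather than to every pixel of every view.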

Methods

Getting Started

Model Zoo

| Backbone | Method | Lr Schd | NDS | mAP | Memory | Config | Download |
|---|---|---|---|---|---|---|---|
| R50 | BEVFormer-tiny_fp16 | 24ep | 35.9 | 25.7 | - | config | model/log |
| R50 | BEVFormer-tiny | 24ep | 35.4 | 25.2 | 6500M | config | model/log |
| R101-DCN | BEVFormer-small | 24ep | 47.9 | 37.0 | 10500M | config | model/log |
| R101-DCN | BEVFormer-base | 24ep | 51.7 | 41.6 | 28500M | config | model/log |
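For readers unfamiliar with the NDS column: the nuScenes detection score combines mAP with five true-positive error metrics (translation, scale, orientation, velocity, attribute) as NDS = (1/10) · [5 · mAP + Σ (1 − min(1, mTP))]. This README does not state the formula; it comes from the nuScenes benchmark definition. The sketch below implements it and, as a small worked example, derives the mean true-positive score implied by each NDS/mAP pair in the table. The helper names are hypothetical, and the derived values are arithmetic consequences of the table, not separately reported results.

```python
def nds(mean_ap, tp_errors):
    """nuScenes detection score from mAP (0..1) and the five mean TP errors."""
    if len(tp_errors) != 5:
        raise ValueError("expected five TP errors: mATE, mASE, mAOE, mAVE, mAAE")
    # Each error is clipped at 1 and converted to a score in [0, 1].
    tp_scores = [1.0 - min(1.0, err) for err in tp_errors]
    return (5.0 * mean_ap + sum(tp_scores)) / 10.0


def implied_mean_tp_score(nds_value, mean_ap):
    """Average TP score implied by an (NDS, mAP) pair on the same scale.

    Rearranging the NDS formula gives mean(TP scores) = 2 * NDS - mAP.
    """
    return 2.0 * nds_value - mean_ap


# (NDS, mAP) in percent, copied from the Model Zoo table.
MODEL_ZOO = {
    "BEVFormer-tiny_fp16": (35.9, 25.7),
    "BEVFormer-tiny": (35.4, 25.2),
    "BEVFormer-small": (47.9, 37.0),
    "BEVFormer-base": (51.7, 41.6),
}

if __name__ == "__main__":
    for name, (nds_pct, map_pct) in MODEL_ZOO.items():
        score = implied_mean_tp_score(nds_pct, map_pct)
        print(f"{name}: implied mean TP score = {score:.1f}")
```

For BEVFormer-base, for instance, this gives 2 × 51.7 − 41.6 = 61.8, so its NDS advantage over the smaller variants reflects lower true-positive errors as well as higher mAP.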

Catalog

- BEV Segmentation checkpoints

- BEV Segmentation code

- 3D Detection checkpoints

- 3D Detection code

- Initialization

Bibtex

If this work is helpful for your research, please consider citing the following BibTeX entry.

@article{li2022bevformer,

title={BEVFormer: Learning Bird’s-Eye-View Representation from Multi-Camera Images via Spatiotemporal Transformers},

author={Li, Zhiqi and Wang, Wenhai and Li, Hongyang and Xie, Enze and Sima, Chonghao and Lu, Tong and Qiao, Yu and Dai, Jifeng},

journal={arXiv preprint arXiv:2203.17270},

year={2022}

}

Acknowledgement

Many thanks to these excellent open source projects: