Official PyTorch implementation of Tag2Text, an efficient and controllable vision-language model with tagging guidance. Code is available now!

Welcome to try out the Tag2Text web demo 🤗! Both tagging and captioning are included.

Tag2Text is now combined with Grounded-SAM, which can automatically recognize, detect, and segment objects in an image! Tag2Text showcases powerful image recognition capabilities:

- 2023/06/05: Prompt-can-anything, a Gradio web library that integrates SOTA multimodal large models and aims to become an "agent" that helps you do anything, includes Tag2Text as the core model for graphic understanding. It covers automatic visual tasks, voice, graphics, GPT, and more. version_1.15 released!

- 2023/05/20: Tag2Text is combined with VideoChat; Tag2Text provides powerful tagging and captioning capabilities as a fundamental component!

- 2023/04/20: We marry Tag2Text with Grounded-SAM to provide powerful image recognition capabilities!

- 2023/04/10: Code and checkpoint are available now!

- 2023/03/14: Tag2Text web demo 🤗 is available on Hugging Face Space!

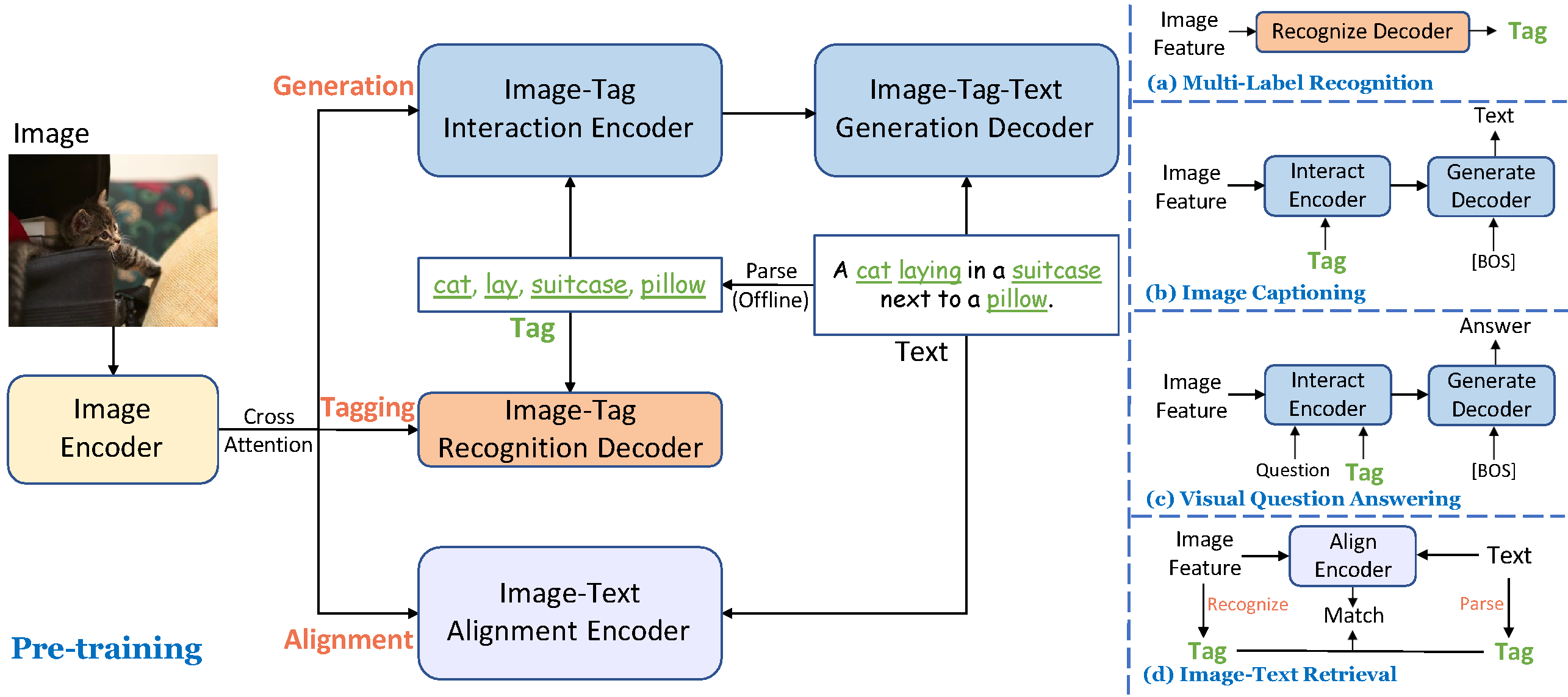

- Tagging. Without manual annotations, Tag2Text achieves superior image tag recognition across 3,429 categories commonly used by humans.

- Efficient. Tagging guidance effectively enhances the performance of vision-language models on both generation-based and alignment-based tasks.

- Controllable. Tag2Text lets users input desired tags, giving them the flexibility to compose corresponding texts based on those tags.


- Release demo.

- Release checkpoints.

- Release inference code.

- Release training code.

- Release training datasets.

| | Name | Backbone | Data | Illustration | Checkpoint |
|---|---|---|---|---|---|
| 1 | Tag2Text-Swin | Swin-Base | COCO, VG, SBU, CC-3M, CC-12M | Demo version with comprehensive captions. | Download link |

- Install the dependencies:

      pip install -r requirements.txt

- Download the Tag2Text pretrained checkpoints.

- Get the tagging and captioning results:

      python inference.py --image images/1641173_2291260800.jpg \
                          --pretrained pretrained/tag2text_swin_14m.pth

  Or get the tagging and specified captioning results (optional):

      python inference.py --image images/1641173_2291260800.jpg \
                          --pretrained pretrained/tag2text_swin_14m.pth \
                          --specified-tags "cloud,sky"
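If you want to run the commands above over several images, a small wrapper can assemble the arguments for you. The following is a minimal sketch, not part of this repository: `format_tags`, `build_command`, and `run_folder` are hypothetical helper names, and the only things assumed about `inference.py` are the three flags shown above (`--image`, `--pretrained`, `--specified-tags`) and the comma-separated tag format.

```python
import subprocess
import sys
from pathlib import Path
from typing import List, Optional, Sequence

# Checkpoint path as used in the commands above.
CHECKPOINT = "pretrained/tag2text_swin_14m.pth"


def format_tags(tags: Sequence[str]) -> str:
    """Join tags into the comma-separated form used above, e.g. "cloud,sky"."""
    cleaned = [t.strip() for t in tags if t.strip()]
    return ",".join(cleaned)


def build_command(image: str,
                  tags: Optional[Sequence[str]] = None,
                  pretrained: str = CHECKPOINT) -> List[str]:
    """Build the argument list for one inference.py call (hypothetical helper)."""
    cmd = [sys.executable, "inference.py",
           "--image", image,
           "--pretrained", pretrained]
    tag_string = format_tags(tags) if tags else ""
    if tag_string:
        # Only pass --specified-tags when at least one non-empty tag is given.
        cmd += ["--specified-tags", tag_string]
    return cmd


def run_folder(folder: str, tags: Optional[Sequence[str]] = None) -> None:
    """Run inference.py once for each .jpg file found in `folder`."""
    for path in sorted(Path(folder).glob("*.jpg")):
        subprocess.run(build_command(str(path), tags), check=True)


# Show the command that would be run for the example image, without running it.
example = build_command("images/1641173_2291260800.jpg", ["cloud", " sky "])
print(" ".join(example[1:]))
```

Calling `run_folder("images", tags=["cloud", "sky"])` from the repository root would then invoke `inference.py` once per image. Note that this starts a new process and reloads the checkpoint for every image, so it is convenient rather than fast.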

If you find our work to be useful for your research, please consider citing.

@article{huang2023tag2text,
  title={Tag2Text: Guiding Vision-Language Model via Image Tagging},
  author={Huang, Xinyu and Zhang, Youcai and Ma, Jinyu and Tian, Weiwei and Feng, Rui and Zhang, Yuejie and Li, Yaqian and Guo, Yandong and Zhang, Lei},
  journal={arXiv preprint arXiv:2303.05657},
  year={2023}
}

This work was done with the help of the amazing BLIP codebase. Thanks very much!

We also want to thank @Cheng Rui @Shilong Liu @Ren Tianhe for their help in marrying Tag2Text with Grounded-SAM.