Automatically modelling and distilling knowledge within AI. In other words, summarising the arXiv firehose: map, categorise, quantify, qualify, filter, search, browse, reduce, digest, compress, summarise and model all knowledge within ML/DL/RL/AI/DS/CS/Stats. And always for the community.

We are showing some of our results on ai-distillery.io.

Server GitHub repo at ai-distillery-app

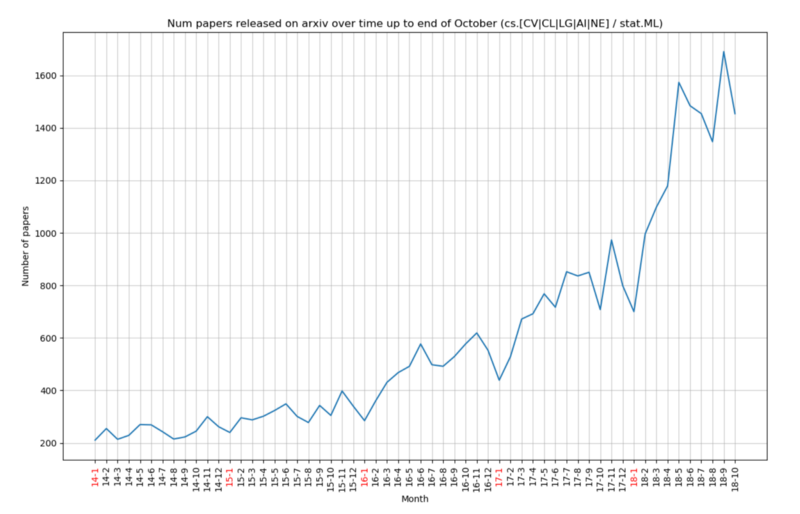

Number of arXiv papers released over time, from January 2014 to November 2018

Please consider using a virtual environment as shown below.

This way, the scripts won't pollute your global $PATH.

git clone https://github.com/ai-distillery

cd ai-distillery

virtualenv venv && source venv/bin/activate # STRONGLY RECOMMENDED

pip install -e .

The package installs a single executable, distill. It can be invoked to apply latent semantic analysis, word2vec, and doc2vec, and to extract named entities.

Consult distill -h for more information on the available subcommands.

We maintain a fork of Karpathy's Arxiv Sanity Preserver to harvest structured metadata as well as full-text data from arXiv.

In the following, we assume that data/db.p holds the database of structured metadata and that the directory data/txt contains the raw <arxiv_Id>.pdf.txt full-text files.

For convenience we have registered our fork of arxiv-sanity-preserver as a submodule. To clone the submodule, issue the following command.

git submodule update --init

Then follow the guide by Karpathy to run the code.

Pass -h to any of the executables for more information on how to run it.

An example call to compute 2-dimensional LSA (latent semantic analysis) vectors for the documents:

distill lsa data/txt/ -n 2 --annotate data/full_paper_id_to_title_dict.pkl -o data/embeddings/lsa-2.pkl

This call assumes that data/txt/ contains *.pdf.txt files.

The -n argument sets the number of components at which the singular value decomposition in LSA is truncated. This is also the embedding dimension.
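To illustrate what truncating the singular value decomposition means, here is a minimal sketch using NumPy on a toy corpus. It is not the code behind distill lsa: the function name lsa_embed, the whitespace tokenisation, and the use of raw term counts are all assumptions made for illustration.

```python
import numpy as np


def lsa_embed(docs, n_components=2):
    """Return (vocabulary, embeddings), with one embedding row per document.

    Hypothetical helper for illustration only, not part of the distill package.
    """
    # Build a plain term-count matrix of shape (documents, vocabulary).
    vocab = sorted({tok for doc in docs for tok in doc.lower().split()})
    index = {tok: j for j, tok in enumerate(vocab)}
    counts = np.zeros((len(docs), len(vocab)))
    for i, doc in enumerate(docs):
        for tok in doc.lower().split():
            counts[i, index[tok]] += 1.0

    # Thin SVD: counts = U @ diag(s) @ Vt, singular values in descending order.
    u, s, _vt = np.linalg.svd(counts, full_matrices=False)

    # Keep only the leading components; their number is the embedding dimension.
    k = min(n_components, len(s))
    return vocab, u[:, :k] * s[:k]


# Toy documents, invented for this example.
docs = [
    "deep learning for image recognition",
    "reinforcement learning for robot control",
    "image recognition with convolutional networks",
]
vocab, embeddings = lsa_embed(docs, n_components=2)
print(embeddings.shape)  # (3, 2)
```

With n_components=2, every document is represented by a 2-dimensional vector, which mirrors the role of -n 2 in the example call.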

The optional --annotate argument supplies a path to a pickled dict which maps identifiers (filenames without .pdf.txt) to titles for visualization.
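A dict of this shape could be prepared roughly as follows. This is a sketch under assumptions: the helper names identifier_from_filename and write_title_dict, the example filename, and the example title are hypothetical and do not come from the package.

```python
import pickle
import tempfile
from pathlib import Path

SUFFIX = ".pdf.txt"


def identifier_from_filename(filename):
    """Strip the directory and the .pdf.txt suffix to obtain the identifier."""
    name = Path(filename).name
    if not name.endswith(SUFFIX):
        raise ValueError(f"expected a {SUFFIX} file, got {name!r}")
    return name[: -len(SUFFIX)]


def write_title_dict(titles_by_filename, out_path):
    """Pickle a dict that maps identifiers to titles (hypothetical helper)."""
    mapping = {
        identifier_from_filename(fname): title
        for fname, title in titles_by_filename.items()
    }
    with open(out_path, "wb") as fh:
        pickle.dump(mapping, fh)
    return mapping


# Hypothetical filename and title, for illustration only.
with tempfile.TemporaryDirectory() as tmp:
    out_path = Path(tmp) / "id_to_title.pkl"
    write_title_dict(
        {"data/txt/0000.00000v1.pdf.txt": "A hypothetical paper title"},
        out_path,
    )
    with open(out_path, "rb") as fh:
        restored = pickle.load(fh)

print(restored)
```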

The output is stored in Ben format: a pickled dict of type {'labels': labels:list(str), 'embeddings': embeddings:numpy.ndarray } such that labels[i] corresponds to embeddings[i].
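Reading such a file back could look like the sketch below. The function load_embeddings is a hypothetical helper, and the demo writes a small stand-in file in which a list of lists takes the place of the numpy.ndarray so that the example runs without extra dependencies. As with any pickle, only load files from sources you trust.

```python
import pickle
import tempfile
from pathlib import Path


def load_embeddings(path):
    """Load a pickled dict with 'labels' and 'embeddings' and check alignment."""
    with open(path, "rb") as fh:
        data = pickle.load(fh)
    labels, embeddings = data["labels"], data["embeddings"]
    # labels[i] must correspond to embeddings[i], so the lengths have to match.
    if len(labels) != len(embeddings):
        raise ValueError("labels and embeddings differ in length")
    return labels, embeddings


# Stand-in data, invented for this example.
with tempfile.TemporaryDirectory() as tmp:
    path = Path(tmp) / "lsa-2.pkl"
    with open(path, "wb") as fh:
        pickle.dump(
            {
                "labels": ["paper-a", "paper-b"],
                "embeddings": [[0.1, 0.2], [0.3, 0.4]],
            },
            fh,
        )
    labels, embeddings = load_embeddings(path)

for label, vector in zip(labels, embeddings):
    print(label, vector)
```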

Make sure to install the aidistillery package with pip install -e . or python3 setup.py develop. This way, any changes take effect without the need to reinstall.

We look forward to receiving your pull requests.