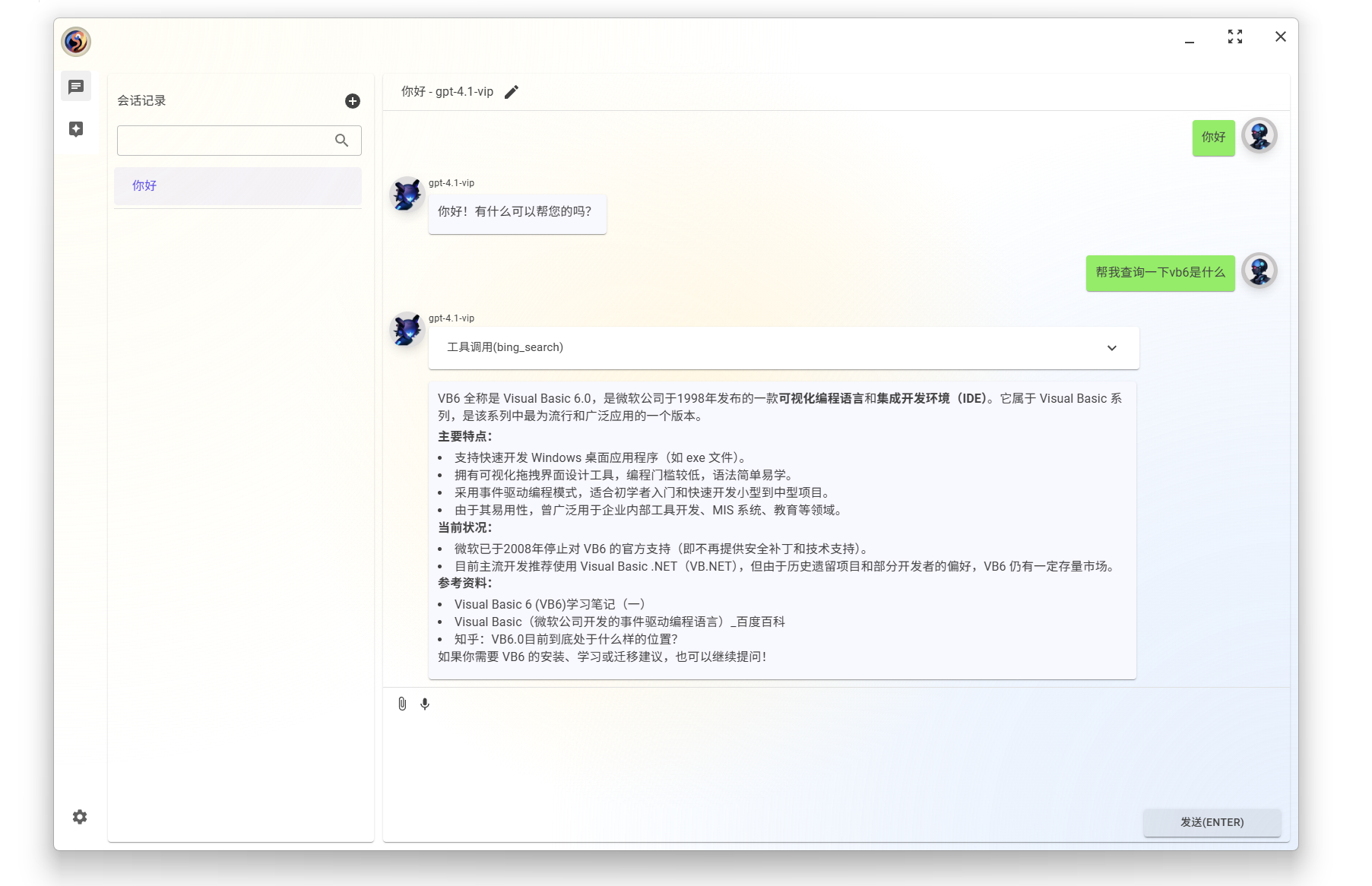

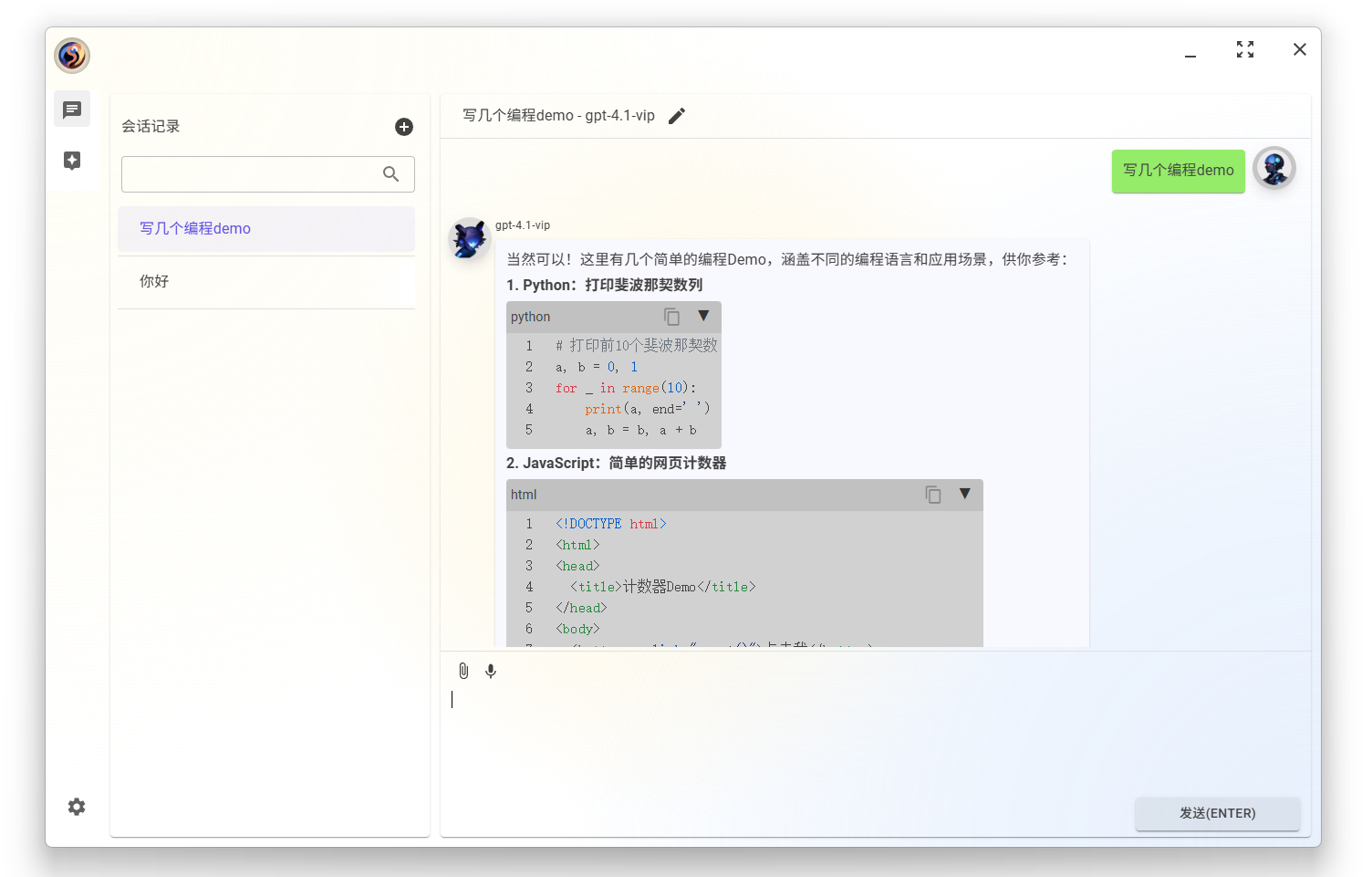

A powerful, cross-platform, and open-source LLM AI client.

Tinvo is a comprehensive, extensible client for a range of AI models, supporting both cloud-based APIs and local inference engines. It runs on desktops, on mobile devices, and directly in the web browser.

- Multi-Provider Support:
  - OpenAI
  - iFlytek (Xunfei)
  - ONNX
  - Ollama
  - Llama Models
  - Model Context Protocol (MCP) tool calls

- Cross-Platform Compatibility:
  - Android
  - iOS
  - Windows
  - macOS
  - Linux
  - Web Server
  - WebAssembly (WASM)

- Storage Support:
  - Local
  - WebDAV

This project is licensed under the MIT License.