PyTorch implementation of "What Uncertainties Do We Need in Bayesian Deep Learning for Computer Vision?", NIPS 2017

# Data Tree

config.data_dir/
└── config.data_name/

# Project Tree

WHAT
├── WHAT_src/
│   ├── data/ *.py
│   ├── loss/ *.py
│   ├── model/ *.py
│   └── *.py
└── WHAT_exp/
    ├── log/
    ├── model/
    └── save/

# L2 loss only (train)

python train.py --uncertainty "normal" --drop_rate 0.

# Epistemic / Aleatoric (train)

python train.py --uncertainty "epistemic"  # or "aleatoric"

# Epistemic + Aleatoric (train)

python train.py --uncertainty "combined"
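As a rough illustration of what separates the `"normal"` mode from the `"aleatoric"` and `"combined"` modes, the sketch below writes out the per-element L2 loss next to the heteroscedastic loss from the paper, where the network predicts a log variance `s = log(sigma^2)` alongside the mean. It uses plain Python scalars so that it runs without PyTorch. The function names are hypothetical and are not taken from `WHAT_src/loss/`; the repository's actual loss code may differ.

```python
import math


def l2_loss(target, mean):
    """Plain squared error for one output element."""
    return (target - mean) ** 2


def aleatoric_loss(target, mean, log_var):
    """Heteroscedastic regression loss for one output element.

    log_var is the predicted log variance s = log(sigma^2). The residual
    is scaled by exp(-s), and the 0.5 * s term penalises the network for
    predicting a large variance everywhere.
    """
    return 0.5 * math.exp(-log_var) * (target - mean) ** 2 + 0.5 * log_var


# With log_var = 0 (unit variance) the loss is half the squared error.
print(aleatoric_loss(1.0, 0.5, 0.0))  # 0.125
```

In a tensor implementation the same expression would be applied element-wise and averaged over pixels and the batch.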

# L2 loss only (test)

python train.py --is_train false --uncertainty "normal"

# Epistemic (test)

python train.py --is_train false --uncertainty "epistemic" --n_samples 25  # or 5, 50

# Aleatoric (test)

python train.py --is_train false --uncertainty "aleatoric"

# Epistemic + Aleatoric (test)

python train.py --is_train false --uncertainty "combined" --n_samples 25  # or 5, 50
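The sketch below shows one way the outputs of several stochastic forward passes could be combined at test time, following the approximation in the paper: epistemic uncertainty is the variance of the sampled means, and aleatoric uncertainty is the average of the predicted variances. It assumes that `--n_samples` sets the number of dropout forward passes, which this README does not state explicitly. The function name `combine_uncertainty` is hypothetical, and the code works on plain Python lists for a single output element so that it runs without PyTorch.

```python
import math


def combine_uncertainty(means, log_vars=None):
    """Aggregate T stochastic forward passes for one output element.

    means:    predicted means, one per dropout sample
    log_vars: predicted log variances, one per sample, or None when the
              model has no variance output (epistemic-only case)
    Returns (predictive_mean, epistemic_var, aleatoric_var).
    """
    n = len(means)
    pred_mean = sum(means) / n
    # Epistemic: spread of the sampled means (population variance).
    epistemic = sum((m - pred_mean) ** 2 for m in means) / n
    # Aleatoric: average of the predicted noise variances exp(s).
    aleatoric = 0.0
    if log_vars is not None:
        aleatoric = sum(math.exp(s) for s in log_vars) / n
    return pred_mean, epistemic, aleatoric


# Two samples that disagree by 2, each predicting unit noise variance.
print(combine_uncertainty([1.0, 3.0], [0.0, 0.0]))  # (2.0, 1.0, 1.0)
```

For the combined mode, the total predictive variance would be the sum of the two returned variances. With tensors, the same arithmetic applies along the sample dimension.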

- Python 3.7
- PyTorch >= 1.0
- Torchvision
- distutils

This is not an official implementation.

- Autoencoder based on Bayesian SegNet
  - Network depth 2 (paper: 5)
  - Drop_rate 0.2 (paper: 0.5)
- Fashion MNIST / MNIST
  - Input = Label (for autoencoder)

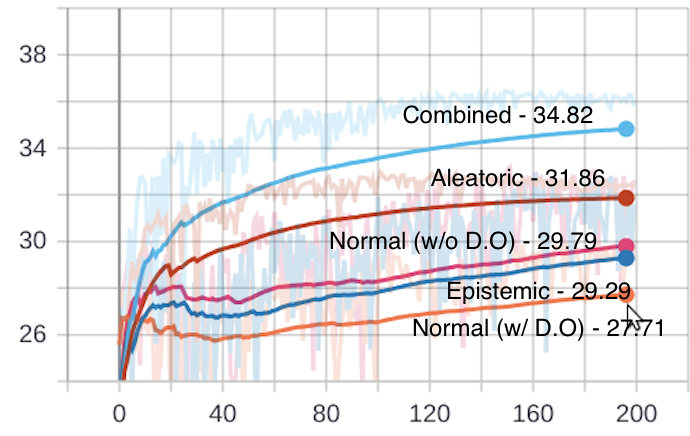

Combined > Aleatoric > Normal (w/o dropout) > Epistemic > Normal (w/ dropout)