Brown university CSCI 2952-O final project.

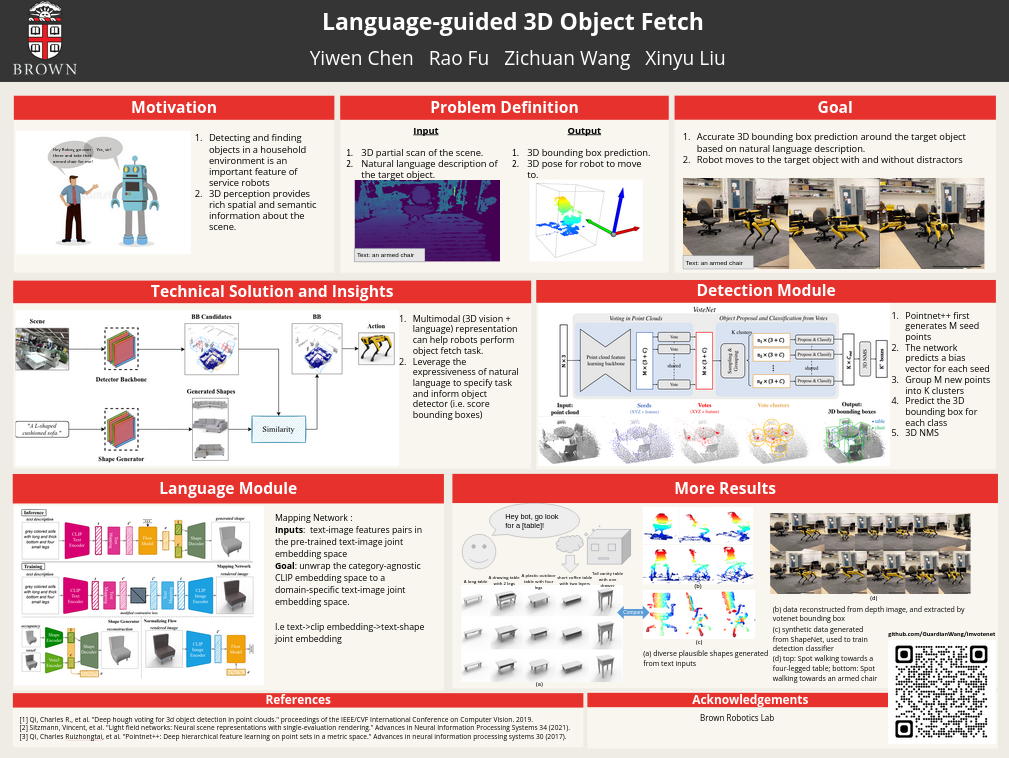

Given a depth map and a text description, we use Spot to detect the object that matches the description in the scene and walk to it.

This repo is based on imvotenet.

- Demo only with 3D object detector

- spot-sdk for Spot API

Clone this repository and submodules:

git clone https://github.com/GuardianWang/imvotenet.git RobotFetchMeIt

cd RobotFetchMeIt

git submodule initWe use conda to create a virtual environment. Because loading the weights of text model requires pytorch>=1.8 and compiling pointnet2 requires pytorch==1.4, we are using two conda environments.

conda create -n fetch python=3.7 -y

conda create -n torch18 python=3.7 -yOverall, the installation is similar to VoteNet. GPU is required. The code is tested with Ubuntu 18.04, Python 3.7.13, PyTorch 1.4.0, CUDA 10.0, cuDNN v7.4, and NVIDIA GeForce RTX 2080.

Before installing Pytorch, make sure the machine has GPU on it. Check by nvidia-smi.

We need GPU to compile PointNet++.

First install PyTorch,

for example through Anaconda:

Find cudatoolkit and cudnn version match here.

Find how to let nvcc detect a specific cuda version here.

conda activate fetch

conda install pytorch==1.4.0 torchvision==0.5.0 cudatoolkit=9.2 cudnn=7.6.5 -c pytorch

pip install matplotlib opencv-python plyfile tqdm networkx==2.2 trimesh==2.35.39 protobuf

pip install open3d

pip install bosdyn-client bosdyn-mission bosdyn-choreography-client

conda activate torch18

conda install pytorch==1.8.0 torchvision==0.9.0 cudatoolkit=10.2 cudnn=7.6.5 -c pytorch

pip install ordered-set

pip install tqdm

pip install pandasThe code depends on PointNet++ as a backbone, which needs compilation. We need to compile by the arch of GPU we want to use.

conda activate fetch

cd pointnet2

TORCH_CUDA_ARCH_LIST="7.5" python setup.py install

cd ..- VoteNet weights (12 MB): checkpoint.tar

- Text model weights (1.4 GB)

- Text features (500 MB)

bash download.sh

mv checkpoint.pth TextCondRobotFetch

unzip subdataset.zip -d <path to unzip>In terminal1, run

conda activate fetch

export ROBOT_IP=<your spot ip>

python estop_nogui.py $ROBOT_IPYou can force stop the spot by pressing <space>

In terminal2, run

conda activate fetch

export ROBOT_IP=<your spot ip>

export BOSDYN_CLIENT_USERNAME=<spot username>

export BOSDYN_CLIENT_PASSWORD=<spot password>

export BOSDYN_DOCK_ID=<spot dock id>

python predict_example.py --checkpoint_path checkpoint.tar --dump_dir pred_votenet --cluster_sampling seed_fps --use_3d_nms --use_cls_nms --per_class_proposal --time_per_move=5 --username $BOSDYN_CLIENT_USERNAME --password $BOSDYN_CLIENT_PASSWORD --dock_id $BOSDYN_DOCK_ID $ROBOT_IPIn terminal3, run

conda activate torch18

export ROBOT_IP=<your spot ip>

python shape_inference.py --checkpoint TextCondRobotFetch/checkpoint.pthYou can specify the text and corresponding pre-extracted features by changing latent_id parameter passed to pred_shape()

in shape_inference.py::pred_shape.

Texts and features are stored in TextCondRobotFetch/embeddings

You can specify the path to subdataset in the folder parameter passed in shape_inference.py::get_partial_scans.

Read imvotenet to see how to train the detector.

The code is released under the MIT license.

- [] subdataset

- [] zip shared by Rao