SimTSC

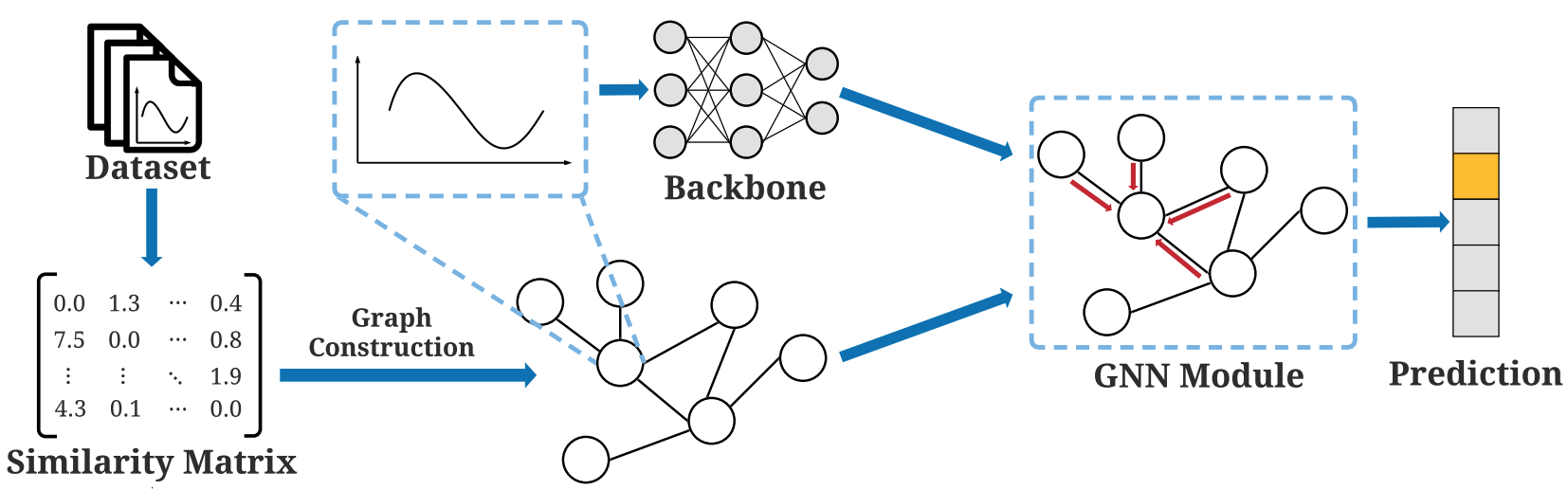

This is the PyTorch implementation of the SDM 2022 paper "Towards Similarity-Aware Time-Series Classification". We propose Similarity-Aware Time-Series Classification (SimTSC), a conceptually simple and general framework that models similarity information with graph neural networks (GNNs). We formulate time-series classification as a node classification problem on graphs, where nodes correspond to time series and edges correspond to pairwise similarities.
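To make the node-classification view concrete, here is a minimal NumPy sketch of one graph-convolution step over a similarity graph. It uses the generic GCN propagation rule of Kipf and Welling purely as an illustration; the function names are hypothetical, and the GNN layer, normalization, and feature backbone actually used in SimTSC may differ. In node classification the loss is typically computed only on labeled (training) nodes, while unlabeled nodes still influence predictions through message passing.

```python
import numpy as np


def normalize_adjacency(adj: np.ndarray) -> np.ndarray:
    """Symmetrically normalize A + I, as in a standard GCN (illustrative)."""
    a_hat = adj + np.eye(adj.shape[0])       # add self-loops so every node keeps its own signal
    deg = a_hat.sum(axis=1)                  # weighted node degrees (always > 0 thanks to self-loops)
    d_inv_sqrt = 1.0 / np.sqrt(deg)
    return a_hat * d_inv_sqrt[:, None] * d_inv_sqrt[None, :]  # D^-1/2 (A + I) D^-1/2


def gcn_layer(adj_norm: np.ndarray, features: np.ndarray, weight: np.ndarray) -> np.ndarray:
    """One propagation step: each node mixes its own and its neighbours' features, then applies ReLU."""
    return np.maximum(adj_norm @ features @ weight, 0.0)


# Toy usage: 3 time series (nodes); series 0 and 1 are linked by a similarity edge.
adj = np.array([[0.0, 1.0, 0.0],
                [1.0, 0.0, 0.0],
                [0.0, 0.0, 0.0]])
features = np.random.default_rng(0).normal(size=(3, 2))  # e.g. per-series embeddings
weight = np.random.default_rng(1).normal(size=(2, 4))    # learnable layer weights
out = gcn_layer(normalize_adjacency(adj), features, weight)
print(out.shape)  # (3, 4)
```

Because nodes 0 and 1 end up with identical normalized neighbourhoods in this toy graph, they receive identical outputs, which is the smoothing effect that lets similar series share label information.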

Installation

pip3 install -r requirements.txt

Datasets

We provide an example dataset, Coffee, in this repo. You may download the full UCR datasets here. The multivariate datasets are provided at this link.

Quick Start

We use Coffee as an example to show how to run the code. You can easily try other datasets with the --dataset argument. Below we show how to obtain the results for DTW+1NN, ResNet, and SimTSC.

First, prepare the dataset with

python3 create_dataset.py

Then install the Python wrapper of the UCR DTW library with

git clone https://github.com/daochenzha/pydtw.git

cd pydtw

pip3 install -e .

cd ..

Then compute the DTW matrix for Coffee with

python3 create_dtw.py
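create_dtw.py relies on the pydtw wrapper. The following pure-NumPy sketch only illustrates what a pairwise DTW matrix contains: it is a plain O(nm) dynamic program with no warping window or lower-bound pruning, so it is much slower than the UCR DTW library and is not guaranteed to reproduce its numbers. The names dtw_distance and dtw_matrix are hypothetical.

```python
import numpy as np


def dtw_distance(x, y) -> float:
    """Unconstrained DTW between two series of shape (T,) or (T, channels).

    Accumulates the squared Euclidean distance between aligned time steps and
    returns the square root of the total alignment cost.
    """
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    x = x.reshape(x.shape[0], -1)  # univariate series become (T, 1)
    y = y.reshape(y.shape[0], -1)
    n, m = len(x), len(y)
    cost = np.full((n + 1, m + 1), np.inf)
    cost[0, 0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            local = np.sum((x[i - 1] - y[j - 1]) ** 2)  # squared Euclidean cost of aligning step i-1 with j-1
            # extend the cheapest of the three allowed predecessor alignments
            cost[i, j] = local + min(cost[i - 1, j], cost[i, j - 1], cost[i - 1, j - 1])
    return float(np.sqrt(cost[n, m]))


def dtw_matrix(series) -> np.ndarray:
    """Symmetric pairwise DTW matrix with a zero diagonal."""
    n = len(series)
    dist = np.zeros((n, n))
    for i in range(n):
        for j in range(i + 1, n):
            dist[i, j] = dist[j, i] = dtw_distance(series[i], series[j])
    return dist


a = np.array([0.0, 1.0, 2.0])
b = np.array([0.0, 1.0, 1.0, 2.0])
c = np.array([5.0, 5.0, 5.0])
print(dtw_distance(a, b))  # 0.0: b is a time-warped copy of a
D = dtw_matrix([a, b, c])
print(D.shape)  # (3, 3)
```

A matrix like D could then be stored with np.save for later reuse, which is the role the precomputed DTW files play in this pipeline.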

- For DTW+1NN:

python3 train_knn.py

- For ResNet:

python3 train_resnet.py

- For SimTSC:

python3 train_simtsc.py

All logs are saved in the logs/ folder.

Multivariate Datasets Quick Start

- Download the datasets and the pre-computed DTW matrices with this link.

- Unzip the file and put it into the datasets/ folder.

- Prepare the datasets with

python3 create_dataset.py --dataset CharacterTrajectories

- For DTW+1NN:

python3 train_knn.py --dataset CharacterTrajectories

- For ResNet:

python3 train_resnet.py --dataset CharacterTrajectories

- For SimTSC:

python3 train_simtsc.py --dataset CharacterTrajectories

Descriptions of the Files

create_dataset.py is a script that preprocesses a dataset and saves it in .npy format. Some important hyperparameters are as follows.

- --dataset: which dataset to process
- --shot: how many training labels are given in each class
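As an illustration of what a per-class --shot budget means, here is a minimal sketch assuming the labels are a 1-D integer array. Uniform sampling without replacement and the function name sample_shots are assumptions; create_dataset.py may select its labeled samples differently.

```python
import numpy as np


def sample_shots(labels, shot: int, seed: int = 0) -> np.ndarray:
    """Pick `shot` labeled indices per class (all of them if a class is smaller)."""
    rng = np.random.default_rng(seed)
    labels = np.asarray(labels)
    chosen = []
    for c in np.unique(labels):
        idx = np.flatnonzero(labels == c)  # positions of class c
        k = min(shot, len(idx))            # never ask for more samples than exist
        chosen.extend(rng.choice(idx, size=k, replace=False).tolist())
    return np.sort(np.array(chosen, dtype=int))


labels = np.array([0, 0, 0, 1, 1, 2])
idx = sample_shots(labels, shot=2)
print(np.bincount(labels[idx]))  # [2 2 1]
```

The remaining training series stay in the dataset without labels, which is where a similarity graph can still propagate information to them.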

create_dtw.py is a script that calculates pairwise DTW distances of a dataset and saves them in .npy format. Some important hyperparameters are as follows.

- --dataset: which dataset to process

train_knn.py is a script that classifies a dataset with DTW+1NN. Some important hyperparameters are as follows.

- --dataset: which dataset to operate on
- --shot: how many training labels are given in each class
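Given a precomputed distance matrix, DTW+1NN reduces to a nearest-neighbour lookup. The sketch below assumes the matrix covers all series (train and test) in one shared index space; that layout and the name one_nn_predict are assumptions, not the repository's actual file format.

```python
import numpy as np


def one_nn_predict(dist, train_idx, test_idx, labels) -> np.ndarray:
    """Label each test series with the label of its nearest training series.

    dist: (N, N) pairwise distance matrix over all series (train and test).
    """
    labels = np.asarray(labels)
    sub = dist[np.ix_(test_idx, train_idx)]                  # test-to-train distances only
    nearest = np.asarray(train_idx)[np.argmin(sub, axis=1)]  # map column positions back to series ids
    return labels[nearest]


dist = np.array([[0.0, 3.0, 1.0, 4.0],
                 [3.0, 0.0, 5.0, 2.0],
                 [1.0, 5.0, 0.0, 6.0],
                 [4.0, 2.0, 6.0, 0.0]])
labels = np.array([0, 1, 0, 1])
train_idx, test_idx = [0, 1], [2, 3]
pred = one_nn_predict(dist, train_idx, test_idx, labels)
print(pred)                                    # [0 1]
print(np.mean(pred == labels[test_idx]))       # 1.0
```

Only labeled training series are used as candidate neighbours, so with a small --shot the classifier sees few reference series per class.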

train_resnet.py is a script that classifies a dataset with ResNet. Some important hyperparameters are as follows.

- --dataset: which dataset to operate on
- --shot: how many training labels are given in each class
- --gpu: which GPU to use

train_simtsc.py is a script that classifies a dataset with SimTSC. Some important hyperparameters are as follows.

- --dataset: which dataset to operate on
- --shot: how many training labels are given in each class
- --gpu: which GPU to use
- --K: number of neighbors per node in the constructed graph
- --alpha: the scaling factor of the weights of the constructed graph
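The roles of --K and --alpha can be illustrated with a minimal NumPy sketch that builds a K-nearest-neighbour graph from a pairwise DTW matrix. The exponential weighting exp(-alpha * d) is an assumption chosen for illustration, and build_knn_graph is a hypothetical name; train_simtsc.py may weight or symmetrize edges differently.

```python
import numpy as np


def build_knn_graph(dist: np.ndarray, k: int, alpha: float) -> np.ndarray:
    """Keep each node's k nearest neighbours; weight edges by exp(-alpha * d) (assumed form).

    Larger alpha makes edge weights decay faster as distance grows.
    """
    n = dist.shape[0]
    adj = np.zeros((n, n), dtype=float)
    for i in range(n):
        order = np.argsort(dist[i])           # nodes sorted by distance to node i
        neighbours = order[order != i][:k]    # drop the node itself, keep the k closest
        adj[i, neighbours] = np.exp(-alpha * dist[i, neighbours])
    return adj


dist = np.array([[0.0, 1.0, 3.0],
                 [1.0, 0.0, 2.0],
                 [3.0, 2.0, 0.0]])
adj = build_knn_graph(dist, k=1, alpha=1.0)
print(np.count_nonzero(adj, axis=1))  # [1 1 1]
```

The resulting graph is directed (node 2 points to node 1, but not vice versa); one common option, if an undirected graph is wanted, is np.maximum(adj, adj.T).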