Is it possible to bypass the `--full-sort` option if we assign timestamps in MACROS

ztlevi opened this issue · comments

Hi,

First, thanks for the great help all the time!

Problem description

In our case, the trace file is generated after the fact rather than with a hook-based approach. This means we pass timestamps to the macros explicitly, e.g. TRACE_COUNTER(counter_category, counter_track, timestamp, val);.

Currently, I use the set_write_into_file option. But because we're not using a hook-based approach, options like set_file_write_period_ms do not work, and the events are not fully sorted. Within each track's data the events are sorted, but we emit the tracks one after another, so the events are not sorted across tracks.

I'm flushing the trace manually with tracing_session->FlushBlocking(flush_timeout); every couple of thousand TRACE_EVENT calls.

I'm getting the sorting errors below when I don't pass the --full-sort option.

    name                            idx     source    value
    ------------------------------  ------  --------  -------
    counter_events_out_of_order     [NULL]  analysis  393689
    slice_out_of_order              [NULL]  analysis  9178010
    sorter_push_event_out_of_order  [NULL]  trace     9571771

Reason

The reason we want to avoid using --full-sort with trace_processor is that it takes a lot of memory.

IMHO, I don't know why they need to be sorted. Shouldn't the Sqlite3 database index the timestamp and kind of sort events when inserting them into the database?

Possible solution

One method I can think of is to use a priority queue to merge the K sorted per-track arrays. But that would change our API completely.
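A minimal sketch of that K-way merge, assuming each track's events are already held in memory as a vector sorted by timestamp. The `Event` struct and `MergeTracks` function are hypothetical names for illustration and are not part of the Perfetto SDK; the merged output is what would then be fed to the trace macros in order.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <queue>
#include <vector>

// Hypothetical event record: one sample on one track.
struct Event {
  uint64_t ts;    // timestamp that would be passed explicitly to the macro
  size_t track;   // identifier of the track the event belongs to
  int64_t value;  // counter value
};

// Merges per-track event lists (each already sorted by ts) into a single
// list ordered by ts. Ties are broken by track index so output is stable.
std::vector<Event> MergeTracks(const std::vector<std::vector<Event>>& tracks) {
  // A cursor points at the next unread event of one track.
  struct Cursor {
    uint64_t ts;
    size_t track;
    size_t pos;
  };
  // std::priority_queue is a max-heap, so "later" events compare lower.
  auto later = [](const Cursor& a, const Cursor& b) {
    return a.ts != b.ts ? a.ts > b.ts : a.track > b.track;
  };
  std::priority_queue<Cursor, std::vector<Cursor>, decltype(later)> heap(later);

  size_t total = 0;
  for (size_t t = 0; t < tracks.size(); ++t) {
    total += tracks[t].size();
    if (!tracks[t].empty()) heap.push({tracks[t][0].ts, t, 0});
  }

  std::vector<Event> out;
  out.reserve(total);
  while (!heap.empty()) {
    Cursor c = heap.top();
    heap.pop();
    out.push_back(tracks[c.track][c.pos]);
    size_t next = c.pos + 1;
    if (next < tracks[c.track].size()) {
      // Precondition: every input track is sorted by ts.
      assert(tracks[c.track][next].ts >= c.ts);
      heap.push({tracks[c.track][next].ts, c.track, next});
    }
  }
  return out;
}
```

The heap holds at most one cursor per track, so the merge costs O(N log K) for N events over K tracks. If the per-track data can be read lazily, the same cursor idea would let events be emitted one at a time instead of materializing the merged vector.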

Am I right about the --full-sort option? Is there another way to avoid needing it?

Context

I'm on the Perfetto v24.2 branch.

Thanks,

Not sure what you mean by "hook based approach", but I guess you want to control the timing of reads.

Note that Flush is not enough. Flush just causes producer processes to commit all the data in the ring buffer, but does not cause the ring buffer to be written into the file. That takes an internal ReadBuffers call.

I don't know how you would achieve that in your case, though. If you set set_write_into_file but don't want to use set_file_write_period_ms (why? what's the rationale for that?), you have no other way to control when that happens.

Issuing a ReadBuffers IPC won't work: it is designed to return data via IPC, and it will refuse to work when the trace has write_into_file=true, because you can't do IPC reads on a trace that is set up to write into a file.

> IMHO, I don't know why they need to be sorted.

Because a lot of code and optimizations internally assume table data is sorted, to avoid post-facto sorting at query time, which would be expensive.

> Shouldn't the Sqlite3 database index the timestamp and kind of sort events when inserting them into the database?

No, for many reasons:

- We don't use the standard sqlite in-memory tables. Those are too expensive/slow, as they are tuple-based. We have our own column-oriented in-memory storage, which allows us to have no indices at all (we create indexes on the fly based on columns).

- But then some columns (e.g. ts) are assumed to be sorted, because re-sorting them in a large trace is too expensive and requires reshuffling all the data. We will never go there; there is no reason to go there.

- It's not just about SQL queries. A lot of events need to be seen in order by the importer, as often some events (e.g. clock snapshots) are required to interpret or add semantics to events that come after. If we didn't rely on this, we'd have to do multi-pass imports on the trace, but that's too much.

- If it takes too much memory, enable swap. That's literally what swap is for.
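To illustrate the second point, here is a rough sketch of why a sorted ts column removes the need for an index. It is not trace processor's actual storage code; `RowsInTimeRange` and the plain `std::vector` column are assumptions made for the example. With the column sorted, a time-range filter reduces to two binary searches and returns a contiguous row range.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <utility>
#include <vector>

// Returns the half-open row range [first, last) whose timestamps fall in
// [begin_ts, end_ts). Requires ts_column to be sorted in ascending order.
std::pair<size_t, size_t> RowsInTimeRange(const std::vector<int64_t>& ts_column,
                                          int64_t begin_ts,
                                          int64_t end_ts) {
  // The whole approach relies on this invariant; without it the binary
  // searches below would return meaningless positions.
  assert(std::is_sorted(ts_column.begin(), ts_column.end()));

  // First row with ts >= begin_ts.
  auto first = std::lower_bound(ts_column.begin(), ts_column.end(), begin_ts);
  // First row at or after `first` with ts >= end_ts.
  auto last = std::lower_bound(first, ts_column.end(), end_ts);

  return {static_cast<size_t>(first - ts_column.begin()),
          static_cast<size_t>(last - ts_column.begin())};
}
```

On an unsorted column the same filter would need either a full scan per query or a separately maintained index, which is the cost the sorted-on-import design avoids.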

Thanks for the reply!

> hook based approach

This refers to how the events are triggered. When the macros are used in the usual way, each call records the current time at the moment it is triggered, so it behaves like a hook.

> I don't know how you would achieve that in your case though, if you set set_write_into_file but then don't want to use set_file_write_period_ms (why? what's the rationale for that) you don't have other ways to control when that should happen.

I set all the options like this, but it turns out I still need --full-sort.

    cfg.set_write_into_file(true);
    cfg.set_file_write_period_ms((uint32_t)1000);
    cfg.set_flush_period_ms((uint32_t)1000);

I assume that period_ms is based on the current time, so if we manually pass timestamps to the trace macros, e.g. TRACE_COUNTER(counter_category, counter_track, timestamp, val);, file_write_period_ms won't work as we expected.

I created a minimal reproducible example here. This explains our use case.

- Without

tracing_session->FlushBlocking(flush_timeout), it gives metraced_buf_trace_writer_packet_loss. - With

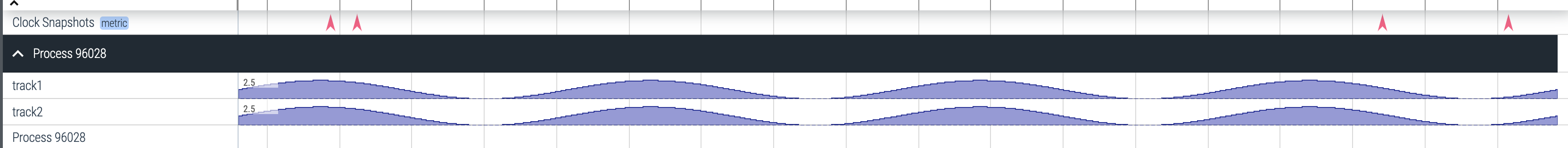

FlushBlocking, I can get a track like the image below. But I'll getcounter_events_out_of_order,sorter_push_event_out_of_orderif--full-sortis not passed.

As file_write_period_ms does not work as we expected, what API should I call to manually trigger the internal ReadBuffers call?

The simple fact of the matter is that you are using the concept of "Flush" in a way which is orthogonal to how Perfetto expects it.

A Flush in Perfetto terms means: push all data which was emitted before the flush timestamp to the central buffers. If you're faking events, this essentially means that after you flush, you should *never* emit timestamps which are lower than the max timestamp before the flush.

All the trace processor incremental sorting logic depends on this invariant holding: otherwise we have no choice but to sort the trace. No matter how you call the APIs or what you do, if you don't respect this invariant, we cannot incrementally sort.
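One way to check this invariant on the producer side before the trace ever reaches trace processor is sketched below. `FlushInvariantChecker` is a hypothetical helper, not a Perfetto API; it assumes the caller invokes `OnEvent` for every explicitly timestamped event and `OnFlush` right after each flush (for example after `FlushBlocking` returns).

```cpp
#include <algorithm>
#include <cassert>
#include <cstdint>

// Hypothetical helper, not part of the Perfetto SDK. Tracks whether any
// event emitted after a flush has a timestamp lower than the maximum
// timestamp emitted before that flush.
class FlushInvariantChecker {
 public:
  // Call for each event about to be emitted with an explicit timestamp.
  // Returns false if the event would break the invariant.
  bool OnEvent(uint64_t ts) {
    bool ok = ts >= watermark_;
    if (!ok) ++violations_;
    max_seen_ = std::max(max_seen_, ts);
    return ok;
  }

  // Call right after each flush completes. Everything emitted so far is
  // now "before the flush", so the watermark moves up to the max seen.
  void OnFlush() {
    assert(max_seen_ >= watermark_);  // the watermark never moves backwards
    watermark_ = max_seen_;
  }

  uint64_t watermark() const { return watermark_; }
  uint64_t violations() const { return violations_; }

 private:
  uint64_t max_seen_ = 0;    // max timestamp over all events so far
  uint64_t watermark_ = 0;   // max timestamp emitted before the last flush
  uint64_t violations_ = 0;  // events that fell below the watermark
};
```

Emitting one whole track and then the next, with flushes in between, trips this check as soon as the second track starts, since its first timestamps fall below the watermark left by the first track. Events emitted in global timestamp order never trip it.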

One thing I am working on is reducing the amount of memory a full sort takes, as we're hitting limits on this ourselves for traces where we do not use write_into_file at all. This is an ideal project for a new starter, so I won't be doing the work myself, but I'll oversee it when possible.

As of now, I don't think there's anything we can do in this bug, so I'm going to close it.

Thanks for the clarification.

One option on our end is to merge the K sorted lists, since the events inside each track are already sorted.

👍