This repository contains the implementation code for the paper Data-Efficient Graph Grammar Learning for Molecular Generation (ICLR 2022 oral).

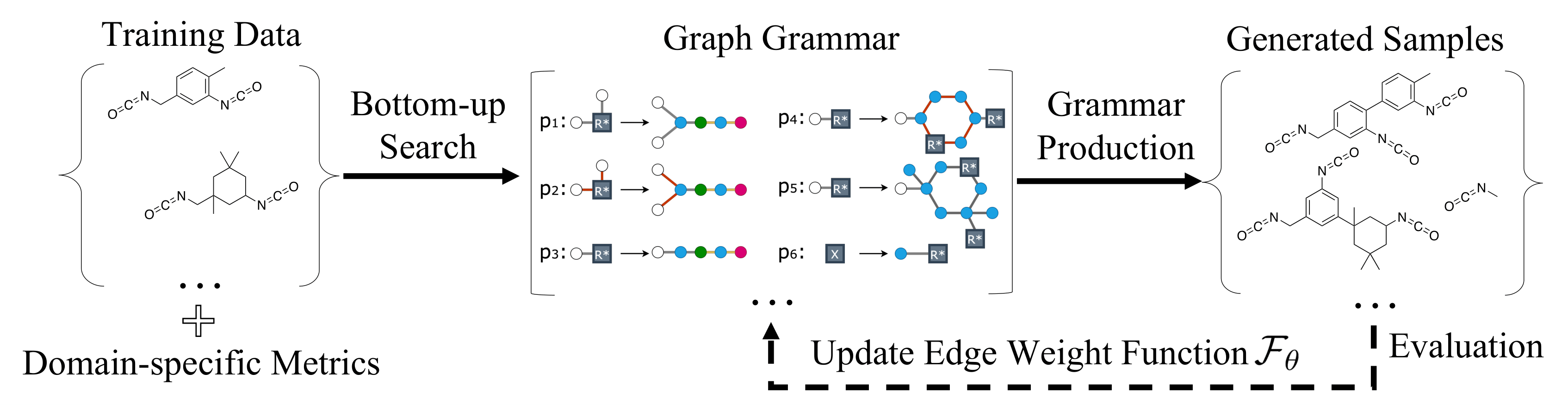

In this work, we propose a data-efficient generative model (DEG) that can be learned from datasets that are orders of magnitude smaller than common benchmarks. At the heart of this method is a learnable graph grammar that generates molecules from a sequence of production rules. Our learned graph grammar yields state-of-the-art results on generating high-quality molecules for three monomer datasets that contain only ∼20 samples each.

- Retro*: The training of DEG relies on Retro* to calculate the metric. Follow the instructions here to install it.

- Pretrained GNN: We use this codebase for the pretrained GNN used in our paper. The necessary code and pretrained models are included in this repo.

You can use conda to install the dependencies for DEG from the provided environment.yml file, which reproduces the exact Python environment we used to run the code for the paper:

git clone git@github.com:gmh14/data_efficient_grammar.git

cd data_efficient_grammar

conda env create -f environment.yml

conda activate DEG

pip install -e retro_star/packages/mlp_retrosyn

pip install -e retro_star/packages/rdchiral

Note: it may take a decent amount of time to build the necessary wheels using conda.
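After the environment is built, a quick import check can confirm that the key packages are visible to Python. The following is a minimal sketch, not part of the repository: the module names in `ASSUMED_MODULES` are assumptions for illustration (the import names for the two `pip install -e` packages are guessed from their directory names), so adjust them to whatever `environment.yml` actually installs.

```python
import importlib.util

# Assumed top-level module names (hypothetical); adjust to match environment.yml.
ASSUMED_MODULES = ["rdkit", "torch", "mlp_retrosyn", "rdchiral"]


def check_modules(names):
    """Return a dict mapping each top-level module name to True if it is importable."""
    status = {}
    for name in names:
        try:
            status[name] = importlib.util.find_spec(name) is not None
        except (ImportError, ValueError):
            # find_spec can raise for modules in an unusual state; treat as missing.
            status[name] = False
    return status


if __name__ == "__main__":
    for name, ok in check_modules(ASSUMED_MODULES).items():
        print(f"{name:15s} {'found' if ok else 'MISSING'}")
```

Run it inside the activated `DEG` environment; any module reported as missing suggests that the corresponding install step did not complete.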

- Download and unzip the files from this link, and put all the folders (`dataset/`, `one_step_model/`, and `saved_models/`) under the `retro_star` directory.

- Install dependencies:

conda deactivate

conda env create -f retro_star/environment.yml

conda activate retro_star_env

pip install -e retro_star/packages/mlp_retrosyn

pip install -e retro_star/packages/rdchiral

pip install setproctitle

For Acrylates, Chain Extenders, and Isocyanates,

conda activate DEG

python main.py --training_data=./datasets/**dataset_path**

where **dataset_path** can be `acrylates.txt`, `chain_extenders.txt`, or `isocyanates.txt`.
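To train on all three monomer datasets in turn, the command above can be wrapped in a small loop. This is only a convenience sketch under stated assumptions: it is run from the repository root with the `DEG` environment active, and the helper names `build_command` and `run_all` are hypothetical, not part of the repository. Only the `--training_data` flag and the three file names come from this README.

```python
import subprocess

# Dataset file names as listed in this README.
DATASETS = ["acrylates.txt", "chain_extenders.txt", "isocyanates.txt"]


def build_command(dataset_file, python_exe="python"):
    """Build the training command (as an argument list) for one dataset file."""
    return [python_exe, "main.py", f"--training_data=./datasets/{dataset_file}"]


def run_all(datasets=DATASETS, dry_run=True):
    """Print each training command (dry_run=True) or execute them one after another."""
    commands = [build_command(name) for name in datasets]
    for cmd in commands:
        if dry_run:
            print(" ".join(cmd))
        else:
            # check=True stops the loop if a training run exits with an error.
            subprocess.run(cmd, check=True)
    return commands


if __name__ == "__main__":
    run_all(dry_run=True)
```

With `dry_run=True` the script only prints the commands, which is a cheap way to confirm the paths before starting long training runs. Training also depends on the Retro* processes described in this README being available.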

For the Polymer dataset,

conda activate DEG

python main.py --training_data=./datasets/polymers_117.txt --motif

Since Retro* is a major bottleneck for training speed, we separate it from the main process, run multiple Retro* processes, and use file communication to evaluate the generated grammar during training. This is a workaround for the inefficiency of Python's built-in multiprocessing package. Run the following command in another terminal window:

conda activate retro_star_env

bash retro_star_listener.sh **num_processes**

Note: running multiple Retro* processes is EXTREMELY memory-consuming (~5 GB each). We suggest starting with a single process via `bash retro_star_listener.sh 1`, monitoring the memory usage, and then increasing the number accordingly to maximize efficiency. We use `35` in the paper.
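The idea of letting a main process and separate listener processes communicate through files can be pictured with the sketch below. It is a generic illustration of the pattern, not the repository's actual protocol: the `.req`/`.res` file extensions, the directory layout, and the function names `submit`, `serve_once`, and `collect` are all hypothetical, and `evaluate` stands in for a Retro* call.

```python
import os
import tempfile
import uuid


def write_atomic(path, text):
    """Write to a temporary file, then rename, so readers never see partial content."""
    tmp_path = f"{path}.{uuid.uuid4().hex}.tmp"
    with open(tmp_path, "w") as f:
        f.write(text)
    os.replace(tmp_path, path)


def submit(request_dir, payload):
    """Main process: drop one request file and return its job id."""
    job_id = uuid.uuid4().hex
    write_atomic(os.path.join(request_dir, f"{job_id}.req"), payload)
    return job_id


def serve_once(request_dir, result_dir, evaluate):
    """Listener: claim each pending request, evaluate it, and write a result file."""
    handled = 0
    for name in sorted(os.listdir(request_dir)):
        if not name.endswith(".req"):
            continue  # skips temporary and already-claimed files
        src = os.path.join(request_dir, name)
        claimed = src + ".claimed"
        try:
            os.rename(src, claimed)  # claim the request by renaming it
        except OSError:
            continue  # the request vanished, e.g. another listener took it
        with open(claimed) as f:
            payload = f.read()
        job_id = name[: -len(".req")]
        write_atomic(os.path.join(result_dir, f"{job_id}.res"), str(evaluate(payload)))
        os.remove(claimed)
        handled += 1
    return handled


def collect(result_dir, job_id):
    """Main process: return the result text if it is ready, otherwise None."""
    path = os.path.join(result_dir, f"{job_id}.res")
    if not os.path.exists(path):
        return None
    with open(path) as f:
        return f.read()


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as root:
        req, res = os.path.join(root, "req"), os.path.join(root, "res")
        os.makedirs(req)
        os.makedirs(res)
        job = submit(req, "C=CC(=O)OC")
        serve_once(req, res, evaluate=len)  # len is a stand-in for the real metric
        print(collect(res, job))
```

In a real setup each listener would call something like `serve_once` in a polling loop from its own process, which is what allows the number of listeners to be scaled independently of the trainer.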

After finishing the training, to kill all the generated processes related to Retro*, run

killall retro_star_listener

Download and unzip the log and checkpoint files from this link. See `visualization.ipynb` for more details.

The implementation of DEG is partly based on Molecular Optimization Using Molecular Hypergraph Grammar and Hierarchical Generation of Molecular Graphs using Structural Motifs.

If you find the idea or code useful for your research, please cite our paper:

@inproceedings{guo2021data,

title={Data-Efficient Graph Grammar Learning for Molecular Generation},

author={Guo, Minghao and Thost, Veronika and Li, Beichen and Das, Payel and Chen, Jie and Matusik, Wojciech},

booktitle={International Conference on Learning Representations},

year={2021}

}

Please contact guomh2014@gmail.com if you have any questions. Enjoy!