This repository includes ContainerLab testbeds for studying and analyzing Model-Driven Telemetry (a.k.a. MDT) and network management mechanisms using the NETCONF protocol (RFC 6241) and the YANG data modeling language (RFC 7950).

- ContainerLab testbeds for studying and analyzing telemetry services over NETCONF/YANG

- Table of Contents

- Prerequisites

- Telemetry testbed

- Deploying, playing with, and destroying the network topology

- Building custom Docker image for Linux clients

- Deploying the network topology

- Interacting with containers

- Creating YANG-Push subscriptions to telemetry data through the NETCONF protocol

- Creating queries to get and set configuration information and to get operational status data through the NETCONF protocol

- Retrieving NETCONF server capabilities and YANG modules supported by the network device

- Destroying the network topology

- Deploying, playing with, and destroying the network topology

- IXIA-C laboratory

- Extra information

- Known limitations about YANG-Push

- ContainerLab documentation

- IXIA-C additional documentation

- vrnetlab additional documentation

- Jinja additional documentation for network automation

- ncclient additional documentation

- CISCO YANG Suite

- Related and interesting RFCs

- Related and interesting links with additional information

- Docker: https://docs.docker.com/engine/install/. Tested with version 24.0.2.

- Docker Compose: https://docs.docker.com/compose/install/. Tested with version v2.18.1.

- ContainerLab: https://containerlab.dev/install/. Tested with version 0.41.1.

- A CISCO IOS XE qcow2 image file for CSR 1000v network devices must be converted and imported as a containerized image in Docker with the vrnetlab tool, so that it can be used with ContainerLab: https://github.com/hellt/vrnetlab/tree/master/csr. Tested with Cisco IOS XE CSR1000v 17.3.4a (a.k.a. 17.03.04a) and Cisco IOS XE CSR1000v 17.3.6 (a.k.a. 17.03.06) models. Already containerized Cisco IOS XE CSR1000v routers consume a large amount of computing resources on the local machine (on the order of 4 GB of RAM and 1-2 CPU/vCPU cores per containerized router).

- Python 3 (Tested with version Python 3.8.10).

- Python library for NETCONF client ncclient: https://github.com/ncclient/ncclient

- Python library for Apache Kafka client confluent-kafka: https://github.com/confluentinc/confluent-kafka-python

- Go (Tested with version 1.22.0).

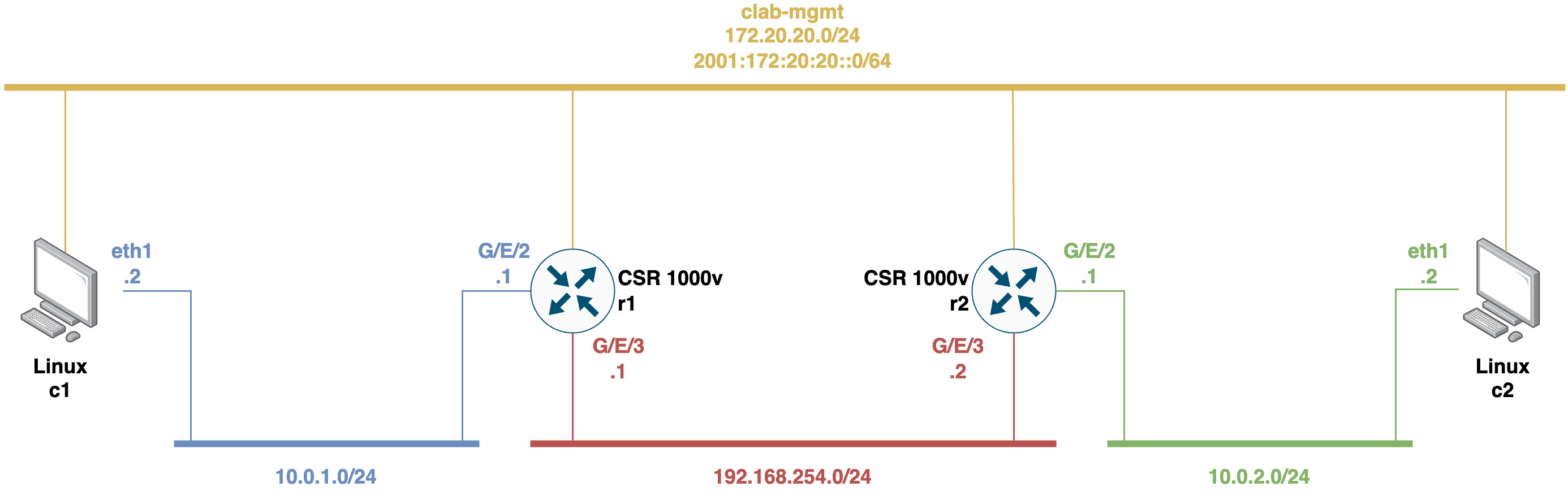

This testbed is a network scenario built with the ContainerLab tool and consisting of model-driven telemetry-capable network devices. These network devices (i.e., r1 and r2) are Cisco IOS XE CSR1000v routers. They support Model-Driven Telemetry (a.k.a. MDT) and network management mechanisms via the NETCONF protocol and the YANG data modeling language. Among other things, these Cisco IOS XE CSR1000v nodes support the basic operations of the NETCONF protocol (RFC 6241), can perform XPath filtering on those operations, and allow dynamic subscriptions using YANG-Push (RFC 8641).

The network topology consists of two Cisco IOS XE CSR1000v routers (i.e., r1 and r2) connected via a point-to-point ethernet link, and also two client end-hosts (i.e., c1 and c2) connected via LANs to each router device. The network scenario is configured to have end-to-end connectivity between the client end-hosts. All the nodes are also connected with their management interfaces to the ContainerLab Docker network.

This testbed allows users to study and analyze MDT and network management mechanisms using the NETCONF protocol and the YANG data modeling language.

To build the custom Docker image for client end-hosts (i.e., c1 and c2), follow the steps below:

$ cd docker/

$ sudo docker build -t giros-dit/clab-telemetry-testbed-ubuntu:latest .

To deploy the network topology, simply run the deploy shell script:

$ ./deploy-testbed-lab.sh

Note 1:

The Docker image of the Cisco IOS XE CSR1000v router nodes (i.e., r1 and r2) should be built using the vrnetlab tool before deploying the network topology (see this link for more details). The vrnetlab tool packages regular virtual machine images of network operating systems (e.g., VM-based routers) inside a container and makes them runnable as if they were container images. For this testbed, you need a qcow2 file with a VM-based image of the Cisco IOS XE CSR1000v network device. The testbed has been tested with Cisco IOS XE CSR1000v 17.3.4a (a.k.a. 17.03.04a) and Cisco IOS XE CSR1000v 17.3.6 (a.k.a. 17.03.06) models. To deploy the network topology, the deploy-testbed-lab.sh script executes the ContainerLab scenario defined in the telemetry-testbed.yaml template, in which you need to specify the container image generated for the r1 and r2 nodes of the topology (e.g., specify image: vrnetlab/vr-csr:17.03.06 when using a Cisco IOS XE CSR1000v 17.3.6 model image).

Note 2:

Once the network scenario is deployed with ContainerLab, the containers of the Cisco IOS XE CSR1000v router nodes (i.e., r1 and r2) take approximately 2-4 minutes to boot and load the default configuration (depending on your machine's computing resources). To determine when the containers of the router nodes are ready, you can use the docker logs -f <container_name> command, which shows logs of the router's startup and configuration process. Once a log appears with the message INFO Startup complete in: <TIME>, the process of starting and configuring the router container has finished.
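The readiness check described in this note can be automated. The following is a minimal sketch, not part of the repository: the function names are hypothetical, and it assumes the marker text appears in the log output exactly as quoted above. Depending on your Docker setup, the command may need to run with sudo.

```python
import subprocess
from typing import Iterable

# Readiness message quoted in the note above (the elapsed time follows it).
READY_MARKER = "Startup complete in:"


def router_is_ready(log_lines: Iterable[str]) -> bool:
    """Return True if any log line contains the readiness marker."""
    return any(READY_MARKER in line for line in log_lines)


def wait_until_ready(container_name: str) -> None:
    """Follow `docker logs -f` and return once the marker shows up."""
    proc = subprocess.Popen(
        ["docker", "logs", "-f", container_name],
        stdout=subprocess.PIPE,
        stderr=subprocess.STDOUT,  # merge stderr, where logs may be written
        text=True,
    )
    try:
        for line in proc.stdout:
            if router_is_ready([line]):
                return
        raise RuntimeError(f"{container_name} stopped logging before becoming ready")
    finally:
        # Stop following the logs; this does not stop the router container.
        proc.terminate()
        proc.wait()
```

For example, calling wait_until_ready("clab-telemetry-testbed-r1") blocks until r1 reports that startup is complete.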

For CSR 1000v routers, via SSH to open the CISCO CLI (password is admin):

$ ssh admin@clab-telemetry-testbed-r1 # For r1 router

(or)

$ ssh admin@clab-telemetry-testbed-r2 # For r2 router

or with docker exec to open an interactive bash Linux shell:

$ sudo docker exec -it clab-telemetry-testbed-r1 bash # For r1 router

(or)

$ sudo docker exec -it clab-telemetry-testbed-r2 bash # For r2 router

For Linux containers (clients), with docker exec to open an interactive shell:

$ sudo docker exec -it clab-telemetry-testbed-c1 /bin/sh # For c1 client container

(or)

$ sudo docker exec -it clab-telemetry-testbed-c2 /bin/sh # For c2 client container

In this testbed, YANG-Push (RFC 8641) subscriptions can be triggered to monitor telemetry data from the Cisco IOS XE CSR1000v network devices, which support the YANG data modeling language, via the NETCONF protocol. A NETCONF client Python library called ncclient is used, which supports all operations and capabilities defined by NETCONF (RFC 6241). This library makes it possible to create dynamic subscriptions to YANG-modeled data by means of RPC operations in order to receive notifications. A subscription can be of the on-change type or the periodic type; depending on the data subscribed to, only one of the two types may be accepted (see the Known limitations about YANG-Push section for more details).

There is a simple Python script ncclient-scripts/csr-create-subscription.py which allows you to make on-change or periodic subscriptions to an XPath filter for a specific YANG model for a Cisco IOS XE CSR1000v node. The script allows parameterizing the container name of the network device, the XPath, the type of subscription, and the time of the subscription period in centiseconds in the case of periodic subscriptions.

To create a YANG-Push subscription, run the Python script as follows:

$ python3 csr-create-subscription.py <container_name> <XPath> <subscription_type> [<period_in_cs>]

Periodic subscription example:

$ python3 csr-create-subscription.py clab-telemetry-testbed-r1 "/interfaces-state/interface[name='GigabitEthernet2']" periodic 1000

On-change subscription example:

$ python3 csr-create-subscription.py clab-telemetry-testbed-r1 "/native/hostname" on-change
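To make the subscription parameters more concrete, here is a minimal sketch of how an establish-subscription RPC body could be assembled from the same arguments. It is not the code of csr-create-subscription.py. The namespaces and element names follow the pre-RFC YANG-Push modules (ietf-event-notifications and ietf-yang-push) that IOS XE 17.3 is assumed to use, and the use of a zero dampening period to request on-change updates is likewise an assumption. The function name is hypothetical.

```python
import xml.etree.ElementTree as ET

# Assumed namespaces of the draft YANG-Push modules on IOS XE 17.3.
EN_NS = "urn:ietf:params:xml:ns:yang:ietf-event-notifications"
YP_NS = "urn:ietf:params:xml:ns:yang:ietf-yang-push"


def build_establish_subscription(xpath, subscription_type, period_cs=None):
    """Return an <establish-subscription> RPC body as an XML string."""
    if subscription_type not in ("periodic", "on-change"):
        raise ValueError("subscription_type must be 'periodic' or 'on-change'")
    if subscription_type == "periodic" and period_cs is None:
        raise ValueError("periodic subscriptions need a period in centiseconds")

    # The stream value below is a QName, so the 'yp' prefix must be bound.
    ET.register_namespace("yp", YP_NS)
    root = ET.Element(f"{{{EN_NS}}}establish-subscription")
    ET.SubElement(root, f"{{{EN_NS}}}stream").text = "yp:yang-push"
    ET.SubElement(root, f"{{{YP_NS}}}xpath-filter").text = xpath
    if subscription_type == "periodic":
        ET.SubElement(root, f"{{{YP_NS}}}period").text = str(period_cs)
    else:
        # Assumption: a dampening period of 0 selects on-change updates.
        ET.SubElement(root, f"{{{YP_NS}}}dampening-period").text = "0"
    return ET.tostring(root, encoding="unicode")
```

With ncclient, a string like this could presumably be sent through manager.dispatch(to_ele(rpc)), with to_ele imported from ncclient.xml_. XPath expressions that use module prefixes would additionally need those prefixes declared, which this sketch does not handle.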

Note:

There is an alternative Python script ncclient-scripts/csr-create-subscription-jinja2.py which allows the mapping and validation of the parameterization data needed for building the RPC of the YANG-Push subscriptions via a Jinja template decoupled from the Python source code. The corresponding Jinja template is available here. This alternative script accepts the same arguments as the previous base script.

There is a Python script csr-create-periodic-subscription-interfaces-state-kafka.py which allows publishing periodic telemetry notifications as streaming data into a message queue system based on the Apache Kafka service, thus working as a telemetry data substrate system. The Python script uses the Apache Kafka client Python library called confluent-kafka. By default, the script is prepared to periodically subscribe to the state of a router interface and publish the resulting notifications into a Kafka topic named interfaces-state-subscriptions. To test this script, there is a YAML template (i.e., docker-compose.yaml) that allows you to deploy the Kafka service as a Docker service with Docker Compose.

To deploy the Kafka service, simply run the following command:

$ cd docker/

$ docker compose up -d

When the Kafka service is up, run the Python script as follows:

$ python3 csr-create-periodic-subscription-interfaces-state-kafka.py <container_name> <interface_name> <period_in_cs>

Example:

$ python3 csr-create-periodic-subscription-interfaces-state-kafka.py clab-telemetry-testbed-r1 GigabitEthernet1 1000

After that, we can access the Kafka container's bash shell with:

docker exec -it <kafka-container> bash

Once inside the Kafka container, we can list the current topics with:

kafka-topics.sh --list --bootstrap-server localhost:9092

The list includes an interfaces-state-subscriptions topic where the notifications are published. These notifications can be read by a Kafka consumer by running the following command:

kafka-console-consumer.sh --topic interfaces-state-subscriptions --from-beginning --bootstrap-server localhost:9092

To disable the Kafka Docker service, simply run the following command:

$ docker compose down
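The publishing step can be pictured with the following minimal sketch. It is not the repository's script: the JSON envelope, the function name, and the RecordingProducer stand-in are assumptions made for illustration. The producer argument only needs produce() and flush() methods, which the confluent-kafka Producer class provides.

```python
import json

# Topic name taken from the description above.
TOPIC = "interfaces-state-subscriptions"


class RecordingProducer:
    """Hypothetical stand-in for a Kafka producer, useful for dry runs."""

    def __init__(self):
        self.sent = []

    def produce(self, topic, value=None, key=None):
        self.sent.append((topic, key, value))

    def flush(self):
        pass


def publish_notification(producer, device, notification_xml):
    """Wrap one notification in a JSON envelope (an assumption) and publish it."""
    payload = json.dumps({"device": device, "notification": notification_xml})
    producer.produce(TOPIC, value=payload.encode("utf-8"), key=device)
    # Flushing per message keeps the sketch simple; batching is more efficient.
    producer.flush()
    return payload
```

To publish to the Kafka service deployed above, the stand-in could be replaced with confluent_kafka.Producer({"bootstrap.servers": "localhost:9092"}), assuming the broker is reachable at that address.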

Creating queries to get and set configuration information and to get operational status data through the NETCONF protocol

In this testbed, RPC operations can be triggered to get configuration information and operational status data from the Cisco IOS XE CSR1000v network devices, which support YANG data modeling language, via the NETCONF protocol. The NETCONF client Python library called ncclient is used which supports all operations and capabilities defined by NETCONF (RFC 6241). This library supports the following NETCONF query operations:

<edit-config> operation to set the configuration data. Its payload contains only the part of the configuration to be edited. There is a simple Python script ncclient-scripts/csr-create-query-edit-config-hostname.py that allows you to edit the hostname of a Cisco IOS XE CSR1000v node by making use of the Cisco-IOS-XE-native YANG model. The script allows parameterizing the container name of the network device and the desired hostname to be configured. To create the <edit-config> operation to configure the hostname of the network device, run the Python script as follows:

$ python3 csr-create-query-edit-config-hostname.py <container_name> <hostname>

Example:

$ python3 csr-create-query-edit-config-hostname.py clab-telemetry-testbed-r1 r1-ios-xe-csr1000v

<get-config> operation to retrieve the configuration data. It uses a filter to retrieve only part of the configuration. There is a simple Python script ncclient-scripts/csr-create-query-get-config-hostname.py that allows you to get the hostname of a Cisco IOS XE CSR1000v node by making use of the Cisco-IOS-XE-native YANG model. The script allows parameterizing the container name of the network device. To create the <get-config> operation to get the hostname configuration of the network device, run the Python script as follows:

$ python3 csr-create-query-get-config-hostname.py <container_name>

Example:

$ python3 csr-create-query-get-config-hostname.py clab-telemetry-testbed-r1

<get> operation to retrieve the configuration and state data. It uses a filter to specify the portion of the configuration and state data to retrieve. There is a simple Python script ncclient-scripts/csr-create-query-get-interface-ietf.py that allows you to get the interface configuration and operational status information of a Cisco IOS XE CSR1000v node by making use of the ietf-interfaces YANG model. The script allows parameterizing the container name of the network device and optionally the name of the specific interface from which we want to obtain the configuration and operational status information. If no interface is specified, the resulting information will be returned for all available interfaces on the network device. To create the <get> operation to get the interface configuration and operational status information of the network device, run the Python script as follows:

$ python3 csr-create-query-get-interface-ietf.py <container_name> [<interface_name>]

Example for the GigabitEthernet1 interface:

$ python3 csr-create-query-get-interface-ietf.py clab-telemetry-testbed-r1 GigabitEthernet1

Example for all interfaces:

$ python3 csr-create-query-get-interface-ietf.py clab-telemetry-testbed-r1
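The optional interface argument maps naturally onto a NETCONF subtree filter. The sketch below shows one way such a filter could be built for the ietf-interfaces model; it is an illustration under assumptions, not the repository's implementation, and the function name and the choice of one top-level container per filter are hypothetical.

```python
import xml.etree.ElementTree as ET

# Namespace of the ietf-interfaces YANG module.
IF_NS = "urn:ietf:params:xml:ns:yang:ietf-interfaces"


def build_interface_filter(interface_name=None, container="interfaces-state"):
    """Return a subtree filter for one interface, or for all if no name is given.

    `container` selects the top-level node: "interfaces" for configuration
    or "interfaces-state" for operational status.
    """
    if container not in ("interfaces", "interfaces-state"):
        raise ValueError("unknown ietf-interfaces container")
    root = ET.Element(f"{{{IF_NS}}}{container}")
    if interface_name:
        # A <name> match node restricts the reply to that list entry.
        iface = ET.SubElement(root, f"{{{IF_NS}}}interface")
        ET.SubElement(iface, f"{{{IF_NS}}}name").text = interface_name
    return ET.tostring(root, encoding="unicode")
```

With ncclient, the result could then be passed as manager.get(filter=("subtree", build_interface_filter("GigabitEthernet1"))).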

Note:

For the parameterization of the RPC operations in the previous Python scripts that automate the different queries, Jinja templates decoupled from the Python source code have been used. The corresponding Jinja templates are available here.
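The idea of keeping the RPC payload in a template that is separate from the Python logic can be sketched as follows. To stay self-contained, this example uses string.Template from the standard library as a stand-in for Jinja, so it is not the repository's template. The template text and function name are hypothetical; the namespace is the one published for the Cisco-IOS-XE-native module.

```python
from string import Template
from xml.sax.saxutils import escape

# Hypothetical payload template; in the repository this role is played by Jinja.
EDIT_HOSTNAME_TEMPLATE = Template(
    '<config xmlns="urn:ietf:params:xml:ns:netconf:base:1.0">'
    '<native xmlns="http://cisco.com/ns/yang/Cisco-IOS-XE-native">'
    "<hostname>$hostname</hostname>"
    "</native>"
    "</config>"
)


def render_edit_hostname(hostname):
    """Validate the parameter and render the <edit-config> payload."""
    if not hostname or any(ch.isspace() for ch in hostname):
        raise ValueError("hostname must be non-empty and contain no whitespace")
    # Escape XML special characters so the parameter cannot break the payload.
    return EDIT_HOSTNAME_TEMPLATE.substitute(hostname=escape(hostname))
```

The rendered string could then be handed to ncclient, for example manager.edit_config(target="running", config=render_edit_hostname("r1-ios-xe-csr1000v")).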

In this testbed, two additional operations can be triggered for the Cisco IOS XE CSR1000v network devices, which support YANG data modeling language, via the NETCONF protocol. The NETCONF client Python library called ncclient is used which supports all operations and capabilities defined by NETCONF (RFC 6241). This library supports the following two additional operations:

- Retrieve the set of NETCONF server capabilities supported by the network device, such as XPath filtering support in RPC operations (e.g., urn:ietf:params:netconf:capability:xpath:1.0) and the capability to send notifications to subscribers (e.g., urn:ietf:params:netconf:capability:notification:1.0). In addition, this operation retrieves the set of YANG modules that the target network device supports. Each NETCONF server capability is identified by its particular namespace URI. There is a simple Python script ncclient-scripts/csr-get-capabilities.py that allows you to get the NETCONF capabilities supported by a particular Cisco IOS XE CSR1000v node. The script allows parameterizing the container name of the network device. To discover the NETCONF capabilities of the network device, run the Python script as follows:

$ python3 csr-get-capabilities.py <container_name>

Example:

$ python3 csr-get-capabilities.py clab-telemetry-testbed-r1

There is an alternative Python script ncclient-scripts/csr-get-yang-module-info.py that allows you to get only the information about a requested YANG module supported by a particular Cisco IOS XE CSR1000v node. The script allows parameterizing the container name of the network device and the name of the requested YANG module. Then, to get the information from a particular YANG module of the network device, run the Python script as follows:

$ python3 csr-get-yang-module-info.py <container_name> <yang_module_name>

Example:

$ python3 csr-get-yang-module-info.py clab-telemetry-testbed-r1 ietf-interfaces

- Retrieve the schema representation for a particular YANG module supported by the network device using the NETCONF <get-schema> RPC operation (RFC 6022). There is a simple Python script ncclient-scripts/csr-get-yang-module-schema.py that allows you to get the schema from a specific YANG module supported by a particular Cisco IOS XE CSR1000v node. The script allows parameterizing the container name of the network device, the name of the YANG module, and optionally the specific revision/version of the YANG module. To create the <get-schema> operation to get the schema representation for a specific YANG module of the network device, run the Python script as follows:

$ python3 csr-get-yang-module-schema.py <container_name> <yang-module-name> [<yang-module-revision>]

Example:

$ python3 csr-get-yang-module-schema.py clab-telemetry-testbed-r1 ietf-interfaces 2014-05-08
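Capabilities that announce YANG modules usually carry the module name and revision as query parameters of the namespace URI. The following minimal sketch shows how those fields could be extracted from a capability string; the function name is hypothetical, and the example URI is illustrative rather than captured from a device.

```python
from urllib.parse import parse_qs


def parse_capability(uri):
    """Split a capability URI into namespace, module name, and revision.

    Protocol capabilities without query parameters yield None for the
    module and revision fields.
    """
    namespace, _, query = uri.partition("?")
    params = parse_qs(query)
    return {
        "namespace": namespace,
        "module": params.get("module", [None])[0],
        "revision": params.get("revision", [None])[0],
    }


# Illustrative URI in the format NETCONF servers use for YANG modules.
example = parse_capability(
    "urn:ietf:params:xml:ns:yang:ietf-interfaces"
    "?module=ietf-interfaces&revision=2014-05-08"
)
print(example["module"], example["revision"])
```

With ncclient, the URIs could be read from manager.server_capabilities, and the parsed module name and revision could then be passed to manager.get_schema(identifier, version=...).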

To destroy the network topology, simply run the destroy shell script:

$ ./destroy-testbed-lab.sh

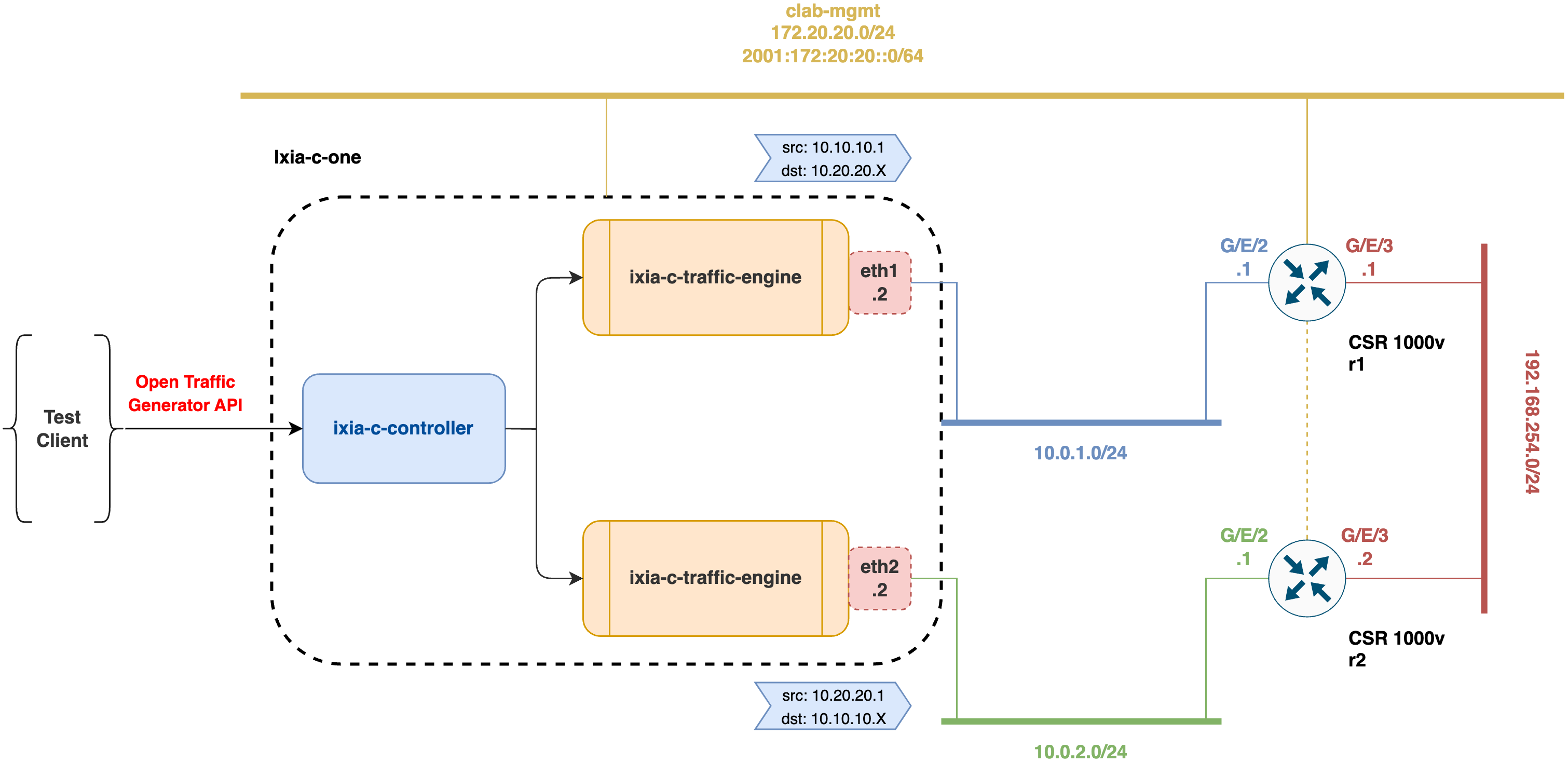

This network lab is another network scenario built with the ContainerLab tool. It consists of a Keysight Ixia-c-one node with 2 ports connected to the incoming port on the r1 node and the outgoing port on the r2 node via two point-to-point Ethernet links. There is another point-to-point Ethernet connection configured between the router nodes (i.e., r1 and r2) of the network topology to allow end-to-end traffic forwarding throughout the network. All the nodes are also connected with their management interfaces to the ContainerLab Docker network. This network lab is based on the Keysight IXIA-C and Nokia SR Linux lab example of ContainerLab.

Keysight Ixia-c-one is a single-container distribution of Ixia-c, which in turn is Keysight's reference implementation of Open Traffic Generator API.

This network lab allows users to validate an IPv4 traffic forwarding scenario between Keysight ixia-c-one and Cisco IOS XE CSR1000v nodes (i.e., r1 and r2).

To deploy the network topology, simply run the deploy shell script:

$ ./deploy-ixiac-lab.sh

Note 1:

The Docker image of the Cisco IOS XE CSR1000v router nodes (i.e., r1 and r2) should be built using the vrnetlab tool before deploying the network topology (see the link for more details). The vrnetlab tool packages regular virtual machine images of network operating systems (e.g., VM-based routers) inside a container and makes them runnable as if they were container images. For this network lab, you need a qcow2 file with a VM-based image of the Cisco IOS XE CSR1000v network device. The network lab has been tested with Cisco IOS XE CSR1000v 17.3.4a (a.k.a. 17.03.04a) and Cisco IOS XE CSR1000v 17.3.6 (a.k.a. 17.03.06) models. To deploy the network topology, the deploy-ixiac-lab.sh script executes the ContainerLab scenario defined in the telemetry-ixiac-lab.yaml template, in which you need to specify the container image generated for the r1 and r2 nodes of the topology (e.g., specify image: vrnetlab/vr-csr:17.03.06 when using a Cisco IOS XE CSR1000v 17.3.6 model image).

Note 2:

Once the network scenario is deployed with ContainerLab, the containers of the Cisco IOS XE CSR1000v router nodes (i.e., r1 and r2) take approximately 2-4 minutes to boot and load the default configuration (depending on your machine's computing resources). To determine when the containers of the router nodes are ready, you can use the docker logs -f <container_name> command, which shows logs of the router's startup and configuration process. Once a log appears with the message INFO Startup complete in: <TIME>, the process of starting and configuring the router container has finished.

For CSR 1000v routers, via SSH to open the CISCO CLI (password is admin):

$ ssh admin@clab-telemetry-ixiac-lab-r1 # For r1 router

(or)

$ ssh admin@clab-telemetry-ixiac-lab-r2 # For r2 router

or with docker exec to open an interactive bash Linux shell:

$ sudo docker exec -it clab-telemetry-ixiac-lab-r1 bash # For r1 router

(or)

$ sudo docker exec -it clab-telemetry-ixiac-lab-r2 bash # For r2 router

For Ixia-c-one container, with docker exec to open an interactive shell:

$ sudo docker exec -it clab-telemetry-ixiac-lab-ixia-c /bin/sh

Inside the Ixia-c-one container shell, with docker ps -a you can see the ixia-c-controller and ixia-c-traffic-engine containers:

/home/keysight/ixia-c-one # docker ps -a

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

1137908238a7 ixia-c-traffic-engine:1.4.1.23 "./entrypoint.sh" 27 hours ago Up 27 hours ixia-c-port-dp-eth2

f33030b44e60 ixia-c-traffic-engine:1.4.1.23 "./entrypoint.sh" 27 hours ago Up 27 hours ixia-c-port-dp-eth1

7a4a64439484 ixia-c-controller:0.0.1-2755 "./bin/controller --…" 27 hours ago Up 27 hours 0.0.0.0:443->443/tcp, 0.0.0.0:50051->50051/tcp ixia-c-controller

This network lab demonstrates a simple IPv4 traffic forwarding scenario where:

- One Keysight Ixia-c-one port acts as a transmit port and the other as a receive port. Two-way communication can be configured (i.e., ixia-c-port1 <-> r1 <-> r2 <-> ixia-c-port2).

- Cisco IOS XE CSR1000v nodes (i.e., r1 and r2) are configured to forward the traffic in either of the two directions of communication using static routes configuration in the default network instance.

DEPRECATED: Only applies to the telemetry-ixiac-lab-old scenario started with the deploy-ixiac-lab-old.sh script. This release of the scenario uses an older version of Keysight Ixia-c-one and an older version of Go (v1.20.4).

When the network lab is running, we need to fetch the MAC address of the incoming interface of the router node that is connected to the transmit port of the Ixia-c-one node. Execute the following script to get the incoming MAC addresses of both router nodes, as they will serve as an argument in the traffic test scripts:

$ ./discover_target_mac.sh

Run the traffic tests with the MAC addresses obtained in the previous step:

- For the traffic test ixia-c-port1->r1->r2->ixia-c-port2:

$ cd ixia-c-scripts/old/

$ go run ipv4_forwarding_r1_r2.go -dstMac="<incoming MAC address of r1>"

- For the traffic test ixia-c-port2->r2->r1->ixia-c-port1:

$ cd ixia-c-scripts/old/

$ go run ipv4_forwarding_r2_r1.go -dstMac="<incoming MAC address of r2>"

- For the traffic test ixia-c-port1->r1->r2->ixia-c-port2:

$ cd ixia-c-scripts/new/

$ go run ip_forwarding_r1_r2.go

- For the traffic test ixia-c-port2->r2->r1->ixia-c-port1:

$ cd ixia-c-scripts/new/

$ go run ip_forwarding_r2_r1.go

The tests are configured to send 1000 IPv4 packets at a rate of 100 pps from 10.10.10.1 to 10.20.20.X or from 10.20.20.1 to 10.10.10.X, where X varies from 1 to 5. Once 1000 packets are sent, the test script checks that all sent packets were received.

As with the initial testbed, this network lab supports MDT and network management mechanisms using the NETCONF protocol and the YANG data modeling language. On the one hand, to play with YANG-Push subscriptions via the NETCONF protocol in order to monitor telemetry data from network devices go here. On the other hand, to play with query operations via the NETCONF protocol to get and set configuration information and to get operational status data from network devices go here. Finally, to play with additional operations for retrieving the NETCONF server capabilities and the YANG modules supported by the network devices go here.

Note:

Remember that the names assigned by ContainerLab to the nodes or network devices in this network lab are different (e.g., clab-telemetry-ixiac-lab-r1 instead of clab-telemetry-testbed-r1).

To destroy the network topology, simply run the destroy shell script:

$ ./destroy-ixiac-lab.sh

YANG-Push on-change notifications do not work with all datastores. There is a proposed-standard method to know which YANG modules support this kind of notifications (see RFC 9196 linked below), but it is not implemented, at least in the 17.03.04a and 17.03.06 versions of CISCO's IOS XE network operating system. The YANG modules ietf-interfaces, openconfig-interfaces and cisco-ios-xe-interfaces-oper do not allow this type of notifications, not even for oper/admin-status nodes. Periodic notifications work without issues. According to RFC 8641, page 17, Section 3.10 (also linked below), "a publisher supporting on-change notifications may not be able to push on-change updates for some object types", and some reasons for this are given. While there is an additional method that apparently reports which modules support on-change notifications (the show platform software ndbman {R0|RP} models command in the IOS CLI, see this link), its output does not seem to match the experimental results. In addition, the YANG modules ietf-event-notifications and ietf-yang-push, which include the specifications for supporting NETCONF Event Notifications (see RFC 5277 linked below) and YANG-Push subscriptions, indicate that they have the YANG modules cisco-xe-ietf-event-notifications-deviation and cisco-xe-ietf-yang-push-notifications as deviations.
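One practical consequence of this limitation is that a client may want to fall back to a periodic subscription for the modules listed above. The sketch below encodes only the observations from this section for IOS XE 17.03.04a and 17.03.06; the function name and the fallback policy are assumptions, and the module set is not exhaustive.

```python
# Modules reported above as rejecting on-change subscriptions (not exhaustive).
NO_ON_CHANGE_MODULES = {
    "ietf-interfaces",
    "openconfig-interfaces",
    "cisco-ios-xe-interfaces-oper",
}


def effective_subscription_type(module, requested):
    """Return the subscription type to use for data from `module`.

    Falls back to "periodic" when on-change is requested for a module
    that is known, from the observations above, not to support it.
    """
    if requested not in ("periodic", "on-change"):
        raise ValueError("requested must be 'periodic' or 'on-change'")
    if requested == "on-change" and module.lower() in NO_ON_CHANGE_MODULES:
        return "periodic"
    return requested
```

For example, a request for on-change updates on ietf-interfaces data would be turned into a periodic subscription, while the same request for Cisco-IOS-XE-native data would be left unchanged.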

- ContainerLab home page: https://containerlab.dev/

- VM-based routers integration: https://containerlab.dev/manual/vrnetlab/

- Linux containers: https://containerlab.dev/manual/kinds/linux/

- Keysight IXIA-C One container: https://containerlab.dev/manual/kinds/keysight_ixia-c-one/

- Usage with Keysight IXIA-C traffic generator: https://containerlab.dev/lab-examples/ixiacone-srl/

- Ixia-c "A powerful traffic generator based on Open Traffic Generator API": https://github.com/open-traffic-generator/ixia-c

- Deploy Ixia-c-one using ContainerLab: https://github.com/open-traffic-generator/ixia-c/blob/main/docs/deployments.md#deploy-ixia-c-one-using-containerlab

- Open Traffic Generator (OTG): https://github.com/open-traffic-generator

- Open Traffic Generator APIs & Data Model: https://otg.dev/

- vrnetlab - VR Network Lab: https://github.com/vrnetlab/vrnetlab

- vrnetlab - VR Network Lab (ContainerLab fork): https://github.com/hellt/vrnetlab

- vrnetlab / Cisco CSR1000v: https://github.com/hellt/vrnetlab/tree/master/csr

- ContainerLab - VM-based routers integration: https://containerlab.dev/manual/vrnetlab/

- Jinja Documentation: https://jinja.palletsprojects.com/

- Jinja2 Tutorial - A Crash Course for Beginners: https://ultraconfig.com.au/blog/jinja2-a-crash-course-for-beginners/

- Python Automation on Cisco Routers in 2019 - NETCONF, YANG & Jinja2: https://ultraconfig.com.au/blog/python-automation-on-cisco-routers-in-2019/

- ncclient: Python library for NETCONF clients: https://github.com/ncclient/ncclient

- ncclient documentation: https://ncclient.readthedocs.io/en/latest/

- Open Management - ncclient: https://aristanetworks.github.io/openmgmt/examples/netconf/ncclient/

Web-based GUI and set of tools to perform NETCONF/RESTCONF/gNMI/gRPC operations supported over YANG modules.

- Official GitHub repository with installation instructions: https://github.com/CiscoDevNet/yangsuite

- Official documentation: https://developer.cisco.com/docs/yangsuite/

- RFC 6020: YANG - A Data Modeling Language for the Network Configuration Protocol (NETCONF): https://datatracker.ietf.org/doc/html/rfc6020

- RFC 7950: The YANG 1.1 Data Modeling Language: https://datatracker.ietf.org/doc/html/rfc7950

- RFC 6241: Network Configuration Protocol (NETCONF): https://datatracker.ietf.org/doc/html/rfc6241

- RFC 6242: Using the NETCONF Protocol over Secure Shell (SSH): https://datatracker.ietf.org/doc/html/rfc6242

- RFC 5277: NETCONF Event Notifications: https://datatracker.ietf.org/doc/html/rfc5277.html

- RFC 8641: Subscription to YANG Notifications for Datastore Updates: https://datatracker.ietf.org/doc/html/rfc8641

- RFC 9196: YANG Modules Describing Capabilities for Systems and Datastore Update Notifications: https://datatracker.ietf.org/doc/html/rfc9196

- RFC 6022: YANG Module for NETCONF Monitoring: https://datatracker.ietf.org/doc/html/rfc6022

- CISCO DevNet Sandbox: https://developer.cisco.com/site/sandbox/

- CISCO IOS XE Model Driven Telemetry (for version 17.3.X): https://www.cisco.com/c/en/us/td/docs/ios-xml/ios/prog/configuration/173/b_173_programmability_cg/model_driven_telemetry.html

- CISCO IOS XE Programmability Configuration Guide - NETCONF Protocol (for version 16.7.X): https://www.cisco.com/c/en/us/td/docs/ios-xml/ios/prog/configuration/167/b_167_programmability_cg/configuring_yang_datamodel.html?bookSearch=true

- ContainerLab: CISCO CSRs in containers?!: https://devnetdan.com/2021/12/15/containerlab-cisco-csrs-in-containers/

- Cisco CSR 1000v and Cisco ISRv Software Configuration Guide: https://www.cisco.com/c/en/us/td/docs/routers/csr1000/software/configuration/b_CSR1000v_Configuration_Guide.pdf

- CISCO Networking Learning Labs: https://developer.cisco.com/learning/search/categories/Networking/

- How to Configure a Cisco CSR device using NETCONF/YANG: https://www.fir3net.com/Networking/Concepts-and-Terminology/how-to-configure-a-cisco-csr-using-netconf-yang.html

- YANG Models GitHub repository: https://github.com/YangModels/yang

- YANG Catalog Search: https://yangcatalog.org/yang-search

- Andrés Ripoll's TFG GitHub repository: https://github.com/andresripoll/TFG_NETCONF

- NETCONF Telemetry PoC: https://github.com/giros-dit/netconf-telemetry-poc

- Model-driven Telemetry: IETF YANG Push and/or Openconfig Streaming Telemetry?: https://www.claise.be/model-driven-telemetry-ietf-yang-push-and-or-openconfig-streaming-telemetry/