Yihang Yin, Siyu Huang, Xiang Zhang

Please check our arXiv version here for the full paper with the supplementary material. We also provide our poster in this repo. Our oral presentation video at AAAI-2022 is available on YouTube, as both a brief introduction and the full presentation.

The latest tested versions are:

pytorch==1.10.1

opencv-python==4.5.5.62

sklearn==1.10.1

tqdm

IPython

graphviz (you need the executables, not only the Python API)
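A quick way to compare a local environment with the versions above is sketched below. It is not part of the repo. It assumes the usual pip distribution names (`torch` for PyTorch, `scikit-learn` for sklearn, `ipython` for IPython), compares only the PyTorch and OpenCV pins, and checks the other packages for presence. For graphviz it looks for the `dot` executable on `PATH`, since the Python API alone is not enough.

```python
"""Compare the local environment with the README's tested versions (sketch)."""
import shutil
from importlib import metadata

# Assumed pip distribution names; None means "any installed version".
EXPECTED = {
    "torch": "1.10.1",            # pytorch==1.10.1
    "opencv-python": "4.5.5.62",  # opencv-python==4.5.5.62
    "scikit-learn": None,         # presence only in this sketch
    "tqdm": None,
    "ipython": None,
}


def check_environment(expected=EXPECTED):
    """Return {name: (installed_version, pinned_version, ok)}."""
    report = {}
    for dist, pinned in expected.items():
        try:
            installed = metadata.version(dist)
        except metadata.PackageNotFoundError:
            installed = None
        # Drop local version suffixes such as "+cu113" before comparing pins.
        ok = installed is not None and (
            pinned is None or installed.split("+")[0] == pinned
        )
        report[dist] = (installed, pinned, ok)
    # The graphviz Python API alone is not enough: the `dot` executable
    # must be on PATH for rendering.
    dot_path = shutil.which("dot")
    report["graphviz (dot executable)"] = (dot_path, None, dot_path is not None)
    return report


if __name__ == "__main__":
    for name, (installed, pinned, ok) in check_environment().items():
        status = "OK " if ok else "BAD"
        print(f"{status} {name}: installed={installed}, expected={pinned or 'any'}")
```

A `BAD` line for a pinned package only means the version differs from the tested one; newer versions may still work.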

The backbones (checkpoints) and pre-processed datasets (BM-NAS_dataset) are available here; download them and put them in the root directory. For an unknown reason, the EgoGesture checkpoints are blocked by AliCloud, so an alternative Google Drive link for the checkpoints is available here.

You can just use our pre-processed dataset, but you should cite the original MM-IMDB dataset.

If you want to use the original one, you can follow these steps.

You can download multimodal_imdb.hdf5 from the original repo of MM-IMDB. Then use our pre-processing script to split the dataset.

$ python datasets/prepare_mmimdb.py

$ python main_darts_searchable_mmimdb.py

$ python main_darts_found_mmimdb.py --search_exp_dir=<dir of search exp>

You can just use our pre-processed dataset, but you should cite the original NTU RGB-D dataset.

If you want to use the original one, you can follow these steps.

First, request and download the NTU RGB+D dataset (not NTU RGB+D 120) from the official site. We only use the 3D skeleton (body joints) and RGB video modalities.

Then, run the following script to reshape all RGB videos to 256x256 with 30 fps:

$ python datasets/prepare_ntu.py --dir=<dir of RGB videos>

First search the hypernets. You can use --parallel for data-parallel training. The default setting requires about 128GB of GPU memory; you may adjust --batchsize according to your budget.
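The 128GB figure can be turned into a rough starting point for --batchsize. The helper below is a hypothetical back-of-the-envelope sketch, not part of the repo. It assumes GPU memory grows linearly with batch size on top of an optional fixed overhead (weights, optimizer state), treats `budget_gb` as the total memory across the GPUs you use, and needs the default batch size supplied by you, since it is not stated here.

```python
import math


def estimate_batchsize(default_batchsize, budget_gb,
                       default_mem_gb=128.0, fixed_overhead_gb=0.0):
    """Rough linear estimate of a --batchsize that fits into budget_gb.

    Assumed model: memory = fixed_overhead_gb + batchsize * per_sample_gb,
    calibrated so that the default run uses about default_mem_gb in total.
    """
    if default_batchsize <= 0:
        raise ValueError("default_batchsize must be positive")
    if not 0 <= fixed_overhead_gb < default_mem_gb:
        raise ValueError("fixed_overhead_gb must lie in [0, default_mem_gb)")
    per_sample_gb = (default_mem_gb - fixed_overhead_gb) / default_batchsize
    usable_gb = budget_gb - fixed_overhead_gb
    if usable_gb <= 0:
        return 0  # the fixed overhead alone does not fit
    return math.floor(usable_gb / per_sample_gb)


# Hypothetical numbers: a default batch size of 32 and a 32 GB budget.
print(estimate_batchsize(32, 32))                         # 8
print(estimate_batchsize(32, 32, fixed_overhead_gb=8.0))  # 6
```

Ignoring the fixed overhead makes the estimate optimistic for budgets below 128GB, so treat the result as a first guess and confirm it against actual memory usage (for example with nvidia-smi).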

$ python main_darts_searchable_ntu.py --parallel

Then train the searched fusion network. You need to specify the search experiment with --search_exp_dir.

$ python main_darts_found_ntu.py --search_exp_dir=<dir of search exp>

If you only want to run the test process (without training the fusion network), you can use the same script; in that case, specify both the search and evaluation experiment directories.

$ python main_darts_found_ntu.py --search_exp_dir=<dir of search exp> --eval_exp_dir=<dir of eval exp>

Download the EgoGesture dataset from the official site. You only need the image data. Unzip it into the following structure:

├── EgoGesture
│ ├── Subject01
│ ├── Subject02
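Before searching, it can help to confirm that the unzipped folders match this layout. The sketch below is not part of the repo; it assumes subject folders are named `SubjectNN` with two digits, as in the tree above, and reports gaps in the numbering between Subject01 and the highest-numbered folder found (it cannot detect missing trailing subjects).

```python
import re
from pathlib import Path

# Folder names such as Subject01, Subject02, ... as in the tree above.
SUBJECT_RE = re.compile(r"^Subject(\d{2})$")


def check_egogesture_layout(root):
    """Return (found, missing) lists of SubjectNN folder names under root."""
    root = Path(root)
    if not root.is_dir():
        raise FileNotFoundError(f"EgoGesture root not found: {root}")
    numbers = set()
    for path in root.iterdir():
        match = SUBJECT_RE.match(path.name)
        # Only directories count; stray files with matching names are ignored.
        if path.is_dir() and match:
            numbers.add(int(match.group(1)))
    found = [f"Subject{n:02d}" for n in sorted(numbers)]
    top = max(numbers, default=0)
    missing = [f"Subject{n:02d}" for n in range(1, top + 1) if n not in numbers]
    return found, missing
```

For example, `check_egogesture_layout("EgoGesture")` run from the directory that contains the `EgoGesture` folder returns the subject folders it found and any numbering gaps.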

First search the hypernets. You can use --parallel for data-parallel training. You may adjust --batchsize according to your GPU memory budget.

$ python main_darts_searchable_ego.py --parallel

Then train the searched fusion network. You need to specify the search experiment with --search_exp_dir.

$ python main_darts_found_ego.py --search_exp_dir=<dir of search exp>

If you only want to run the test process (without training the fusion network), you can use the same script; in that case, specify both the search and evaluation experiment directories.

$ python main_darts_found_ego.py --search_exp_dir=<dir of search exp> --eval_exp_dir=<dir of eval exp>

You can use structure_vis.ipynb to visualize the searched genotypes.
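Visualization relies on graphviz. As a hypothetical illustration of how a searched structure can be turned into a graph, the sketch below emits Graphviz DOT text from a list of `(src, dst, op)` edges. The edge-list format and the node and operation names are assumptions for illustration only; they are not the repo's genotype format or its search primitives.

```python
def _quote(name):
    """Quote a DOT identifier, escaping embedded double quotes."""
    return '"' + str(name).replace('"', '\\"') + '"'


def genotype_to_dot(edges, graph_name="genotype"):
    """Render a list of (src, dst, op) edges as Graphviz DOT source.

    The edge-list format is a hypothetical stand-in for a searched genotype.
    """
    lines = [f"digraph {graph_name} {{", "  rankdir=LR;"]
    seen = []
    for src, dst, _ in edges:
        for node in (src, dst):
            if node not in seen:
                seen.append(node)  # keep first-appearance order
    for node in seen:
        lines.append(f"  {_quote(node)} [shape=box];")
    for src, dst, op in edges:
        lines.append(f"  {_quote(src)} -> {_quote(dst)} [label={_quote(op)}];")
    lines.append("}")
    return "\n".join(lines)


if __name__ == "__main__":
    # Placeholder node and operation names, not the repo's primitives.
    example = [
        ("modality_A_feature", "cell_0", "op_1"),
        ("modality_B_feature", "cell_0", "op_2"),
        ("cell_0", "output", "op_3"),
    ]
    print(genotype_to_dot(example))
```

Redirect the output to a file such as `genotype.dot` and render it with the Graphviz executable, e.g. `dot -Tpng genotype.dot -o genotype.png`.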

If you find this work helpful, please kindly cite our paper.

@article{yin2021bm,

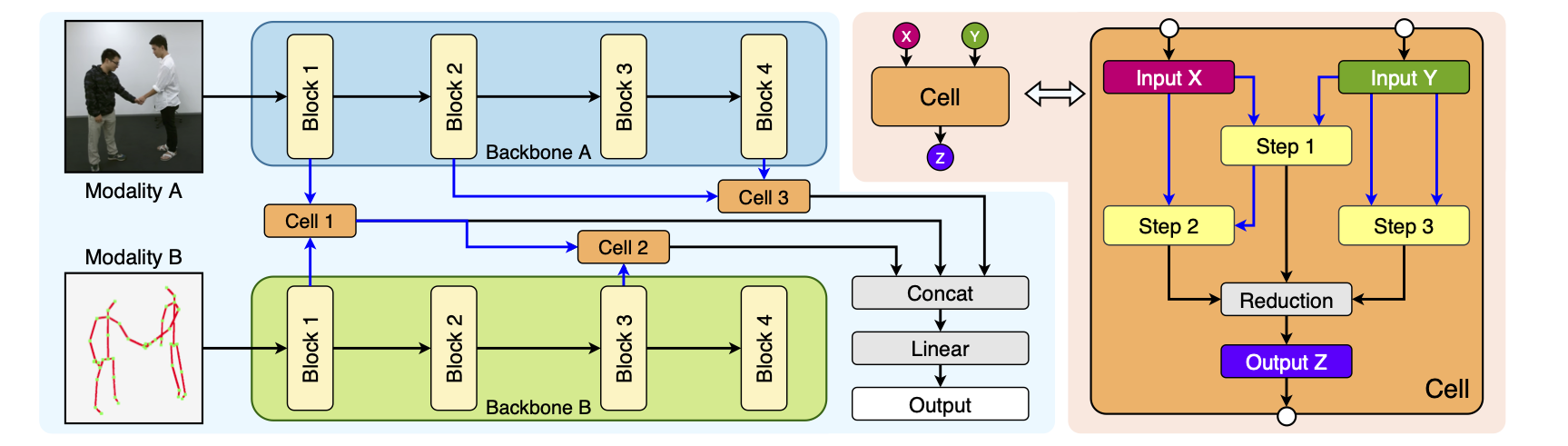

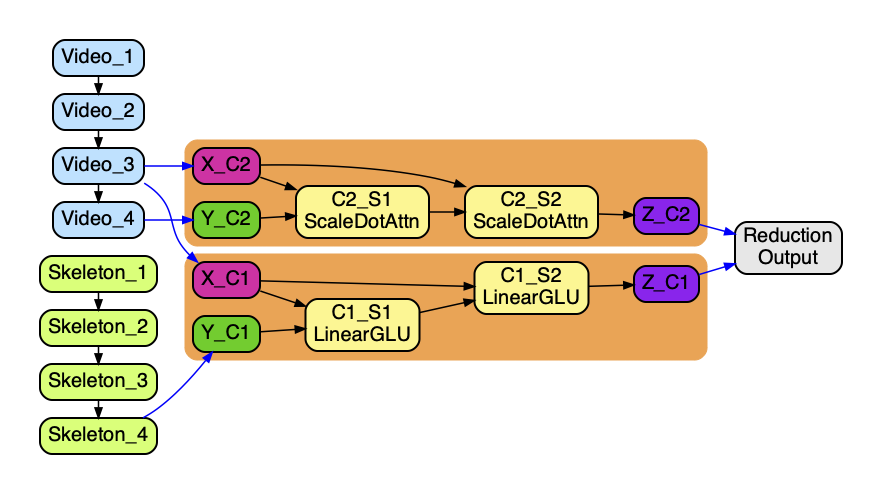

  title={BM-NAS: Bilevel Multimodal Neural Architecture Search},
  author={Yin, Yihang and Huang, Siyu and Zhang, Xiang},
  journal={arXiv preprint arXiv:2104.09379},
  year={2021}
}