Gradient disappears in CIFAR10

huanranchen opened this issue

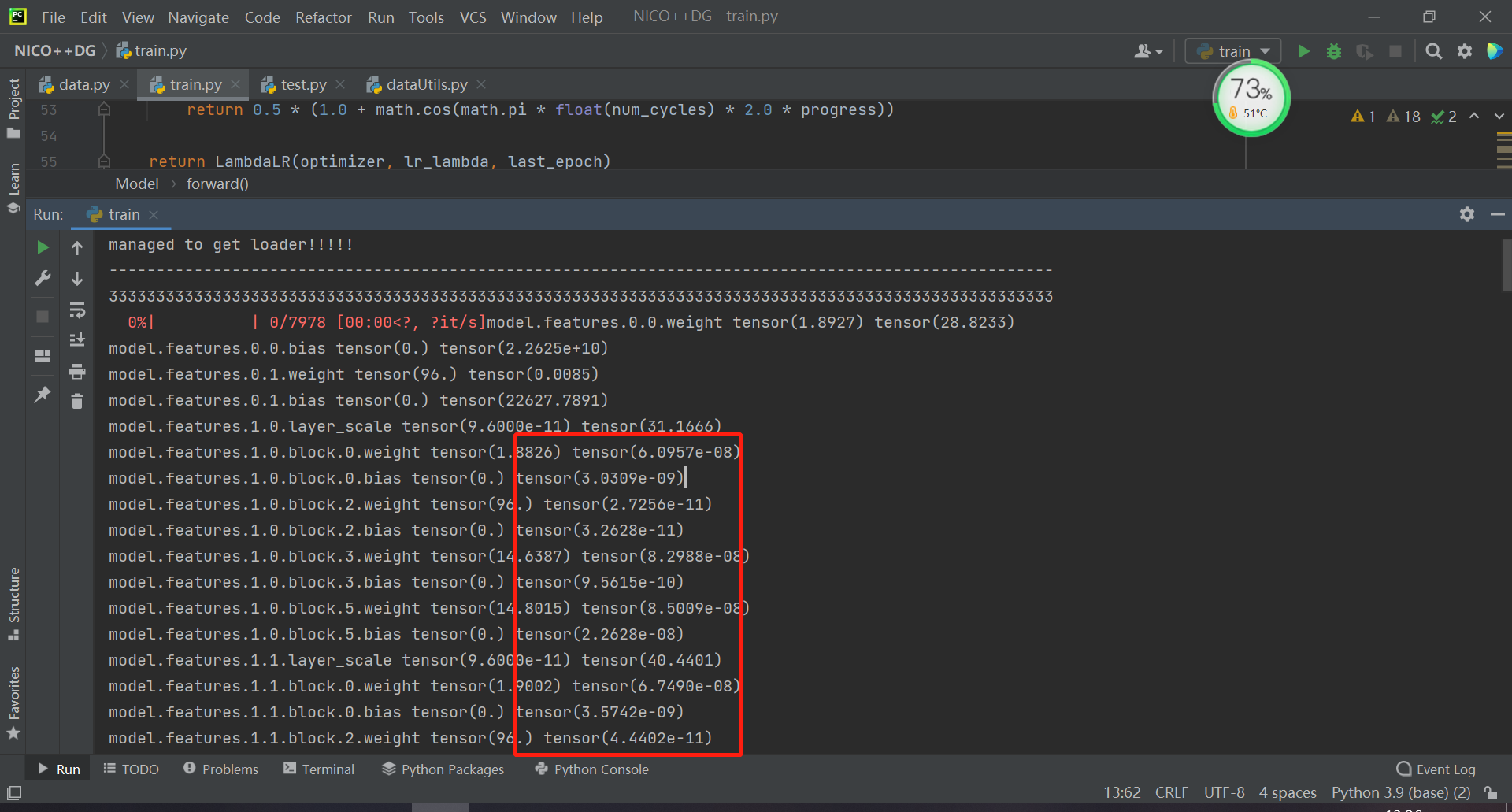

Hi! When training on CIFAR-10 from scratch, I found that the gradient of my model vanishes even in the first iteration. However, if I fine-tune a pretrained ConvNeXt on CIFAR-10, the gradients are normal.

I use the torchvision ConvNeXt in PyTorch, with a learning rate of 4e-3, the AdamW optimizer, and a batch size of 64.
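For context, the ConvNeXt ImageNet-1K recipe pairs a learning rate of 4e-3 with an effective batch size of 4096 and a warmup phase, so 4e-3 at batch size 64 without warmup may simply be too aggressive. The sketch below shows the linear-scaling heuristic and a linear warmup. It is only a rule of thumb, not a confirmed fix for this issue, and `scaled_lr` and `warmup_lr` are hypothetical helper names.

```python
def scaled_lr(base_lr, base_batch, batch):
    """Linear-scaling heuristic: shrink the LR in proportion to the batch size."""
    return base_lr * batch / base_batch


def warmup_lr(step, warmup_steps, peak_lr):
    """Ramp the LR linearly from peak_lr / warmup_steps up to peak_lr."""
    if step < warmup_steps:
        return peak_lr * (step + 1) / warmup_steps
    return peak_lr


# Assumption: reference setting of lr=4e-3 at an effective batch size of 4096.
peak = scaled_lr(4e-3, 4096, 64)
print(f"suggested peak lr for batch size 64: {peak:.3e}")
```

The resulting value can be passed as `lr` to `torch.optim.AdamW`, with `warmup_lr` applied per step through a scheduler or by setting the learning rate of each entry in `optimizer.param_groups`.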

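    import torch.nn as nn
    from torchvision import models


    class Model(nn.Module):
        def __init__(self):
            super(Model, self).__init__()
            # ConvNeXt-Tiny backbone, randomly initialized (no pretrained weights)
            self.model = models.convnext_tiny(pretrained=False)
            # Custom head: flatten the pooled features, apply LayerNorm, then classify
            self.classifier = nn.Sequential(
                nn.Flatten(start_dim=1, end_dim=-1),
                nn.LayerNorm([768], eps=1e-6, elementwise_affine=True),
                nn.Linear(768, 60)
            )

        def forward(self, x):
            x = self.model.features(x)
            x = self.model.avgpool(x)
            x = self.classifier(x)
            return x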

This problem occurs not only on CIFAR-10 but also on the NICO dataset, where the input image size is 224x224. I also tried resizing the input images to 448x448, but the gradient is still abnormal.

So why does the gradient vanish?
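One way to narrow this down is to log the gradient norm of every parameter after a single backward pass and see which layers, if any, receive near-zero gradients. The following is a minimal sketch assuming PyTorch. `grad_norms` is a hypothetical helper, and the small `toy` network is only a stand-in so the snippet runs quickly. In practice the `Model` above would be passed in instead.

```python
import torch
import torch.nn as nn


def grad_norms(model, inputs, targets, loss_fn=None):
    """Run one forward/backward pass and return {parameter name: gradient L2 norm}."""
    loss_fn = loss_fn or nn.CrossEntropyLoss()
    model.zero_grad(set_to_none=True)
    loss = loss_fn(model(inputs), targets)
    loss.backward()
    return {
        name: p.grad.norm().item()
        for name, p in model.named_parameters()
        if p.grad is not None
    }


torch.manual_seed(0)

# Stand-in network (an assumption for illustration, not the ConvNeXt model).
toy = nn.Sequential(
    nn.Conv2d(3, 8, kernel_size=3, padding=1),
    nn.GELU(),
    nn.AdaptiveAvgPool2d(1),
    nn.Flatten(),
    nn.LayerNorm(8, eps=1e-6),
    nn.Linear(8, 10),
)

x = torch.randn(4, 3, 32, 32)      # a fake batch of CIFAR-10-sized images
y = torch.randint(0, 10, (4,))     # fake labels for 10 classes

norms = grad_norms(toy, x, y)
for name, value in norms.items():
    print(f"{name:12s} grad norm = {value:.3e}")
```

If the early layers show norms many orders of magnitude smaller than the classifier's, the gradient is shrinking on the way back through the network. If every norm is tiny or NaN from the first step, the loss, the inputs, or the learning rate are more likely suspects.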

I don't know how this problem got solved. Using the same code and the same environment, I managed to train on CIFAR-10 today.