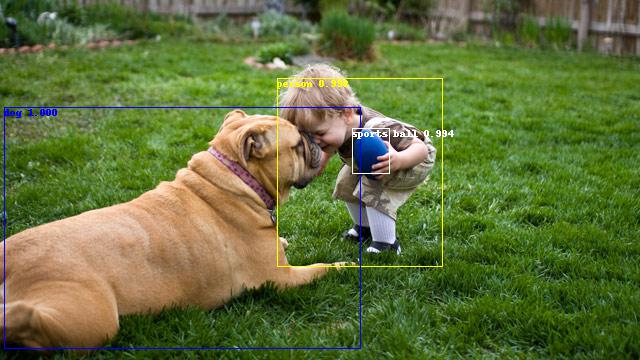

An easy implementation of Faster R-CNN in PyTorch.

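- Download the checkpoint from here

- Follow steps 2 and 3 of the Setup instructions below (build the Non Maximum Suppression and ROI Align modules, and install pycocotools)

- Run the inference script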

$ python infer.py -s=coco2017 -b=resnet101 -c=/path/to/checkpoint.pth --image_min_side=800 --image_max_side=1333 --anchor_sizes="[64, 128, 256, 512]" --rpn_post_nms_top_n=1000 /path/to/input/image.jpg /path/to/output/image.jpg
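The `--image_min_side` and `--image_max_side` flags above control how the input image is resized. Below is a minimal sketch of the resizing rule that Faster R-CNN implementations commonly use, assuming (not confirmed by this README) that infer.py follows it. The rule scales the shorter side to `image_min_side` unless that would push the longer side past `image_max_side`. `compute_scale` is a hypothetical helper, not part of the project.

```python
def compute_scale(width: int, height: int, min_side: float, max_side: float) -> float:
    """Return the resize factor for an image of size (width, height).

    Hypothetical helper. Assumption: the conventional Faster R-CNN rule,
    i.e. scale the shorter side to min_side, then cap the longer side at max_side.
    """
    shorter, longer = min(width, height), max(width, height)
    scale = min_side / shorter
    if longer * scale > max_side:
        # The longer side would exceed the cap, so fit the longer side instead.
        scale = max_side / longer
    return scale


if __name__ == "__main__":
    s = compute_scale(640, 480, 800, 1333)
    print(round(640 * s), round(480 * s))  # prints 1067 800
```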

- Supports PyTorch 1.0

- Supports the PASCAL VOC 2007 and MS COCO 2017 datasets

- Supports ResNet-18, ResNet-50 and ResNet-101 backbones (from the official PyTorch models)

- Supports ROI Pooling and ROI Align pooler modes

- Supports multi-batch and multi-GPU training

- Matches the performance reported by the original paper

- Efficient, with maintainable, readable and clean code
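The two pooler modes differ in how a proposal box is turned into a fixed-size feature. ROI Pooling rounds the box and bin edges to integer cells and max-pools them. ROI Align skips the rounding and averages bilinearly interpolated samples. The single-channel, pure-Python ROI Align below is for illustration only, because the project uses compiled modules adapted from maskrcnn-benchmark. It makes two assumptions: the box is already in feature-map coordinates (no spatial scale), and each bin uses a fixed 2x2 sampling grid.

```python
import math
from typing import List, Tuple

FeatureMap = List[List[float]]  # single-channel H x W map


def bilinear(feature: FeatureMap, y: float, x: float) -> float:
    """Bilinearly interpolate `feature` at the fractional position (y, x)."""
    h, w = len(feature), len(feature[0])
    # Clamp so samples near the border stay inside the map.
    y = min(max(y, 0.0), h - 1.0)
    x = min(max(x, 0.0), w - 1.0)
    y0, x0 = int(math.floor(y)), int(math.floor(x))
    y1, x1 = min(y0 + 1, h - 1), min(x0 + 1, w - 1)
    dy, dx = y - y0, x - x0
    top = feature[y0][x0] * (1 - dx) + feature[y0][x1] * dx
    bottom = feature[y1][x0] * (1 - dx) + feature[y1][x1] * dx
    return top * (1 - dy) + bottom * dy


def roi_align(feature: FeatureMap, box: Tuple[float, float, float, float],
              out_size: int, samples: int = 2) -> FeatureMap:
    """Pool box = (x1, y1, x2, y2), in feature-map coordinates, to out_size x out_size.

    Box and bin edges are never rounded; each bin averages
    samples x samples bilinearly interpolated points (assumed sampling grid).
    """
    x1, y1, x2, y2 = box
    bin_h = (y2 - y1) / out_size
    bin_w = (x2 - x1) / out_size
    output = []
    for i in range(out_size):
        row = []
        for j in range(out_size):
            total = 0.0
            for sy in range(samples):
                for sx in range(samples):
                    # Evenly spaced sample points inside bin (i, j).
                    y = y1 + (i + (sy + 0.5) / samples) * bin_h
                    x = x1 + (j + (sx + 0.5) / samples) * bin_w
                    total += bilinear(feature, y, x)
            row.append(total / (samples * samples))
        output.append(row)
    return output


if __name__ == "__main__":
    fmap = [[x + 10.0 * y for x in range(4)] for y in range(4)]
    print(roi_align(fmap, (0.0, 0.0, 2.0, 2.0), out_size=1))  # [[11.0]]
```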

Benchmarking

PASCAL VOC 2007

- Train: 2007 trainval (5011 images)

- Eval: 2007 test (4952 images)

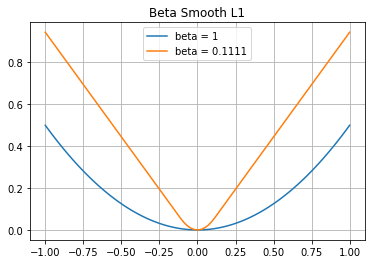

| Implementation | Backbone | GPU | #GPUs | #Batches/GPU | Training Speed (FPS) | Inference Speed (FPS) | mAP | image_min_side | image_max_side | anchor_ratios | anchor_sizes | pooler_mode | rpn_pre_nms_top_n (train) | rpn_post_nms_top_n (train) | rpn_pre_nms_top_n (eval) | rpn_post_nms_top_n (eval) | anchor_smooth_l1_loss_beta | proposal_smooth_l1_loss_beta | batch_size | learning_rate | momentum | weight_decay | step_lr_sizes | step_lr_gamma | warm_up_factor | warm_up_num_iters | num_steps_to_finish |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Original Paper | VGG-16 | Tesla K40 | 1 | 1 | - | ~ 5 | 0.699 | - | - | - | - | - | - | - | - | - | - | - | - | - | - | - | - | - | - | - | - |
| ruotianluo/pytorch-faster-rcnn | ResNet-101 | TITAN Xp | - | - | - | - | 0.7576 | - | - | - | - | - | - | - | - | - | - | - | - | - | - | - | - | - | - | - | - |
| jwyang/faster-rcnn.pytorch | ResNet-101 | TITAN Xp | 1 | 1 | - | - | 0.752 | - | - | - | - | - | - | - | - | - | - | - | - | - | - | - | - | - | - | - | - |
| Ours | ResNet-101 | GTX 1080 Ti | 1 | 4 | 7.12 | 15.05 | 0.7562 | 600 | 1000 | [(1, 2), (1, 1), (2, 1)] | [128, 256, 512] | align | 12000 | 2000 | 6000 | 300 | 1.0 | 1.0 | 4 | 0.004 | 0.9 | 0.0005 | [12500, 17500] | 0.1 | 0.3333 | 500 | 22500 |
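The `anchor_smooth_l1_loss_beta` and `proposal_smooth_l1_loss_beta` columns set the beta of the smooth L1 box-regression loss. Judging by the names, the first applies to the RPN anchors and the second to the detection head. Below is a sketch of the standard beta-parameterised form, as used in maskrcnn-benchmark. It is an assumption that this repository uses exactly this form, and normalisation over samples is omitted. The COCO rows use 0.1111, which is roughly 1/9.

```python
from typing import Sequence


def smooth_l1(diff: float, beta: float) -> float:
    """Smooth L1 loss for one regression residual (assumed standard form).

    Quadratic (0.5 * x**2 / beta) when |x| < beta, linear (|x| - 0.5 * beta)
    otherwise; both pieces meet with equal value and slope at |x| = beta.
    """
    x = abs(diff)
    if x < beta:
        return 0.5 * x * x / beta
    return x - 0.5 * beta


def box_loss(pred: Sequence[float], target: Sequence[float], beta: float) -> float:
    """Sum the smooth L1 loss over the 4 box-delta components of one box."""
    return sum(smooth_l1(p - t, beta) for p, t in zip(pred, target))


if __name__ == "__main__":
    print(box_loss([0.0, 0.0, 0.0, 0.0], [0.5, 0.0, 0.0, 2.0], beta=1.0))  # 1.625
```

A smaller beta (such as 1/9) makes the loss switch to the linear, outlier-tolerant regime for smaller residuals.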

MS COCO 2017

- Train: 2017 Train, excluding images without any objects (117266 images)

- Eval: 2017 Val, excluding images without any objects (4952 images)

| Implementation | Backbone | GPU | #GPUs | #Batches/GPU | Training Speed (FPS) | Inference Speed (FPS) | AP@[.5:.95] | AP@[.5] | AP@[.75] | AP S | AP M | AP L | image_min_side | image_max_side | anchor_ratios | anchor_sizes | pooler_mode | rpn_pre_nms_top_n (train) | rpn_post_nms_top_n (train) | rpn_pre_nms_top_n (eval) | rpn_post_nms_top_n (eval) | anchor_smooth_l1_loss_beta | proposal_smooth_l1_loss_beta | batch_size | learning_rate | momentum | weight_decay | step_lr_sizes | step_lr_gamma | warm_up_factor | warm_up_num_iters | num_steps_to_finish |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| ruotianluo/pytorch-faster-rcnn | ResNet-101 | TITAN Xp | - | - | - | - | 0.354 | - | - | - | - | - | - | - | - | - | - | - | - | - | - | - | - | - | - | - | - | - | - | - | - | - |
| jwyang/faster-rcnn.pytorch | ResNet-101 | TITAN Xp | 8 | 2 | - | - | 0.370 | - | - | - | - | - | - | - | - | - | - | - | - | - | - | - | - | - | - | - | - | - | - | - | - | - |
| Ours | ResNet-101 | GTX 1080 Ti | 1 | 2 | 4.84 | 8.00 | 0.356 | 0.562 | 0.389 | 0.176 | 0.398 | 0.511 | 800 | 1333 | [(1, 2), (1, 1), (2, 1)] | [64, 128, 256, 512] | align | 12000 | 2000 | 6000 | 1000 | 0.1111 | 1.0 | 2 | 0.0025 | 0.9 | 0.0001 | [480000, 640000] | 0.1 | 0.3333 | 500 | 720000 |
| Ours | ResNet-101 | Tesla P100 | 4 | 4 | 11.64 | 5.10 | 0.370 | 0.576 | 0.403 | 0.187 | 0.414 | 0.522 | 800 | 1333 | [(1, 2), (1, 1), (2, 1)] | [64, 128, 256, 512] | align | 12000 | 2000 | 6000 | 1000 | 0.1111 | 1.0 | 16 | 0.02 | 0.9 | 0.0001 | [120000, 160000] | 0.1 | 0.3333 | 500 | 180000 |
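The `anchor_ratios` and `anchor_sizes` columns define the anchors the RPN places at each feature-map location. Below is a minimal sketch of anchor generation for one location. It assumes that a ratio (a, b) means height:width = a:b and that every anchor keeps an area of size². Neither assumption is confirmed by this README. The step that replicates anchors across all locations (shifting by the feature stride) is omitted.

```python
import math
from itertools import product
from typing import List, Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2)


def make_anchors(cx: float, cy: float,
                 ratios: Sequence[Tuple[int, int]],
                 sizes: Sequence[float]) -> List[Box]:
    """Return len(ratios) * len(sizes) anchors centred at (cx, cy).

    Assumption: ratio (a, b) is read as height:width = a:b, and each anchor
    keeps an area of size**2 while its aspect ratio changes.
    """
    anchors = []
    for (a, b), size in product(ratios, sizes):
        r = a / b  # height / width
        h = size * math.sqrt(r)
        w = size / math.sqrt(r)
        anchors.append((cx - w / 2, cy - h / 2, cx + w / 2, cy + h / 2))
    return anchors


if __name__ == "__main__":
    ratios = [(1, 2), (1, 1), (2, 1)]
    print(len(make_anchors(0.0, 0.0, ratios, [128, 256, 512])))      # 9 (VOC row)
    print(len(make_anchors(0.0, 0.0, ratios, [64, 128, 256, 512])))  # 12 (COCO rows)
```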

PASCAL VOC 2007 Cat Dog

- Train: 2007 trainval, keeping only the cat and dog categories (750 images)

- Eval: 2007 test, keeping only the cat and dog categories (728 images)
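Each single-category subset keeps only the listed classes and drops images that end up with no objects. Below is a sketch of that filtering for one PASCAL VOC annotation file, using the standard-library XML parser. The helper name `kept_objects` is hypothetical, and the sketch assumes that `difficult` objects need no special handling. The repository's dataset code may differ on both points.

```python
import xml.etree.ElementTree as ET
from typing import Iterable, List


def kept_objects(xml_text: str, keep: Iterable[str]) -> List[str]:
    """Return the class names of <object> entries whose <name> is in `keep`.

    Hypothetical helper: an empty result means the image would be dropped.
    """
    wanted = set(keep)
    root = ET.fromstring(xml_text)
    # findtext("name") reads the direct <name> child of each <object>.
    names = [obj.findtext("name") for obj in root.iter("object")]
    return [n for n in names if n in wanted]


if __name__ == "__main__":
    sample = """<annotation>
      <object><name>cat</name></object>
      <object><name>person</name></object>
    </annotation>"""
    print(kept_objects(sample, {"cat", "dog"}))  # ['cat']
```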

MS COCO 2017 Person

- Train: 2017 Train, keeping only the person category (64115 images)

- Eval: 2017 Val, keeping only the person category (2693 images)

MS COCO 2017 Car

- Train: 2017 Train, keeping only the car category (12251 images)

- Eval: 2017 Val, keeping only the car category (535 images)

MS COCO 2017 Animal

- Train: 2017 Train, keeping only the bird, cat, dog, horse, sheep, cow, elephant, bear, zebra and giraffe categories (23989 images)

- Eval: 2017 Val, keeping only the bird, cat, dog, horse, sheep, cow, elephant, bear, zebra and giraffe categories (1016 images)
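The MS COCO splits above are derived by filtering: by category for the subsets, and by dropping images without any objects for the full 2017 split. Below is a sketch of both operations on a COCO-style annotation dict. The helper `filter_coco` is hypothetical. The sketch assumes every annotation counts as an object (crowd annotations included), which the repository may treat differently.

```python
from typing import Dict, Iterable, Optional

ANIMALS = ["bird", "cat", "dog", "horse", "sheep", "cow",
           "elephant", "bear", "zebra", "giraffe"]


def filter_coco(coco: Dict, names: Optional[Iterable[str]] = None) -> Dict:
    """Return a filtered copy of a COCO-style annotation dict (hypothetical helper).

    Keeps annotations whose category name is in `names` (every category when
    `names` is None), then drops each image left without any annotation.
    """
    wanted = None if names is None else set(names)
    categories = [c for c in coco["categories"]
                  if wanted is None or c["name"] in wanted]
    cat_ids = {c["id"] for c in categories}
    annotations = [a for a in coco["annotations"] if a["category_id"] in cat_ids]
    image_ids = {a["image_id"] for a in annotations}
    images = [img for img in coco["images"] if img["id"] in image_ids]
    # Other top-level keys (info, licenses, ...) are carried over unchanged.
    return {**coco, "categories": categories,
            "annotations": annotations, "images": images}
```

For example, `filter_coco(coco)` applies only the "drop images without objects" rule, while `filter_coco(coco, ANIMALS)` builds the animal subset.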

Requirements

- Python 3.6

- torch 1.0

- torchvision 0.2.1

- tqdm

  $ pip install tqdm

- tensorboardX

  $ pip install tensorboardX

- OpenCV 3.4 (required by infer_stream.py)

  $ pip install opencv-python~=3.4

- websockets (required by infer_websocket.py)

  $ pip install websockets
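Below is a quick sketch for checking which of the packages above can be imported in the current environment. It only checks that each module is present, not its version. The import names are the usual ones for these packages (opencv-python is imported as `cv2`). The helper `missing_modules` is hypothetical.

```python
import importlib.util
from typing import Iterable, List

# Import names for the packages listed above; opencv-python is imported as cv2.
REQUIRED = ["torch", "torchvision", "tqdm", "tensorboardX"]
OPTIONAL = {"cv2": "infer_stream.py", "websockets": "infer_websocket.py"}


def missing_modules(names: Iterable[str]) -> List[str]:
    """Return the module names that cannot be located on the current sys.path."""
    return [name for name in names if importlib.util.find_spec(name) is None]


if __name__ == "__main__":
    print("missing required modules:", missing_modules(REQUIRED))
    for module, script in OPTIONAL.items():
        if missing_modules([module]):
            print(module, "not found; needed only by", script)
```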

Setup

Prepare data

For PASCAL VOC 2007

- Download the dataset

    - Training / Validation (5011 images)

    - Test (4952 images)

- Extract it into the data folder; the folder structure should then look like this:

    - easy-faster-rcnn.pytorch
        - data
            - VOCdevkit
                - VOC2007
                    - Annotations
                        - 000001.xml
                        - 000002.xml
                        - ...
                    - ImageSets
                        - Main
                            - ...
                            - test.txt
                            - ...
                            - trainval.txt
                            - ...
                    - JPEGImages
                        - 000001.jpg
                        - 000002.jpg
                        - ...
        - ...
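Below is a small sketch that checks the PASCAL VOC layout above before training. The relative paths come from the folder structure shown. The helper names are hypothetical, and the script assumes it is run from the project root.

```python
from pathlib import Path
from typing import Iterable, List

# Relative paths taken from the folder structure above.
VOC2007_EXPECTED = [
    "data/VOCdevkit/VOC2007/Annotations",
    "data/VOCdevkit/VOC2007/ImageSets/Main/trainval.txt",
    "data/VOCdevkit/VOC2007/ImageSets/Main/test.txt",
    "data/VOCdevkit/VOC2007/JPEGImages",
]


def missing_paths(project_root: str, expected: Iterable[str]) -> List[str]:
    """Return the expected relative paths that do not exist under project_root."""
    root = Path(project_root)
    return [p for p in expected if not (root / p).exists()]


def count_split_ids(split_file: Path) -> int:
    """Count non-empty lines (one image id per line) in an ImageSets/Main split file."""
    with split_file.open() as f:
        return sum(1 for line in f if line.strip())


if __name__ == "__main__":
    missing = missing_paths(".", VOC2007_EXPECTED)
    if missing:
        print("missing:", missing)
    else:
        main_dir = Path("data/VOCdevkit/VOC2007/ImageSets/Main")
        # This README lists 5011 trainval and 4952 test images.
        for split in ("trainval", "test"):
            print(split, count_split_ids(main_dir / (split + ".txt")))
```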

For MS COCO 2017

- Download the dataset

    - 2017 Train images [18GB] (118287 images): COCO 2017 Train = COCO 2014 Train + COCO 2014 Val - COCO 2014 Val Sample 5k

    - 2017 Val images [1GB] (5000 images): COCO 2017 Val = COCO 2014 Val Sample 5k (formerly known as minival)

    - 2017 Train/Val annotations [241MB]

- Extract them into the data folder; the folder structure should then look like this:

    - easy-faster-rcnn.pytorch
        - data
            - COCO
                - annotations
                    - instances_train2017.json
                    - instances_val2017.json
                    - ...
                - train2017
                    - 000000000009.jpg
                    - 000000000025.jpg
                    - ...
                - val2017
                    - 000000000139.jpg
                    - 000000000285.jpg
                    - ...
        - ...
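After extraction, every image listed in an annotation file should be present in the matching image folder. Below is a sketch of that check, which reads the `file_name` field of each entry in the COCO `images` list. The helper `missing_image_files` is hypothetical, and the script assumes it runs from the project root with the layout above.

```python
import json
from pathlib import Path
from typing import Dict, List


def missing_image_files(coco: Dict, image_dir: str) -> List[str]:
    """Return file_name entries of a COCO annotation dict absent from image_dir."""
    folder = Path(image_dir)
    return [img["file_name"] for img in coco["images"]
            if not (folder / img["file_name"]).is_file()]


if __name__ == "__main__":
    # Paths follow the folder structure above, relative to the project root.
    ann_path = Path("data/COCO/annotations/instances_val2017.json")
    if ann_path.is_file():
        with ann_path.open() as f:
            coco = json.load(f)
        missing = missing_image_files(coco, "data/COCO/val2017")
        print(len(coco["images"]), "images listed,", len(missing), "missing")
    else:
        print(ann_path, "not found")
```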


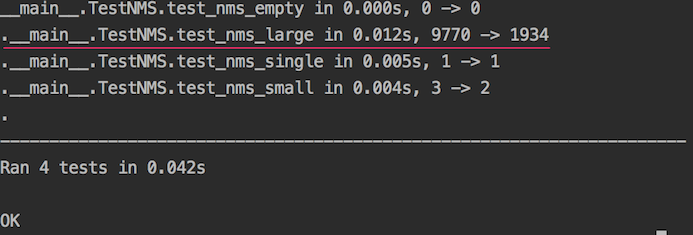

Build the Non Maximum Suppression and ROI Align modules (modified from facebookresearch/maskrcnn-benchmark)
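To show what the Non Maximum Suppression module computes (not how the compiled module is written), below is a pure-Python greedy NMS. The `pre_nms_top_n` and `post_nms_top_n` arguments mirror the `rpn_*_nms_top_n` columns in the benchmark tables. How the repository applies them, and whether it uses continuous box coordinates as this sketch does, are assumptions.

```python
from typing import List, Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2), continuous coordinates


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def nms(boxes: Sequence[Box], scores: Sequence[float], threshold: float,
        pre_nms_top_n: int, post_nms_top_n: int) -> List[int]:
    """Greedy NMS returning indices of kept boxes, highest score first.

    Only the pre_nms_top_n highest-scoring boxes are considered, and at most
    post_nms_top_n survivors are returned.
    """
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep: List[int] = []
    for i in order[:pre_nms_top_n]:
        # Suppress a box if it overlaps an already kept box by more than `threshold`.
        if all(iou(boxes[i], boxes[k]) <= threshold for k in keep):
            keep.append(i)
            if len(keep) == post_nms_top_n:
                break
    return keep
```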

Install pycocotools for the MS COCO 2017 dataset

- Clone and build the COCO API (this does not need to be done inside the project directory)

  $ git clone https://github.com/cocodataset/cocoapi

  $ cd cocoapi/PythonAPI

  $ make

- If the error "pycocotools/_mask.c: No such file or directory" occurs, install cython and try again

  $ pip install cython

- Copy pycocotools into the project

  $ cp -R pycocotools /path/to/project

Train

- Apply the default configuration (see also config/)

  $ python train.py -s=voc2007 -b=resnet101

- Apply a custom configuration (see also train.py)

  $ python train.py -s=voc2007 -b=resnet101 --weight_decay=0.0001

- Apply the recommended configuration (see also scripts/)

  $ bash ./scripts/voc2007/train-bs2.sh resnet101 /path/to/outputs/dir
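The benchmark tables list `learning_rate`, `warm_up_factor`, `warm_up_num_iters`, `step_lr_sizes` and `step_lr_gamma` among the training settings. Below is a sketch of the schedule these names suggest: a linear warm-up followed by multi-step decay, similar in spirit to maskrcnn-benchmark's warm-up scheduler. Whether train.py computes it exactly this way is an assumption, and `lr_at` is a hypothetical helper.

```python
from bisect import bisect_right
from typing import Sequence


def lr_at(step: int, base_lr: float, step_lr_sizes: Sequence[int], gamma: float,
          warm_up_factor: float, warm_up_num_iters: int) -> float:
    """Learning rate at `step`: linear warm-up, then multiply by gamma at each milestone.

    Assumption: a WarmupMultiStepLR-style schedule; train.py may differ in details.
    """
    factor = 1.0
    if step < warm_up_num_iters:
        alpha = step / warm_up_num_iters
        # Ramp linearly from warm_up_factor * base_lr up to base_lr.
        factor = warm_up_factor * (1 - alpha) + alpha
    # The number of milestones already reached decides how often gamma is applied.
    return base_lr * factor * gamma ** bisect_right(step_lr_sizes, step)


if __name__ == "__main__":
    # Values from the PASCAL VOC 2007 row of the benchmark table.
    for step in (0, 250, 500, 12500, 17500, 22499):
        print(step, lr_at(step, 0.004, [12500, 17500], 0.1, 0.3333, 500))
```

In PyTorch, a function like this could be wrapped with `torch.optim.lr_scheduler.LambdaLR` by passing `base_lr=1.0`, since `LambdaLR` multiplies the optimizer's initial learning rate by the returned factor.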


Evaluate

- Apply the default configuration (see also config/)

  $ python eval.py -s=voc2007 -b=resnet101 /path/to/checkpoint.pth

- Apply a custom configuration (see also eval.py)

  $ python eval.py -s=voc2007 -b=resnet101 --rpn_post_nms_top_n=1000 /path/to/checkpoint.pth

- Apply the recommended configuration (see also scripts/)

  $ bash ./scripts/voc2007/eval.sh resnet101 /path/to/checkpoint.pth
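The PASCAL VOC 2007 mAP in the benchmark table is the mean over classes of per-class average precision. The original VOC 2007 devkit computes AP with 11-point interpolation, sketched below. Whether eval.py uses this variant or the area-under-curve variant adopted in later VOC years is an assumption. `voc07_ap` is a hypothetical helper that takes precision/recall points for one class.

```python
from typing import Sequence


def voc07_ap(recall: Sequence[float], precision: Sequence[float]) -> float:
    """11-point interpolated average precision (PASCAL VOC 2007 devkit style).

    For each recall threshold t in {0.0, 0.1, ..., 1.0}, take the highest precision
    among points whose recall is >= t (0 if there are none), then average the 11 values.
    """
    total = 0.0
    for i in range(11):
        t = i / 10
        candidates = [p for r, p in zip(recall, precision) if r >= t]
        total += max(candidates) if candidates else 0.0
    return total / 11


if __name__ == "__main__":
    # Toy precision/recall points for one class; mAP averages this over classes.
    print(round(voc07_ap([0.5, 1.0], [1.0, 0.5]), 4))  # 0.7727
```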


Infer

- Apply the default configuration (see also config/)

  $ python infer.py -s=voc2007 -b=resnet101 -c=/path/to/checkpoint.pth /path/to/input/image.jpg /path/to/output/image.jpg

- Apply a custom configuration (see also infer.py)

  $ python infer.py -s=voc2007 -b=resnet101 -c=/path/to/checkpoint.pth -p=0.9 /path/to/input/image.jpg /path/to/output/image.jpg

- Apply the recommended configuration (see also scripts/)

  $ bash ./scripts/voc2007/infer.sh resnet101 /path/to/checkpoint.pth /path/to/input/image.jpg /path/to/output/image.jpg
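The custom-configuration example passes `-p=0.9`. This appears to be a probability threshold that decides which detections are kept, but that reading, and whether the comparison is `>=` or `>`, are assumptions to verify in infer.py. Below is a sketch of such a filter with a hypothetical `Detection` record.

```python
from typing import List, NamedTuple, Tuple


class Detection(NamedTuple):
    """Hypothetical detection record, not the project's own type."""
    box: Tuple[float, float, float, float]  # (x1, y1, x2, y2) in image pixels
    label: str
    prob: float


def filter_detections(detections: List[Detection], prob_thresh: float) -> List[Detection]:
    """Keep detections with prob >= prob_thresh, most confident first.

    Assumption: the threshold is inclusive; infer.py may compare differently.
    """
    kept = [d for d in detections if d.prob >= prob_thresh]
    return sorted(kept, key=lambda d: d.prob, reverse=True)
```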


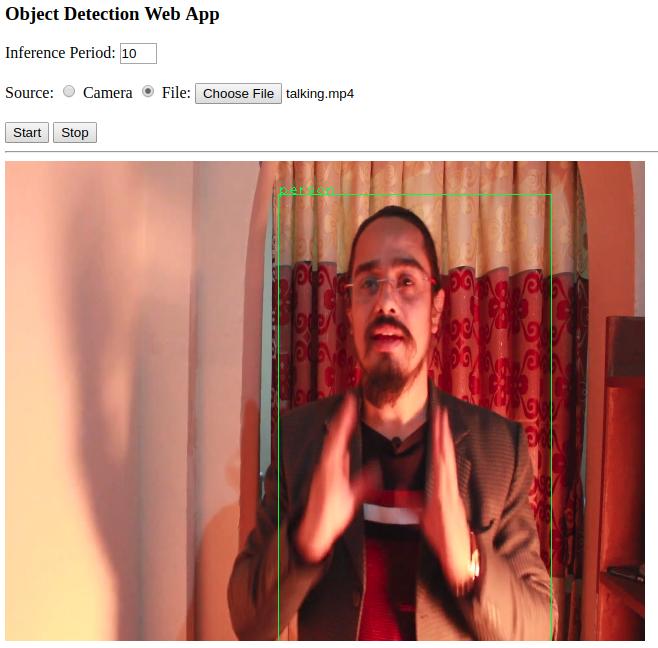

Infer other sources

- Source from a stream (see also infer_stream.py)

  Camera:

  $ python infer_stream.py -s=voc2007 -b=resnet101 -c=/path/to/checkpoint.pth -p=0.9 0 5

  Video:

  $ python infer_stream.py -s=voc2007 -b=resnet101 -c=/path/to/checkpoint.pth -p=0.9 /path/to/file.mp4 5

  Remote:

  $ python infer_stream.py -s=voc2007 -b=resnet101 -c=/path/to/checkpoint.pth -p=0.9 rtsp://184.72.239.149/vod/mp4:BigBuckBunny_115k.mov 5

- Source from a websocket (see also infer_websocket.py)

  - Start the web server

    $ cd webapp

    $ python -m http.server 8000

  - Launch the service

    $ python infer_websocket.py -s=voc2007 -b=resnet101 -c=/path/to/checkpoint.pth -p=0.9

  - Open the website: http://127.0.0.1:8000/

Sample video from Pexels
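In the stream examples above, infer_stream.py receives a camera index (`0`), a video file, or an rtsp:// URL as its source. OpenCV's `cv2.VideoCapture` accepts either an integer device index or a filename/URL string, so a front end has to tell the two apart. Below is a minimal sketch with a hypothetical `parse_source` helper. It does not cover the meaning of the trailing `5` argument, which this README does not explain.

```python
from typing import Union


def parse_source(source: str) -> Union[int, str]:
    """Map a CLI source argument to a value cv2.VideoCapture accepts.

    Hypothetical helper: an all-digit string becomes a camera index (e.g. "0");
    anything else, such as a video path or an rtsp:// URL, is passed through.
    """
    return int(source) if source.isdigit() else source


if __name__ == "__main__":
    for arg in ("0", "/path/to/file.mp4",
                "rtsp://184.72.239.149/vod/mp4:BigBuckBunny_115k.mov"):
        print(repr(parse_source(arg)))
```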