This repository contains the implementation of the above paper, which has been accepted to T-PAMI (2022).

- Authors: Jiahui Huang, Tolga Birdal, Zan Gojcic, Leonidas Guibas, Shi-Min Hu

- Contact Jiahui via email or GitHub issues :)

If you find our code or paper useful, please consider citing:

@article{huang2021multiway,

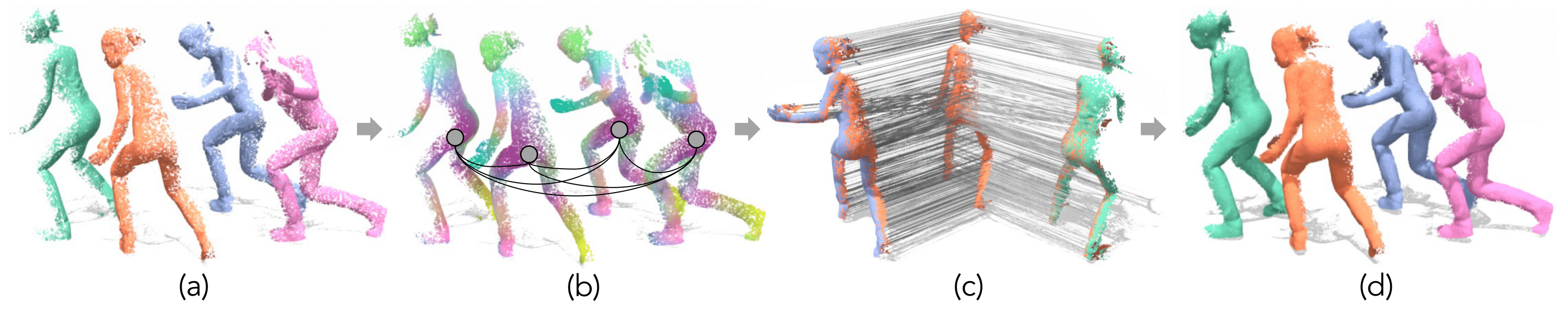

title={Multiway Non-rigid Point Cloud Registration via Learned Functional Map Synchronization},

author={Huang, Jiahui and Birdal, Tolga and Gojcic, Zan and Guibas, Leonidas J and Hu, Shi-Min},

journal={arXiv preprint arXiv:2111.12878},

year={2021}

}We suggest to use Anaconda to manage your environment. Following is the suggested way to install the dependencies:

    # Create a new conda environment
    conda create -n synorim python=3.8
    conda activate synorim
    # Install pytorch
    conda install pytorch==1.10.2 cudatoolkit=11.1 -c pytorch -c nvidia
    # Install other packages (ninja is required for MinkowskiEngine)
    pip install -r requirements.txt

Note: You can also use our MinkowskiEngine fork, which fixes a RAM leak bug.

| Name | # Train / Val. | # Test | Term of Use | Downloads | L2 Error |
|---|---|---|---|---|---|
| MPC-CAPE | 3015 / 798 | 209 | Link | Data (11.2G) Model | 1.08 |
| MPC-DT4D | 3907 / 1701 | 1299 | Link | Data (20.6G) Model | 3.53 |
| MPC-DD | 1754 / 200 | 267 | Link | Data (2.4G) Model | 2.54 |
| MPC-SAPIEN | 530 / 88 | 266 | Link | Data (1.3G) Model | 3.05 |

We provide all the datasets used in our paper. However, in order to download them, please accept the respective 'Term of Use' by sending the agreement form if needed. The data are stored on Google Drive. Please use a VPN if you cannot access it directly.

You can also run the following script to download all datasets and automatically place them in the correct location (../dataset). Please make sure you have write access to that folder, since it is outside of the project folder.

    bash scripts/download_datasets.sh

Each dataset is organized in the following structure.

    <dataset-name>/
    ├ meta.json    # Specifies train/val/test split and #frames in each npz file
    └ data/
      ├ 0000.npz   # Each npz file contains point clouds and all pairwise flows.
      ├ 0001.npz   # Please refer to 'dataset/flow_dataset.py' to see how to parse them.
      └ ...
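To check a downloaded dataset against this layout, a minimal sketch such as the following may help. It only assumes the folder structure described here: it reads meta.json without assuming its schema and lists the array names stored in each npz file (an npz file is a zip archive with one .npy member per array). The helper name `summarize_dataset` is hypothetical and not part of this repository; 'dataset/flow_dataset.py' remains the reference for how the arrays are actually parsed.

```python
import json
import zipfile
from pathlib import Path


def summarize_dataset(root):
    """Return (meta, arrays) for a dataset folder with meta.json and data/*.npz.

    meta   : parsed contents of meta.json (its schema is not assumed here)
    arrays : {npz file name: sorted list of array names stored in that file}
    """
    root = Path(root)
    with open(root / "meta.json") as f:
        meta = json.load(f)

    arrays = {}
    for npz_path in sorted((root / "data").glob("*.npz")):
        # An .npz file is a zip archive holding one .npy member per array,
        # so the names can be listed without loading any point cloud data.
        with zipfile.ZipFile(npz_path) as archive:
            arrays[npz_path.name] = sorted(
                name[: -len(".npy")]
                for name in archive.namelist()
                if name.endswith(".npy")
            )
    return meta, arrays
```

Calling `summarize_dataset("../dataset/<dataset-name>")` returns the split metadata and the per-file array names; the arrays themselves can then be read with `numpy.load`.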

We provide pretrained models for all the datasets. You can either download them from the links in the table above, or use the following script:

    bash scripts/download_pretrained.sh

If you manually download the checkpoints, put them into the out_pretrained folder.

You can then run the evaluation script to reproduce our metrics:

    python evaluate.py configs/<DATASET-NAME>/test_pretrained.yaml

Our full training procedure is divided into two steps. First, train the descriptor network using:

    python train.py configs/<DATASET-NAME>/train_desc.yaml

After the training converges, train the basis network with:

    python train.py configs/<DATASET-NAME>/train_basis.yaml

The script will log config files, models, and TensorBoard logs into the out folder. You can use TensorBoard to monitor the training process:

    tensorboard --logdir=out
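If you prefer to launch both training stages from one place, the two commands can be chained with a small driver like the sketch below. The function names `training_commands` and `run_training` are hypothetical and not part of this repository. The driver only waits for each train.py process to exit, so it assumes the descriptor stage stops by itself once you consider it converged.

```python
import subprocess
import sys


def training_commands(dataset_name, python=sys.executable):
    """Build the two training commands: descriptor network first, then basis."""
    return [
        [python, "train.py", f"configs/{dataset_name}/train_desc.yaml"],
        [python, "train.py", f"configs/{dataset_name}/train_basis.yaml"],
    ]


def run_training(dataset_name, dry_run=False):
    """Run both stages in order from the project root."""
    for cmd in training_commands(dataset_name):
        print(" ".join(cmd))
        if not dry_run:
            # check=True raises if a stage fails, so the basis stage
            # never starts after a failed descriptor stage.
            subprocess.run(cmd, check=True)
```

Pass the name of one of the folders under configs/ as `dataset_name`; `run_training(name, dry_run=True)` prints the commands without executing them.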

Please refer to the yaml files for the available training options. We use an inclusion mechanism, so also check the yaml files linked in include_configs.
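To get a feel for how such an inclusion mechanism typically behaves, here is a minimal sketch. It is not the loader used in this repository. It assumes that include_configs is a top-level list of paths, that included files act as defaults, and that the including file overrides them, with nested options merged key by key. The names `deep_merge` and `resolve_config`, and the option names in the example, are hypothetical.

```python
import copy


def deep_merge(base, override):
    """Return a new dict where override wins and nested dicts merge recursively."""
    merged = copy.deepcopy(base)
    for key, value in override.items():
        if isinstance(value, dict) and isinstance(merged.get(key), dict):
            merged[key] = deep_merge(merged[key], value)
        else:
            merged[key] = copy.deepcopy(value)
    return merged


def resolve_config(path, load):
    """Load `path` via `load(path) -> dict` and fold in its include_configs.

    Assumption: included files are applied in order as defaults, then the
    including file's own options override them. No cycle detection is done.
    """
    config = dict(load(path))  # shallow copy so the loaded dict is not mutated
    includes = config.pop("include_configs", [])
    resolved = {}
    for included_path in includes:
        resolved = deep_merge(resolved, resolve_config(included_path, load))
    return deep_merge(resolved, config)


# Example with in-memory "files" (hypothetical option names):
files = {
    "base.yaml": {"batch_size": 4, "optimizer": {"name": "adam", "lr": 0.001}},
    "train.yaml": {"include_configs": ["base.yaml"], "optimizer": {"lr": 0.01}},
}
config = resolve_config("train.yaml", files.__getitem__)
```

With real files, `load` could be a function that opens the path and parses it with PyYAML's `yaml.safe_load`. When reading the configs in this repository, follow the include_configs entries the same way to see where each option comes from.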

The full evaluation process takes multiple input point clouds and runs the synchronization algorithm introduced in our paper. Please run the following script to evaluate a trained model.

    python evaluate.py configs/<DATASET-NAME>/test.yaml

Note: after the basis training phase is completed, the weights of the descriptor network are included in the basis checkpoint. However, the config file for the descriptor network is still needed to recover metadata.