A bad mapping can cause Rally can get into a bad state during bulk indexing

inqueue opened this issue · comments

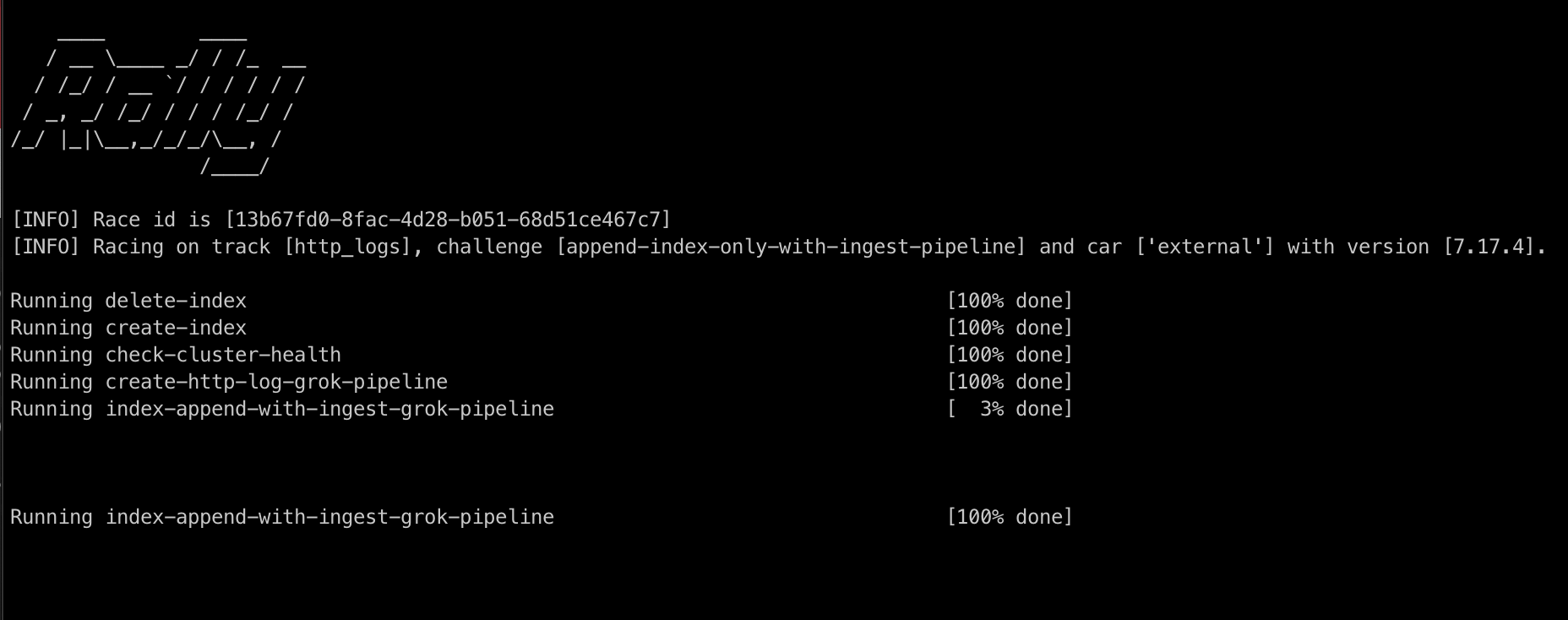

elastic/rally-tracks@cffc864 led to a strange stream of daily failures in the nightly benchmarks for http_logs where jobs were taking a full 24 hours to fail1. The simplified Rally race invocation:

rally race --track="http_logs" --challenge="append-index-only-with-ingest-pipeline" --on-error="abort" --runtime-jdk="bundled" --track-params="{\"source_enabled\": false, \"ingest_pipeline\": \"grok\"}" --pipeline="from-sources"

Use elastic/rally-tracks@cffc864 without --test-mode to reproduce. The issue does not reproduce in test mode. When left to run, Rally displays Running index-append-with-ingest-grok-pipeline [100% done], then remains in the state forever.

2023-03-30 20:38:16,376 ActorAddr-(T|:33597)/PID:8312 esrally.actor ERROR Error in driver

Traceback (most recent call last):

File "/home/esbench/rally/esrally/actor.py", line 92, in guard

return f(self, msg, sender)

^^^^^^^^^^^^^^^^^^^^

File "/home/esbench/rally/esrally/driver/driver.py", line 303, in receiveMsg_WakeupMessage

self.coordinator.post_process_samples()

File "/home/esbench/rally/esrally/driver/driver.py", line 955, in post_process_samples

self.sample_post_processor(raw_samples)

File "/home/esbench/rally/esrally/driver/driver.py", line 1068, in __call__

self.metrics_store.flush(refresh=False)

File "/home/esbench/rally/esrally/metrics.py", line 906, in flush

self._client.bulk_index(index=self._index, items=self._docs)

File "/home/esbench/rally/esrally/metrics.py", line 92, in bulk_index

self.guarded(elasticsearch.helpers.bulk, self._client, items, index=index, chunk_size=5000)

File "/home/esbench/rally/esrally/metrics.py", line 116, in guarded

return target(*args, **kwargs)

^^^^^^^^^^^^^^^^^^^^^^^

File "/home/esbench/.local/lib/python3.11/site-packages/elasticsearch/helpers/actions.py", line 524, in bulk

for ok, item in streaming_bulk(

File "/home/esbench/.local/lib/python3.11/site-packages/elasticsearch/helpers/actions.py", line 438, in streaming_bulk

for data, (ok, info) in zip(

File "/home/esbench/.local/lib/python3.11/site-packages/elasticsearch/helpers/actions.py", line 355, in _process_bulk_chunk

yield from gen

File "/home/esbench/.local/lib/python3.11/site-packages/elasticsearch/helpers/actions.py", line 274, in _process_bulk_chunk_success

raise BulkIndexError(f"{len(errors)} document(s) failed to index.", errors)

elasticsearch.helpers.BulkIndexError: 118 document(s) failed to index.

2023-03-30 20:38:16,384 ActorAddr-(T|:33597)/PID:8312 esrally.actor ERROR Main driver received a fatal exception from a load generator. Shutting down.

2023-03-30 20:38:22,339 ActorAddr-(T|:33597)/PID:8312 esrally.driver.driver.DriverActor WARNING Actor esrally.driver.driver.DriverActor @ ActorAddr-(T|:33597) retryable exception on message <esrally.actor.BenchmarkFailure object at 0x7f083132c

6d0>

Traceback (most recent call last):

File "/home/esbench/.local/lib/python3.11/site-packages/thespian/system/actorManager.py", line 163, in _handleOneMessage

actor_result = self.actorInst.receiveMessage(msg, envelope.sender)

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/home/esbench/.local/lib/python3.11/site-packages/thespian/actors.py", line 838, in receiveMessage

r = getattr(klass, methodName)(self, message, sender)

^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

File "/home/esbench/rally/esrally/driver/driver.py", line 242, in receiveMsg_BenchmarkFailure

self.coordinator.close()

File "/home/esbench/rally/esrally/driver/driver.py", line 921, in close

self.metrics_store.close()

File "/home/esbench/rally/esrally/metrics.py", line 454, in close

self.flush()

File "/home/esbench/rally/esrally/metrics.py", line 906, in flush

self._client.bulk_index(index=self._index, items=self._docs)

File "/home/esbench/rally/esrally/metrics.py", line 92, in bulk_index

self.guarded(elasticsearch.helpers.bulk, self._client, items, index=index, chunk_size=5000)

File "/home/esbench/rally/esrally/metrics.py", line 116, in guarded

return target(*args, **kwargs)

^^^^^^^^^^^^^^^^^^^^^^^

File "/home/esbench/.local/lib/python3.11/site-packages/elasticsearch/helpers/actions.py", line 524, in bulk

for ok, item in streaming_bulk(

File "/home/esbench/.local/lib/python3.11/site-packages/elasticsearch/helpers/actions.py", line 438, in streaming_bulk

for data, (ok, info) in zip(

File "/home/esbench/.local/lib/python3.11/site-packages/elasticsearch/helpers/actions.py", line 355, in _process_bulk_chunk

yield from gen

File "/home/esbench/.local/lib/python3.11/site-packages/elasticsearch/helpers/actions.py", line 274, in _process_bulk_chunk_success

raise BulkIndexError(f"{len(errors)} document(s) failed to index.", errors)

OS: "Ubuntu 18.04.6 LTS

Bad Mapping

The mapping issue was about the date format of @timestamp. The index mapping was set to epoch_second when it should have been strict_date_optional_time. Bulk requests would have encountered this response for every single document in the corpus:

{

"took": 0,

"ingest_took": 0,

"errors": true,

"items": [

{

"index": {

"_index": "logs-181998",

"_type": "_doc",

"_id": "br7lM4cBM1F21Bvv-SHk",

"status": 400,

"error": {

"type": "mapper_parsing_exception",

"reason": "failed to parse field [@timestamp] of type [date] in document with id 'br7lM4cBM1F21Bvv-SHk'. Preview of field's value: '1998-04-30T14:30:17-05:00'",

"caused_by": {

"type": "illegal_argument_exception",

"reason": "failed to parse date field [1998-04-30T14:30:17-05:00] with format [epoch_second]",

"caused_by": {

"type": "date_time_parse_exception",

"reason": "Failed to parse with all enclosed parsers"

}

}

}

}

}

...

}

}These result in a 200 status code response, even though the error status of the individual document was a 400. We should consider exiting if the bulk request responds with errors: true.