Photo ID Creator - A tool to prepare photo IDs

I started this project several years ago with the dream of filling the gaps left by existing web-based and Android/iOS apps that can produce valid photos for IDs and official applications. Being an immigrant, first in Australia and then in the USA, meant that I often found myself needing passport-sized photos for myself and my family members. Of course, this is a service that is offered in many places, but it is never cheap, especially for people starting from the ground up in a new country, possibly without a job, or with a large family. Traditional photo ID services also require a trip to your nearest location. This is usually not a big deal, but what about parents with babies, or people with disabilities or mobility problems?

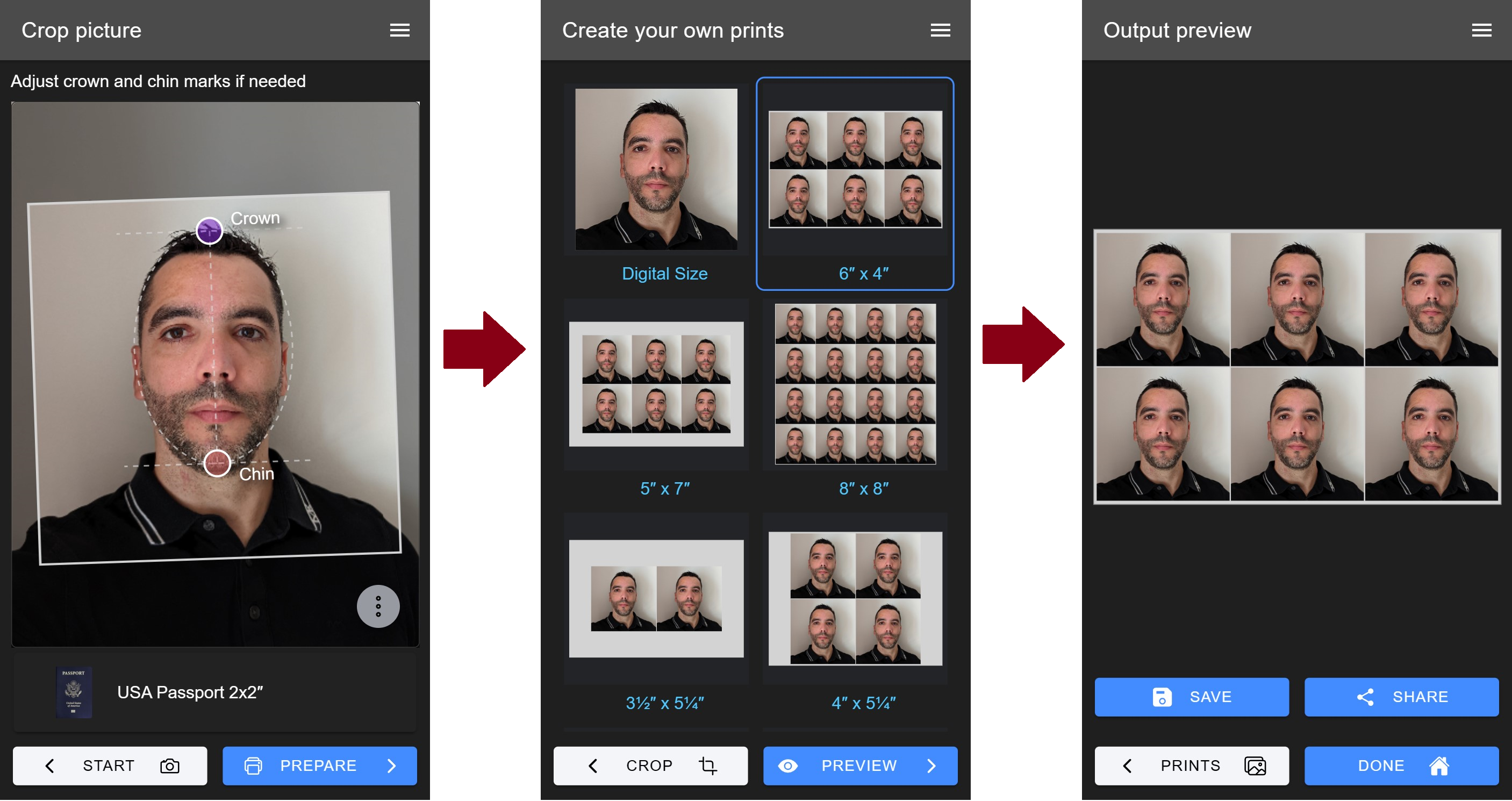

Over the years, the tech stack I've used in this project has changed significantly, but the goal has always been the same: provide a free client-side application that people can use to prepare their own photographs. Firstly, I have always wanted it to be a tool where personal data (i.e. the user's photographs) never leaves their PC or phone, unless they explicitly decide to print it online or share the result electronically. Secondly, I want the workflow to be so simple that if you are capable of taking a picture of someone's face with your phone and know what type of document you need (e.g. a US Passport), the app takes care of the rest with just a couple of clicks.

Obviously, not all photos taken at home with a smartphone camera will have the quality to meet photo ID requirements, but if you are on a budget and/or have the time to ensure you capture a picture of yourself with good resolution, proper focus and illumination, then this app will do the maths to prepare a tiled output photo ready to be printed for much less than a dollar at your closest photo center.

The application is made up of two components: a C++ library compiled to WebAssembly, and a front-end application written in TypeScript using Ionic with Angular.

The application's image processing algorithms are written in modern C++ and compiled to WebAssembly with Emscripten. The C++ code lives in the libppp folder and is built using Bazel. Originally, all C++ code was built with CMake, but since the app now uses MediaPipe, it made sense to move the build system to Bazel given the complexities of porting MediaPipe to a CMake-based build system.

Libraries used in C++ code:

- OpenCV (core, imgproc, imgcodecs, objdetect, etc.)

- MediaPipe and its dependencies (TensorFlow Lite)

- Google Test

The front-end of the application is built using:

- Ionic Framework with Angular

- Interact.js (for gesture handling)

- ngx-color-picker (color picker component)

- flag-icons (icons of country flags)

You'll need a POSIX system with Docker and bash available (WSL2, Linux or Mac). See section Installing WSL2 on Windows if you need help setting it up.

Then either install NPM locally, or open the folder in VSCode using DevContainers, and run the following:

# Build the WebAssembly package

npm run wasm:all

# Start the app in development mode

cd webapp && npm install && npm start

To set up WSL2 with Docker, run the following steps in PowerShell or CMD as Administrator.

:: Check for wsl updates

wsl --update

:: Install Ubuntu 22.04

wsl --install Ubuntu-22.04

Now, inside WSL, install Docker and exit:

echo $(whoami) ALL=\(root\) NOPASSWD:ALL | sudo tee /etc/sudoers.d/$(whoami)

printf '[boot]\nsystemd=true\n' | sudo tee /etc/wsl.conf

sudo apt-get update

sudo apt-get install apt-transport-https ca-certificates curl software-properties-common

curl -fsSL https://download.docker.com/linux/ubuntu/gpg | sudo apt-key add -

sudo add-apt-repository "deb [arch=amd64] https://download.docker.com/linux/ubuntu $(lsb_release -cs) stable"

sudo apt-get update

sudo apt-get install docker-ce -y

sudo usermod -aG docker $USER

sudo service docker start

sudo reboot

If everything worked well, you should be able to run the following in CMD or PowerShell:

wsl -d Ubuntu-22.04 docker run hello-world

If you want to do TypeScript/JavaScript development directly on Windows, install Node.js and NPM on Windows. With PowerShell this can be achieved via:

winget install OpenJS.NodeJS.LTS --accept-source-agreements

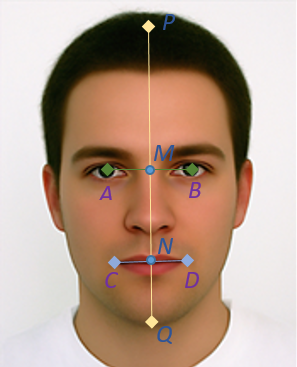

To crop and scale a person's face to a particular photo ID requirement, the following approach was investigated. Given the set of detected facial landmarks A, B, C and D, we would like to estimate P and Q accurately enough that the face in the output photo falls within the limits of the size requirements. In other words, the estimated locations of the crown (P') and chin point (Q') should be such that the ratio of the distance |P'Q'| to the distance between the ideal locations of the crown (P) and chin point (Q) falls within the tolerance range allowed in photo ID specifications. For instance, for the Australian passport, the allowed scale drift range is ±5.88%, given that the face height (chin to crown) must be between 32 mm and 36 mm.
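
The ±5.88% figure can be reproduced by taking the midpoint of the allowed range as the target face height. The symbols $h_0$ (target height) and $\Delta h$ (half-width of the allowed range) are introduced here for illustration only:

$$
h_0 = \frac{32 + 36}{2} = 34\ \text{mm}, \qquad
\Delta h = \frac{36 - 32}{2} = 2\ \text{mm}, \qquad
\frac{\Delta h}{h_0} = \frac{2}{34} \approx 0.0588 = 5.88\%
$$

Under this reading, and assuming the output is scaled so that the estimated face height lands on the 34 mm midpoint, an estimate |P'Q'| that stays within about 5.88% of the true distance |PQ| still yields a face height inside the 32 mm to 36 mm window.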

To develop and validate the proposed approach, facial landmarks from the SCFace database were used. The SCFace database contains images of 130 different subjects, and the frontal images of each individual were carefully annotated by the Biometric Recognition Group - ATVS at Escuela Politecnica Superior of the Universidad Autonoma de Madrid [ATVS].

The procedure to estimate P' and Q' from A, B, C and D is as follows. First, points M and N are found as the midpoints of segments AB and CD respectively; P' and Q' are expected to lie on the line that passes through M and N. Then, using a normalization distance KK = |AB| + |MN| and scale constants α and β, we estimate P'Q' = αKK and M'Q' = βKK. The constants α and β were fitted on the dataset to minimize the estimation error of P' and Q'. Having estimated positions P' and Q' allows us to crop to most photo ID standards defined throughout the world.
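
The estimation procedure can be sketched in C++ as follows. This is a minimal illustration, not the code in libppp: the `Point2d` and `CrownChin` types and the function names are hypothetical, and the values of α and β are left as parameters because the fitted constants are not given here. It also assumes that M'Q' is measured from M, that Q' lies on the line MN on the same side of M as N, and that P' lies a distance αKK from Q' in the opposite direction.

```cpp
#include <cassert>
#include <cmath>

// Minimal 2D point used only for this sketch (hypothetical, not a libppp type).
struct Point2d {
    double x;
    double y;
};

// Estimated crown (P') and chin (Q') positions.
struct CrownChin {
    Point2d crown;
    Point2d chin;
};

Point2d midpointOf(const Point2d& a, const Point2d& b) {
    return {(a.x + b.x) / 2.0, (a.y + b.y) / 2.0};
}

double euclideanDistance(const Point2d& a, const Point2d& b) {
    return std::hypot(b.x - a.x, b.y - a.y);
}

// Estimates P' and Q' from landmarks A, B, C, D and scale constants alpha, beta.
// Assumption: Q' is placed beta*KK away from M along the direction M -> N,
// and P' is placed alpha*KK away from Q' in the opposite direction.
CrownChin estimateCrownChin(const Point2d& a, const Point2d& b,
                            const Point2d& c, const Point2d& d,
                            double alpha, double beta) {
    const Point2d m = midpointOf(a, b);  // midpoint of segment AB
    const Point2d n = midpointOf(c, d);  // midpoint of segment CD
    const double mn = euclideanDistance(m, n);
    assert(mn > 0.0 && "M and N must be distinct to define a direction");

    // Normalization distance KK = |AB| + |MN|.
    const double kk = euclideanDistance(a, b) + mn;

    // Unit vector pointing from M towards N.
    const Point2d u = {(n.x - m.x) / mn, (n.y - m.y) / mn};

    const Point2d chin = {m.x + beta * kk * u.x, m.y + beta * kk * u.y};
    const Point2d crown = {chin.x - alpha * kk * u.x, chin.y - alpha * kk * u.y};
    return {crown, chin};
}
```

For example, with A = (0, 0), B = (4, 0), C = (1, 3), D = (3, 3) and purely illustrative constants α = 2 and β = 1, we get M = (2, 0), N = (2, 3) and KK = 7, so the sketch places Q' at (2, 7) and P' at (2, -7), giving |P'Q'| = 14 = αKK.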