This is my solution to the Continuous Control project of the Udacity Deep Reinforcement Learning course. The original project template is available at https://github.com/udacity/deep-reinforcement-learning/tree/master/p2_continuous-control

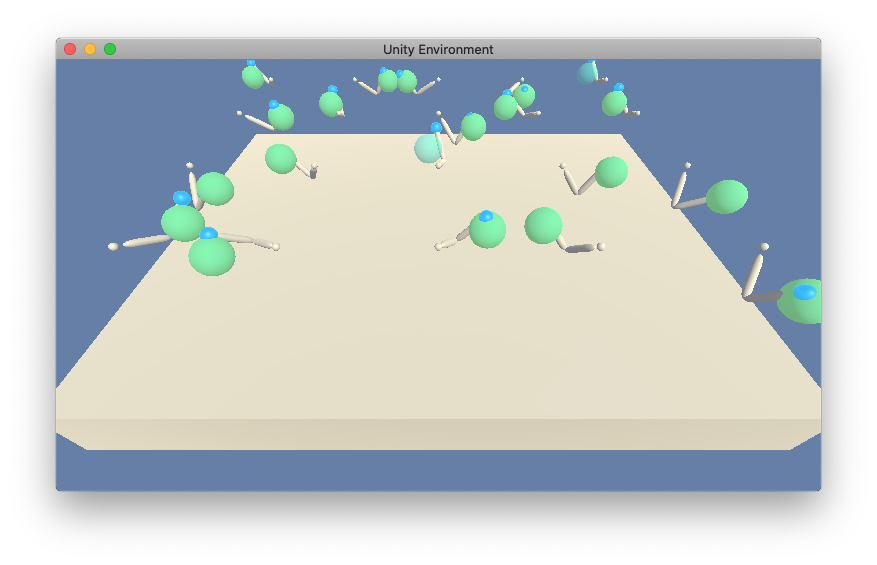

In this environment, a double-jointed arm can move to target locations. A reward of +0.1 is provided for each step that the agent's hand is in the goal location. Thus, the goal of the agent is to maintain its position at the target location for as many time steps as possible.

The observation space consists of 33 variables corresponding to the position, rotation, velocity, and angular velocities of the arm. Each action is a vector of four numbers, corresponding to the torque applied to the two joints. Every entry in the action vector should be a number between -1 and 1.
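As a small illustration of the action constraint, the sketch below clips a raw policy output into the valid range before it would be sent to the environment. It assumes NumPy is installed; the function name `clip_action` and the constant names are hypothetical and are not taken from this repository.

```python
import numpy as np

ACTION_SIZE = 4      # four numbers per action, as described above
ACTION_LOW = -1.0    # lower bound for every entry
ACTION_HIGH = 1.0    # upper bound for every entry


def clip_action(raw_action):
    """Return a copy of raw_action with every entry limited to [-1, 1].

    Hypothetical helper for illustration only. Accepts a single action
    vector or a batch of them, as long as the last axis has 4 entries.
    """
    action = np.asarray(raw_action, dtype=np.float64)
    if action.shape[-1] != ACTION_SIZE:
        raise ValueError("expected %d entries per action" % ACTION_SIZE)
    return np.clip(action, ACTION_LOW, ACTION_HIGH)
```

Clipping is only one way to respect the bounds; a network whose output layer already ends in `tanh` would produce values in this range without it.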

The world contains 20 identical agents, each with its own copy of the environment. The environment is considered solved when the score, averaged over all 20 agents and over 100 episodes, is at least +30.
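The solving criterion can be sketched in a few lines of plain Python. This is a minimal illustration, not the code used in train.py: the class name `SolvedTracker` is hypothetical, and it assumes that the 100 episodes are the most recent consecutive ones.

```python
from collections import deque
from statistics import mean

SOLVED_SCORE = 30.0   # target average score from the description above
WINDOW = 100          # number of episodes to average over (assumed consecutive)


class SolvedTracker:
    """Track episode scores and report when the target average is reached."""

    def __init__(self, window=WINDOW, target=SOLVED_SCORE):
        self.target = target
        self.scores = deque(maxlen=window)   # keeps only the latest episodes

    def add_episode(self, agent_scores):
        """Record one episode; agent_scores holds one total score per agent."""
        episode_score = mean(agent_scores)   # average over all agents
        self.scores.append(episode_score)
        return episode_score

    def is_solved(self):
        """True once a full window of episodes averages at least the target."""
        window_full = len(self.scores) == self.scores.maxlen
        return window_full and mean(self.scores) >= self.target
```

In a training loop, `add_episode` would be called once per episode with the 20 per-agent totals, and training could stop as soon as `is_solved()` returns `True`.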

Besides README.md, this repository contains the following files:

- Report.md provides a description of the implementation

- test.py is the main file for testing

- train.py is the main file for training

- actor.pth holds the trained parameters of the Actor neural network

- critic.pth holds the trained parameters of the Critic neural network

- agent.py implements an agent for training and testing

- env_agent_factory.py creates an environment and its agent

- neural_nets.py creates neural networks for an Actor and a Critic

- replay_buffer.py implements a Replay Buffer

- *_test.py files contain unit tests of the corresponding modules
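For readers unfamiliar with the Replay Buffer mentioned in the list above, the following is a generic sketch of the idea: a fixed-size store of past transitions that is sampled uniformly at random. It is an assumption-laden illustration, not the contents of replay_buffer.py; the names `SimpleReplayBuffer` and `Experience` are hypothetical.

```python
import random
from collections import deque, namedtuple

# One stored transition; the field names are illustrative assumptions.
Experience = namedtuple(
    "Experience", ["state", "action", "reward", "next_state", "done"])


class SimpleReplayBuffer:
    """Fixed-size buffer that stores transitions and samples them uniformly."""

    def __init__(self, capacity, seed=None):
        self.memory = deque(maxlen=capacity)   # oldest items drop out first
        self.rng = random.Random(seed)         # private RNG for repeatability

    def add(self, state, action, reward, next_state, done):
        """Append one transition, evicting the oldest one if the buffer is full."""
        self.memory.append(Experience(state, action, reward, next_state, done))

    def sample(self, batch_size):
        """Return batch_size distinct experiences chosen uniformly at random."""
        return self.rng.sample(list(self.memory), batch_size)

    def __len__(self):
        return len(self.memory)
```

See Report.md for the description of the implementation actually used in this repository.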

All the Python code is pylint-compliant.

To set up your development environment for this project, follow the steps in the Dependencies section of the README.md at https://github.com/udacity/deep-reinforcement-learning/tree/dc65050c8f47b365560a30a112fb84f762005c6b

Basically, you will need:

- Python 3.6

- PyTorch 0.4.0

- Numpy and Matplotlib, compatible with PyTorch

- Unity ML-Agents. The Udacity Continuous Control project requires its own version of this environment, available at https://github.com/udacity/deep-reinforcement-learning/tree/dc65050c8f47b365560a30a112fb84f762005c6b/python together with references to other libraries

The project has been developed and tested on macOS Catalina with a CPU version of PyTorch 0.4.0, and in the Udacity Workspace with a CUDA version of PyTorch.

- Download the project to your PC

- Open environment.py in your text editor and set the correct path to the Reacher simulator with 20 agents in the ENV_PATH variable

- Open your terminal, cd to the project folder

- Run test.py to test the previously trained agent over 100 episodes

- Run train.py to retrain the agent

- Look through Report.md of this repository to learn further details about my solution