Train on a custom dataset

Daniil-Osokin opened this issue · comments

Hi! Here are my notes on how to debug custom data (draw rays) to run with this code. We will use the complete_kitchen scene as an example. Its poses are fine; I have manually broken them for illustration purposes.

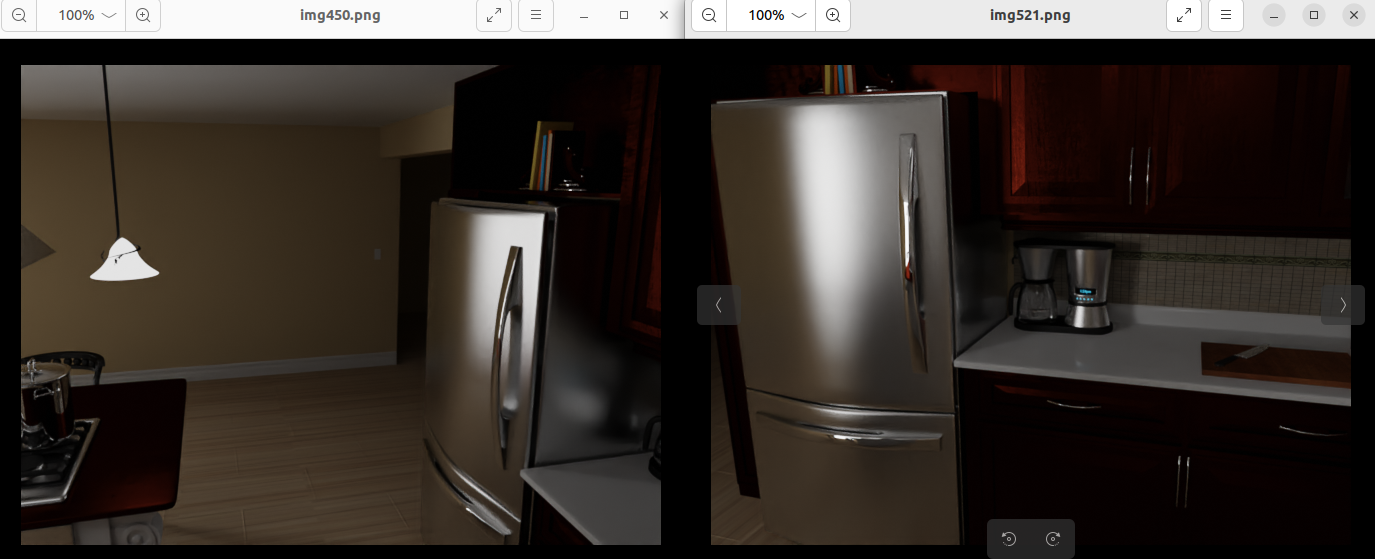

- Create a subset of 4 samples from your dataset (poses, depth and image frames). You can use the attached script for that. The important thing here is to select images with the same object, but captured from different poses. We will use the fridge for this purpose:

- Apply the attached patch (`git apply dump_rays.diff`) to save the rays with colors for the selected frames in `.OBJ` files. These rays can be visualized with MeshLab. Since we have captured the same object from different poses, its rays from different frames should intersect or pass near each other. However, if the poses are in an inconsistent coordinate system, the object will be split:

Those white blobs are our fridge, scattered to different sides of the frame rays.

- So, you should pick a transform that puts the object on the proper side of the frame rays:

Here the fridge is in the common part of the frame rays.

- In my case the transform was to negate the `y` and `z` axes:

    diff --git a/load_scannet.py b/load_scannet.py
    index e9d9018..364a924 100644
    --- a/load_scannet.py
    +++ b/load_scannet.py
    @@ -1,7 +1,7 @@
     import os
     import imageio
     from dataloader_util import *
    -
    +import numpy as np
     def get_training_poses(basedir, translation=0.0, sc_factor=1.0, trainskip=1):
         all_poses, valid = load_poses(os.path.join(basedir, 'trainval_poses.txt'))
    @@ -76,6 +76,8 @@ def load_scannet_data(basedir, trainskip, downsample_factor=1, translation=0.0,
         poses = np.array(poses).astype(np.float32)
         poses[:, :3, 3] += translation
         poses[:, :3, 3] *= sc_factor
    +    to_correct_poses = np.array([[1, 0, 0, 0], [0, -1, 0, 0], [0, 0, -1, 0], [0, 0, 0, 1]], np.float32)
    +    poses = poses @ to_correct_poses.T
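To see what this correction does, here is a minimal NumPy sketch. It assumes the poses are camera-to-world matrices; the example pose (identity rotation, camera at (1, 2, 3)) is made up for illustration. Under that assumption, right-multiplying by the matrix flips the camera's own `y` and `z` axes and leaves the camera position unchanged, which is the usual conversion between OpenCV-style and OpenGL-style camera conventions.

```python
import numpy as np

# Same matrix as in the patch above.
to_correct_poses = np.array(
    [[1, 0, 0, 0], [0, -1, 0, 0], [0, 0, -1, 0], [0, 0, 0, 1]], np.float32
)

# Hypothetical camera-to-world pose: identity rotation, camera at (1, 2, 3).
pose = np.eye(4, dtype=np.float32)
pose[:3, 3] = [1.0, 2.0, 3.0]
poses = pose[None]  # shape (1, 4, 4), a batch of poses

corrected = poses @ to_correct_poses.T

# The rotation columns for y and z (camera axes expressed in world space)
# change sign; the translation column (camera position) is untouched.
print(corrected[0, :3, :3])
print(corrected[0, :3, 3])
```

If your data needs a different fix (for example, negating only one axis), the same pattern applies with a different diagonal; the ray dump is what tells you which one is right.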

Hope it will help someone :) #2, #5, #9, #16, #21, #31.

dump_rays.zip
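For readers who only want the idea behind the ray dump, here is a rough sketch. It is not the attached patch. It assumes camera-to-world poses, pinhole intrinsics, and an OpenGL/NeRF-style camera (x right, y up, looking down -z). The function name `rays_to_obj_lines` and all parameter values are hypothetical. It samples points along each pixel's ray and writes them as colored vertices (`v x y z r g b`), a common non-standard OBJ extension that MeshLab can typically display.

```python
import numpy as np

def rays_to_obj_lines(pose, fx, fy, cx, cy, pixels, colors, depths):
    """Return OBJ vertex lines for points sampled along camera rays.

    pose: 4x4 camera-to-world matrix (assumed convention).
    pixels: (N, 2) pixel coordinates (u, v); colors: (N, 3) RGB in [0, 1].
    depths: distances along the camera's viewing axis at which to sample.
    """
    pose = np.asarray(pose, dtype=np.float64)
    pixels = np.asarray(pixels, dtype=np.float64)
    colors = np.asarray(colors, dtype=np.float64)
    u, v = pixels[:, 0], pixels[:, 1]
    # Ray directions in the camera frame (assumed: y up, camera looks down -z).
    dirs_cam = np.stack(
        [(u - cx) / fx, -(v - cy) / fy, -np.ones_like(u)], axis=-1
    )
    dirs_world = dirs_cam @ pose[:3, :3].T  # rotate directions into world space
    origin = pose[:3, 3]                    # camera center in world space
    lines = []
    for d in depths:
        points = origin + d * dirs_world
        for p, c in zip(points, colors):
            lines.append(
                "v {:.4f} {:.4f} {:.4f} {:.3f} {:.3f} {:.3f}".format(*p, *c)
            )
    return lines

# Made-up example: one red pixel at the image center, identity pose.
lines = rays_to_obj_lines(
    np.eye(4), fx=500.0, fy=500.0, cx=320.0, cy=240.0,
    pixels=[(320, 240)], colors=[(1.0, 0.0, 0.0)],
    depths=np.linspace(0.5, 2.0, 4),
)
obj_text = "\n".join(lines) + "\n"
```

Writing `obj_text` to one `.obj` file per frame and loading all of them into MeshLab together should show whether the colored points of the same object overlap across frames.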

@dazinovic Just added my findings on rays visualization.

Hi @Daniil-Osokin, thanks for your explanation. I followed your recommendations, but I got an IndexError on the valid_poses[i] condition in load_scannet.py.

I don't know whether it is caused by my camera poses (I am not sure they are correct) or by something I am not doing correctly.

Can you please advise me?

Hi! I believe it is due to the dataset format. You can set a breakpoint and check why you are getting IndexError.
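One quick check before stepping through with a debugger is to compare the number of poses in the pose file with the number of frames. The sketch below assumes each pose is stored as 4 non-empty text rows (one 4x4 matrix); that layout is an assumption, so adjust `rows_per_pose` if your file differs. The helper names are hypothetical and not part of the repository.

```python
def count_pose_blocks(pose_text, rows_per_pose=4):
    """Return (number of complete poses, leftover rows) in a pose file's text."""
    rows = [ln for ln in pose_text.splitlines() if ln.strip()]
    return len(rows) // rows_per_pose, len(rows) % rows_per_pose

def check_pose_count(pose_text, num_frames, rows_per_pose=4):
    """Return a list of human-readable problems; an empty list means no mismatch."""
    n_poses, leftover = count_pose_blocks(pose_text, rows_per_pose)
    problems = []
    if leftover:
        problems.append(f"{leftover} extra row(s) do not form a complete pose")
    if n_poses < num_frames:
        # Indexing a per-pose list by frame index would run past its end here.
        problems.append(f"only {n_poses} pose(s) for {num_frames} frame(s)")
    return problems

# Made-up example: one identity pose but two frames.
identity_rows = "1 0 0 0\n0 1 0 0\n0 0 1 0\n0 0 0 1\n"
problems = check_pose_count(identity_rows, num_frames=2)
print(problems)
```

In practice you would read the text of your pose file and count your image files to get `num_frames`; a non-empty result points at the dataset layout and not at the pose values themselves.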