Code for the paper "StableVQA: A Deep No-Reference Quality Assessment Model for Video Stability" (accepted by ACM MM'23).

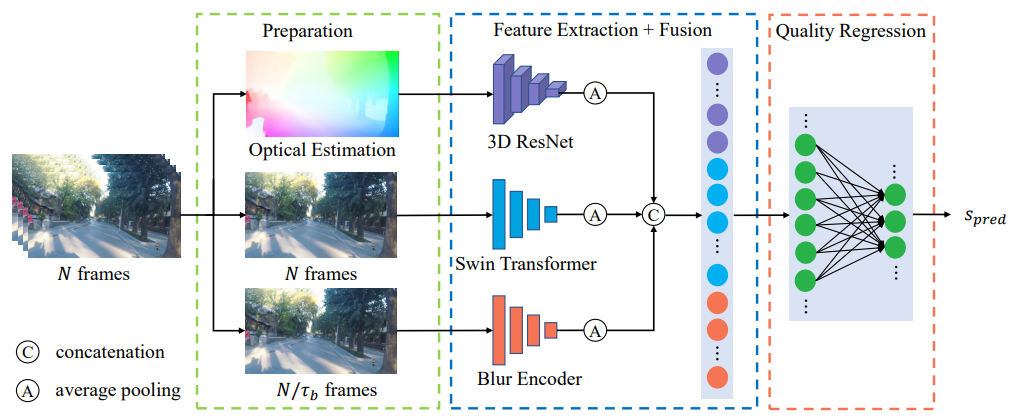

We propose StableVQA for accurate prediction of video stability. It combines three kinds of features:

- Flow feature: after N frames are sampled from the video clip, the optical flows between adjacent frames are estimated and fed into a 3D CNN, which implicitly analyzes the camera movement.
- Semantic feature: a Swin-T extracts semantic features.
- Blur feature: the motion blur within frames is analyzed.

The features from these three dimensions are fused and regressed to produce the final predicted stability score.
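The data flow described above can be sketched in plain Python. This is a minimal illustration under stated assumptions, not the repository's code: uniform frame sampling, concatenation as the fusion step, and a single linear regressor are all assumptions, and the 3D CNN, Swin-T, and blur branches are stubbed out as precomputed feature vectors. The function names are hypothetical.

```python
from typing import List, Sequence, Tuple


def sample_frame_indices(num_frames: int, n: int) -> List[int]:
    """Pick n evenly spaced frame indices from a clip of num_frames frames.

    Assumption: uniform temporal sampling; the repository may sample differently.
    """
    if num_frames < 1 or n < 1 or n > num_frames:
        raise ValueError("need 1 <= n <= num_frames")
    if n == 1:
        return [0]
    # Integer arithmetic keeps the first and last frames and avoids rounding surprises.
    return [(i * (num_frames - 1)) // (n - 1) for i in range(n)]


def adjacent_pairs(indices: Sequence[int]) -> List[Tuple[int, int]]:
    """Frame pairs between which optical flow is estimated (N frames give N-1 flows)."""
    return list(zip(indices[:-1], indices[1:]))


def fuse_and_regress(
    flow_feat: Sequence[float],
    semantic_feat: Sequence[float],
    blur_feat: Sequence[float],
    weights: Sequence[float],
    bias: float,
) -> float:
    """Concatenate the three feature vectors and apply a linear regressor.

    Assumption: concatenation plus one linear layer stands in for the
    model's actual fusion and regression head.
    """
    fused = [*flow_feat, *semantic_feat, *blur_feat]
    if len(fused) != len(weights):
        raise ValueError("weights must match the fused feature length")
    return sum(w * x for w, x in zip(weights, fused)) + bias


if __name__ == "__main__":
    idx = sample_frame_indices(num_frames=100, n=5)
    print(idx)                  # [0, 24, 49, 74, 99]
    print(adjacent_pairs(idx))  # 4 frame pairs for 5 sampled frames
    print(fuse_and_regress([1.0], [2.0], [3.0], [0.5, 0.5, 0.5], bias=0.0))  # 3.0
```

Keeping the three branches separate until the final fusion step mirrors the description above: each branch can be swapped or ablated without touching the others.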

Download StableDB, which contains 1952 unstable videos with their corresponding mean opinion scores (MOSs).

Download the following pre-trained weights:

- RAFT: `raft-things.pth`
- StripFormer: `Stripformer_realblur_J.pth`
- Swin Transformer: `swin_tiny_patch4_window7_224.pth`

The pre-trained weights of StableVQA can be downloaded here.

The default path for these pre-trained weights is `./pretrained_weights/*.pth`.
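Before training or testing, it can help to confirm that the weight files sit in the default folder. The sketch below is a hypothetical helper, not part of the repository; it checks only the three file names listed in this README, because the StableVQA checkpoint's file name is not given here.

```python
from pathlib import Path
from typing import Iterable, List

# File names listed in this README. The StableVQA checkpoint name is not
# stated, so it is deliberately not hard-coded here.
DEFAULT_WEIGHTS = (
    "raft-things.pth",
    "Stripformer_realblur_J.pth",
    "swin_tiny_patch4_window7_224.pth",
)


def missing_weights(root: str = "./pretrained_weights",
                    names: Iterable[str] = DEFAULT_WEIGHTS) -> List[str]:
    """Return the expected .pth files that are not present under root."""
    base = Path(root)
    return [name for name in names if not (base / name).is_file()]


if __name__ == "__main__":
    missing = missing_weights()
    if missing:
        print("Missing pre-trained weights:", ", ".join(missing))
    else:
        print("All expected pre-trained weights found.")
```

Pass a different `root` if the weights are stored elsewhere, and keep the options file in sync with that location.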

The project is built on Python 3.7. Run `pip install -r requirements.txt` to install the dependencies.

Training:

`python new_train.py -o ./options/stable.yml`

Testing:

`python new_test.py -o ./options/stable.yml`

If you use any part of this code, please cite:

@article{kou2023stablevqa,
  title={StableVQA: A Deep No-Reference Quality Assessment Model for Video Stability},
  author={Kou, Tengchuan and Liu, Xiaohong and Sun, Wei and Jia, Jun and Min, Xiongkuo and Zhai, Guangtao and Liu, Ning},
  journal={arXiv preprint arXiv:2308.04904},
  year={2023}
}