NSNet

This is the official PyTorch implementation of the paper

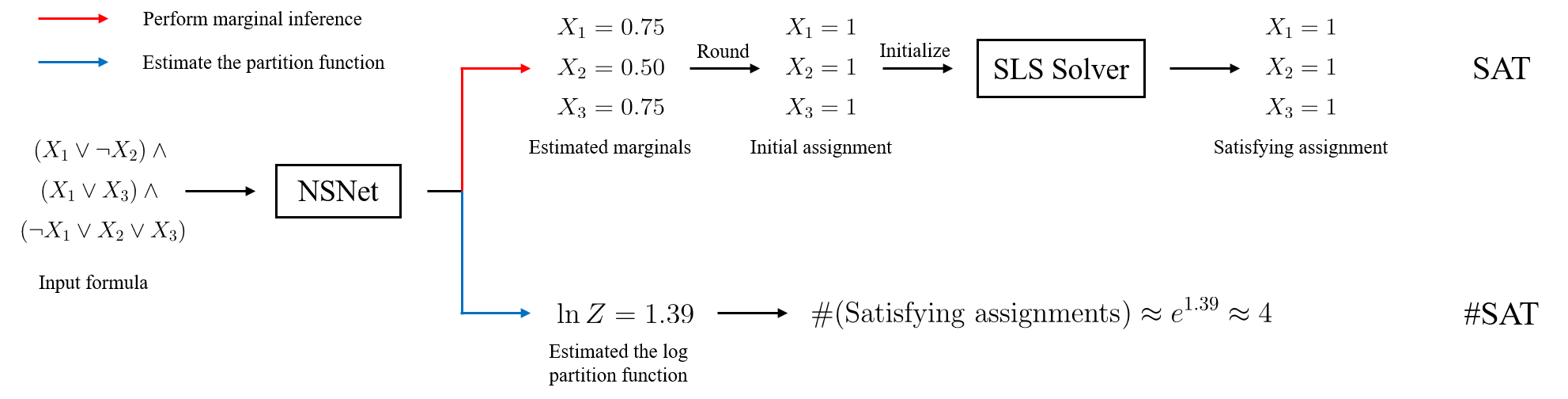

NSNet: A General Neural Probabilistic Framework for Satisfiability Problems

Zhaoyu Li and Xujie Si

In the 36th Conference on Neural Information Processing Systems (NeurIPS 2022).

Installation

Our implementation is mainly based on Python 3.8, PyTorch 1.11.0, and PyG 2.0.4.

Since our codebase includes some external repositories, you can use the following line to clone the repository:

git clone --recurse-submodules https://github.com/zhaoyu-li/NSNet.git

To compile and use the external solvers (DSHARP, MIS), you may use the following lines to set up:

# install GMP and Boost libraries for DSHARP and MIS

sudo apt-get install libgmp3-dev libboost-all-dev

cd NSNet/external/MIS

make

cd ../..

One can then install the other dependencies into a virtual environment using the following lines:

pip install torch==1.11.0

pip install torch-geometric==2.0.4 torch-scatter==2.0.9 torch-sparse==0.6.13

pip install -r requirements.txt

Reproduction

We provide scripts to generate/download the SAT and #SAT datasets and train/evaluate NSNet and other baselines for both SAT and #SAT problems.
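The provided scripts refer to `~/scratch/NSNet` as the data directory, so they need to point at your own location before anything is run. The following is a minimal sketch of one way to do that, assuming the path appears literally in the scripts and that `sed` supports `-i` with a backup suffix (both GNU and BSD `sed` do). The function name `retarget_scripts` and its arguments are hypothetical and not part of the repository.

```shell
# Hypothetical helper, not part of the NSNet repository.
# Rewrites every literal "~/scratch/NSNet" in the given scripts to NEW_DIR,
# keeping a .bak copy of each original file next to it.
# Assumption: NEW_DIR contains no '|' or '&' characters.
retarget_scripts() {
    new_dir="$1"
    shift
    for script in "$@"; do
        # '|' is used as the sed delimiter so slashes in paths need no escaping
        sed -i.bak "s|~/scratch/NSNet|${new_dir}|g" "$script"
    done
}
```

For example, `retarget_scripts /data/NSNet scripts/*.sh` would edit all scripts in place; the `.bak` files can be removed once the result looks right.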

To obtain the SAT and #SAT datasets, you may first use the following scripts:

# replace ~/scratch/NSNet in the following scripts with your own data directory

bash scripts/sat_data.sh

bash scripts/mc_data.sh

To train and evaluate a specific model on a SAT dataset without local search, you may try or modify these scripts:

# test BP on the SR dataset

bash scripts/sat_bp_sr.sh

# train and test NSNet on the 3-SAT dataset

bash scripts/sat_nsnet_3-sat.sh

# train and test NeuroSAT on the CA dataset

bash scripts/sat_neurosat_ca.sh

Note that each of the above scripts evaluates a model both on instances of the same size as those used for training and on larger instances. NSNet and NeuroSAT are also trained in two settings (with marginal supervision and with assignment supervision).
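Since each script covers training plus several evaluations, it can be convenient to run a few of them back to back and keep their output. A minimal sketch, assuming the scripts are plain bash files that signal failure through a non-zero exit status; the helper `run_and_log` and the log directory layout are hypothetical and not part of the repository.

```shell
# Hypothetical helper, not part of the NSNet repository.
# Runs each script with bash, writes its output to LOG_DIR/<name>.log,
# and stops at the first script that exits with a non-zero status.
run_and_log() {
    log_dir="$1"
    shift
    mkdir -p "$log_dir"
    for script in "$@"; do
        name=$(basename "$script" .sh)
        echo "running $script"
        if ! bash "$script" > "$log_dir/$name.log" 2>&1; then
            echo "failed: $script (see $log_dir/$name.log)" >&2
            return 1
        fi
    done
}
```

A call such as `run_and_log logs scripts/sat_bp_sr.sh scripts/sat_nsnet_3-sat.sh` would leave `logs/sat_bp_sr.log` and `logs/sat_nsnet_3-sat.log` behind, and skip the second script if the first one fails.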

To test a specific model on a SAT dataset with local search (i.e., combine a model with the SLS solver Sparrow), you may try these scripts:

# test Sparrow using BP as the initialization method on the SR dataset

bash scripts/sat_bp-sparrow_sr.sh

# test Sparrow using NSNet as the initialization method on the 3-SAT dataset

# one may modify the checkpoint path in the script

bash scripts/sat_nsnet-sparrow_3-sat.sh

# test Sparrow using NeuroSAT as the initialization method on the CA dataset

# one may modify the checkpoint path in the script

bash scripts/sat_neurosat-sparrow_ca.sh

To train and evaluate a specific approach on a #SAT dataset, you may try or modify these scripts:

# test ApproxMC3 on the BIRD benchmark

bash scripts/mc_approxmc3_bird.sh

# train and test NSNet on the BIRD benchmark

bash scripts/mc_nsnet_bird.sh

# test F2 on the SATLIB benchmark

bash scripts/mc_f2_satlib.sh

We run the neural baseline BPNN using its official implementation, with training and testing in the same setting as NSNet.

Citation

If you find this codebase useful in your research, please consider citing the following paper.

@inproceedings{li2022nsnet,

title={{NSN}et: A General Neural Probabilistic Framework for Satisfiability Problems},

author={Zhaoyu Li and Xujie Si},

booktitle={Advances in Neural Information Processing Systems (NeurIPS)},

year={2022},

}