How to override StandardUpdater.update_core method when using TorchModule class

take0212 opened this issue

I'm trying to call a PyTorch model using the TorchModule class.

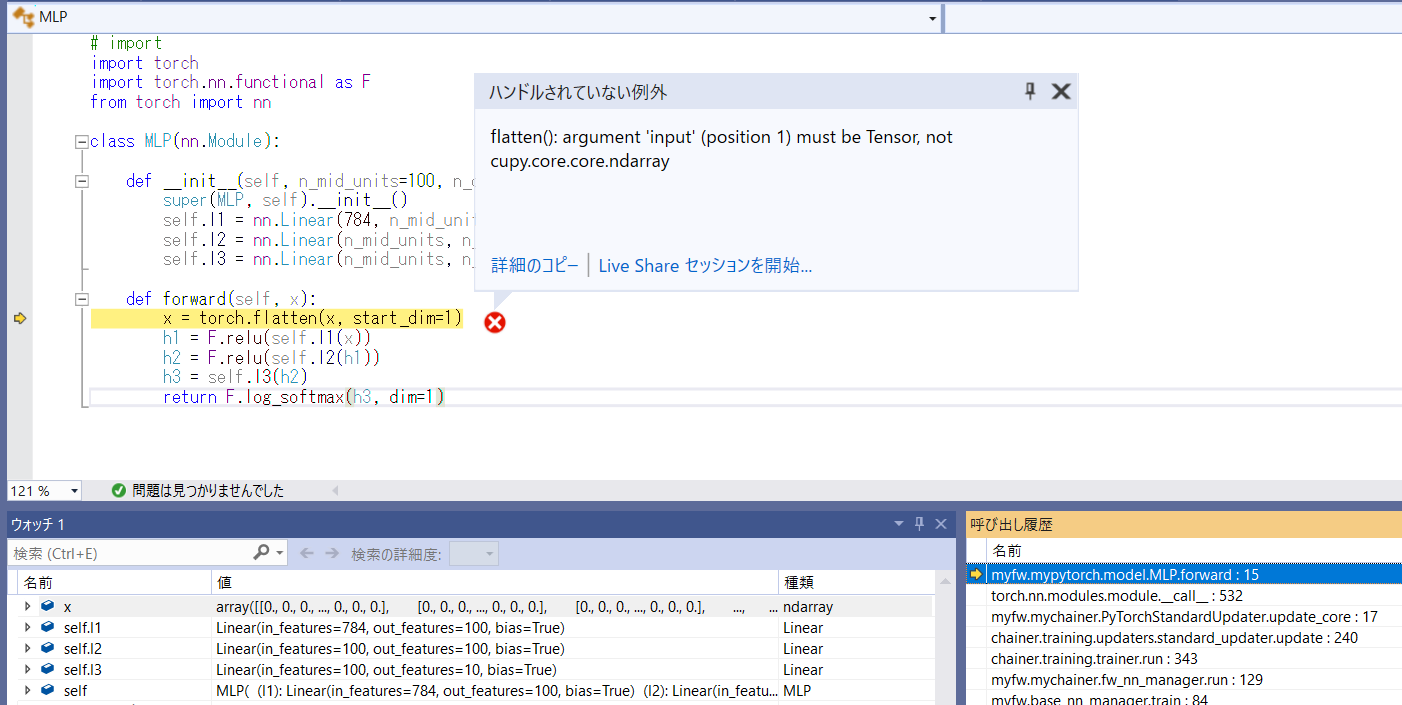

However, while running the StandardUpdater.update_core method, I get the following error.

Can you give me any advice?

The code of the overridden update_core method is below.

```python
from chainer.training.updaters import StandardUpdater
from chainer.dataset import convert


class PyTorchStandardUpdater(StandardUpdater):

    def update_core(self):
        iterator = self._iterators['main']
        batch = iterator.next()
        in_arrays = convert._call_converter(
            self.converter, batch, self.input_device)

        optimizer = self._optimizers['main']
        # loss_func = self.loss_func or optimizer.target
        model = optimizer.target.module
        y = model(in_arrays[0])
        t = in_arrays[1]

        import torch.nn.functional as F
        loss = F.nll_loss(y, t)
        loss.backward()
        optimizer.update()

        # if isinstance(in_arrays, tuple):
        #     optimizer.update(loss_func, *in_arrays)
        # elif isinstance(in_arrays, dict):
        #     optimizer.update(loss_func, **in_arrays)
        # else:
        #     optimizer.update(loss_func, in_arrays)

        if self.auto_new_epoch and iterator.is_new_epoch:
            optimizer.new_epoch(auto=True)
```

I want to call a PyTorch model from a Chainer trainer.

To implement the code, I followed the migration guide:

https://chainer.github.io/migration-guide/#h.79n4spowwnun

I created a subclass of the StandardUpdater class and overrode the update_core method.

It seems that it is necessary to convert CuPy arrays to torch tensors.

But that is difficult for me.

I need more sample code.

You need to convert the x argument of the forward method to a torch.tensor instead of passing a CuPy array.

Try using torch.utils.data.DataLoader instead of Chainer iterators.
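A minimal sketch of the DataLoader suggestion, assuming the dataset is an indexable sequence of `(x, t)` NumPy tuples like the TupleDataset that Chainer's MNIST loader returns. Random data stands in for MNIST here. The default collate function stacks each field into a torch tensor, so no CuPy arrays ever reach the model. The `.long()` cast is needed because `F.nll_loss` expects int64 targets, while Chainer's MNIST labels are int32 by default.

```python
import numpy as np
import torch
from torch.utils.data import DataLoader

# Stand-in for a Chainer dataset: a sequence of (x, t) tuples of NumPy data.
# Random values replace real MNIST images for illustration only.
rng = np.random.default_rng(0)
dataset = [
    (rng.random(784, dtype=np.float32), np.int32(i % 10))
    for i in range(100)
]

# DataLoader only needs __len__ and __getitem__, which a list (or a Chainer
# TupleDataset) provides; the default collate_fn turns NumPy data into tensors.
loader = DataLoader(dataset, batch_size=32, shuffle=True)

x, t = next(iter(loader))
t = t.long()  # F.nll_loss expects int64 class indices
print(x.shape, x.dtype, t.dtype)
# prints: torch.Size([32, 784]) torch.float32 torch.int64
```

To move a batch to the GPU, call `x.to('cuda:0')` and `t.to('cuda:0')` inside the training loop.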

Thank you for your advice.

I should rewrite the code as follows, shouldn't I?

- Rewrite the code to use the DataLoader class instead of the SerialIterator class.

- No need to change how the datasets are set up (in this case, mnist.get_mnist()).

It should be fine that way.

I uploaded my code to GitHub.

https://github.com/take0212/pytorch-chainer-combination

Could you please check them?

The migration guide says:

https://chainer.github.io/migration-guide/#h.im0z6zf5ujzw

The graph (forward/backward) must be constructed and traversed in PyTorch.
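To make the quoted rule concrete, here is a minimal pure-PyTorch training step in which both the forward graph and the backward traversal are handled by autograd. The two-layer model and `torch.optim.SGD` are illustrative assumptions; in this thread the parameters are updated through a Chainer optimizer wrapping TorchModule instead. The point is that `y`, `loss`, and the gradients are all torch objects, and gradients must be cleared between steps because `backward()` accumulates them.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F

torch.manual_seed(0)

# Hypothetical classifier; LogSoftmax output is what F.nll_loss expects.
model = nn.Sequential(
    nn.Linear(784, 100), nn.ReLU(), nn.Linear(100, 10), nn.LogSoftmax(dim=1)
)
opt = torch.optim.SGD(model.parameters(), lr=0.1)


def train_step(x, t):
    opt.zero_grad()          # clear gradients left over from the previous step
    y = model(x)             # forward: PyTorch records the graph
    loss = F.nll_loss(y, t)  # t must be int64 class indices
    loss.backward()          # backward: PyTorch traverses the same graph
    opt.step()               # apply the SGD update to the torch parameters
    return loss.item()


# Random batch standing in for real data; repeated steps should reduce the loss.
x = torch.randn(32, 784)
t = torch.randint(0, 10, (32,))
losses = [train_step(x, t) for _ in range(20)]
print(losses[0], losses[-1])
```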

The model needs its inputs to be torch tensors; with the Chainer converter in your updater, you are creating Chainer arrays:

```python
# Method of PyTorchStandardUpdater; assumes `import torch` at module level.
def update_core(self):
    iterator = self._iterators['main']
    batch = iterator.next()
    in_arrays = convert._call_converter(
        self.converter, batch, self.input_device)

    # Cast the converter outputs to torch tensors on the GPU.
    X = torch.tensor(in_arrays[0]).to('cuda:0')
    t = torch.tensor(in_arrays[1]).long().to('cuda:0')

    optimizer = self._optimizers['main']
    # loss_func = self.loss_func or optimizer.target
    model = optimizer.target.module
    y = model(X)
```

The self.input_device also needs to be a torch device.

You can pass a custom converter function to the updater, so that you can simply cast the outputs of the Chainer converter to torch tensors.
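One way to write such a converter, as a hedged sketch: it stacks the batch on the host with NumPy (standing in for Chainer's concat_examples called without a device) and only then moves the tensors with torch, so CuPy is never involved. The helper `to_torch_device` is hypothetical; it assumes the converter receives either no device, a torch device or device string, or a legacy Chainer integer id where -1 means CPU and n >= 0 means GPU n.

```python
import numpy as np
import torch


def to_torch_device(device):
    # Hypothetical helper. Assumption: device is None, a torch.device,
    # a string such as 'cuda:0', or a legacy Chainer integer id
    # (-1 = CPU, n >= 0 = GPU n).
    if device is None:
        return torch.device('cpu')
    if isinstance(device, torch.device):
        return device
    if isinstance(device, int):
        return torch.device('cpu') if device < 0 else torch.device('cuda', device)
    return torch.device(device)


def tensor_converter(batch, device=None):
    # Concatenate on the host with NumPy so no CuPy arrays are created,
    # then convert to torch tensors and move them to the target device.
    xs, ts = zip(*batch)
    x = torch.as_tensor(np.stack(xs))
    t = torch.as_tensor(np.stack(ts)).long()  # int64 targets for F.nll_loss
    dev = to_torch_device(device)
    return x.to(dev), t.to(dev)
```

The `device=None` default lets the function be called with or without a device argument; whether the updater passes one, and in which form, is the assumption stated above.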

Also, you may run into problems when using Chainer extensions with torch models, so expect errors there.

Thank you for your kind support.

I will confirm it within a few days.

I saw the following news from your company.

https://preferred.jp/en/news/pr20200512/

I'm considering using the ExtensionsManager class.

Thanks for your sample code.

Now it works fine.

I'm investigating a little more.

The Evaluator extension does not work.

It didn't work as the migration guide led me to expect.

However, based on the information in pytorch-pfn-extras, I expect it can be made to work.

As you commented, I made the following custom converter function.

```python
def tensor_converter(batch, device):
    in_arrays = convert.concat_examples(batch, device)
    in_arrays_tensor = (torch.tensor(in_arrays[0]).to(device),
                        torch.tensor(in_arrays[1]).long().to(device))
    return in_arrays_tensor
```

I passed the converter function to the updater.

```python
updater = PyTorchStandardUpdater(
    self.train_loader, self.optimizer, converter=tensor_converter,
    device=self.device, loss_func=F.nll_loss)
```

I modified my sample code, and it now runs without errors.

However, the model does not learn at all.

I pushed the updated code to my GitHub repository.

Could you check it again?

I would like to use a PyTorch model in Chainer code (chainer.datasets and chainer.training.extensions).

Is it possible?

Should I replace chainer.iterators.SerialIterator with torch.utils.data.DataLoader instead of passing a custom converter to StandardUpdater to convert CuPy arrays to tensors?

Same as the other issue.

Please provide a single .py file with the minimum code needed to reproduce the issue.

Thank you

If I use a PyTorch model with a Chainer trainer, can I get it working with the following changes?

- Pass the TorchModule, a PyTorch loss function, and a PyTorch accuracy function to L.Classifier.

```python
model = pytorch_model()
model = model.cuda()
model = cpm.TorchModule(model)
model = L.Classifier(model, lossfun=pytorch_loss_function,
                     accfun=pytorch_accuracy_function)
model.to_gpu(device)
```

- Pass a custom concat_examples function that returns tensors to StandardUpdater.
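The `pytorch_loss_function` and `pytorch_accuracy_function` names above are placeholders. A minimal sketch of what they might look like, assuming the model outputs log-probabilities (as `F.nll_loss` requires) and the targets are int64 class indices. Whether L.Classifier can report the returned torch tensors without further changes is not covered here.

```python
import torch
import torch.nn.functional as F


def pytorch_loss_function(y, t):
    # y: (N, C) log-probabilities, t: (N,) int64 class indices
    return F.nll_loss(y, t)


def pytorch_accuracy_function(y, t):
    # Fraction of samples whose highest-scoring class equals the target
    pred = y.argmax(dim=1)
    return (pred == t).float().mean()


y = torch.log_softmax(torch.tensor([[2.0, 0.0], [0.0, 2.0], [2.0, 0.0]]), dim=1)
t = torch.tensor([0, 1, 1])
print(pytorch_accuracy_function(y, t).item())  # about 0.667 (2 of 3 correct)
```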

I added a PR with an example of the above; it is not easy, but it is doable.

This library is not intended for production use or for new projects written from scratch.

If you want to use PyTorch models with Chainer-like trainers and extensions, please use pytorch-pfn-extras with Ignite. Support for this library will end soon.

Thank you very much for the sample code.

I'll check it within a few days.

Thank you for your great advice.

Finally I was able to port your sample code to my code.

I will upload it on my github and comment here this weekend.

Next, I'll try to fix another sample code this weekend.

It is the code that runs a Chainer model with Ignite and pytorch-pfn-extras, which I shared with you previously.

Thanks for letting us know!

Let us close this issue.

Thank you!

I uploaded the fixed code to my github.

When I ported your code to my sample code, I made the following changes.

- Pass the classifier instance as the loss_func argument to the updater instance. Therefore, it is not necessary to call the updater.set_model method.

- Use the pytorch_pfn_extras.nn.LazyLinear class in the PyTorch model.
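pytorch_pfn_extras.nn.LazyLinear plays the role of Chainer's `L.Linear(None, n)`: the input size is inferred on the first forward pass. The sketch below swaps in `torch.nn.LazyLinear`, the equivalent that ships with PyTorch 1.8 and later, so it runs without pytorch-pfn-extras; the layer sizes are arbitrary. Because the weights do not exist until that first call, the sketch runs a dummy batch through the model before creating the optimizer, a common precaution with lazy layers.

```python
import torch
import torch.nn as nn

# Hypothetical MLP: only output sizes are given; input sizes are inferred.
model = nn.Sequential(
    nn.LazyLinear(100),
    nn.ReLU(),
    nn.LazyLinear(10),
    nn.LogSoftmax(dim=1),
)

# Dry run with a dummy batch so the lazy layers materialize their weights.
with torch.no_grad():
    model(torch.zeros(1, 784))

print(model[0].weight.shape)  # torch.Size([100, 784])
optimizer = torch.optim.SGD(model.parameters(), lr=0.01)
```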

If you have any comments, please reply.

If I don't hear back, I'll publish the sample code.

I appreciate your support so far.

I published it.

https://github.com/take0212/pytorch-chainer-combination