Yuanhao Cai*, Jing Lin*, Xiaowan Hu, Haoqian Wang, Xin Yuan, Yulun Zhang, Radu Timofte, and Luc Van Gool

- Binarized Spectral Compressive Imaging (NeurIPS 2023)

- Mask-guided Spectral-wise Transformer for Efficient Hyperspectral Image Reconstruction (CVPR 2022)

- Coarse-to-Fine Sparse Transformer for Hyperspectral Image Reconstruction (ECCV 2022)

- Degradation-Aware Unfolding Half-Shuffle Transformer for Spectral Compressive Imaging (NeurIPS 2022)

- MST++: Multi-stage Spectral-wise Transformer for Efficient Spectral Reconstruction (CVPRW 2022)

- HDNet: High-resolution Dual-domain Learning for Spectral Compressive Imaging (CVPR 2022)

This is a baseline and toolbox for spectral compressive imaging reconstruction. This repo supports over 15 algorithms. Our method MST++ won the NTIRE 2022 Challenge on spectral recovery from RGB images. If you find this repo useful, please give it a star ⭐ and consider citing our paper in your research. Thank you.

- 2024.04.09 : We release the results of three traditional model-based methods, i.e., TwIST, GAP-TV, and DeSCI, to facilitate your research. Feel free to use them. 😄

- 2024.03.21 : Our methods Retinexformer and MST++ (NTIRE 2022 Spectral Reconstruction Challenge Winner) ranked top-2 in the NTIRE 2024 Challenge on Low Light Enhancement. Code, pre-trained models, training logs, and enhancement results will be released in the repo of Retinexformer. Stay tuned! 🚀

- 2024.02.15 : NTIRE 2024 Challenge on Low Light Enhancement begins. You are welcome to use our Retinexformer or MST++ (NTIRE 2022 Spectral Reconstruction Challenge Winner) to participate in this challenge! 🏆

- 2023.12.02 : Code for real experiments has been updated. You are welcome to check and use it. 🥳

- 2023.11.24 : Code, models, and results of BiSRNet (NeurIPS 2023) are released in this repo. We also develop a toolbox BiSCI for binarized SCI reconstruction. Feel free to check and use them. 🌟

- 2023.11.02 : MST, MST++, CST, and DAUHST are added to the Awesome-Transformer-Attention collection. 💫

- 2023.09.21 : Our new work BiSRNet is accepted by NeurIPS 2023. Code will be released in this repo and BiSCI.

- 2023.02.26 : We release the RGB images of five real scenes and ten simulation scenes. Please feel free to check and use them. 🌟

- 2022.11.02 : We have provided more visual results of state-of-the-art methods and the function to evaluate the parameters and computational complexity of models. Please feel free to check and use them. 🔆

- 2022.10.23 : Code, models, and reconstructed HSI results of DAUHST have been released. 🔥

- 2022.09.15 : Our DAUHST has been accepted by NeurIPS 2022. Code and models are coming soon. 🚀

- 2022.07.20 : Code, models, and reconstructed HSI results of CST have been released. 🔥

- 2022.07.04 : Our paper CST has been accepted by ECCV 2022. Code and models are coming soon. 🚀

- 2022.06.14 : Code and models of MST and MST++ have been released. This repo supports 12 learning-based methods to serve as a toolbox for Spectral Compressive Imaging. The model zoo will be enlarged. 🔥

- 2022.05.20 : Our work DAUHST is on arXiv. 💫

- 2022.04.02 : Our follow-up work MST++ won the NTIRE 2022 Spectral Reconstruction Challenge. 🏆

- 2022.03.09 : Our work CST is on arXiv. 💫

- 2022.03.02 : Our paper MST has been accepted by CVPR 2022. Code and models are coming soon. 🚀

| Scene 2 | Scene 3 | Scene 4 | Scene 7 |
|---|---|---|---|

12 learning-based algorithms and 3 model-based methods are supported.

Supported algorithms:

We are going to enlarge our model zoo in the future.

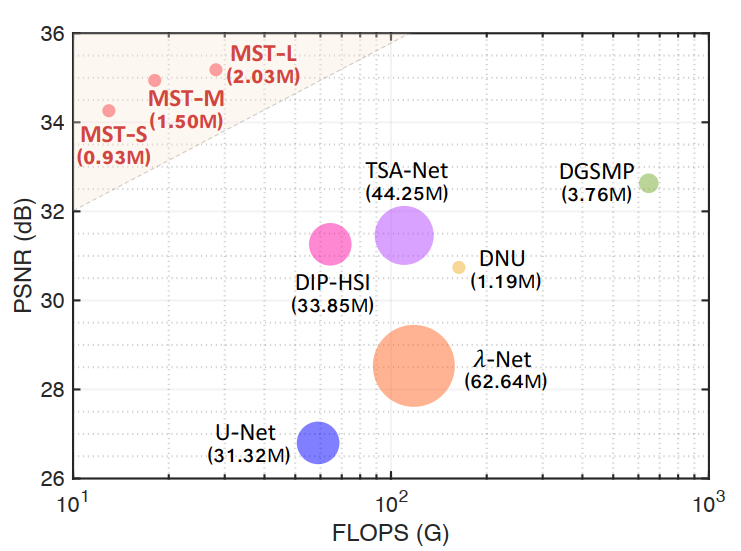

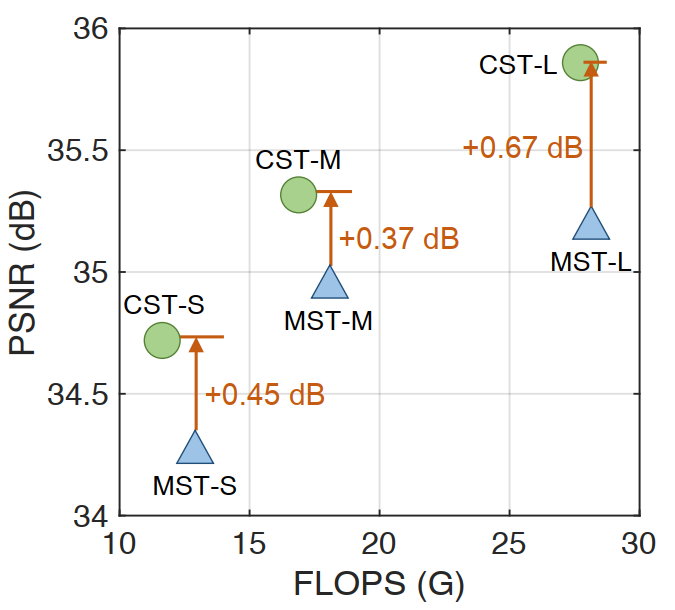

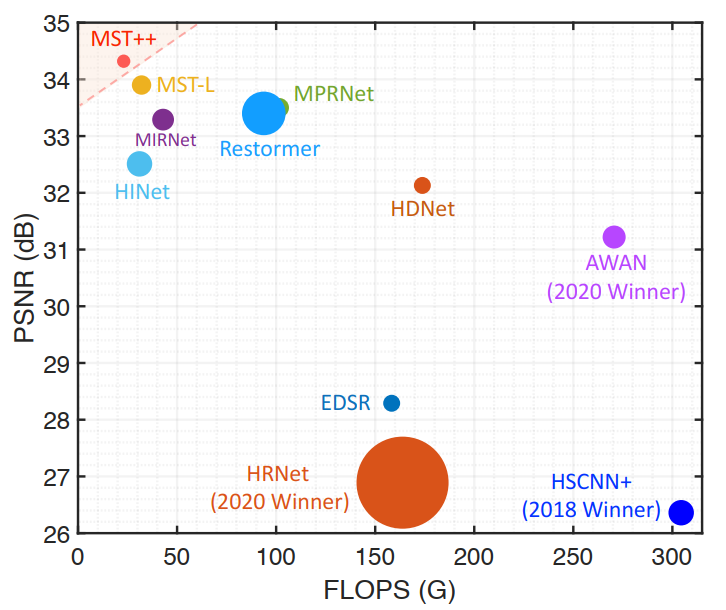

| MST vs. SOTA | CST vs. MST |
|---|---|

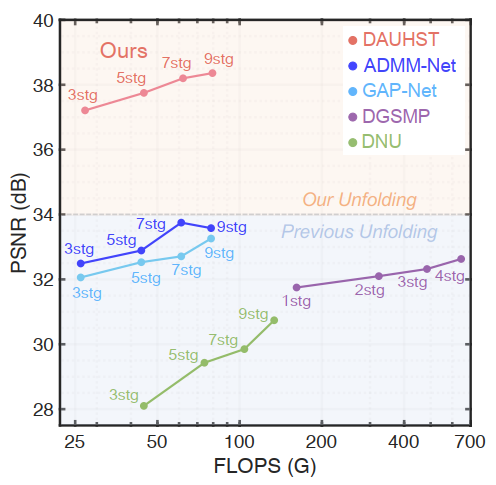

| MST++ vs. SOTA | DAUHST vs. SOTA |
|---|---|

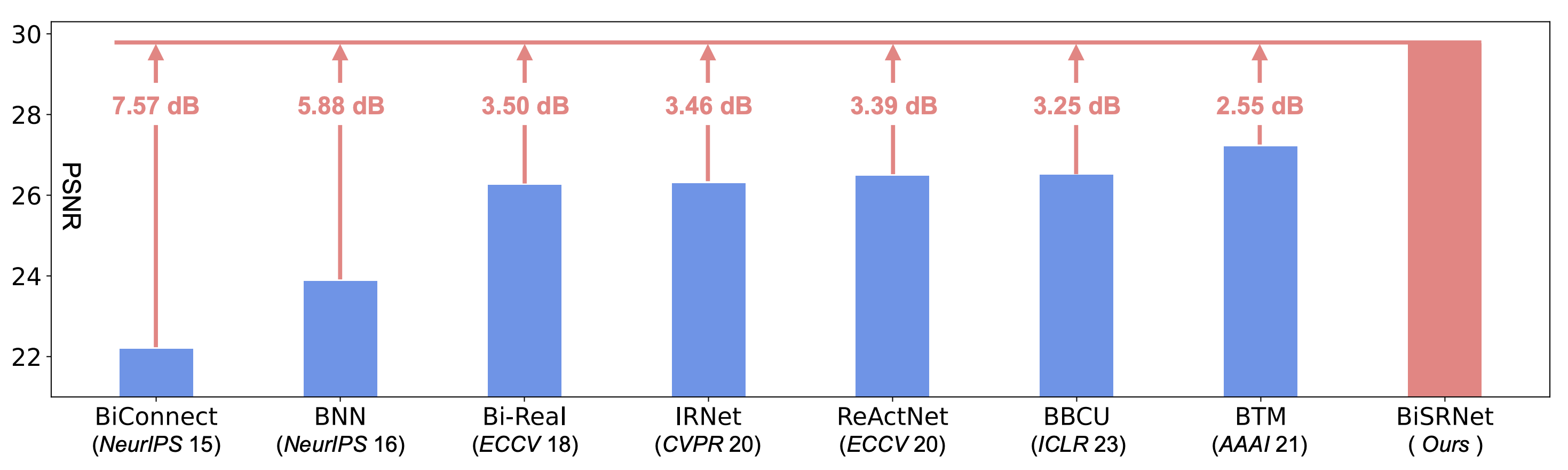

| BiSRNet vs. SOTA BNNs |
|---|

Performance is reported on 10 scenes of the KAIST dataset. FLOPS are computed for an input size of 256 × 256.

We also provide the RGB images of five real scenes and ten simulation scenes to help you draw figures.

Note: the access code for Baidu Disk is mst1.

```shell
pip install -r requirements.txt
```

Download cave_1024_28 (Baidu Disk, code: fo0q | One Drive), CAVE_512_28 (Baidu Disk, code: ixoe | One Drive), KAIST_CVPR2021 (Baidu Disk, code: 5mmn | One Drive), TSA_simu_data (Baidu Disk, code: efu8 | One Drive), and TSA_real_data (Baidu Disk, code: eaqe | One Drive), then put them into the corresponding folders of `datasets/` and organize them as follows:

```
|--MST
    |--real
        |-- test_code
        |-- train_code
    |--simulation
        |-- test_code
        |-- train_code
    |--visualization
    |--datasets
        |--cave_1024_28
            |--scene1.mat
            |--scene2.mat
            :
            |--scene205.mat
        |--CAVE_512_28
            |--scene1.mat
            |--scene2.mat
            :
            |--scene30.mat
        |--KAIST_CVPR2021
            |--1.mat
            |--2.mat
            :
            |--30.mat
        |--TSA_simu_data
            |--mask.mat
            |--Truth
                |--scene01.mat
                |--scene02.mat
                :
                |--scene10.mat
        |--TSA_real_data
            |--mask.mat
            |--Measurements
                |--scene1.mat
                |--scene2.mat
                :
                |--scene5.mat
```

Following TSA-Net and DGSMP, we use the CAVE dataset (cave_1024_28) as the simulation training set. Both the CAVE (CAVE_512_28) and KAIST (KAIST_CVPR2021) datasets are used as the real training set.
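Before training, it can save time to confirm that the downloaded data matches the directory tree above. The sketch below checks a few representative files per dataset folder; the helper `missing_dataset_files` and the choice of files to check are our own (hypothetical, not part of the repo), and it assumes you run it from the `MST/` directory.

```python
from pathlib import Path

# Representative files per folder, transcribed from the directory tree above.
# This is a hypothetical sanity check, not part of the repo.
EXPECTED = {
    "cave_1024_28": ["scene1.mat", "scene205.mat"],
    "CAVE_512_28": ["scene1.mat", "scene30.mat"],
    "KAIST_CVPR2021": ["1.mat", "30.mat"],
    "TSA_simu_data": ["mask.mat", "Truth/scene01.mat", "Truth/scene10.mat"],
    "TSA_real_data": ["mask.mat", "Measurements/scene1.mat", "Measurements/scene5.mat"],
}


def missing_dataset_files(datasets_root):
    """Return the expected relative paths that are not files under datasets_root."""
    root = Path(datasets_root)
    missing = []
    for folder, files in EXPECTED.items():
        for name in files:
            if not (root / folder / name).is_file():
                missing.append(f"{folder}/{name}")
    return missing


if __name__ == "__main__":
    # Assumes the current directory is MST/, so the data lives in ./datasets
    for rel in missing_dataset_files("datasets"):
        print("missing:", rel)
```

An empty result means every checked file is in place; it does not verify the contents of the `.mat` files.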

```shell
cd MST/simulation/train_code/

# MST_S
python train.py --template mst_s --outf ./exp/mst_s/ --method mst_s

# MST_M
python train.py --template mst_m --outf ./exp/mst_m/ --method mst_m

# MST_L
python train.py --template mst_l --outf ./exp/mst_l/ --method mst_l

# CST_S
python train.py --template cst_s --outf ./exp/cst_s/ --method cst_s

# CST_M
python train.py --template cst_m --outf ./exp/cst_m/ --method cst_m

# CST_L
python train.py --template cst_l --outf ./exp/cst_l/ --method cst_l

# CST_L_Plus
python train.py --template cst_l_plus --outf ./exp/cst_l_plus/ --method cst_l_plus

# GAP-Net
python train.py --template gap_net --outf ./exp/gap_net/ --method gap_net

# ADMM-Net
python train.py --template admm_net --outf ./exp/admm_net/ --method admm_net

# TSA-Net
python train.py --template tsa_net --outf ./exp/tsa_net/ --method tsa_net

# HDNet
python train.py --template hdnet --outf ./exp/hdnet/ --method hdnet

# DGSMP
python train.py --template dgsmp --outf ./exp/dgsmp/ --method dgsmp

# BIRNAT
python train.py --template birnat --outf ./exp/birnat/ --method birnat

# MST_Plus_Plus
python train.py --template mst_plus_plus --outf ./exp/mst_plus_plus/ --method mst_plus_plus

# λ-Net
python train.py --template lambda_net --outf ./exp/lambda_net/ --method lambda_net

# DAUHST-2stg
python train.py --template dauhst_2stg --outf ./exp/dauhst_2stg/ --method dauhst_2stg

# DAUHST-3stg
python train.py --template dauhst_3stg --outf ./exp/dauhst_3stg/ --method dauhst_3stg

# DAUHST-5stg
python train.py --template dauhst_5stg --outf ./exp/dauhst_5stg/ --method dauhst_5stg

# DAUHST-9stg
python train.py --template dauhst_9stg --outf ./exp/dauhst_9stg/ --method dauhst_9stg

# BiSRNet
python train.py --template bisrnet --outf ./exp/bisrnet/ --method bisrnet
```

- The training log, trained model, and reconstructed HSI will be available in `MST/simulation/train_code/exp/`.
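Every simulation training command above follows the same pattern: one method name is passed to `--template`, `--outf`, and `--method`. If you want to queue several runs, a small launcher like the hypothetical one below can generate them. The helper names (`train_command`, `run_all`) are ours, and the script assumes it is run from `MST/simulation/train_code/` and that runs execute one after another. It only prints the commands unless `dry_run=False`.

```python
import shlex
import subprocess

# Method names copied from the simulation training commands above.
METHODS = [
    "mst_s", "mst_m", "mst_l", "cst_s", "cst_m", "cst_l", "cst_l_plus",
    "gap_net", "admm_net", "tsa_net", "hdnet", "dgsmp", "birnat",
    "mst_plus_plus", "lambda_net", "dauhst_2stg", "dauhst_3stg",
    "dauhst_5stg", "dauhst_9stg", "bisrnet",
]


def train_command(method):
    """Build the train.py argument list for one method (same name for all three flags)."""
    return ["python", "train.py", "--template", method,
            "--outf", f"./exp/{method}/", "--method", method]


def run_all(methods, dry_run=True):
    """Print each training command and, if dry_run is False, run them sequentially."""
    for method in methods:
        cmd = train_command(method)
        print(shlex.join(cmd))
        if not dry_run:
            # check=True stops the sweep at the first run that exits with an error
            subprocess.run(cmd, check=True)


if __name__ == "__main__":
    run_all(["mst_s", "cst_s"])  # dry run: only prints the two commands
```

Passing an argument list (rather than a single string) to `subprocess.run` avoids shell quoting issues.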

Download the pretrained model zoo from (Google Drive / Baidu Disk, code: mst1) and place it in `MST/simulation/test_code/model_zoo/`.

Run the following command to test the model on the simulation dataset.

```shell
cd MST/simulation/test_code/

# MST_S
python test.py --template mst_s --outf ./exp/mst_s/ --method mst_s --pretrained_model_path ./model_zoo/mst/mst_s.pth

# MST_M
python test.py --template mst_m --outf ./exp/mst_m/ --method mst_m --pretrained_model_path ./model_zoo/mst/mst_m.pth

# MST_L
python test.py --template mst_l --outf ./exp/mst_l/ --method mst_l --pretrained_model_path ./model_zoo/mst/mst_l.pth

# CST_S
python test.py --template cst_s --outf ./exp/cst_s/ --method cst_s --pretrained_model_path ./model_zoo/cst/cst_s.pth

# CST_M
python test.py --template cst_m --outf ./exp/cst_m/ --method cst_m --pretrained_model_path ./model_zoo/cst/cst_m.pth

# CST_L
python test.py --template cst_l --outf ./exp/cst_l/ --method cst_l --pretrained_model_path ./model_zoo/cst/cst_l.pth

# CST_L_Plus
python test.py --template cst_l_plus --outf ./exp/cst_l_plus/ --method cst_l_plus --pretrained_model_path ./model_zoo/cst/cst_l_plus.pth

# GAP_Net
python test.py --template gap_net --outf ./exp/gap_net/ --method gap_net --pretrained_model_path ./model_zoo/gap_net/gap_net.pth

# ADMM_Net
python test.py --template admm_net --outf ./exp/admm_net/ --method admm_net --pretrained_model_path ./model_zoo/admm_net/admm_net.pth

# TSA_Net
python test.py --template tsa_net --outf ./exp/tsa_net/ --method tsa_net --pretrained_model_path ./model_zoo/tsa_net/tsa_net.pth

# HDNet
python test.py --template hdnet --outf ./exp/hdnet/ --method hdnet --pretrained_model_path ./model_zoo/hdnet/hdnet.pth

# DGSMP
python test.py --template dgsmp --outf ./exp/dgsmp/ --method dgsmp --pretrained_model_path ./model_zoo/dgsmp/dgsmp.pth

# BIRNAT
python test.py --template birnat --outf ./exp/birnat/ --method birnat --pretrained_model_path ./model_zoo/birnat/birnat.pth

# MST_Plus_Plus
python test.py --template mst_plus_plus --outf ./exp/mst_plus_plus/ --method mst_plus_plus --pretrained_model_path ./model_zoo/mst_plus_plus/mst_plus_plus.pth

# λ-Net
python test.py --template lambda_net --outf ./exp/lambda_net/ --method lambda_net --pretrained_model_path ./model_zoo/lambda_net/lambda_net.pth

# DAUHST-2stg
python test.py --template dauhst_2stg --outf ./exp/dauhst_2stg/ --method dauhst_2stg --pretrained_model_path ./model_zoo/dauhst_2stg/dauhst_2stg.pth

# DAUHST-3stg
python test.py --template dauhst_3stg --outf ./exp/dauhst_3stg/ --method dauhst_3stg --pretrained_model_path ./model_zoo/dauhst_3stg/dauhst_3stg.pth

# DAUHST-5stg
python test.py --template dauhst_5stg --outf ./exp/dauhst_5stg/ --method dauhst_5stg --pretrained_model_path ./model_zoo/dauhst_5stg/dauhst_5stg.pth

# DAUHST-9stg
python test.py --template dauhst_9stg --outf ./exp/dauhst_9stg/ --method dauhst_9stg --pretrained_model_path ./model_zoo/dauhst_9stg/dauhst_9stg.pth

# BiSRNet
python test.py --template bisrnet --outf ./exp/bisrnet/ --method bisrnet --pretrained_model_path ./model_zoo/bisrnet/bisrnet.pth
```

- The reconstructed HSIs will be output into `MST/simulation/test_code/exp/`. Then place the reconstructed results into `MST/simulation/test_code/Quality_Metrics/results` and run `cal_quality_assessment.m` in MATLAB to calculate the PSNR and SSIM of the reconstructed HSIs.
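If MATLAB is not at hand, a rough Python check of reconstruction quality is still possible. The sketch below computes PSNR band by band and averages over the spectral bands, assuming both cubes are float arrays scaled to [0, 1] and shaped (C, H, W) (transpose band-last arrays first). It is not the repo's evaluation code, so its values may differ from `cal_quality_assessment.m`; use the MATLAB numbers for any reported comparison. SSIM is omitted here.

```python
import math

import numpy as np


def band_mean_psnr(recon, truth, data_range=1.0):
    """Mean PSNR (dB) over spectral bands for two cubes shaped (C, H, W).

    A sanity-check sketch; scaling or rounding conventions of the official
    MATLAB script may differ.
    """
    recon = np.asarray(recon, dtype=np.float64)
    truth = np.asarray(truth, dtype=np.float64)
    if recon.shape != truth.shape or recon.ndim != 3:
        raise ValueError("expected two cubes with the same (C, H, W) shape")
    psnrs = []
    for band in range(truth.shape[0]):
        mse = float(np.mean((recon[band] - truth[band]) ** 2))
        # Zero error means a perfect band, i.e. infinite PSNR
        psnrs.append(math.inf if mse == 0 else 10 * math.log10(data_range ** 2 / mse))
    return sum(psnrs) / len(psnrs)


if __name__ == "__main__":
    truth = np.zeros((28, 64, 64))
    recon = truth + 0.1  # uniform error of 0.1 -> MSE 0.01 -> about 20 dB
    print(band_mean_psnr(recon, truth))
```

Averaging per-band PSNR (rather than computing one PSNR over the whole cube) keeps a single badly reconstructed band from being hidden by the others.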

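- We provide two functions, `my_summary()` and `my_summary_bnn()`, in `simulation/test_code/utils.py`. Use them to evaluate the parameters and FLOPS of full-precision and binarized models, respectively:

```python
from utils import my_summary, my_summary_bnn

# MST and BiSRNet must already be imported from the repo's model definitions.
# The numbers describe a 256 x 256 input with 28 spectral bands and batch size 1.
my_summary(MST(), 256, 256, 28, 1)
my_summary_bnn(BiSRNet(), 256, 256, 28, 1)
```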
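The complexity figures depend on the input size, which is why FLOPS are reported at a fixed 256 × 256. As a hand-worked illustration of how such counts scale, the sketch below computes the parameters and multiply-accumulates (MACs) of a single 3 × 3 convolution on a 28-band 256 × 256 input. It assumes stride 1, "same" padding, and a bias term; `conv2d_cost` is a hypothetical helper, and `my_summary()` counts whole models with its own conventions (for example, whether a multiply-accumulate counts as one operation or two), which may differ.

```python
def conv2d_cost(h, w, c_in, c_out, k, bias=True):
    """Parameters and multiply-accumulates (MACs) of one k x k Conv2d layer.

    Assumes stride 1 and 'same' padding, so the output is also h x w.
    """
    params = c_out * (c_in * k * k + (1 if bias else 0))
    macs = h * w * c_out * c_in * k * k  # one MAC per weight per output pixel
    return params, macs


params, macs = conv2d_cost(256, 256, 28, 28, 3)
print(params, macs)  # 7084 462422016
```

The parameter count is independent of the input size, while the MAC count grows linearly with the number of pixels, so halving both spatial sides cuts it to a quarter.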

- Put the reconstructed HSI in `MST/visualization/simulation_results/results` and rename it as `method.mat`, e.g., `mst_s.mat`.

- Generate the RGB images of the reconstructed HSIs: `cd MST/visualization/`, then run `show_simulation.m` in MATLAB.

- Draw the spectral density lines: `cd MST/visualization/`, then run `show_line.m` in MATLAB.
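The internals of `show_line.m` are not described here. For readers working in Python, the sketch below shows one common way to build a spectral density curve: average the spectrum over a square region, normalize it to a peak of 1, and compare it with the ground-truth curve via the Pearson correlation coefficient. The helper names, region position, region size, and normalization are assumptions, and the cubes are assumed to be shaped (C, H, W).

```python
import numpy as np


def spectral_curve(cube, top, left, size):
    """Average spectrum of a size x size region in a (C, H, W) cube, scaled to a peak of 1."""
    patch = np.asarray(cube, dtype=np.float64)[:, top:top + size, left:left + size]
    curve = patch.mean(axis=(1, 2))  # one value per spectral band
    peak = curve.max()
    return curve / peak if peak > 0 else curve


def curve_correlation(curve_a, curve_b):
    """Pearson correlation between two spectral curves of equal length."""
    return float(np.corrcoef(curve_a, curve_b)[0, 1])


if __name__ == "__main__":
    # Synthetic ground truth whose band k has intensity (k + 1) / 28
    truth = np.arange(1, 29).reshape(28, 1, 1) * np.ones((28, 32, 32)) / 28
    recon = 0.9 * truth  # a uniformly darker reconstruction
    gt_curve = spectral_curve(truth, 8, 8, 16)
    rec_curve = spectral_curve(recon, 8, 8, 16)
    print(curve_correlation(gt_curve, rec_curve))  # close to 1.0
```

Because both curves are normalized to their peaks, the correlation measures how well the spectral shape is recovered rather than the absolute brightness.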

For the real experiments, train the models as follows:

```shell
cd MST/real/train_code/

# MST_S
python train.py --template mst_s --outf ./exp/mst_s/ --method mst_s

# MST_M
python train.py --template mst_m --outf ./exp/mst_m/ --method mst_m

# MST_L
python train.py --template mst_l --outf ./exp/mst_l/ --method mst_l

# CST_S
python train.py --template cst_s --outf ./exp/cst_s/ --method cst_s

# CST_M
python train.py --template cst_m --outf ./exp/cst_m/ --method cst_m

# CST_L
python train.py --template cst_l --outf ./exp/cst_l/ --method cst_l

# CST_L_Plus
python train.py --template cst_l_plus --outf ./exp/cst_l_plus/ --method cst_l_plus

# GAP-Net
python train.py --template gap_net --outf ./exp/gap_net/ --method gap_net

# ADMM-Net
python train.py --template admm_net --outf ./exp/admm_net/ --method admm_net

# TSA-Net
python train.py --template tsa_net --outf ./exp/tsa_net/ --method tsa_net

# HDNet
python train.py --template hdnet --outf ./exp/hdnet/ --method hdnet

# DGSMP
python train.py --template dgsmp --outf ./exp/dgsmp/ --method dgsmp

# BIRNAT
python train.py --template birnat --outf ./exp/birnat/ --method birnat

# MST_Plus_Plus
python train.py --template mst_plus_plus --outf ./exp/mst_plus_plus/ --method mst_plus_plus

# λ-Net
python train.py --template lambda_net --outf ./exp/lambda_net/ --method lambda_net

# DAUHST-2stg
python train.py --template dauhst_2stg --outf ./exp/dauhst_2stg/ --method dauhst_2stg

# DAUHST-3stg
python train.py --template dauhst_3stg --outf ./exp/dauhst_3stg/ --method dauhst_3stg

# DAUHST-5stg
python train.py --template dauhst_5stg --outf ./exp/dauhst_5stg/ --method dauhst_5stg

# DAUHST-9stg
python train.py --template dauhst_9stg --outf ./exp/dauhst_9stg/ --method dauhst_9stg

# BiSRNet
python train_s.py --outf ./exp/bisrnet/ --method bisrnet
```

- If you do not have a GPU with large memory, add `--size 128` to use a smaller patch size.

- The training log, trained model, and reconstructed HSI will be available in `MST/real/train_code/exp/`.

- Note: if you cannot access the mask data or have limited GPU resources, you can also use `train_s.py` for methods other than BiSRNet. In this case, you need to replace the `--method` parameter in the above commands and make some modifications.
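A smaller `--size` helps because activation memory in convolutional and spectral-wise layers grows roughly with the number of pixels per patch, so halving the patch side cuts it to about a quarter. The sketch below shows random spatial cropping of an HSI cube shaped (C, H, W). How the repo's training scripts actually sample patches is not shown here, so `random_crop` is a hypothetical helper, not the repo's data loader.

```python
import numpy as np


def random_crop(cube, size, rng=None):
    """Crop a random size x size spatial patch from a (C, H, W) cube (all bands kept)."""
    rng = np.random.default_rng() if rng is None else rng
    _, h, w = cube.shape
    if size > h or size > w:
        raise ValueError(f"patch size {size} exceeds image size {h}x{w}")
    # Upper bounds are exclusive, so h - size + 1 allows the crop to touch the border
    top = int(rng.integers(0, h - size + 1))
    left = int(rng.integers(0, w - size + 1))
    return cube[:, top:top + size, left:left + size]


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    cube = np.random.default_rng(1).random((28, 256, 256))
    print(random_crop(cube, 128, rng).shape)  # (28, 128, 128)
```

Passing an explicit `rng` makes the crops reproducible across runs.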

The pretrained model of BiSRNet can be downloaded from (Google Drive / Baidu Disk, code: mst1); place it in `MST/real/test_code/model_zoo/`.

```shell
cd MST/real/test_code/

# MST_S
python test.py --outf ./exp/mst_s/ --pretrained_model_path ./model_zoo/mst/mst_s.pth

# MST_M
python test.py --outf ./exp/mst_m/ --pretrained_model_path ./model_zoo/mst/mst_m.pth

# MST_L
python test.py --outf ./exp/mst_l/ --pretrained_model_path ./model_zoo/mst/mst_l.pth

# CST_S
python test.py --outf ./exp/cst_s/ --pretrained_model_path ./model_zoo/cst/cst_s.pth

# CST_M
python test.py --outf ./exp/cst_m/ --pretrained_model_path ./model_zoo/cst/cst_m.pth

# CST_L
python test.py --outf ./exp/cst_l/ --pretrained_model_path ./model_zoo/cst/cst_l.pth

# CST_L_Plus
python test.py --outf ./exp/cst_l_plus/ --pretrained_model_path ./model_zoo/cst/cst_l_plus.pth

# GAP_Net
python test.py --outf ./exp/gap_net/ --pretrained_model_path ./model_zoo/gap_net/gap_net.pth

# ADMM_Net
python test.py --outf ./exp/admm_net/ --pretrained_model_path ./model_zoo/admm_net/admm_net.pth

# TSA_Net
python test.py --outf ./exp/tsa_net/ --pretrained_model_path ./model_zoo/tsa_net/tsa_net.pth

# HDNet
python test.py --template hdnet --outf ./exp/hdnet/ --method hdnet --pretrained_model_path ./model_zoo/hdnet/hdnet.pth

# DGSMP
python test.py --outf ./exp/dgsmp/ --pretrained_model_path ./model_zoo/dgsmp/dgsmp.pth

# BIRNAT
python test.py --outf ./exp/birnat/ --pretrained_model_path ./model_zoo/birnat/birnat.pth

# MST_Plus_Plus
python test.py --outf ./exp/mst_plus_plus/ --pretrained_model_path ./model_zoo/mst_plus_plus/mst_plus_plus.pth

# λ-Net
python test.py --outf ./exp/lambda_net/ --pretrained_model_path ./model_zoo/lambda_net/lambda_net.pth

# DAUHST_2stg
python test.py --outf ./exp/dauhst_2stg/ --pretrained_model_path ./model_zoo/dauhst/dauhst_2stg.pth

# DAUHST_3stg
python test.py --outf ./exp/dauhst_3stg/ --pretrained_model_path ./model_zoo/dauhst/dauhst_3stg.pth

# DAUHST_5stg
python test.py --outf ./exp/dauhst_5stg/ --pretrained_model_path ./model_zoo/dauhst/dauhst_5stg.pth

# DAUHST_9stg
python test.py --outf ./exp/dauhst_9stg/ --pretrained_model_path ./model_zoo/dauhst/dauhst_9stg.pth

# BiSRNet
python test.py --outf ./exp/bisrnet --pretrained_model_path ./model_zoo/bisrnet/bisrnet.pth --method bisrnet
```

- The reconstructed HSI will be output into `MST/real/test_code/exp/`.

- Put the reconstructed HSI in `MST/visualization/real_results/results` and rename it as `method.mat`, e.g., `mst_plus_plus.mat`.

- Generate the RGB images of the reconstructed HSI: `cd MST/visualization/`, then run `show_real.m` in MATLAB.

If this repo helps you, please consider citing our works:

```bibtex
# MST
@inproceedings{mst,
  title={Mask-guided Spectral-wise Transformer for Efficient Hyperspectral Image Reconstruction},
  author={Yuanhao Cai and Jing Lin and Xiaowan Hu and Haoqian Wang and Xin Yuan and Yulun Zhang and Radu Timofte and Luc Van Gool},
  booktitle={CVPR},
  year={2022}
}

# CST
@inproceedings{cst,
  title={Coarse-to-Fine Sparse Transformer for Hyperspectral Image Reconstruction},
  author={Yuanhao Cai and Jing Lin and Xiaowan Hu and Haoqian Wang and Xin Yuan and Yulun Zhang and Radu Timofte and Luc Van Gool},
  booktitle={ECCV},
  year={2022}
}

# DAUHST
@inproceedings{dauhst,
  title={Degradation-Aware Unfolding Half-Shuffle Transformer for Spectral Compressive Imaging},
  author={Yuanhao Cai and Jing Lin and Haoqian Wang and Xin Yuan and Henghui Ding and Yulun Zhang and Radu Timofte and Luc Van Gool},
  booktitle={NeurIPS},
  year={2022}
}

# BiSCI
@inproceedings{bisci,
  title={Binarized Spectral Compressive Imaging},
  author={Yuanhao Cai and Yuxin Zheng and Jing Lin and Xin Yuan and Yulun Zhang and Haoqian Wang},
  booktitle={NeurIPS},
  year={2023}
}

# MST++
@inproceedings{mst_pp,
  title={MST++: Multi-stage Spectral-wise Transformer for Efficient Spectral Reconstruction},
  author={Yuanhao Cai and Jing Lin and Zudi Lin and Haoqian Wang and Yulun Zhang and Hanspeter Pfister and Radu Timofte and Luc Van Gool},
  booktitle={CVPRW},
  year={2022}
}

# HDNet
@inproceedings{hdnet,
  title={HDNet: High-resolution Dual-domain Learning for Spectral Compressive Imaging},
  author={Xiaowan Hu and Yuanhao Cai and Jing Lin and Haoqian Wang and Xin Yuan and Yulun Zhang and Radu Timofte and Luc Van Gool},
  booktitle={CVPR},
  year={2022}
}
```